Content strategists, brace yourselves! I’m about to make content auditing a whole lot easier for you. But before I do, please allow me to be brutally honest. Content audits suck! (Although they don’t have to.) No digital marketer in the world has ever woken up in the morning and said, “Ahh, I can’t wait to manually audit tens of thousands of pages today!”

I’ve recently spent a lot of time performing content audits for medium and large business websites across a multitude of vertical and horizontal markets. Over the past 2 months alone, I’ve spent approximately 270 hours working on content audits. I’ve been tracking my time, and it turns out that, on average, it takes me 90 hours to complete a content audit from start to finish.

Now to put my role in context, I’m a Digital Marketing Analyst. I’ve been fortunate enough to gain bountiful experience in the various facets of the digital marketing world. Being primarily a hybrid of SEO & Content Strategy, I’m able to bring both of these wonderful perspectives together to draw out very actionable, data-driven insights for our clients.

Why Bother With Content Audits?

Before you put yourself through the gut-wrenching pain and misery of auditing thousands of webpages, you should always ask, “Why are we doing a content audit in the first place?” Well, here’s why:

- Determine the best (and worst) performing pages on your site.

- Determine if your site has been hit by Panda. If so, why?

- Identify content that is (and isn’t) attracting shares & links.

- Identify content gaps in your customer’s decision journey.

- Identify & eliminate content that doesn’t align to the specific needs of your customers.

- From a topical perspective, identify what content you should be producing more (and less) of.

- From a UX/UI perspective, determine how to improve readability, format, and visual appeal.

- Eliminate, prune, and/or consolidate cruft!

By the way, “cruft” is my favorite new marketing lingo. Although it’s probably not that new, I was first introduced to cruft by Rand Fishkin in a very smart and actionable edition of Whiteboard Friday, in which he breaks down why cruft is so detrimental and how to get rid of it.

So yeah, marketers hate cruft. Cruft is evil.

What’s the common theme here? Besides the fact that manually auditing thousands of pages is a gut-wrenching task, and cruft is evil… Content audits directly inform your content strategy! That’s right. You need to understand your past in order to better predict the future, and content audits are the way to do it.

Before even doing a content audit, there are a few things you should know. Primarily, you should know the answers to these 2 very important questions:

- Who are my target customers?

- What are their specific needs as they go through their purchase decision-making process?

If you don’t know the answers to those 2 questions, you can still do a content audit, but first I’d recommend reading Mike King’s post on how to understand and build personas.

Alright, so let’s get into it. What are the 9 biggest problems with content audits, and how do you solve them?

Problem #1 – Auditing Huge Websites

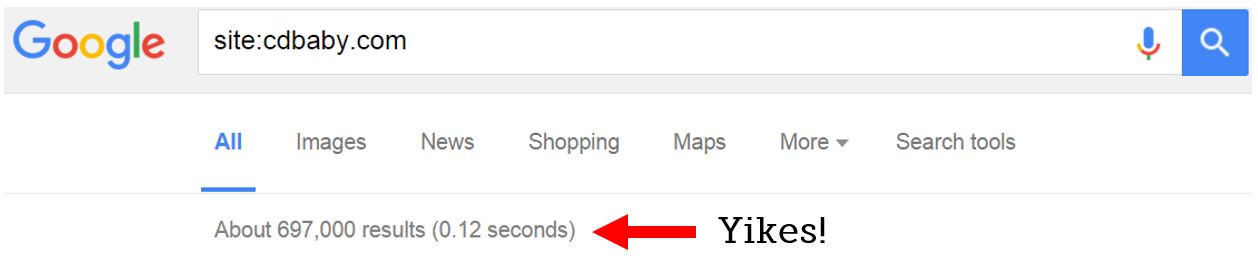

Hypothetical scenario: let’s say your client is CD Baby. The site: search operator is no longer the most accurate way to determine how large a site is, but it can still give you a ballpark estimate. At this point, it looks like the site is almost 700k pages deep. Yikes! This is the type of stuff that drives digital marketers to insanity. Data collection of this magnitude is likely going to be a nightmare.

Luckily, this whole nightmare can be avoided with some good old-fashioned communication. Did you ever stop and think to ask the client about their goals? (I hope you did, or someone on your team did.) Because after looking over the CD Baby site, it’s evident that the vast majority of pages are product-driven.

Content audits for E-Commerce sites can get ugly real quick, but maybe there’s an educational or blog section of the site your client cares more about. Do you really think your client wants you spending countless hours auditing hundreds of thousands of repetitive product pages? Do you really want to beat yourself up that bad? Come on now, let’s get smart about this!

Solution – Determine What’s Most Important Before Data Collection

Turns out that simply asking the client what they care about the most just saved you from doing a ton of unproductive work! You mistakenly assumed the need to audit their entire site, but in reality – all of their valuable content lives on a blog sub-domain. In this case, it’s still a good idea to audit some of the product pages too. Just remember, if the goal is primarily to inform content strategy, then you should primarily be auditing content.

So, let’s compare. Would you rather audit 700,000 pages or 5,000? Yeah, I think I’ll go with the latter.

Problem #2 – Data Collection: Crawling Huge Websites

It’s not always as simple as the client saying “Yeah, just go with the blog sub-domain.” You can’t hope that you’ll never have to deal with huge data sets, because you will! Crawling huge websites can become problematic for so many reasons: time, cost, a high probability of exceeding memory limits, deadlines getting pushed back, etc.

In situations where you have to crawl a massive site, you can’t just pop the domain into Screaming Frog and let her rip. From my experience with Screaming Frog, it takes around 1 hour to crawl approx. 15,000 unique URLs. That adds up to a long time when crawling hundreds of thousands of pages: at that rate, a 700,000-page site would take roughly 47 hours. You’re also not always going to be fortunate enough to know the exact directories or sub-domains to crawl. You’ll have to figure all that out on your own. Here’s how to do it.

Solution – Focused Crawls: Identify & Crawl Segmented XML Sitemaps

First, identify segmented XML Sitemaps via Robots.txt

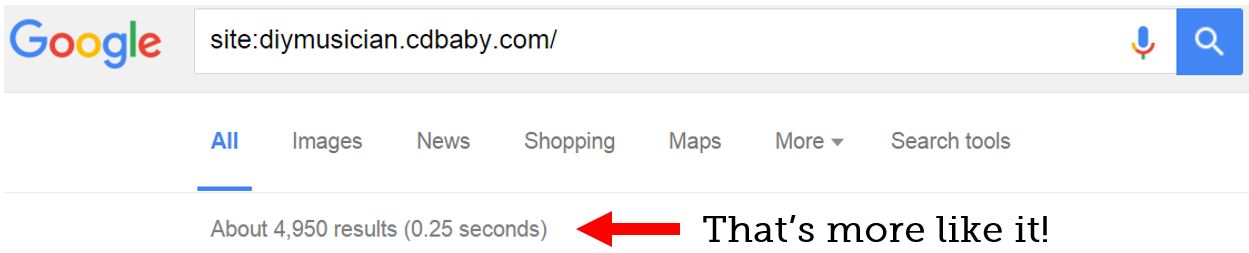

For this example, I’ve decided to go with Apple. Now, being the behemoth that they are, we’d expect them to have a site that’s well maintained. And sure enough, they do. Notice how they’ve neatly segmented their sitemaps. Looks like they went with a /shop directory XML sitemap, and a separate XML sitemap for everything else. From a content auditing perspective, I don’t want to audit a bunch of product pages, so I’ll go for the main sitemap as opposed to the /shop directory.

Then paste https://www.apple.com/sitemap.xml into Chrome and press CTRL + U for “View Source”.

Copy all the URLs from the XML Sitemap.

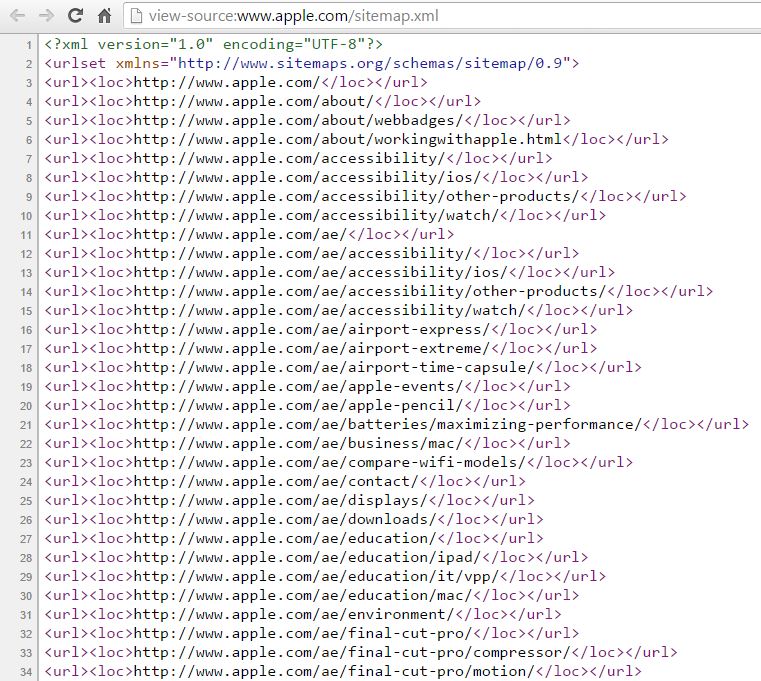

Then, dump them into Screaming Frog for a list crawl.

Bada bing, bada boom! Easily execute a focused crawl by leveraging XML sitemaps.

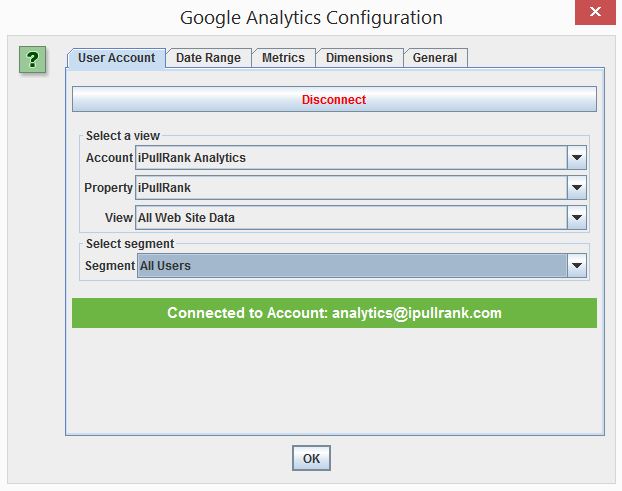
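If you’d rather not copy and paste by hand, the same steps can be scripted. Here’s a minimal sketch in Python, assuming you’ve already saved the robots.txt and sitemap contents as text. The function names and the example.com URLs are mine, purely for illustration; the only thing taken from the sitemap standard is the `loc` element and its namespace.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return the URLs declared on 'Sitemap:' lines of a robots.txt file."""
    found = []
    for line in robots_txt.splitlines():
        # Split on the first colon only, so the URL's own colons survive.
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap":
            found.append(value.strip())
    return found


def urls_from_sitemap(sitemap_xml: str) -> list[str]:
    """Return every <loc> URL found in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text]


# Hypothetical inputs, standing in for files you downloaded yourself.
robots_txt = (
    "User-agent: *\n"
    "Disallow: /private\n"
    "Sitemap: https://www.example.com/sitemap.xml\n"
    "Sitemap: https://www.example.com/shop/sitemap.xml\n"
)
sitemap_xml = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://www.example.com/</loc></url>"
    "<url><loc>https://www.example.com/blog/</loc></url>"
    "</urlset>"
)

print(sitemaps_from_robots(robots_txt))
# One URL per line is easy to paste or upload into a list crawl.
print("\n".join(urls_from_sitemap(sitemap_xml)))
```

Note that very large sites often publish a sitemap index that points to other sitemaps. In that case the `loc` values are sitemap URLs rather than page URLs, so you’d repeat the extraction on each one.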

Pro Tip: Use the Analytics API to collect data from Google Analytics into your crawl.

Here’s the path: Configuration -> API Access -> Google Analytics

Problem #3 – Crawl Issues: Limited Memory

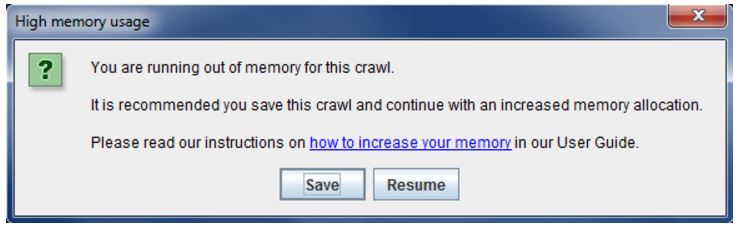

I’ve been a huge fan of two crawlers that are very well known in the digital marketing world – Screaming Frog & URL Profiler. I think most marketers would agree that these are fantastic tools that are able to execute a number of tasks when it comes to data collection.

However, no tool is indestructible and at some point the inevitable will happen. When dealing with giant data sets (100k URLs and up) the crawlers are likely to run out of memory, freeze, choke, and/or crash.

Any SEO will tell you – this is by far one of the most frustrating things you can encounter during a crawl!

Luckily, there are ways around it.

Solution – Leverage Cloud Based Services Instead of Local Memory

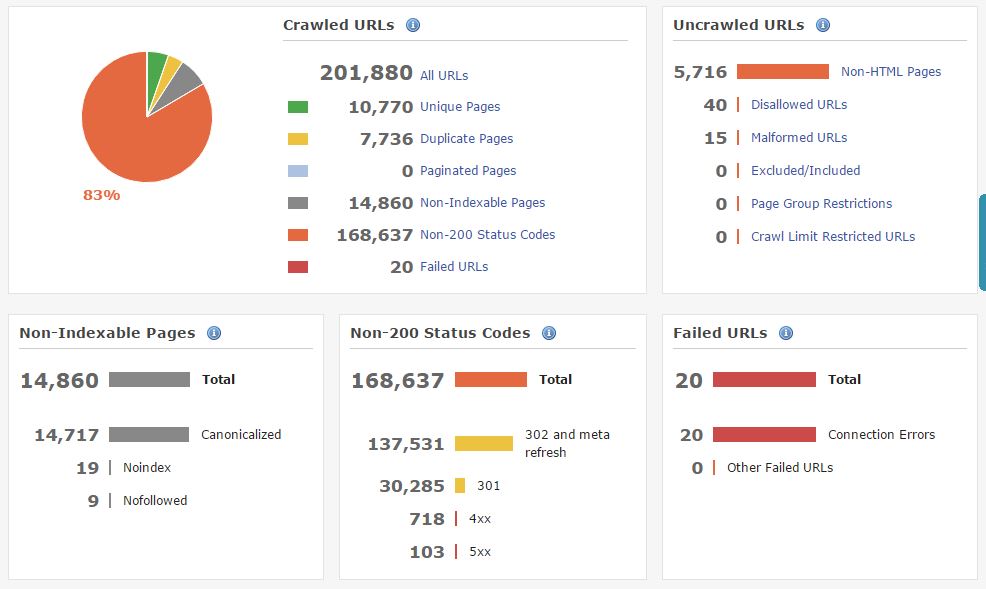

Option #1: DeepCrawl

DeepCrawl is one of my favorite SEO spiders. Marketers love this tool for its user-friendliness and clean interface. This is truly one of those “set it and forget it” crawlers: because it runs in the cloud, it does the heavy lifting for you without running out of memory. You can see that in this example, DeepCrawl made it to 201,880 URLs with no problem.

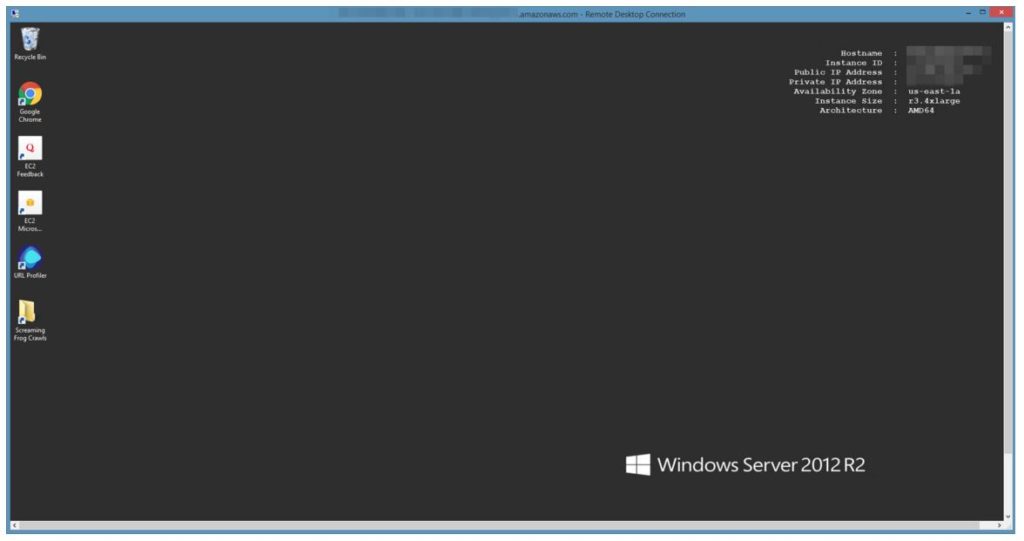

Option #2: Run Screaming Frog & URL Profiler on Amazon Web Services

We literally had to write an entire post just on this topic alone. Read Mike’s post if you need step by step instructions on how to set up Screaming Frog & URL Profiler on AWS. Long story short, you can crawl gigantic data sets with Screaming Frog & URL Profiler on AWS because of the freedom & flexibility of the cloud. It’s all about the cloud!

Problem #4 – Quantitative Analysis: Collecting The Right Data Points

Here’s where things start to get fun. You’ve finally got your list of URLs ready to go. You know exactly what you’re going to be auditing. Time to get dirty.

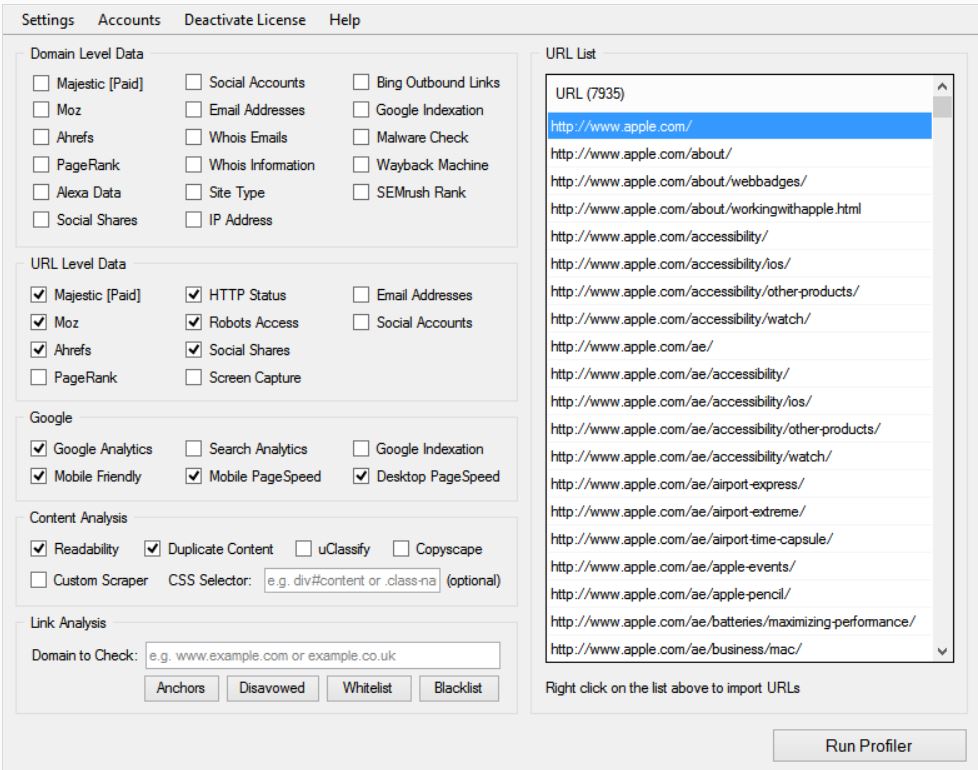

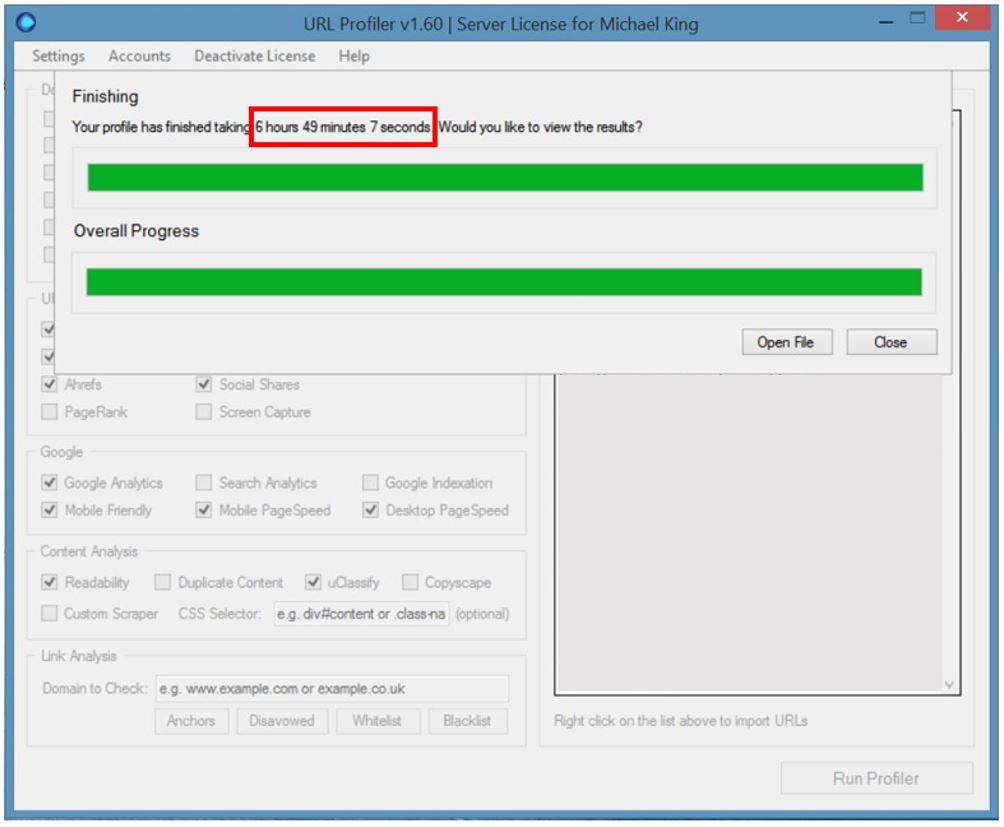

Solution – URL Profiler: The Secret Weapon

Turns out you’re not getting that dirty after all. URL Profiler does the heavy lifting for you. These are the data points I collect when running a URL Profiler crawl for content audits. (Still using the Apple example).

A word to the wise – Allow ample time for this crawl to complete!

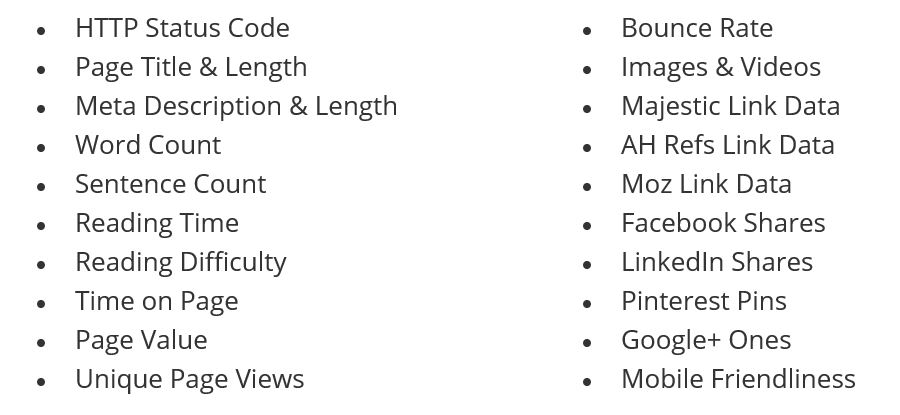

Consider Leveraging These Quantitative Metrics:

Problem #5 – Qualitative Analysis: Too Many Pages to Manually Review

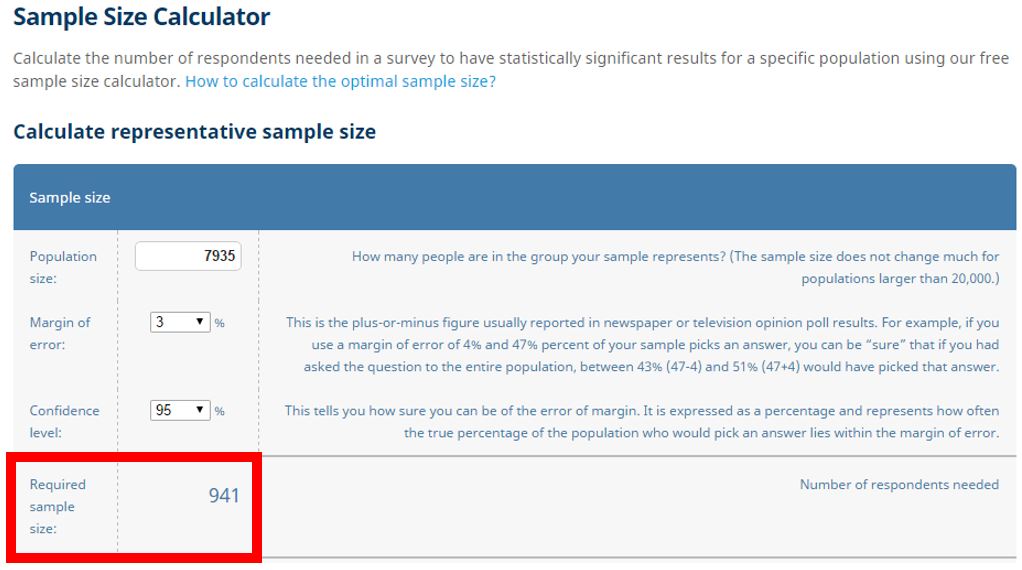

If we continue using the Apple.com example, there would be 7,935 pages in our quantitative data set at this point. Ask yourself, do you really want to manually audit 7,935 pages? Is that even realistic? Probably not.

Solution – Qualitative Sampling

Say hello to your new best friend. Sample size calculator to the rescue.

What we’re able to do in this case is take a statistically representative sample, which gives us a manageable number of pages to review qualitatively.

7,935 total pages.

3% Margin of error.

95% Confidence.

Required Sample Size: 941 pages.

I think I’ll go with 941 pages instead!

Thank you very much sample size calculator. I owe you a drink later.
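If you’re curious where 941 comes from, the number can be reproduced with the standard sample size formula for a proportion plus a finite population correction. I’m assuming that’s what the calculator does under the hood, and that it uses the most conservative proportion of 0.5. The function names below are my own. Treat this as a rough sketch, not the calculator’s actual code.

```python
import math
import random
from statistics import NormalDist


def required_sample_size(population, margin_of_error, confidence=0.95, proportion=0.5):
    """Sample size for a proportion, corrected for a finite population."""
    # z-score for a two-sided confidence level (about 1.96 at 95%).
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an infinitely large population.
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    # Shrink it to account for the limited number of pages.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


def draw_sample(urls, size, seed=0):
    """Pick `size` URLs at random; a fixed seed keeps the sample repeatable."""
    return random.Random(seed).sample(urls, size)


n = required_sample_size(7935, 0.03)
print(n)  # 941

# Placeholder URL list standing in for the real crawl export.
pages = [f"/page-{i}" for i in range(7935)]
sample = draw_sample(pages, n)
print(len(sample))
```

Drawing the sample at random matters as much as the size. If you hand-pick the 941 pages, the margin of error no longer means anything.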

Problem #6 – Qualitative Analysis: Determining What To Evaluate

Now that the qualitative sample has been narrowed down to 941 pages, it’s time to figure out how to evaluate this data in the most effective way possible. If you fail at this stage, your insights are going to be all out of whack. This is one of the most critical parts of the content audit.

Solution – Standardize Your Qualitative Metrics

This isn’t the end all, be all of qualitative metrics. However, these are some of my favorites. I should mention that we’re still playing around with some new ideas. We haven’t got it down to a science quite yet, but we’re getting close. I’d love to hear what some of your qualitative metrics are.

Alternative Solution – Download Our Content Audit Template

Want to significantly improve your content strategy, with ease? Try our cheat sheet!

Problem #7 – Qualitative Analysis: Not Properly Defining Your Metrics

Once you’ve decided on your metrics, how do you define them? This can get messy if you don’t properly define your metrics. Clients will want to know exactly why you rated a piece of content the way you did. You’ve got to be ready to explain your methodology in a clear and concise manner.

Before you go off on a tangent, I’d highly recommend you check out Google’s Search Quality Guidelines. In a rare and unprecedented move, they revealed (with specific examples) what they consider to be very high quality pages, and very low quality pages.

Solution – Clearly Define Your Qualitative Metrics With Supporting Data

Here’s how I define some of my qualitative metrics:

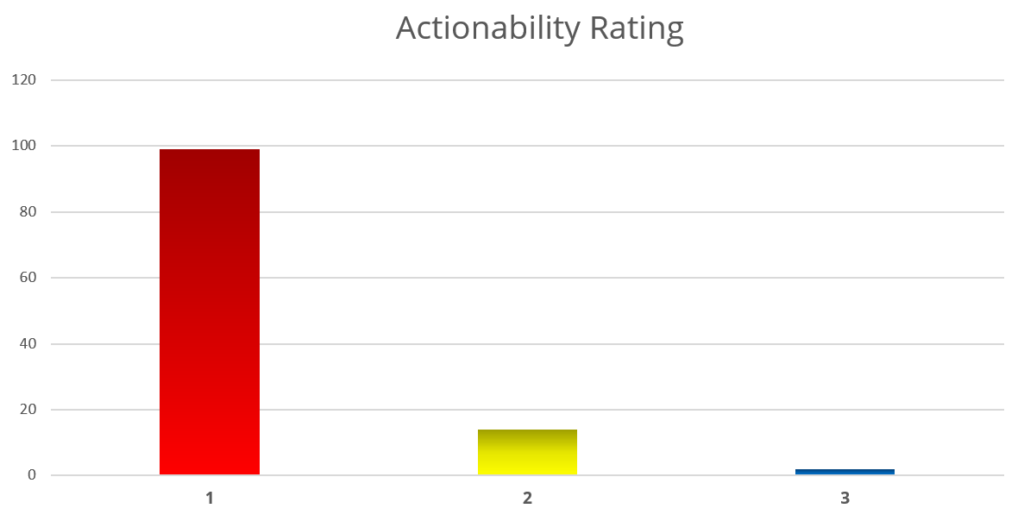

Actionability is how likely a visitor is to complete a business goal, share the page on social media, or subscribe to a mailing list on any given page.

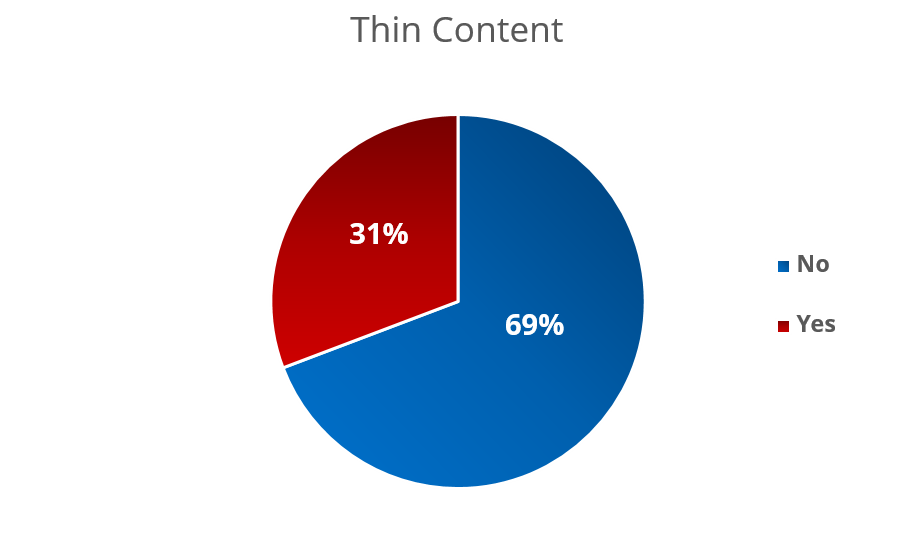

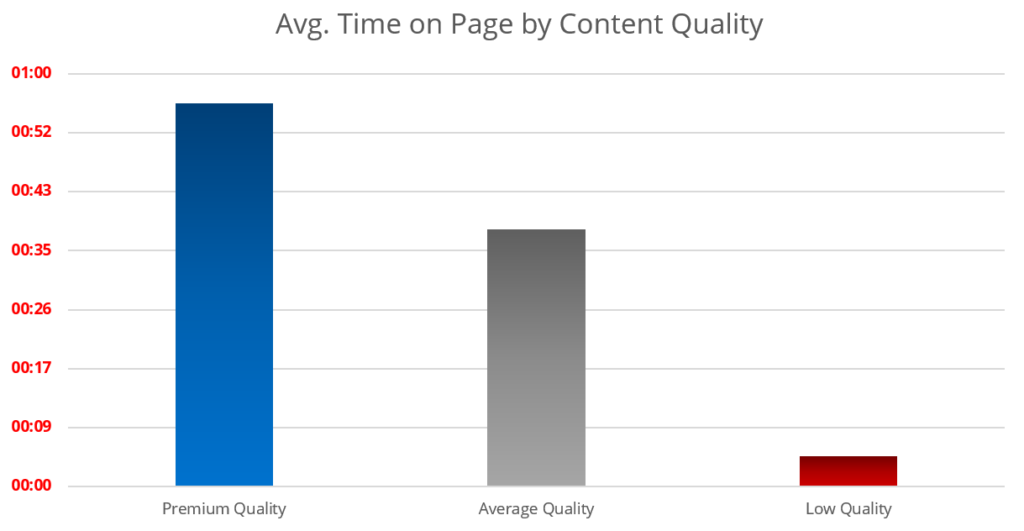

I define thin content to be any page with a word count of 275 words or fewer that yields a time on page of 30 seconds or less.
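Written as a rule you can apply to every row of your audit spreadsheet, that definition looks something like this. The function and constant names are hypothetical, and the thresholds are just the two numbers above, so adjust them to suit your own site.

```python
# Thresholds from the definition above; tune them for your own audits.
THIN_MAX_WORDS = 275
THIN_MAX_SECONDS = 30


def is_thin(word_count: int, avg_time_on_page_seconds: float) -> bool:
    """A page is thin only when BOTH the word count and time on page are low."""
    return (
        word_count <= THIN_MAX_WORDS
        and avg_time_on_page_seconds <= THIN_MAX_SECONDS
    )


print(is_thin(180, 12))   # short and quickly abandoned
print(is_thin(180, 95))   # short, but visitors stick around
```

Requiring both conditions keeps short but useful pages, like a contact page people actually read, from being flagged.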

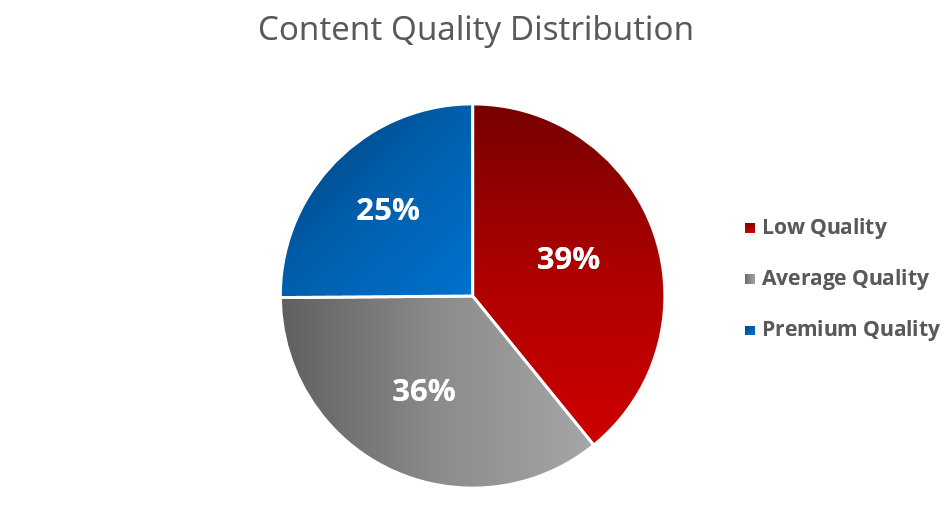

I use a combination of inbound links, shares, readability, unique views, avg. time on page, page authority, & page value to determine content quality.

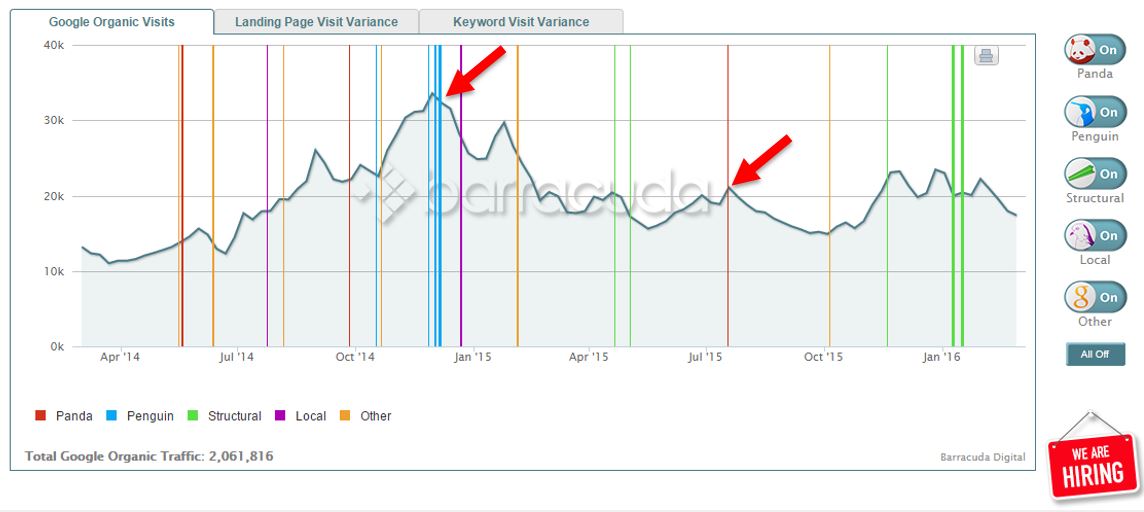

I also cross-check Google Analytics data against Barracuda’s PANGUIN tool to identify if organic traffic has been impacted by an algorithm update.

Problem #8 – Drawing Powerful Insights

You’re always going to have your “go-to’s” aka the easy to find insights. Things that are largely quantitative, such as pages that are getting the most views. Or, pages that are getting the most links. Don’t get me wrong. Those are cute. I love those. But there are ways to get deeper, more actionable insights.

Solution – Cross Tabulate Your Qualitative & Quantitative Data

You truly get the best insights when you combine your qualitative & quantitative analyses. From here, it’s pretty much a matter of pivoting until your data tells a compelling story.

Here are some of my favorite examples:

On average, pages with at least 1 video were over 3x more valuable than pages without videos.

On average, visitors were far more engaged with premium quality content.

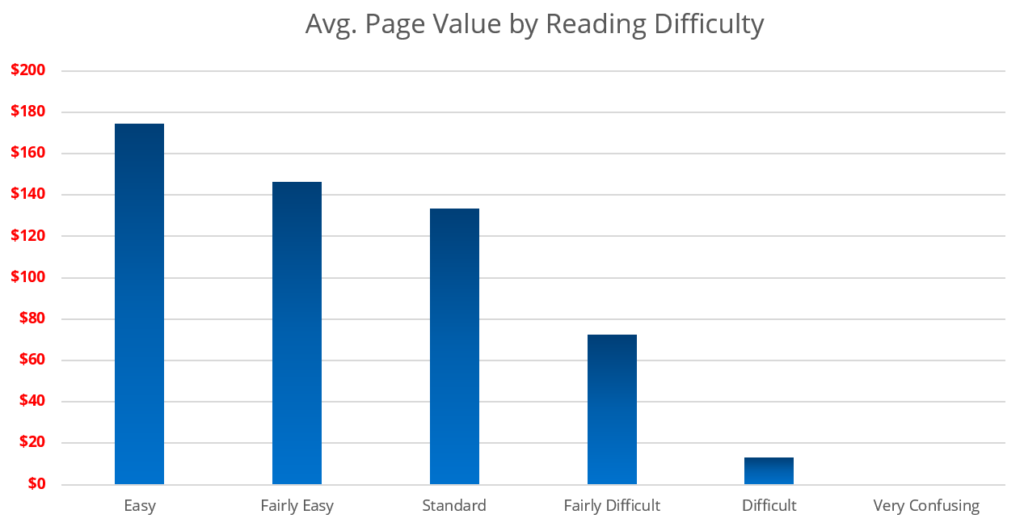

There was a direct correlation between reading ease and page value.

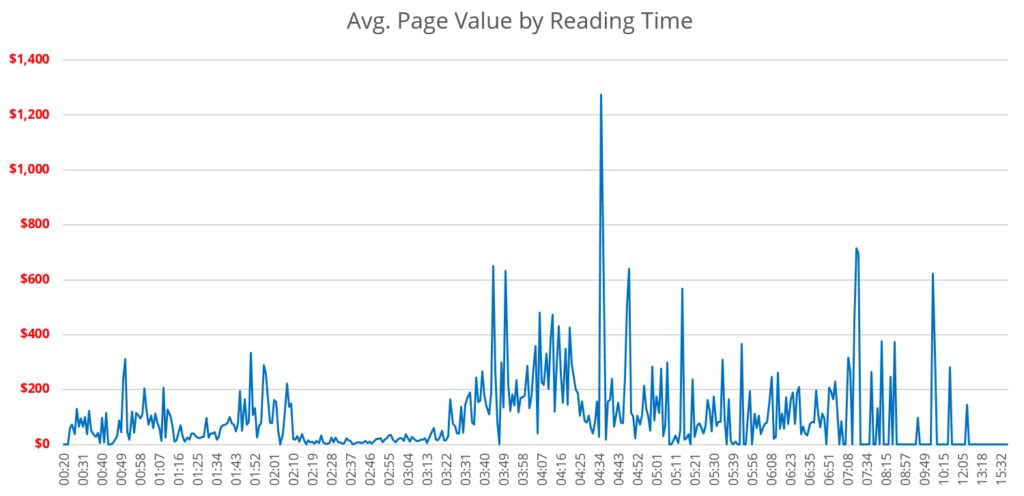

In terms of reading time, approx. 3:30 – 5:30 is the sweet spot for driving revenue.
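If you’d rather pivot in code than in a spreadsheet, the idea boils down to grouping pages by a qualitative label and averaging a quantitative metric within each group. Here’s a minimal sketch. The column names (`has_video`, `page_value`) and the four rows are made-up placeholders, not figures from any real audit.

```python
from collections import defaultdict
from statistics import mean


def cross_tab(rows, group_by, metric):
    """Average a quantitative metric for each value of a qualitative label."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_by]].append(row[metric])
    return {label: mean(values) for label, values in groups.items()}


# Placeholder rows: one dict per audited page, merging both analyses.
rows = [
    {"url": "/a", "has_video": True, "page_value": 6.0},
    {"url": "/b", "has_video": True, "page_value": 4.0},
    {"url": "/c", "has_video": False, "page_value": 2.0},
    {"url": "/d", "has_video": False, "page_value": 1.0},
]

by_video = cross_tab(rows, "has_video", "page_value")
print(by_video)
```

Swap `has_video` for content type, quality rating, or reading ease bucket, and `page_value` for time on page or shares, and you get a different cut of the same data each time.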

Problem #9 – Spare Your Client: Avoid Data Overload

If you’ve read this far, it’s possible you might be overwhelmed. There’s a lot of sh*t in here, I know. Now, just imagine how a client might feel after all this. Not only have you completely picked apart the entire site, but you’ve also shown a ton of things that are wrong. The first thing a client is going to think after all this data is “Whoa, where do I begin?”

Solution – Clearly Lay Out “Next Steps” For Your Client

Here’s an example of some next steps I might suggest after a brutal content audit:

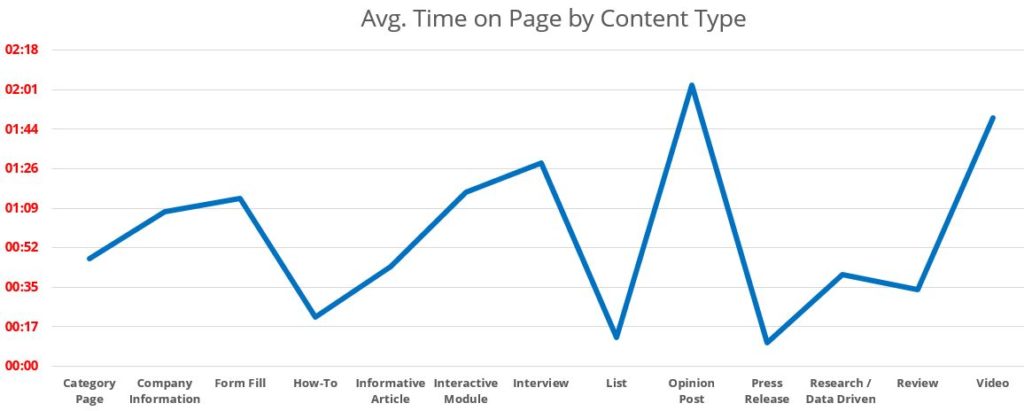

Let’s analyze a specific example:

The data shows that opinion posts & videos performed the best. Meanwhile – list content, how-to’s, & press releases performed the worst.

Based on that information, there are a few questions to ask:

- Why did opinions & video content perform so well?

- Why did lists, how-to’s & press releases perform so badly?

- Where are the most immediate opportunities to improve?

You can then refer to your qualitative analysis spreadsheet and review those specific content types for clues. Maybe some of that how-to content is dynamite, but it wasn’t promoted properly. Maybe there were canonical duplication issues pointing traffic somewhere else. It could just be that the content quality sucked. This is the beauty of the content audit. It gets you thinking in the right direction!

My Final Call To Action

I’m dying to know what you think of all this! It’s super in-depth and analytical, I know.

Please, I urge you. Hit me with your most honest, raw feedback. Be as harsh, or as friendly as you want.

I’m also curious to know how you approach your content audits. We’re here to make each other better.

I look forward to reading your comments and reactions. Let’s have some geeky content strategy dialogue. Thanks for reading!