As I tweeted about a month ago, my top draft picks for relatively unknown SEOs who are about to make tsunamis in 2012 are Dan Shure (@dan_shure) and Jon Cooper (@pointblankseo). Neither of them is taking that declaration lightly, and both have come out swinging. Last week Jon Cooper invented a new metric for guest post prospecting called Scrape Rate, and I’m going to build upon that a little bit here.

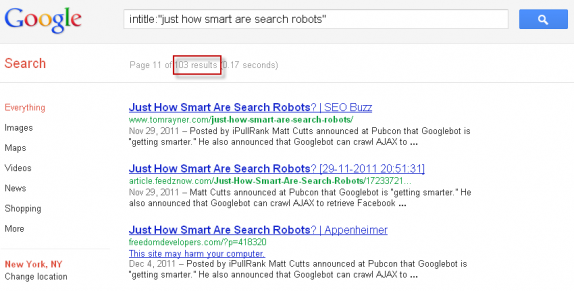

The basic premise is that posts on many popular blogs are scraped and republished and/or syndicated on other websites, so a guest post containing one link back to a given site gets a multiplier effect once the post goes live and is scraped. This is something that I’ve noticed in the past when writing for SEOmoz. As seen below, there are 103 pages that have scraped and republished the “Just How Smart are Search Robots” post that I wrote at the end of November 2011.

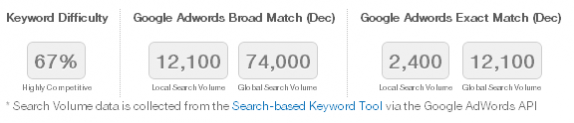

And for that reason alone I am on the first page (between 7 and 9 depending) for the keyword “Googlebot,” a highly competitive keyword according to Moz’s Keyword Difficulty Report.

It’s pretty safe to say that this is very powerful stuff that should be weighed when considering where to guest post.

Shareability Rate (or Share Rate)

Immediately after seeing Jon’s post I thought, “This is brilliant, but why aren’t we also computing the propensity of posts on a given site to be shared?” For example, as soon as a post goes live on the main SEOmoz blog it picks up roughly 300 tweets almost immediately, no matter what, thanks to their devoted following and RSS scrapers.

Andrew’s post, for instance, went up sometime early this afternoon and already has 537 tweets.

Computing Share Rate

I skipped the explanation of how to compute Scrape Rate with good reason: Share Rate plays into what I believe to be the most effective way to compute Scrape Rate.

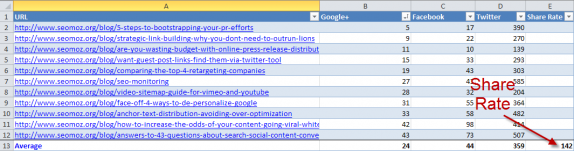

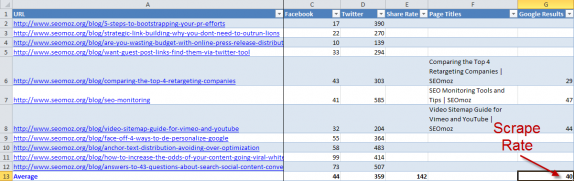

Share metrics are available via free APIs and/or even directly in Excel via Neil Bosma’s SeoTools plugin, and are therefore far easier to come by than Google results numbers. So while Jon suggested that you pull 3 random URLs in computing Scrape Rate, I’m going to suggest something more empirical. We’re going to pull all the URLs for the section of the site that we want to guest post on, pull social metrics for all of those URLs, and then calculate an average of the social metrics across them. For speed and simplicity in creating this post I’m using 11 URLs from the SEOmoz blog.

- Download and Install Neil Bosma’s SeoTools – That is if you haven’t already.

- Download the XML Sitemap

- Open the XML Sitemap in Excel – Hat tip to Justin Briggs for this one. I had no idea that Excel opened and properly formatted XML files.

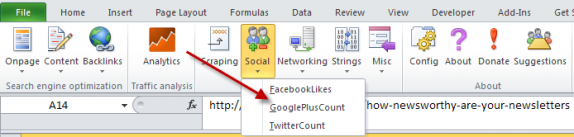

- Pull the Facebook Likes, Google Plus Ones, and Tweet Counts for each URL. The functions are =FacebookLikes(), =TwitterCount() and =GooglePlusCount().

- Sort the values from lowest to highest – this is important later for computing Scrape Rate.

- Calculate the average of each metric across all the URLs

- Calculate the average of the averages – this single number is the Share Rate
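If you would rather script these steps than do them in Excel, here is a minimal Python sketch of the same arithmetic. It assumes you have already collected the share counts yourself (from SeoTools or any API you like); the function names, the `facebook`/`twitter`/`gplus` keys, and the choice to sort by total shares are my own assumptions for illustration, not part of SeoTools or Jon’s method.

```python
import xml.etree.ElementTree as ET
from statistics import mean

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

NETWORKS = ("facebook", "twitter", "gplus")  # hypothetical key names


def urls_from_sitemap(xml_text):
    """Return every <loc> URL found in a standard XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def share_rate(counts_by_url):
    """Average each network's counts, then average those averages.

    counts_by_url maps URL -> {"facebook": int, "twitter": int, "gplus": int}.
    Returns (per-network averages, overall Share Rate).
    """
    averages = {n: mean(c[n] for c in counts_by_url.values()) for n in NETWORKS}
    return averages, mean(averages.values())


def sort_by_total_shares(counts_by_url):
    """Sort URLs from least to most shared (assumption: rank by total shares)."""
    return sorted(counts_by_url, key=lambda url: sum(counts_by_url[url].values()))


# Made-up numbers purely for illustration.
example_counts = {
    "http://example.com/post-a": {"facebook": 10, "twitter": 300, "gplus": 20},
    "http://example.com/post-b": {"facebook": 30, "twitter": 500, "gplus": 40},
}
example_averages, example_share_rate = share_rate(example_counts)
```

Keeping the sorted list around matters because the Scrape Rate calculation picks its sample from the middle of it.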

Computing Scrape Rate

Jon talks about pulling 3 random URLs in order to determine the Scrape Rate. While this is a good quick-and-dirty gauge of the Scrape Rate, it’s not empirical. That is to say, with only a random sample you may end up with a Scrape Rate that isn’t truly indicative of the site’s propensity to be scraped. This is where Share Rate can inform the Scrape Rate. Using the share counts as an indication of popularity lets you weigh the importance of pages, so you can get a better Scrape Rate by averaging the 3 pages that sit at the median of the list sorted by share counts.

- Find the median of the sorted URL list. So if there are 200 URLs, the median is roughly the 100th URL.

- Pull the median URL, the URL preceding and the URL following.

- Pull the page titles for these 3 pages using =HtmlTitle() in SeoTools.

- Run “intitle:” queries with these 3 page titles and record the number of results Google returns. Note: SeoTools has a function for pulling the number of results (=GoogleResultCount) but I couldn’t get it to work properly with CONCATENATE and an intitle: query.

- Take an average of these numbers.
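For anyone who wants to see the selection logic spelled out, here is a rough Python sketch of the steps above. It assumes the URL list is already sorted by share counts and that you record the Google result counts by hand; the function names are hypothetical, and wrapping the title in quotes inside the intitle: query is my own assumption about how you would run the search.

```python
from statistics import mean


def median_window(sorted_urls):
    """Return the median URL plus the URL before and after it.

    Uses the lower middle for even-length lists, so 200 URLs gives
    the 100th URL as the median, matching the step above.
    """
    if not sorted_urls:
        return []
    mid = (len(sorted_urls) - 1) // 2
    return sorted_urls[max(mid - 1, 0): mid + 2]


def intitle_query(page_title):
    """Build the query string to paste into Google (quoting is an assumption)."""
    return 'intitle:"{}"'.format(page_title)


def scrape_rate(result_counts):
    """Average the result counts recorded for the 3 intitle: queries."""
    return mean(result_counts)


# Made-up data purely for illustration.
example_urls = ["url-1", "url-2", "url-3", "url-4", "url-5"]
example_sample = median_window(example_urls)
example_rate = scrape_rate([80, 100, 120])
```

The result counts still have to come from somewhere; this sketch deliberately leaves that part manual rather than guessing at an API.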

Voila, we have an empirical Scrape Rate!

Conclusion

Guest posting takes a lot of time and effort, and you always want to get the most bang for your buck. Scrape Rate and Share Rate are great metrics for determining how worth your while it will be to write a guest post on a given site. As always, you know I’m not into all this manual labor for computing something like this, so I’m already working on a tool… Stay tuned.

As always, your thoughts and feedback are appreciated. Also, a big shout out to Jon Cooper for his great work!

Happy guest posting!