GoogleBot is actually Chrome: The Jig is Up!

Why a Search Giant decided to build the Fastest Browser ever…

Background

Over the years, Google has built one of the most impressive technology companies in the world, with products ranging from an advertising empire to one of the top mobile platforms (Android). They’ve raised a pile of money and brought some of the most talented people in the world together under one banner, and all of this was accomplished on the back of a single flagship offering: Google’s Web Search.

For those of you who have been under a rock for a decade or so, Google Search is probably the most popular search application anywhere in the world, owning roughly 65% market share in the US alone.

This freely available product is guided by a deceptively simple mission statement:

“To organize the world’s information and make it universally accessible and useful.” [i]

It’s not unreasonable to state that all of Google’s achievements were in some way dependent on Google’s Web Search. The proficiencies gained from large-scale web crawling and content categorization have made the engineering teams at Google experts and innovators in a wide variety of fields, from computer hardware (motherboards with batteries, mean time to failure on consumer hard drives) to database systems (Bigtable, MapReduce) and even file systems (GFS).

They have also advanced the fields of Optical Character Recognition and Natural Language Processing through the Google Books and Google Translate projects, both of which were probably aided by the natural language data derived from web-scale crawling.

Google Ads would be impossible without Google Web Search, and without the revenue brought in from Google AdWords during the early stages, Google may not have been able to invest so heavily in acquisition and innovation.

Since search drives Google’s success, why would Google even bother to get involved in the browser wars? The browser market is fiercely competitive, and not particularly lucrative. Building a browser also comes with the burden of supporting users, maintaining standards compliance, and mitigating privacy or regulatory concerns.

Yes, user data from the opt-in program is highly relevant and highly valuable to what they do… but this could have been achieved through plugins, add-ons, and integration agreements as they have done with the Google Toolbar and the Firefox Search Licensing arrangement without the extra burdens related to managing and supporting the Chromium Project.

And if that weren’t enough to make you curious, Google didn’t just take WebKit’s rendering engine and call it Chrome… they created a new JavaScript engine known as V8. This new engine is perhaps the fastest available, and Google chose to add engineering complexity by making it standalone and embeddable, incidentally making projects like Node.js possible. The engineering effort that went into V8 is no small matter: it was written entirely in C++ and designed to compile JavaScript to machine code for speed. V8 firmly demonstrates the level of talent Google has at its disposal.

Google also built a multi-process architecture around the WebKit core, creating a browser where each “tab” runs in its own operating system process, providing unparalleled fault tolerance in the event of unsafe script execution. A tab can crash without crashing the entire browsing session. This seemingly minor change would have required major engineering work around WebKit, and again demonstrates a high level of familiarity not only with operating system and CPU architecture, but with the underlying code of the WebKit project.

So honestly, why all this effort for a free product? Why fork WebKit and create Chromium instead of simply contributing directly to the WebKit project?

What if Chrome was in fact a repackaging of their search crawler, affectionately known as Googlebot, for the consumer environment? How much more valuable would user behavioral data be if it could be mapped to their Search Stack on a 1:1 basis?

Feasibility of a Native Browser Spider

The first question we should probably ask ourselves, is “Can a browser even be used as a Spider?” Fundamentally, a browser is just software that provides an implementation of the W3C DOM Specification via a Rendering Engine, and a scripting engine to enable any additional scripting resources.

If the source code is available, it’s absolutely possible to edit the Rendering Engine to perform feature extraction tasks such as returning the Page TITLE element, or returning all ANCHOR elements on the page.
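To make "feature extraction" concrete, here is a minimal sketch of pulling the TITLE and ANCHOR elements out of a page. It uses Python's standard-library `html.parser` instead of a real rendering engine, so it only sees the static markup; the class name, function name, and sample page are all invented for illustration.

```python
from html.parser import HTMLParser


class TitleAndLinkExtractor(HTMLParser):
    """Collects the page title and every anchor href from static markup."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip named anchors that have no href
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_features(html):
    """Returns the title text and the list of link targets for one page."""
    parser = TitleAndLinkExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


# Hypothetical sample page, not taken from any real site.
SAMPLE_PAGE = """
<html><head><title> Example Page </title></head>
<body><a href="/about">About</a> <a href="https://example.com/contact">Contact</a>
<a name="top">No href here</a></body></html>
"""

features = extract_features(SAMPLE_PAGE)
print(features)
```

A rendering engine would expose the same information through its DOM after scripts and styles have been applied, which is exactly what this static approach cannot see.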

WebKit and The Mozilla Foundation both provide open source rendering and scripting engines, and the Chromium Project represents Google’s own foray into the market. The lesser known KHTML is yet another open source Rendering Engine.

WebKit is developed in C++, which is one of Google’s primary development languages and is in fact the same language the V8 Engine was written in. Interestingly, it also provides native Python bindings through Qt and GTK, making it even more attractive for Google. The Chromium Project even offers a remote administration & testing tool in Python, making it possible to interact with the browser from Python scripts across servers.

The Mozilla Foundation offers the open source Gecko as a Rendering Engine and SpiderMonkey as its Scripting Engine (Rhino, Mozilla’s other JavaScript engine, is written in Java and is not part of the browser). Gecko seems to have GTK bindings via MozEmbed. I haven’t found much documentation about a MozEmbed Qt binding; there seems to be one, but it may not be well used.

Though WebKit was only open sourced in 2005, KHTML and Gecko have been around for quite a long while; KHTML was the initial basis for WebKit/Safari prior to Apple’s open sourcing of the project.

If we wanted to remove specific engineering expertise from the equation, the Selenium Project also provides a web browser automation toolkit that Google could leverage, though I believe the engineering overhead would make this an unwieldy solution. Direct integration with the browser would provide the best performance… especially when your browser is engineered to run a separate process per tab and has a lightning fast JavaScript engine. Mozilla’s project offers a command line interface which can be used remotely, though it seems to be far less usable than a direct implementation.

Based on the age of the open source rendering engines, and Google’s unique engineering expertise, it’s certainly possible for Google to use a Native, DOM Compliant browser in their crawling or indexing activities. In fact thanks to Chromium Remote, Selenium, and other related regression testing projects, it’s possible for a skilled SEO Professional to attempt to implement native browser based SEO Tools.

Whether Google leverages a simple browser plugin, a custom implementation of a browser, or a special remote testing suite to access the browser remotely, having access to a native and compliant DOM implementation is of great benefit to anyone seeking to extract ranking features from web pages.

Advantages of a Native Browser as a Spider

Having established that it’s highly possible for Google to implement a native browser as a spider or indexing utility, we now have to ask ourselves “What are the benefits of doing so?” It’s certainly not an easy thing to do, after all, so the payoff should be significant.

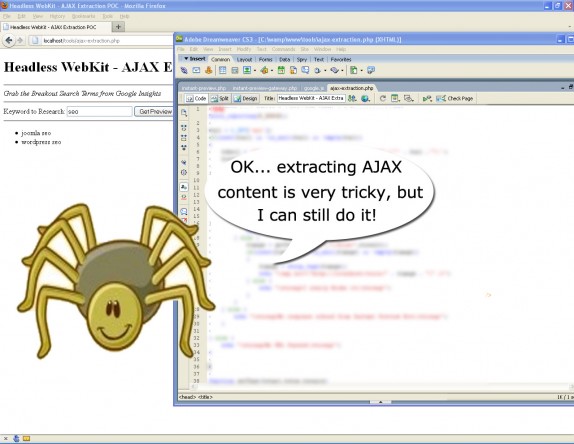

A native browser implementation enables JavaScript and CSS Support, provides full DOM Access, and even opens up the possibility to index/access dynamic content including AJAX. Deep integrations for Flash, PDF, and other non-HTML content are available via plugins.

Page and dependency load times are trackable, while DOM access provides the ability to manipulate form elements or extract data from elements within a page. Using the getComputedStyle method, simple JavaScript can detect hidden elements even if they are hidden after the page loads by a JavaScript transformation.

There are synthetic implementations of the DOM, such as jsdom, Beautiful Soup, and HtmlUnit, which provide some of the benefits of a native browser DOM, such as extracting elements by name or id, or filling and clicking elements, but they lack the ability to detect DOM transformations by scripting languages or to extract computed styles. These missing features are critical for attempting to detect hidden links or text with a suitable degree of accuracy. These synthetic DOM implementations also do not afford any access to plugins or AJAX content.
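The limitation is easy to demonstrate. The sketch below is a deliberately naive static check, written with Python's standard-library parser, that flags elements hidden through an inline `style` attribute. The sample markup and the class name are made up for illustration. The second link is hidden by a script, and because nothing here executes that script, the static check never notices.

```python
from html.parser import HTMLParser


class InlineHiddenFinder(HTMLParser):
    """Flags elements hidden by an inline style attribute only."""

    HIDING_RULES = ("display:none", "visibility:hidden")

    def __init__(self):
        super().__init__()
        self.hidden_ids = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Normalise spacing and case so "display: none" matches too.
        style = (attributes.get("style") or "").replace(" ", "").lower()
        if any(rule in style for rule in self.HIDING_RULES):
            self.hidden_ids.append(attributes.get("id"))


# Hypothetical page: one link hidden inline, one hidden later by a script.
PAGE = """
<a id="inline-hidden" href="/a" style="display: none">A</a>
<a id="script-hidden" href="/b">B</a>
<script>
  document.getElementById("script-hidden").style.display = "none";
</script>
"""

finder = InlineHiddenFinder()
finder.feed(PAGE)
finder.close()
print(finder.hidden_ids)  # the script-hidden link is not reported
```

A browser asked for the computed style of both links after the script has run would report both as hidden, which is the gap a native DOM closes.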

Perhaps the most important benefit of using a native browser for crawling and indexing documents is that it closely emulates the user experience across a host of parameters. Page load time, final markup and content, and even the positions of the elements within the DOM will mirror the experience of a user almost 1:1; barring any non-standard implementations of the DOM in the rendering engine.

Making the Case: Googlebot is a Browser

So far we’ve seen that it’s not only viable, but of substantial benefit to use a web browser for crawling; but what evidence do we have that Google has ever considered this? For this we can turn to the patent filings by Google and their competitors in the search sphere.

In the patent “Document segmentation based on visual gaps” [ii] we see Google discussing Computer Vision methods for determining the structure of a page and separating content, some of which rely on an understanding of the underlying DOM of the page.

“[0038] In situations in which document 500 is a web page, document 500 may be generated using a markup language, such as HTML. The particular HTML elements and style used to layout different web pages varies greatly. Although HTML is based on a hierarchical document object model (DOM), the hierarchy of the DOM is not necessarily indicative of the visual layout or visual segmentation of the document.” [emphasis added]

“[0039] Segmentation component 230 may generate a visual model of the candidate document (act 403). The visual model may be particularly based on visual gaps or separators, such as white space, in the document. In the context of HTML, for instance, different HTML elements may be assigned various weights (numerical values) that attempt to quantify the magnitude of the visual gap introduced into the rendered document. In one implementation, larger weights may indicate larger visual gaps. The weights may be determined in a number of ways. The weights may, for instance, be determined by subjective analysis of a number of HTML documents for HTML elements that tend to visually separate the documents. Based on this subjective analysis weights may be initially assigned and then modified (“tweaked”) until documents are acceptably segmented. Other techniques for generating appropriate weights may also be used, such as based on examination of the behavior or source code of Web browser software or using a labeled corpus of hand-segmented web pages to automatically set weights through a machine learning process.” [emphasis added]

This patent describes a process of visual segmentation which may rely on the behavior of a browser, and which showcases an understanding of the DOM and its shortcomings. While not a smoking gun it clearly shows that Google is familiar with the DOM, and has considered the value that a browser can bring to their analysis of a document.
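As a rough illustration of the weighting idea in paragraph [0039], the sketch below assigns a gap weight to a handful of HTML elements and starts a new segment whenever an element's weight reaches a threshold. The weights, the threshold, and the class name are my own invented values, not figures from the patent, and a real system would work from the rendered layout instead of raw tags.

```python
from html.parser import HTMLParser

# Hypothetical weights: larger numbers stand for larger visual gaps.
GAP_WEIGHTS = {"h1": 5, "hr": 5, "div": 4, "table": 4, "p": 3, "br": 1, "span": 0}


class GapSegmenter(HTMLParser):
    """Splits page text wherever the markup implies a large enough gap."""

    def __init__(self, threshold=3):
        super().__init__()
        self.threshold = threshold
        self.segments = []
        self._current = []

    def _flush(self):
        # Close the segment being built, if it holds any text.
        text = " ".join(self._current).strip()
        if text:
            self.segments.append(text)
        self._current = []

    def _gap(self, tag):
        if GAP_WEIGHTS.get(tag, 0) >= self.threshold:
            self._flush()

    def handle_starttag(self, tag, attrs):
        self._gap(tag)

    def handle_endtag(self, tag):
        self._gap(tag)

    def handle_data(self, data):
        if data.strip():
            self._current.append(data.strip())

    def close(self):
        super().close()
        self._flush()


def segment(html, threshold=3):
    """Returns the text segments separated by gaps at or above the threshold."""
    segmenter = GapSegmenter(threshold)
    segmenter.feed(html)
    segmenter.close()
    return segmenter.segments


PAGE = "<h1>News</h1><p>First <span>story</span><br>continues</p><div>Footer links</div>"
print(segment(PAGE))
print(segment(PAGE, threshold=1))
```

Lowering the threshold makes the line break count as a separator too, which mirrors the "tweaking" of weights the patent describes.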

Google’s Instant Preview product may well be a byproduct of this research into visual gaps and page structure, though we’ll return to that a little later.

Browsers don’t just render the DOM Hierarchy of HTML, they include transformations via CSS and JavaScript, and for Google to extract the most meaningful features from a web page it would be necessary to have access to these transformations.

In the patent “Ranking documents based on user behavior and/or feature data” [iii] we see the following claims:

“The document information may also, or alternatively, include feature data associated with features of documents (“source documents”), links in the source documents, and possibly documents pointed to by these links (“target documents”). Examples of features associated with a link might include the font size of the anchor text associated with the link; the position of the link (measured, for example, in a HTML list, in running text, above or below the first screenful viewed on an 800.times.600 browser display, side (top, bottom, left, right) of document, in a footer, in a sidebar, etc.); if the link is in a list, the position of the link in the list; font color and/or attributes of the link (e.g., italics, gray, same color as background, etc.); number of words in anchor text associated with the link; actual words in the anchor text associated with the link; commerciality of the anchor text associated with the link; type of the link (e.g., image link); if the link is associated with an image (i.e., image link), the aspect ratio of the image; the context of a few words before and/or after the link; a topical cluster with which the anchor text of the link is associated; whether the link leads somewhere on the same host or domain; if the link leads to somewhere on the same domain, whether the link URL is shorter than the referring URL; and/or whether the link URL embeds another URL (e.g., for server-side redirection). This list is not exhaustive and may include more, less, or different features associated with a link.” [emphasis added]

Many of these features, such as the font color, styling, and size, would require direct access to the Cascading Style Sheets and any JavaScript transformations related to the element in question. Most modern browsers support a “getComputedStyle” method within their DOM implementation, allowing a client to request the final style of an element based on current script transformations.

[Image: getComputedStyle called from the Chrome JavaScript console]

Another interesting patent, “Searching through content which is accessible through web-based forms” [iv], relates to Google’s ability to index content behind HTML FORMs with GET methods [v] and gets us closer to uncovering the technology behind Google’s crawlers. This patent describes a process where a crawler can actually manipulate a GET form for the purposes of crawling, or even redirect a search query through the form for the end user.

“Many web sites often use JavaScript to modify the method invocation string before form submission. This is done to prevent each crawling of their web forms. These web forms cannot be automatically invoked easily. In various embodiments, to get around this impediment, a JavaScript emulation engine is used. In one implementation, a simple browser client is invoked, which in turn invokes a JavaScript engine. As part of the description of any web form, JavaScript fragments on a web page are also recorded. Before invoking a web form, the script on the emulation engine is executed to get the modified invocation string if any. The parameters (and their mapped words or internal values) are then concatenated to the invocation string along with the values for any hidden inputs.”

Here we see Google claiming an implementation of a simple browser client, with a JavaScript engine, further confirming that crawlers can and may take advantage of both native DOM via a browser and a JavaScript engine.
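The last step of that quoted process, concatenating parameters and hidden inputs onto the invocation string, can be sketched in a few lines. This is only an illustration under my own assumptions: the function name, the example form, and its values are hypothetical, and the JavaScript emulation step that might rewrite the action beforehand is left out entirely.

```python
from urllib.parse import urlencode, urljoin


def build_invocation_url(page_url, action, visible_values, hidden_inputs):
    """Builds the URL that submitting a GET form would request."""
    # Hidden inputs are submitted alongside the values a crawler chooses.
    params = {**hidden_inputs, **visible_values}
    # The form action is resolved relative to the page that hosts the form.
    return urljoin(page_url, action) + "?" + urlencode(params)


# Hypothetical search form on a hypothetical catalog page.
url = build_invocation_url(
    page_url="https://example.com/catalog/",
    action="search",
    visible_values={"q": "red shoes", "size": "9"},
    hidden_inputs={"lang": "en"},
)
print(url)
```

For this form the result is `https://example.com/catalog/search?lang=en&q=red+shoes&size=9`. The interesting part of the patent is the step skipped here: when a script alters the action before submission, something has to execute that script to learn the real invocation string.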

Patents don’t necessarily offer proof of implementation though, as they serve mainly to document and protect intellectual property. It’s wholly possible that these patents are merely proofs of concept designed to secure the safety of future innovations within Google. To find evidence of implementation, we’ll have to dig deeper…

In addition to the previously mentioned Webmaster Central article “Crawling through HTML Forms” of 2008, we find evidence of the implementation of both patents thanks to the efforts of some SEO Professionals sharing their test results with the SEO Community via SEOmoz’s popular YOUmoz blog.

In “New Reality: Google Follows Links in JavaScript”[vi] we see evidence that not only is Google executing and crawling links within JavaScript, it’s actually able to do so within jQuery AJAX requests. This example is interesting, as it dates to roughly the same period as the Webmaster Central announcement and highlights some very advanced JavaScript functionality… even today it’s commonly believed by SEO Professionals that Google has no access to AJAX content, though it appears they have had access to at least ANCHOR elements generated by AJAX since at least 2008/2009. This post also seems to confirm that the techniques of the patent “Ranking documents based on user behavior and/or feature data” are being deployed, as shorter URLs under the parent domain were extracted and crawled first in accordance with the following:

“whether the link leads somewhere on the same host or domain; if the link leads to somewhere on the same domain, whether the link URL is shorter than the referring URL;”
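Features like these are cheap to compute once a link has been extracted. Here is a minimal sketch covering a few of the features from the quoted list that need no rendering. The ordering rule in `crawl_order` is my own assumption, modelled on the behavior reported in the YOUmoz post and not on anything the patent specifies, and all URLs are invented.

```python
from urllib.parse import urlparse


def link_features(referring_url, link_url, anchor_text):
    """Computes a few rendering-independent link features."""
    source, target = urlparse(referring_url), urlparse(link_url)
    same_host = source.netloc == target.netloc
    return {
        "url": link_url,
        "anchor_words": len(anchor_text.split()),
        "same_host": same_host,
        "shorter_than_referrer": same_host and len(link_url) < len(referring_url),
        # A second scheme inside the query string suggests an embedded URL.
        "embeds_url": "http://" in target.query or "https://" in target.query,
    }


def crawl_order(referring_url, links):
    """Hypothetical ordering: same-host links first, shorter URLs before longer."""
    features = [link_features(referring_url, url, text) for url, text in links]
    return sorted(features, key=lambda f: (not f["same_host"], len(f["url"])))


REFERRER = "https://example.com/blog/2011/10/post.html"
LINKS = [
    ("https://example.com/blog/2011/10/post.html?page=2", "next page"),
    ("https://other.example.org/", "partner"),
    ("https://example.com/blog/", "blog home"),
    ("https://example.com/out?u=https://other.example.org/x", "tracked link"),
]

ordered = crawl_order(REFERRER, LINKS)
for feature in ordered:
    print(feature)
```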

A more recent article in Forbes, “Google Isn’t Just Reading Your Links, It’s Now Running Your Code” [vii], discusses some advances made during the “Caffeine” update of June 2010, further confirming that Google doesn’t just parse JavaScript, but executes it in an attempt to understand the underlying behavior. The article briefly discusses the difficulty of understanding JavaScript without executing it, and makes the case that execution is the only way to really understand what a snippet is attempting to do, and when it may start or stop its operations.

These assessments lead us to conclude that Google is absolutely parsing, and even periodically executing JavaScript… but does that necessarily require a browser? V8 is a standalone, embeddable JavaScript Execution engine after all.

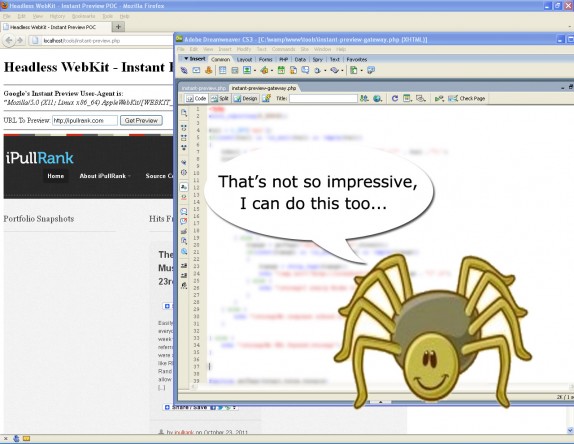

This is the moment where we return to Google’s “Instant Preview” product, which may in fact be a practical extension of the Visual Gap Analysis patent, re-packaged as an enhancement to Google Web Search. Google’s own FAQ for Instant Preview indicates that visual previews can be generated “on-the-fly” by user request, or during a crawl by Googlebot. The Instant Preview will include keyword highlighting relevant to the user’s query. The FAQ continues to explain how you can tell the difference between an “on-the-fly” preview and a crawled preview:

“Q: What’s the difference between on-the-fly and crawled previews?

A: Crawled previews are generated with content fetched with Googlebot. When we generate previews on-the-fly based on user requests, we use the “Google Web Preview” user-agent, currently:

Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/[WEBKIT_VERSION] (KHTML, like Gecko; Google Web Preview) Chrome/[CHROME_VERSION] Safari/[WEBKIT_VERSION]

As on-the-fly rendering is only done based on a user request (when a user activates previews), it’s possible that it will include embedded content which may be blocked from Googlebot using a robots.txt file.”

Notice the Chrome/Webkit User-Agent, flagged as Mozilla Compatible. The standard GoogleBot User-Agent also identifies itself as being Mozilla compatible, without explicitly stating whether or not it is in fact Chrome. While we still do not have definitive evidence that the Chromium project is the underlying source for GoogleBot, we have certainly verified that GoogleBot is a browser.
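The difference between the two user-agents is easy to see by pulling out the product tokens. In the sketch below, the preview string is the one quoted from the FAQ, placeholders included. The Googlebot string is the widely published Googlebot 2.1 user-agent and is my addition, not something quoted in this article. The function names are invented for illustration.

```python
import re

# Product tokens look like Name/Version; parenthesised comments are skipped.
PRODUCT_TOKEN = re.compile(r"([A-Za-z]+)/(\S+)")

PREVIEW_UA = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/[WEBKIT_VERSION] "
    "(KHTML, like Gecko; Google Web Preview) Chrome/[CHROME_VERSION] "
    "Safari/[WEBKIT_VERSION]"
)
# Assumption: the commonly documented Googlebot 2.1 user-agent string.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def product_tokens(user_agent):
    """Returns the product tokens found outside parenthesised comments."""
    without_comments = re.sub(r"\([^)]*\)", " ", user_agent)
    return dict(PRODUCT_TOKEN.findall(without_comments))


def describe(user_agent):
    """Summarises which engines a user-agent string openly declares."""
    tokens = product_tokens(user_agent)
    return {
        "mozilla_compatible": "Mozilla" in tokens,
        "declares_webkit": "AppleWebKit" in tokens,
        "declares_chrome": "Chrome" in tokens,
    }


print(describe(PREVIEW_UA))
print(describe(GOOGLEBOT_UA))
```

The preview user-agent declares WebKit and Chrome outright, while the crawler's string declares only Mozilla compatibility, so the user-agent alone cannot settle which engine sits behind Googlebot.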

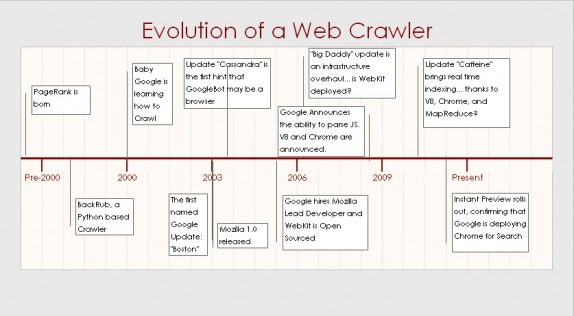

Evolution of a Web Crawler

BackRub Era, pre-2000

A Python based crawler, designed primarily to extract links for citation analysis. This is the birth of PageRank.

Early Google, 2000 to 2003

Early on, Google is primarily streamlining what was BackRub. The team is probably converting the crawler to C++ and working on a distributed crawling system and overcoming the massive architecture challenges related to web-scale crawling and indexing.

As time goes on, Google is probably looking for ways to improve their feature extraction and cope with the issues inherent in the initial PageRank algorithm and expanding their indexing beyond citation analysis. Crawlers are probably still sophisticated parsing scripts with an artificial DOM implementation, based largely around pattern matching.

2003 sees the first named Google update; Boston. This heralds a habit of naming and announcing major updates and engaging the search community at large.

Google 2003 to 2006

In April of 2003 the Cassandra update arrives, offering changes to the algorithm to detect hidden links and text. At this stage, Google would definitely benefit from having a native browser and Mozilla 1.0 is available under an open source license.

In 2004 we see the “Austin” update which again implements functionality that hints at a native Browser behind the crawler. Austin attempts to put an end to hidden text. 2004 is also the year of the Google IPO.

2005 sees Google hiring away a key Mozilla developer, leading to suspicions of a Google Browser. It is also the year of the Big Daddy update. Big Daddy is claimed as an infrastructure update, and its rollout carries over into 2006. WebKit is officially open sourced in June 2005, and Big Daddy begins in December; if Google were shifting away from Gecko/Mozilla in favor of WebKit, this would be the time to do so.

Google 2006 to 2009

2006 through 2008 represent feature enhancement updates; Google spends some time revamping how we interact with search. They’ve got a lot of data, and now they’re sharing it to make the user experience richer and more useful. We see universal search expanding the types of information returned, and Suggest reveals the depth of Google’s natural language processing capabilities.

2006 is one of the first occurrences of GoogleBot 2.1 with its Mozilla/5.0 Compatible user-agent.

Webmasters also begin noticing new behavior, such as GoogleBot requesting CSS resources. [viii] It is in April 2008 that Google announces their ability to crawl through HTML Forms, and parse some JavaScript.

During this seemingly slow period for major algorithm or infrastructure updates, the Chromium and V8 projects are introduced. They are launched on September 2nd, 2008. Chrome is touted as one of the fastest browsers ever thanks in part to the V8 JavaScript engine.

Interestingly, 2009 signals what will soon be a major shift in Google’s infrastructure with real time indexing and a preview of Caffeine. The innovations and advances in the Chromium project are likely partially responsible for this great leap forward, as crawling and feature extraction can essentially happen as one step through the use of Chrome as a crawler. With a real time, flexible MapReduce queue it would certainly be possible to index and rank documents in near real time.

Google 2009 to Present

2010 saw the full roll out of Caffeine, a major infrastructure update for Google enabling near real time indexing. Google Instant, and Instant Preview were also launched. Instant Preview offers the most compelling evidence of WebKit/Chrome in the search stack and will probably lead to some very interesting algorithm updates in the future as Google gathers data and refines their Computer Vision techniques.

Content Quality and Social Signals have become increasingly important to Google, as showcased by the Panda update and the DecorMyEyes scandal. I expect social to become even more important, especially as Google+ enables Google to construct behavioral profiles and establish author identity with more certainty. We’ll certainly see social media’s equivalent to Link Spam in due time.

Conclusions

It seems the evidence leads us to only one logical conclusion: Googlebot is a browser. Which browser is anyone’s guess, though we have some clues that we can use to draw some solid inferences. The user-agent makes Mozilla’s Gecko engine a possibility, and the standalone nature of V8 doesn’t entirely eliminate that possibility.

Another nod in favor of Gecko is the age and stability of the project; Gecko has been open source since 1998, while WebKit has only been open source since 2005. Google has also hired many talented Mozilla Engineers over the years. The recently leaked Search Tester document also demonstrates that Google requires its quality testers to lean on Firefox for their query testing.

However, based on the proposed timeline, it’s not entirely necessary for the underlying code base to be available to Google prior to 2005. This is especially true, as the V8 Engine and Chromium were announced simultaneously and precede a major Google Update; Google could have easily shifted gears and implemented Chrome during this time.

This becomes even more plausible when you consider the major changes made to Chrome; each tab being a separate process creates a high level of fault tolerance and redundancy. If a GoogleBot instance were a single server running Chrome via Chromium Remote, with say 3 to 5 tabs, it would be possible for Google to provide parallel crawling and indexing with minimal risk. If a tab crashes, the other tabs are isolated and protected; this behavior is not available in even the most recent editions of Firefox.
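The isolation argument can be shown with ordinary operating system processes. The sketch below is not Chrome and makes no claim about how Google runs its crawlers. It simply starts three hypothetical "tabs" as separate Python interpreter processes, crashes one on purpose, and shows that the others still finish their work.

```python
import subprocess
import sys

# Each "tab" is a separate interpreter process; the second crashes on purpose.
TAB_SCRIPTS = {
    "tab-1": "print('rendered page 1')",
    "tab-2": "import os; os._exit(1)",
    "tab-3": "print('rendered page 3')",
}


def run_tabs(scripts):
    """Starts every script in its own process and records how each one ended."""
    processes = {
        name: subprocess.Popen(
            [sys.executable, "-c", code], stdout=subprocess.PIPE, text=True
        )
        for name, code in scripts.items()
    }
    results = {}
    for name, process in processes.items():
        output, _ = process.communicate()  # wait for this tab to finish
        results[name] = {
            "crashed": process.returncode != 0,
            "output": output.strip(),
        }
    return results


results = run_tabs(TAB_SCRIPTS)
for name, outcome in results.items():
    print(name, outcome)
```

The parent process plays the role of the browser shell: it learns that one child died, keeps the results from the others, and could simply restart the failed tab.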

In short, Chrome was engineered for multi-process crawling; lightning fast browsing is just a pleasant side effect for users.

Some Closing Thoughts

The importance of Google Web Search to Google’s entire empire cannot be overstated. Whether it provides revenue opportunities, data to mine, or simply technological hurdles that lead to the next generation of innovative solutions, Google is fueled by Web Search.

Another important takeaway is that Google is highly dependent on open source software to fuel its innovations, and isn’t afraid to borrow what it needs to create something that will change the world. It’s not all sunshine and roses though; Google has offended many important players, including the late Steve Jobs, and Sun. They’ve run afoul of Federal Regulations, and are a total privacy quagmire. Despite their reliance on Open Source Software, and their love of data, Google is still very much a Walled Garden and there’s lots of room for the next generation to do what they do, only better.

Big Data and the ability to accurately extract it, classify it, and store it has enabled Google to become a leader in so many fields, and for the first time in history the same tools and techniques are at the fingertips of the average developer.

Projects like Hadoop bring MapReduce into the Open Source arena, while the NoSQL Movement and the growth of the Cloud are making it possible for anyone to build a mini-Googleplex. Projects like NLTK bring Natural Language Processing out of the University and into the hands of mad scientists like Michael King, and the explosion of APIs is making more and more data available without the added complexity of crawling.

So what are we waiting for? If you’re developing SEO for enterprises, Googlebot plays a massive role in your crawling strategies. The next iteration to consider is how Googlebot for desktop and mobile might impact the next step of a mobile-only indexing environment. Let’s move forward and build the future.

Thanks and Acknowledgements:

Thanks to Bill Slawski of SEO by the Sea for pointing me at some very relevant patents, and being so willing to share. He’s quite a nice fellow.

Special thanks go out to Mike King for encouraging me to keep pecking away at this crazy idea, and for always being available to hear my zany ideas. It’s a privilege to call him a colleague, but it’s truly an honor to call him a friend.

References:

[i] “Google Enterprise.” Google. Web. 28 Oct. 2011. <https://www.google.com/enterprise/whygoogle.html>.

[ii] “Document Segmentation Based on Visual Gaps.” Google. Web. 28 Oct. 2011. <https://appft1.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&u=%2Fnetahtml%2FPTO%2Fsearch-adv.html&r=1&f=G&l=50&d=PG01&p=1&S1=20060149775&OS=20060149775&RS=20060149775>.

[iii] “Ranking documents based on user behavior and/or feature data.” Google. Web. 28 Oct. 2011. <https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&u=%2Fnetahtml%2FPTO%2Fsearch-adv.htm&r=1&p=1&f=G&l=50&d=PTXT&S1=7,716,225.PN.&OS=pn/7,716,225&RS=PN/7,716,225>.

[iv] “Searching through content which is accessible through web-based forms.” Google. Web. 28 Oct. 2011. <https://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&u=%2Fnetahtml%2FPTO%2Fsearch-adv.htm&r=8&f=G&l=50&d=PTXT&p=1&p=1&S1=%28%28browser+AND+crawler%29+AND+google%29&OS=browser+AND+crawler+AND+google&RS=%28%28browser+AND+crawler%29+AND+google%29>.

[v] “Crawling through HTML Forms.” Official Google Webmaster Central Blog. Web. 28 Oct. 2011. <https://googlewebmastercentral.blogspot.com/2008/04/crawling-through-html-forms.html>.

[vi] “New Reality: Google Follows Links in JavaScript. – YOUmoz | SEOmoz.” SEO Software. Simplified. | SEOmoz. Web. 29 Oct. 2011. <https://www.seomoz.org/ugc/new-reality-google-follows-links-in-javascript-4930>.

[vii] “Google Isn’t Just Reading Your Links, It’s Now Running Your Code – Forbes.” Information for the World’s Business Leaders – Forbes.com. Web. 29 Oct. 2011. <https://www.forbes.com/sites/velocity/2010/06/25/google-isnt-just-reading-your-links-its-now-running-your-code/>.

[viii] “GoogleBot Requested a CSS File! – Updated – EKstreme.com.” Webmaster, SEO, Tools and Tutorials. Web. 29 Oct. 2011. <https://ekstreme.com/thingsofsorts/seosem/googlebot-requested-a-css-file>.