Contributor: Emilija Gjorgjevska

Generative AI has shown its potential in the marketing sector while also highlighting the risks of AI technology. At WordLift and iPullRank, we’ve seen both firsthand as we build products and strategies that combine powerful AI workflows with human expertise.

One important lesson we’ve learned across these projects is how limited AI knowledge still is in the digital landscape. Using AI without focusing on data quality and understanding its complexities leads to unexpected consequences and risks to your reputation. That’s why putting safeguards and QA processes in place is critical to using these tools.

We’re also committed to promoting a more thoughtful AI implementation by sharing our insights and advocating for behavioral change in the SEO sector. Having had the privilege of pioneering intelligent digital marketing strategies in this sector for a collective span of over 30 years, we strongly believe in propelling this initiative further.

Safeguard your Brand Reputation: Use AI Wisely in Content Creation

AI can be really helpful for businesses, making things quicker and more efficient. But, there’s a catch – it can also bring some risks. Imagine you’re running an online store that sells candles and Christmas decorations. To save time, you decide to use targeted AI to write descriptions for your products. It’s a time-saver, but you’ve got to be careful that the AI doesn’t write things that aren’t true or aren’t appropriate. That’s where ethics come into play – it’s about doing the right thing!

In this article, I’ll dig into the topic of using AI fairly and responsibly when creating content. I’ll look at the risks and benefits it brings and suggest a new way to do it so that you get what you want without losing the uniqueness and quality of your content.

Go on, read on!

The Ethical Risks of AI in Content Marketing and SEO

- Bias and unfairness: Imagine you have a travel website that uses AI to write descriptions of places to visit. If the AI learns from biased information, it might end up favoring certain destinations and leaving out others based on stereotypes or cultural biases.

- Privacy and protecting information: Think about having an online store. If you use AI to suggest products to customers based on their browsing and buying history, it needs to be super careful with their private info. We’ve got to make sure their data is safe and follow the rules about privacy.

- Who’s responsible?: Let’s say you have a blog that uses AI to give ideas to writers. If the AI writes something, we need to know who should take the credit or the blame. We’ve got to set clear rules so everyone knows what’s what and who’s responsible if there’s any problem.

When we discuss using AI ethically to create content, we look at two main things:

- Intellectual property (IP): This means who owns the ideas or creations made by AI.

- Avoiding copying and making things too similar: We want to stop AI from just copying other stuff or making things that are too much like something else already out there.

These aspects are critical not only to ensure originality but also to increase value for users and search engines. Let’s see what it means in detail and try to analyze valuable examples for a better understanding of what we may be up against and how to get out safely!

Stop the Copycats: Keep Your AI Content Original and Cool

Imagine someone taking credit for your hard work and ideas. That’s a big worry when using AI to create content because it might copy what you’ve done. Sometimes, it’s hard to tell if something is original or just copycat content, which raises questions about who came up with the idea first.

It’s super important for people who manage content and do marketing to be really careful. They need to check where the AI gets its info, give credit if needed, and make sure they’re not copying others. Also, setting clear rules for using AI in making content can help avoid legal problems and make sure things are fair and honest.

A fake David LaChapelle created with Bing Image Creator powered by DALL·E 3 (without any consent from the artist).

David LaChapelle Springtime from the Earth Laughs in Flowers series (more information available on the artist’s website)

Copyright laws in the US and EU protect things like writing, music, movies, and more. When someone creates something original, like a song or a story, it’s automatically protected. In the US, though, the work generally has to be registered before its owner can sue for infringement or claim statutory damages.

There are rules that let you use copyrighted material without asking for permission. In the US, the ‘fair use’ doctrine allows limited use of copyrighted works for purposes such as teaching, criticism, or commentary. In the EU, the law says you generally need permission to put someone’s copyrighted work online.

But there are exceptions. For instance, you can use copyrighted things in the EU for parody or satire without asking permission.

Using generative AI that reproduces protected work without permission is a big worry. If it copies things that belong to someone else, it could get a company into big trouble. In the US, statutory damages for willful infringement can reach $150,000 for each infringed work.

In tandem with addressing IP concerns, an important consideration lies in the control of the data used to train AI models. While some models, like BLOOM, provide clear insights into their data sources, most widely used models, including OpenAI’s models, lack this transparency.

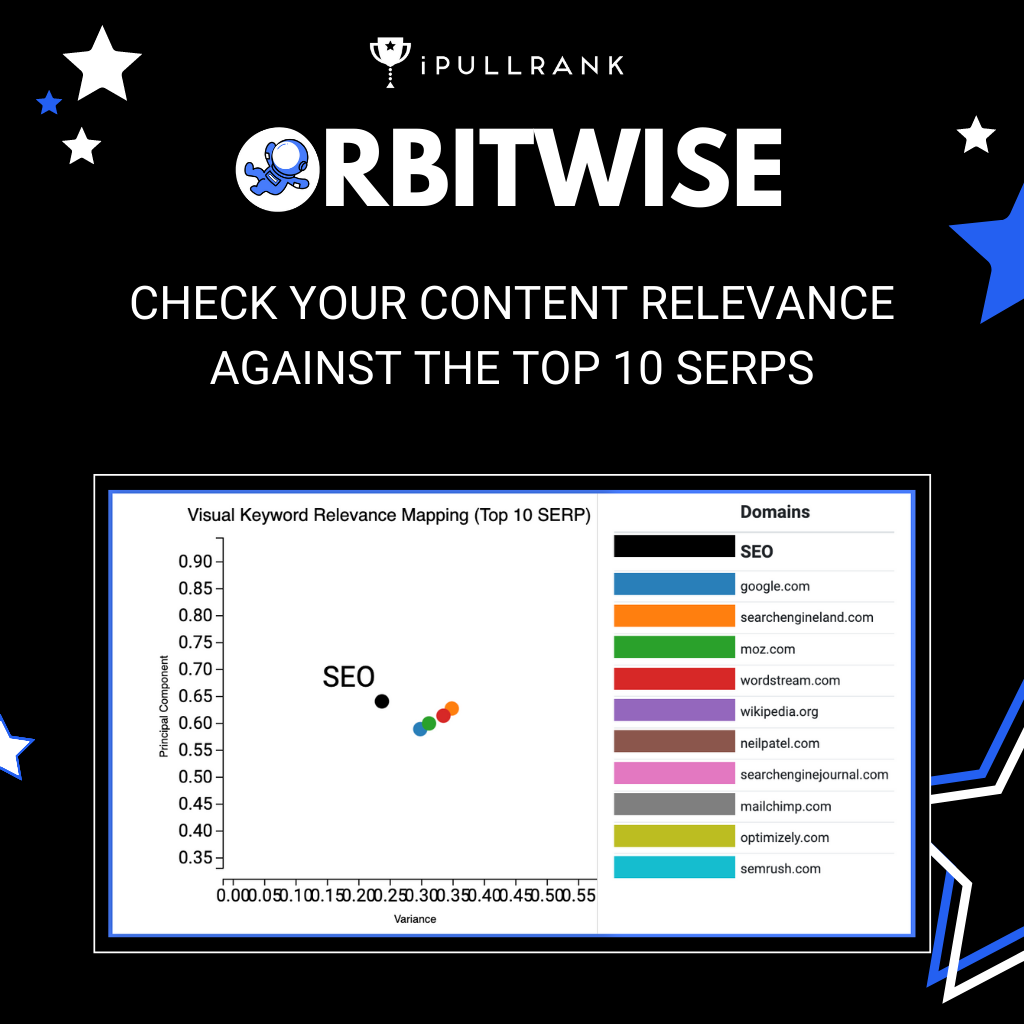

One option to address this need is to train the models using your data. This is where semantic data curation and knowledge graphs come into play.

Imagine semantic curation as organizing a library. It’s like arranging books by types – like adventure, mystery, or science – so it’s easy to find what you want. Semantic curation helps teach a language model by sorting information about things like animals into groups, such as mammals or birds.

Now, think of knowledge graphs like a huge mind map. Just like connections between friends on a social network, knowledge graphs link information. They show how different ideas are connected, helping the model understand the context.

These things are really important for making a language model better. Let’s say we’re talking about space exploration. We want the model to be correct and creative. Semantic curation helps by organizing details about rockets, planets, astronauts, and missions. This gives the model a clear way to learn. And knowledge graphs show how all these things in space exploration are linked. This helps the model create content that makes sense.
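As a rough illustration of the mind-map idea, a knowledge graph can be sketched as a list of subject, predicate, object triples. The relation names and helper functions below are invented for this example and are not taken from any particular graph or product.

```python
from collections import defaultdict

# Illustrative triples for the space exploration example (assumed structure).
TRIPLES = [
    ("Apollo 11", "is_a", "mission"),
    ("Apollo 11", "launched_on", "Saturn V"),
    ("Apollo 11", "crew_member", "Neil Armstrong"),
    ("Apollo 11", "destination", "Moon"),
    ("Saturn V", "is_a", "rocket"),
    ("Neil Armstrong", "is_a", "astronaut"),
]

def build_graph(triples):
    """Index the triples by subject so linked facts are easy to look up."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return graph

def describe(graph, entity):
    """Return the facts linked to an entity as short sentences for a prompt."""
    return [
        f"{entity} {predicate.replace('_', ' ')} {obj}"
        for predicate, obj in graph.get(entity, [])
    ]

graph = build_graph(TRIPLES)
facts = describe(graph, "Apollo 11")
```

Feeding `facts` into a prompt gives the model explicit, curated context instead of leaving it to guess how rockets, missions, and astronauts relate.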

Without these tools, using a language model is like being in a messy room without a map. It can get confusing. But with semantic curation and knowledge graphs, we can guide the model to produce content that matches what we want and stays original. It’s like having a reliable guide on a complicated trip, making sure we’re on the right track. However, it’s important to remember that these models were originally trained on other people’s data, so unless you are working only with your own private data, you need to decide which information is suitable to use.

#SEOntology: a framework for content optimization. Think of it as an operating system for your content strategy. This is a first draft. pic.twitter.com/hZHhaFMpWp

— Andrea Volpini (@cyberandy) October 21, 2023

We can make a model more creative by adjusting its temperature: a higher temperature makes the answers more random and creative, while a lower one makes them more predictable. However, higher temperatures can lead the LLM to bring in words or names it learned during training. This might not look dangerous, but if the model starts to mention famous people or things, it can cause legal problems for your company.
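To see why temperature changes how adventurous a model is, here is a minimal sketch of temperature-scaled softmax, the standard way token scores are turned into probabilities. The scores in `logits` are made up for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - highest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens

cold = softmax_with_temperature(logits, 0.2)  # low temperature: top token dominates
warm = softmax_with_temperature(logits, 1.5)  # high temperature: flatter distribution
```

At the low temperature almost all the probability goes to the top-scoring token, while at the high temperature the unlikely tokens get a real chance of being picked. That is where unexpected names can slip in.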

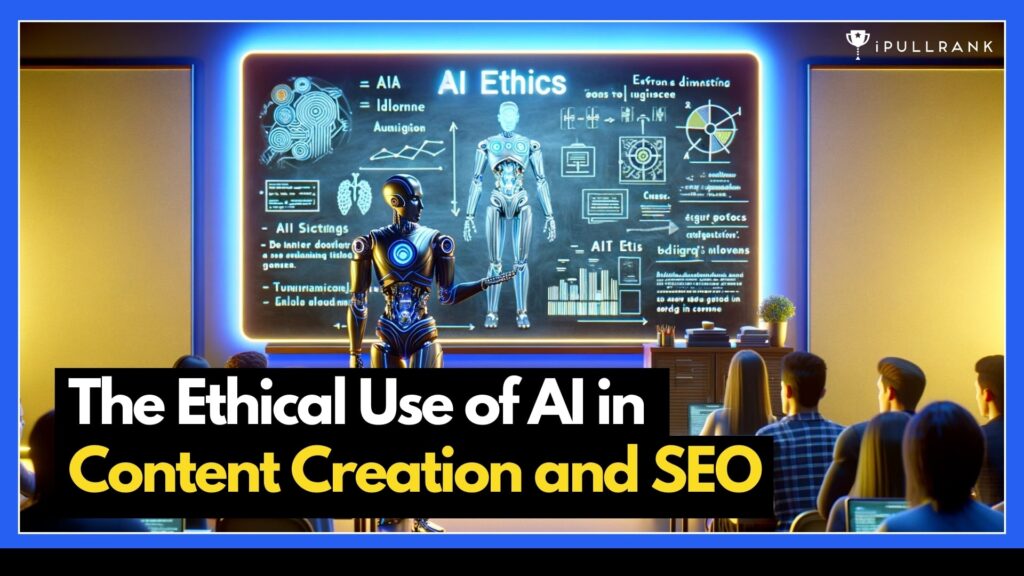

To avoid this, we use a technique called “NIP” (Not In Prompt). This technique spots any names that weren’t in the original question or request. For example, if the model talks about someone famous like “Audrey Hepburn” or mentions specific things that weren’t asked about, it gets flagged for review.
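The article does not describe how the NIP check is implemented, so the following is only a sketch of the idea. It assumes a naive regular expression (runs of two or more capitalized words) as a stand-in for a proper named-entity recognizer, and the function name `not_in_prompt` is hypothetical.

```python
import re

# Naive stand-in for a named-entity recognizer: two or more capitalized words in a row.
NAME_PATTERN = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)+\b")

def not_in_prompt(prompt, generated):
    """Return names found in the generated text that never appear in the prompt."""
    prompt_lower = prompt.lower()
    flagged = []
    for name in NAME_PATTERN.findall(generated):
        if name.lower() not in prompt_lower and name not in flagged:
            flagged.append(name)
    return flagged

prompt = "Write a product description for round acetate sunglasses."
generated = "These round acetate sunglasses channel the elegance of Audrey Hepburn."

flagged = not_in_prompt(prompt, generated)  # names to send for human review
```

A real pipeline would likely use an entity recognition model rather than a regex, but the rule stays the same: anything named in the output that was not named in the input goes to a human for review.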

The prompt is derived from our Knowledge Graph (KG). The KG not only contains data about each entity but also rules about their attributes. For example, we ensure consistent casing when mentioning materials, opting for “acetate” over “Acetate.”
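A casing rule like the “acetate” example could be applied as a simple post-processing step. The sketch below assumes the rules are available as a plain list of canonical spellings; the list and the function name are invented for illustration and do not reflect how the Knowledge Graph actually stores them.

```python
import re

# Hypothetical canonical spellings, as they might be exported from a knowledge graph.
CANONICAL_MATERIALS = ["acetate", "titanium", "stainless steel"]

def enforce_material_casing(text, canonical=CANONICAL_MATERIALS):
    """Rewrite every casing variant of a material to its canonical spelling."""
    for term in canonical:
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        text = pattern.sub(term, text)
    return text

fixed = enforce_material_casing("Frame made of Acetate with TITANIUM hinges.")
```

Note that this simple version also lowercases a material at the start of a sentence, so a production rule would need to handle that case.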

Mitigating Plagiarism and Derivative Work in AI-Generated Text and Images

AI systems are good at putting information together and recognizing patterns. But sometimes, they might copy content without giving credit or making it different enough. So, people who manage content need to have verification rules in place to ensure that what AI makes is original and unique. They can also use technology that quickly checks for plagiarism to stop the unintentional sharing of unoriginal things.
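One simple way to automate a first-pass originality check is to compare overlapping word sequences between a draft and a known source. The sketch below uses Jaccard similarity over three-word shingles. The threshold and sample texts are arbitrary assumptions, and dedicated plagiarism tools are considerably more sophisticated.

```python
def shingles(text, n=3):
    """Split text into a set of overlapping n-word sequences."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard_similarity(a, b, n=3):
    """Share of n-word sequences the two texts have in common (0.0 to 1.0)."""
    set_a, set_b = shingles(a, n), shingles(b, n)
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)

REVIEW_THRESHOLD = 0.5  # arbitrary cut-off chosen for this example

source = "hand poured soy candles with a warm cinnamon scent"
draft_copy = "hand poured soy candles with a warm vanilla scent"
draft_new = "our festive candle fills the room with notes of spice"

needs_review = jaccard_similarity(source, draft_copy) > REVIEW_THRESHOLD
```

Drafts that score above the threshold against any known source can be routed to an editor before publication.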

OpenAI is aware of this issue and is working on a way for creators to opt out of having their work used by its models to generate content. It isn’t yet clear exactly how this will work, but it shows they take the problem seriously. When companies ensure that AI is used ethically to create content, it helps keep their reputation intact and makes the online world more trustworthy for everyone.

Strategies to Identify and Prevent Bias in Generative AI Content

AI is a powerful tool that could help the world economy a lot, potentially adding trillions of dollars. It has the potential to make us more productive. But there’s a catch. We need to be smart about how we use Generative AI. We have to be careful about possible biases and risks.

Let’s simplify it. AI learns from stuff people make, like books or social media posts. But people can sometimes be unfair or not totally right. AI learns from that, so it might pick up those same unfair ideas without meaning to.

Here are some important steps:

- Understanding Users: Know who’s using the AI and how they use it. Figure out what they need and how much they’re involved.

- Teaching and Getting Better: Make sure the people using the AI know a lot and are really good at it. This helps them notice and fix any unfairness.

- Checking the Stuff: Decide what you want the AI to do. Find problems in what it learns and make plans to fix them. Also, make sure you can see if things are getting better over time.

AI as a Complement, Not a Replacement

Using AI in SEO and content marketing isn’t about replacing humans. It’s about making their work better. AI helps a team do more by handling lots of data, spotting patterns, and doing repetitive tasks quickly and accurately. It also helps find trends and makes content better.

But it’s still super important to have humans involved. They make sure the content fits the brand’s style and rules. We can’t rely on AI alone to write just like us. Instead, we teach and check the AI’s work to make it better.

When AI and people work together, it makes SEO and content marketing stronger. It’s not about getting rid of humans. It’s about making things better and moving forward.

How Generative AI Augments the Role of Marketing and SEO Specialists

Thinking of AI as a helpful friend rather than something scary brings lots of benefits for people working in SEO and content marketing.

Better content:

AI tools check content for mistakes and make it easier to read. They also suggest ways to improve sentences, words, and tone, making content better.

More types of content:

AI can create different kinds of content like articles, blogs, social media posts, and videos. This helps teams make content that people like in different ways. For example, they can quickly make infographics, podcasts, or short videos to go with their writing.

Personalized content:

Teams can use AI to see what users like and then make content just for them. For instance, in an online shop, AI can suggest products based on what a person bought before. This makes it more likely they’ll buy again.

Saving time:

AI does tasks that repeat, so the team can spend time on creative stuff and making smart plans.

Using AI in making content helps teams make better, more varied, and personal content on a big scale. It lets them focus on the smart, creative parts of making content and improves how they make plans. Working with AI helps make content that’s more effective and interesting for the people who see it.

A Responsible Approach to AI Opportunities

AI is a powerful tool for companies. However, with great power comes great responsibility. That is why taking a responsible approach is necessary. It means harnessing the technology for growth and efficiency and ensuring ethical use, transparency, and accountability.

Human factors matter and we need to ensure that a proper hybrid but symbiotic relationship between humans and machines is in place.

Together, let’s look at how you can use AI ethically to drive innovation and have a positive impact on your business.

Ethical AI Integration for Content Marketing and SEO Excellence

Let’s check out three ways we can use ethical AI in content marketing and SEO to make things better and do the right thing.

Make Content Fair:

We can use AI to find and fix unfair stuff in our content. For example, it can point out if our words are leaving out some people or if we’re accidentally saying things that aren’t fair.

Make Things Personal, but Private:

Instead of snooping on what people do online, AI can look at general info to give good recommendations without knowing who the person is. For instance, if someone’s shopped for shoes, the AI suggests shoes without knowing who bought them.
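As a sketch of what this could look like, the example below recommends items from aggregated, anonymous order data only. The order log and function name are invented for illustration. The key point is that no user IDs, names, or browsing histories are stored or needed.

```python
from collections import Counter

# Hypothetical anonymous order log: product categories bought together.
# No user IDs, names, or emails are kept.
ORDERS = [
    ["shoes", "socks"],
    ["shoes", "shoe polish"],
    ["shoes", "socks", "laces"],
    ["candles", "matches"],
]

def recommend(category, orders, top_n=2):
    """Suggest the items most often bought alongside a category."""
    counts = Counter()
    for order in orders:
        if category in order:
            counts.update(item for item in order if item != category)
    return [item for item, _ in counts.most_common(top_n)]

suggestions = recommend("shoes", ORDERS)
```

The recommendation depends only on what is in the current basket, not on who the shopper is.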

Check if the Info is Good:

We can use AI to check if the stuff we use in our content is right and trustworthy. It checks info from different places to make sure it’s true. For example, if there’s news, AI looks at lots of sources to see if it’s really true and reliable.

By incorporating these ethical AI practices, we can elevate our content marketing and SEO strategies to be more user-centric, reliable, and inclusive. This approach aligns with ethical principles, resulting in improved user experiences, higher search engine rankings, and ultimately, long-term success in the digital landscape.

WordLift's Role in Promoting Ethical AI Integration for SEO and Content Marketing Success

At WordLift, we prioritize responsible AI use in content generation, especially in light of Google’s recent emphasis on quality content.

Our Content Generation Tool is a team effort with experts in writing and SEO, made for different types of businesses. It uses your info and a special model to keep your brand’s style. You can also set rules to check if the content matches what you want.

This tool makes it easier to make lots of good content that fits your brand. It saves time and money while following fair AI rules. With WordLift, businesses can make content that people like and show what they stand for.
