Search engines have morphed and grown by leaps and bounds over the last twenty-five years. Google hardly resembles the standard 10-listing results page of yesteryear. The computer science that powers search technology and information retrieval is more sophisticated and smarter than ever. Voice search and mobile search have changed the way users find and consume information, and everyone – we mean everyone – is in on the content game.

As the engines change, so does SEO. Long gone are the days of simple keyword placement and link building. Don’t get us wrong – those principles are still the foundation of search, but where you could once optimize a title tag and description for a single keyword, you must now optimize your entire website for a universe of targeted keywords. Same principles, newer techniques.

Modern SEO takes the changing landscape into account and shifts the focus from one-off optimization efforts to a concerted practice that views your website the way search engines currently do. Check out our “You Don’t Know SEO” presentation to get a better understanding of the modern search algorithms that are indexing and retrieving information.

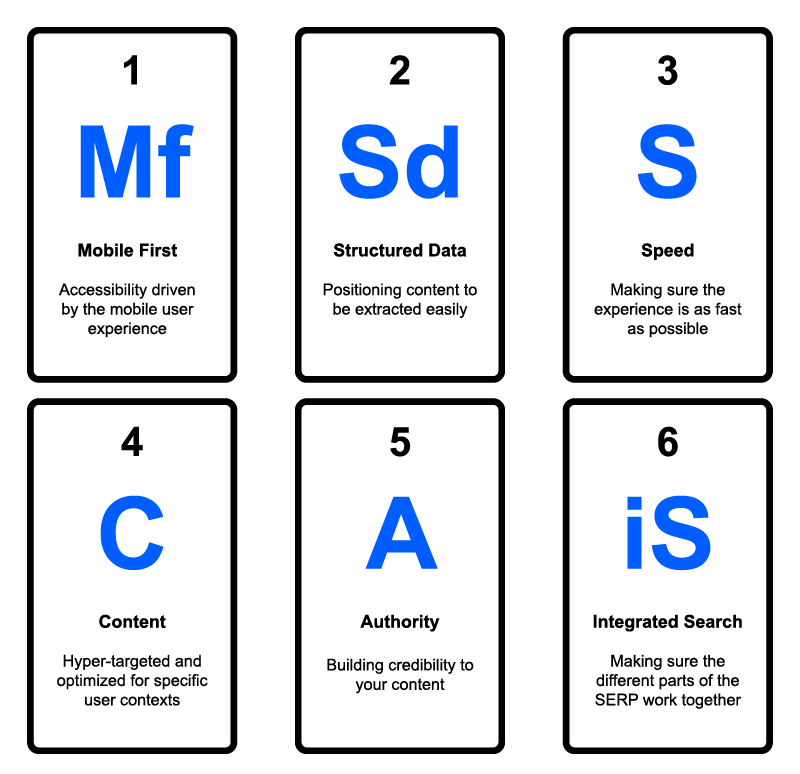

So what does this mean for the practitioner? How do SEOs keep up with the evolving technology and implement recommendations that help their pages rank? Today’s SEO rests on six elements that build on the foundation while keeping pace with the future.

1. MOBILE-FIRST

Back in the day, there was one device. Now there are hundreds, with twice as many display and screen sizes. Mobile-first indexing means that search engines now look primarily at the mobile experience to power the SERPs and influence rankings. Bridget Randolph’s great post “How Does Mobile-First Indexing Work and How Does It Impact SEO?” outlines what mobile-first is and how sites should optimize for a mobile index.

While mobile has been changing the SERPs since Mobilegeddon, mobile-first is more than just a preference for mobile sites. It is a full-fledged methodology and index for mobile pages: bots look at the mobile experience first and foremost and rank based on it. Does your client have a mobile site or a responsive site? No? With mobile-first indexing, it is paramount that they have one and that the mobile experience is optimized for search.

That can include:

- Ensuring your mobile site (if it lives on a separate domain) has high-quality content and informative structured data

- Optimizing metadata for the mobile experience. This means writing titles and meta descriptions not just for mobile crawlers but for mobile searchers.

- Properly verifying your mobile site in Search Console, implementing switchboard tags, and ensuring country/language mobile variants are annotated with hreflang.
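For sites that serve a separate mobile domain, the switchboard tags mentioned above are a pair of link annotations: the desktop page points to its mobile equivalent with `rel="alternate"` plus a media query, and the mobile page points back with `rel="canonical"`. The Python sketch below only generates those tag strings. The 640px breakpoint and the example.com URLs are placeholders, and the hreflang helper assumes you pass it variants of the same site type (mobile URLs for mobile pages, desktop URLs for desktop pages).

```python
"""Sketch: switchboard and hreflang tags for a site with separate mobile URLs."""

# Example breakpoint only; match it to the breakpoint your mobile site targets.
MOBILE_MEDIA = "only screen and (max-width: 640px)"


def desktop_head_tags(mobile_url: str) -> list[str]:
    """Tag for the desktop page's <head>: announce the mobile equivalent."""
    return [f'<link rel="alternate" media="{MOBILE_MEDIA}" href="{mobile_url}">']


def mobile_head_tags(desktop_url: str) -> list[str]:
    """Tag for the mobile page's <head>: canonicalize to the desktop page."""
    return [f'<link rel="canonical" href="{desktop_url}">']


def hreflang_tags(variants: dict[str, str]) -> list[str]:
    """One alternate link per language/country variant, e.g. {"en-us": url}.

    Pass mobile URLs when generating tags for mobile pages and desktop URLs
    for desktop pages, so each version points to its own counterparts.
    """
    return [
        f'<link rel="alternate" hreflang="{code}" href="{url}">'
        for code, url in sorted(variants.items())
    ]


if __name__ == "__main__":
    desktop = "https://www.example.com/shoes"  # hypothetical URLs
    mobile = "https://m.example.com/shoes"
    for tag in desktop_head_tags(mobile) + mobile_head_tags(desktop):
        print(tag)
```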

Voice search is also pushing engines toward a more contextual understanding of language. Google’s indices have drawn on natural language processing and voice search for a while. For modern SEOs, this means keyword research has to be more than an export: it needs to reflect how people actually speak.

2. STRUCTURED DATA

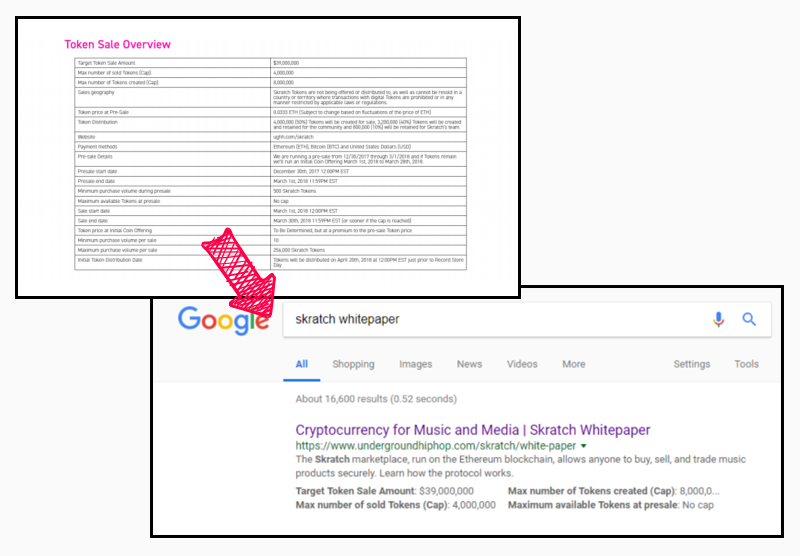

Structured data is more than just Schema.org. Search engines appreciate good direction, and the more you tell them about your content, the better. In tests we’ve performed at iPullRank, we’ve seen markup displayed in the SERPs that was populated from old-fashioned HTML tables.

The key here is that the relationships between the data points are clear to Google. For modern SEOs, this means embracing every semantic structural opportunity available and giving Google the context it needs to display that data well.
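As a minimal illustration of making relationships explicit, the sketch below turns a two-column spec table (label, value) into schema.org JSON-LD, stating each label/value pair as a `PropertyValue` under a `Product`. The product name and rows are hypothetical, and valid markup is no guarantee that Google will display it.

```python
import json


def table_to_jsonld(product_name: str, rows: list[tuple[str, str]]) -> str:
    """Turn a two-column spec table (label, value) into schema.org JSON-LD.

    Each row becomes a PropertyValue, so the label/value relationship that a
    table only implies visually is stated explicitly.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product_name,
        "additionalProperty": [
            {"@type": "PropertyValue", "name": label, "value": value}
            for label, value in rows
        ],
    }
    # Wrap in the script element that carries JSON-LD in an HTML page.
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


if __name__ == "__main__":
    # Hypothetical product and spec rows.
    print(table_to_jsonld("Trail Shoe", [("Weight", "280 g"), ("Drop", "8 mm")]))
```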

3. SPEED

Google clearly has high expectations for speed (above-the-fold content should load within one second!), and it uses speed as a measure in its scoring function for a page. In our experience, larger sites are crawled more when they are faster.

With site speed as a key metric for crawling and rendering, what is a site to do? The Critical Rendering Path is the first place to look, and SEOs should be asking: what is the minimum a page has to do or load in order to render?

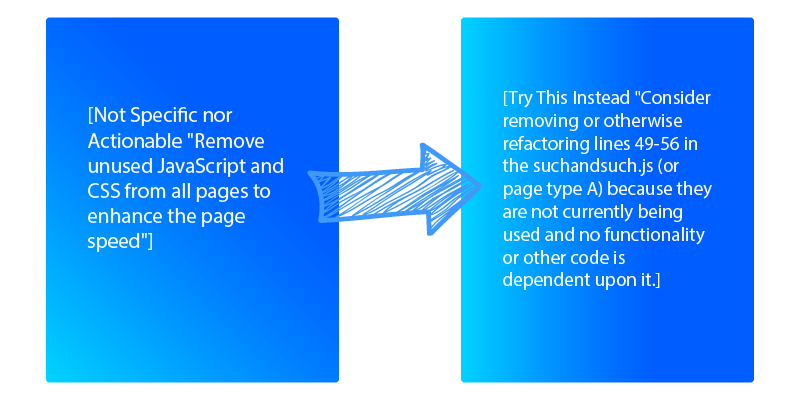
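One quick way to start answering that question is to list the resources in the `<head>` that commonly block first render. The sketch below uses Python’s built-in `html.parser` with deliberately simplified rules (stylesheets block unless `media="print"`; external scripts block unless they are `async`, `defer` or `type="module"`). Treat it as a first pass, not a substitute for measuring the page in a real browser.

```python
from html.parser import HTMLParser


class RenderBlockingFinder(HTMLParser):
    """Collect resources in <head> that typically block first render.

    Simplified heuristics (assumptions, not a complete browser model):
      * <link rel="stylesheet"> blocks rendering unless media="print".
      * <script src=...> blocks parsing unless it is async, defer,
        or type="module" (module scripts are deferred by default).
    """

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # boolean attributes such as async map to None
        if tag == "head":
            self.in_head = True
        elif tag == "body":
            self.in_head = False
        if not self.in_head:
            return
        if tag == "link" and (a.get("rel") or "").lower() == "stylesheet":
            if (a.get("media") or "all").lower() != "print":
                self.blocking.append(f"css: {a.get('href')}")
        elif tag == "script" and a.get("src"):
            deferred = "async" in a or "defer" in a or a.get("type") == "module"
            if not deferred:
                self.blocking.append(f"js: {a.get('src')}")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking(page: str) -> list[str]:
    """Return render-blocking head resources found in an HTML string."""
    finder = RenderBlockingFinder()
    finder.feed(page)
    return finder.blocking
```

Each item it reports is a candidate for inlining critical CSS, adding `defer`/`async`, or moving the resource out of the `<head>`, subject to what the page actually needs for its first paint.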

However, when improving site speed, recommendations need to be both actionable and optimal. Major database changes can consume significant time and resources, and modern SEOs should factor in that difficulty.

This means:


- Segmenting crawls: Different page types are governed by different mechanisms, so segmenting your crawls can help you assess the problems with a particular page type and get those fixes implemented.

- Segmenting search opportunity: Look at your keyword portfolio for the greatest opportunity and work down from the largest benefit.

- Be specific: Give client teams context on the what and why of code changes so implementation is straightforward and easy to understand.
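Segmenting a crawl can be as simple as bucketing URLs by template before looking at speed. The sketch below assumes a crawl export of (path, load time in ms) rows and hypothetical URL prefixes for product, category and blog pages; it reports the URL count and median load time per segment so a slow template is not hidden inside a site-wide average.

```python
import re
from collections import defaultdict
from statistics import median

# Hypothetical URL patterns for page types; replace with your site's own.
PAGE_TYPES = [
    ("product", re.compile(r"^/p/")),
    ("category", re.compile(r"^/c/")),
    ("blog", re.compile(r"^/blog/")),
]


def page_type(path: str) -> str:
    """Return the first matching page type, or "other"."""
    for name, pattern in PAGE_TYPES:
        if pattern.match(path):
            return name
    return "other"


def segment_crawl(rows: list[tuple[str, float]]) -> dict[str, dict]:
    """Group (path, load_time_ms) crawl rows by page type.

    Returns the URL count and median load time for each segment.
    """
    groups: dict[str, list[float]] = defaultdict(list)
    for path, load_ms in rows:
        groups[page_type(path)].append(load_ms)
    return {
        name: {"urls": len(times), "median_ms": median(times)}
        for name, times in sorted(groups.items())
    }
```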

4. CONTENT

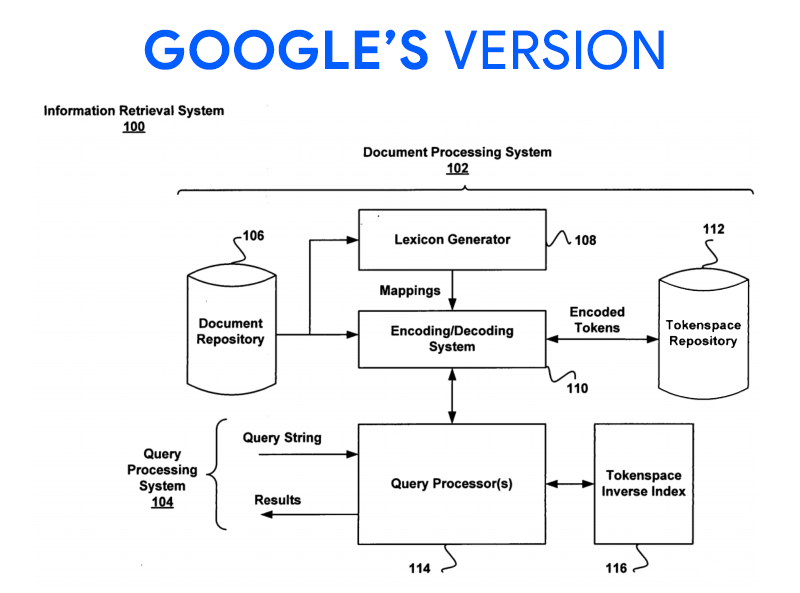

While the underlying algorithms that retrieve content are more sophisticated than ever before, they still rely on content (words, keywords, phrases, topics and themes) to classify and serve users the best and most accurate information. That’s a relief. The biggest difference between content today and content in the past is that search algorithms have a greater understanding of semantics (the meaning of words and the relationships between them). Instead of parsing single keywords from a searcher’s query and matching them exactly with the keywords used on-page, engines look at phrase length, co-occurring keywords, synonyms and close variants, page segmentation and phrase-based indexing.

Some factors of new-age content optimization include:

- Keyword Usage: Keywords are still the first line of defense in ranking algorithms. The concept of keyword usage has expanded: individual words are now indexed as tokens, and sequences of tokens (n-grams) are indexed as phrases, to discover patterns and correlations.

- Entity Salience: Where keywords and keyword phrases were once treated as a unit, Google now breaks queries down into entities. Queries are assigned entity identifiers that help Google organize keywords into topical groups. Those groups provide context to the engines, allowing them to infer the relationships between keywords within a group.

- Example: If you search for George W. Bush, Google will identify the query as an entity of the type U.S. President.

- Pro Tip from Bill Slawski: Google Trends categorizes search terms by entity, so if you are curious which entity your term is viewed as, search for it in Google Trends. FYI: Kim Kardashian is viewed as an “American Television Personality” entity.

- Synonyms & Close Variants: Algorithms have been looking at keyword relationships and variations since as early as 2001, and this was explicitly confirmed in 2013 with Hummingbird. This includes synonyms and close variants of keywords, which can signal to engines what a page is about topically. Including keyword variants helps search engines match your content to a number of related queries rather than a single phrase.

- Semantic Distance & Term Relationships: The closer two terms appear to each other, the more directly that proximity can reflect their relationship, which helps engines understand the broader meaning and semantic intent of a document.

- TF-IDF: Term Frequency – Inverse Document Frequency is a method of assigning importance to a term based on how often it appears in a document relative to how many documents in a collection contain it. This turns the idea of keyword density on its head.

- The idea is that common words (the, and, or) appear in almost every document and are thus of low importance to the topic, while target keywords (and their variants) appear in fewer documents and are likely more important. Ryte’s Content Success tool measures a page’s TF-IDF. Use our keyword research guide to learn how to incorporate TF-IDF.

- Phrase-Based Indexing & Co-Occurrence: Similar to TF-IDF, phrase-based indexing surveys a group of documents on a topic and looks for common, or co-occurring, keywords that appear in relation to that topic. Those phrases will typically be similar across a number of documents and help search engines build a repository of phrases related to any given topic.
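To make the n-gram and TF-IDF ideas above concrete, here is a small Python sketch. It uses a naive regex tokenizer and one common TF-IDF variant (length-normalized term frequency multiplied by log(N / document frequency)); it illustrates the technique and is not how Google or Ryte compute their scores.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately simple tokenizer."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ngrams(tokens: list[str], n: int) -> list[str]:
    """Contiguous n-token sequences, joined with spaces."""
    return [" ".join(tokens[i : i + n]) for i in range(len(tokens) - n + 1)]


def tf_idf(docs: list[str], n: int = 1) -> list[dict[str, float]]:
    """Score each n-gram in each document as (count / doc length) * log(N / df).

    N is the number of documents and df is how many documents contain the
    n-gram. This is one common variant; search engines may weight terms
    very differently.
    """
    doc_terms = [Counter(ngrams(tokenize(d), n)) for d in docs]
    # Iterating a Counter yields each distinct term once, so this counts documents.
    df = Counter(term for counts in doc_terms for term in counts)
    total = len(docs)
    scores = []
    for counts in doc_terms:
        length = sum(counts.values()) or 1
        scores.append({
            term: (count / length) * math.log(total / df[term])
            for term, count in counts.items()
        })
    return scores


if __name__ == "__main__":
    docs = [
        "the trail shoe is light",
        "the road shoe is cushioned",
        "the hiking boot is sturdy",
    ]
    for doc, scores in zip(docs, tf_idf(docs)):
        print(doc, "->", max(scores, key=scores.get))
```

Words such as “the” that appear in every document score exactly zero, which is the inversion of keyword density described above. Passing `n=2` scores two-word phrases instead of single words.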

Check out Cyrus Shepard’s stellar post on 7 Advanced SEO Techniques for On-Page SEO to learn more about these techniques and how to use them in on-page optimization.
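The co-occurrence and semantic-distance ideas from the content list can be approximated in a few lines. This sketch counts, across the documents that mention a topic phrase, how many also contain each other term, and measures the smallest token gap between two terms in a text. The stopword list and tokenizer are simplistic assumptions; real phrase-based indexing works on phrases and far larger document sets.

```python
import re
from collections import Counter
from typing import Optional

# Tiny illustrative stopword list; a real one would be much longer.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "is", "in", "for", "on", "with"}


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def co_occurring_terms(docs: list[str], topic: str, top: int = 5) -> list[tuple[str, int]]:
    """For documents containing every word of `topic`, count how many
    documents also contain each other non-stopword term."""
    topic_tokens = set(tokenize(topic))
    counts = Counter()
    for doc in docs:
        tokens = set(tokenize(doc))
        if topic_tokens <= tokens:
            counts.update(tokens - topic_tokens - STOPWORDS)
    return counts.most_common(top)


def min_distance(text: str, a: str, b: str) -> Optional[int]:
    """Smallest gap in token positions between terms a and b, or None if either is absent."""
    tokens = tokenize(text)
    pos_a = [i for i, t in enumerate(tokens) if t == a]
    pos_b = [i for i, t in enumerate(tokens) if t == b]
    if not pos_a or not pos_b:
        return None
    return min(abs(i - j) for i in pos_a for j in pos_b)
```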

5. AUTHORITY

Link building is the modus operandi for building authority; however, in modern SEO it can NOT and will NOT work in a vacuum. That said, PageRank is still the measure, and as modern SEOs we need to understand this metric better to fully capitalize on its impact on link building.

Authority building has historically relied on homespun metrics such as Page Authority, Domain Authority, Citation Rank and Cemper Trust, all of which build on PageRank. While these metrics have been great directional valuations of a page’s authority, we need to be clear that they are only approximations.

Though PageRank may continue to be nebulous, here is what we do know about building authority in the modern age:

- Google generates quotes from the linking page and evaluates that content to determine parity and relevance on both sides. This means a link from a site that is highly relevant to your topic is worth much more than a larger quantity of links from irrelevant or lower-value pages.

- Internal linking is one of the most valuable things you can do, especially on larger sites. Internal linking leads to more crawl activity and improves the flow of link equity throughout a site.

SEOs can take advantage of the gap between third-party approximations and actual PageRank by computing PageRank for their own site’s pages and using it to improve internal linking between those pages.
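Computing PageRank for your own site only needs the internal link graph from a crawl. The sketch below is the classic power-iteration formulation with a 0.85 damping factor, redistributing the score of pages without outlinks evenly across all pages. Google’s production variant is not public, so treat the scores as relative guidance for internal linking, not as Google’s values; the URLs are hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration over an internal link graph.

    `links` maps each URL to the URLs it links to. Pages with no outlinks
    (dangling pages) spread their score evenly over all pages. Scores sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        # Teleport share plus the evenly spread dangling mass.
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
        rank = new
    return rank


if __name__ == "__main__":
    site = {
        "/": ["/shoes", "/blog"],
        "/shoes": ["/", "/shoes/trail"],
        "/blog": ["/"],
        "/shoes/trail": [],
    }
    for url, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
        print(f"{score:.3f}  {url}")
```

Pages that score low relative to their business value are natural candidates for additional internal links from high-scoring pages.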

6. INTEGRATED SEARCH

While Google does NOT use Google Analytics data (it cannot, due to legal and privacy implications), it does use evaluation measures to determine the success of the SERPs. Though often confused, these evaluation measures are not the same as your site’s bounce rate or time on page.

Information retrieval systems like Google do factor in these metrics based on how users interact with the results page. Some examples of these measures include:

- Session Abandonment Rate

- CTR

- Session Success Rate

- Zero Result Rate

This information is primarily pulled from query and click logs that Google stores, with tons of data about what happened during a session and whether it was successful. While Google has said that query logs are notoriously noisy and full of information it does not use, the Time Based Ranking Patent shows that it does in fact have the capability to use them. Rand Fishkin has run multiple tests that show CTR’s impact on the SERPs.
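The evaluation measures listed above can be computed from a session log. The sketch below uses hypothetical definitions, since Google’s exact formulas are not public: zero result rate is the share of queries with no results, CTR is the share of non-empty result pages that got at least one click, session abandonment rate is the share of sessions with no clicks, and session success rate is the share of sessions with at least one click whose dwell time reaches a threshold (30 seconds here, an arbitrary choice). The `Query` record is also hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Query:
    """One query in a session (hypothetical log record)."""
    results: int  # number of results shown
    dwell_seconds: list[float] = field(default_factory=list)  # one entry per click


def _ratio(numerator: int, denominator: int) -> float:
    """Safe division: 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0


def evaluation_measures(sessions: list[list[Query]],
                        success_dwell: float = 30.0) -> dict[str, float]:
    """Compute SERP-level evaluation measures under the definitions above."""
    queries = [q for session in sessions for q in session]
    served = [q for q in queries if q.results > 0]
    return {
        "zero_result_rate": _ratio(sum(q.results == 0 for q in queries), len(queries)),
        "ctr": _ratio(sum(bool(q.dwell_seconds) for q in served), len(served)),
        "session_abandonment_rate": _ratio(
            sum(not any(q.dwell_seconds for q in s) for s in sessions), len(sessions)),
        "session_success_rate": _ratio(
            sum(any(d >= success_dwell for q in s for d in q.dwell_seconds)
                for s in sessions),
            len(sessions)),
    }
```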

Additionally, with Google’s move toward machine learning, Nick Frost has said that part of the training models for search learning is whether a person clicks and stays on a page. While it is not confirmed whether these training models affect the SERPs, what this all means is that SEOs and webmasters have to look at the experience and success of a page and use that information directionally for the pages they want to rank.

That includes:


- Writing compelling meta descriptions to entice and improve CTR

- Leveraging data from Paid Search, Analytics and Competitors to get a full picture of what success looks like

There’s no doubt that search has changed vastly, and as search technology continues to grow, SEOs will need to evolve to keep up with the SERPs. While the foundations of keyword research and content understanding are the same, the methods search engines use to index, qualify and serve results are different. Modern SEOs need to understand the advanced science behind search so they can continue to optimize and rank content for today’s search landscape, not yesterday’s.

