When I recently wrote a blog that walked through the process of performing a query fan-out and building a content plan, I was actually surprised by how many reputable sources were cited by LLMs and on the Google SERPs during my research.

I wrote about how a fictional financial firm could strategize content for queries relating to proper retirement saving (very much a “Your Money or Your Life” topic) and many results cited universities, government organizations like the IRS and the Department of Labor, and long-standing financial institutions like Fidelity and Charles Schwab.

The LLM results for this important YMYL topic were actually giving me information that I didn’t immediately discard like I would have when AI models first appeared. But how often does that actually happen these days? Was this a rare case?

And sure, Google has made some recent edits to its Search Quality Rater Guidelines (SQRG) to meet the needs of AI Search today, but are they helping? And is it enough?

This blog will discuss how AI Search is impacting Google’s search guidelines and look at some YMYL AI searches to see how their citations stack up.

AI Impacts on Google’s Search Quality Rater Guidelines

Think of Google’s SQRG as sort of the blueprint Google uses to train its algorithms. Around 16,000 humans rate and classify content based on its quality and value. And now that various LLMs, AI Overviews, and AI Mode are being used for searches, we need to understand their impacts on how Google defines quality.

1. The Expansion of YMYL

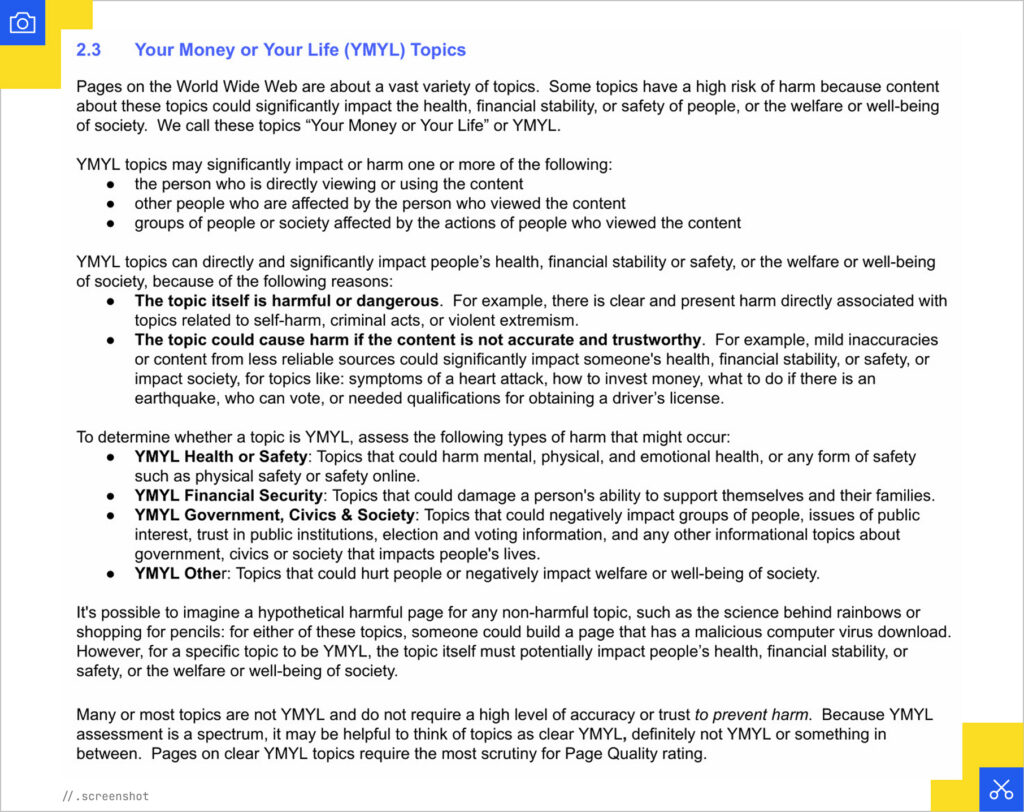

Your Money or Your Life topics carry a risk of harm to a person’s health, financial stability, or safety. In the latest SQRG updates in January and September 2025, Google broadened the scope of YMYL:

- Government, Civics and Society: This category now includes explicit mention of election and voting information, as well as content that impacts trust in public institutions.

- Health or Safety: Google clarifies that while any page can be hypothetically harmful, YMYL status is reserved for topics that could “potentially impact people’s health, financial stability, or safety, or the welfare or well-being of society.”

2. The Rise of Scaled Content Abuse

With AI making it easy to churn out thousands of pages of content, the January 2025 update introduced a guideline that focused on spammy scaled content to better align with Google Search Web Spam Policies.

Google’s guidelines now explicitly state that the lowest quality rating applies if content is:

- Copied or paraphrased from different sites, or AI-generated in a “low-effort way”

- Lacking originality or added value compared to the other existing pages

- Essentially filler created at scale meant to inflate a page’s length without adding substance, regardless of whether a human or AI created it

If a chatbot could have written your article in 10 seconds without human oversight, Google’s raters are going to mark it as low quality.

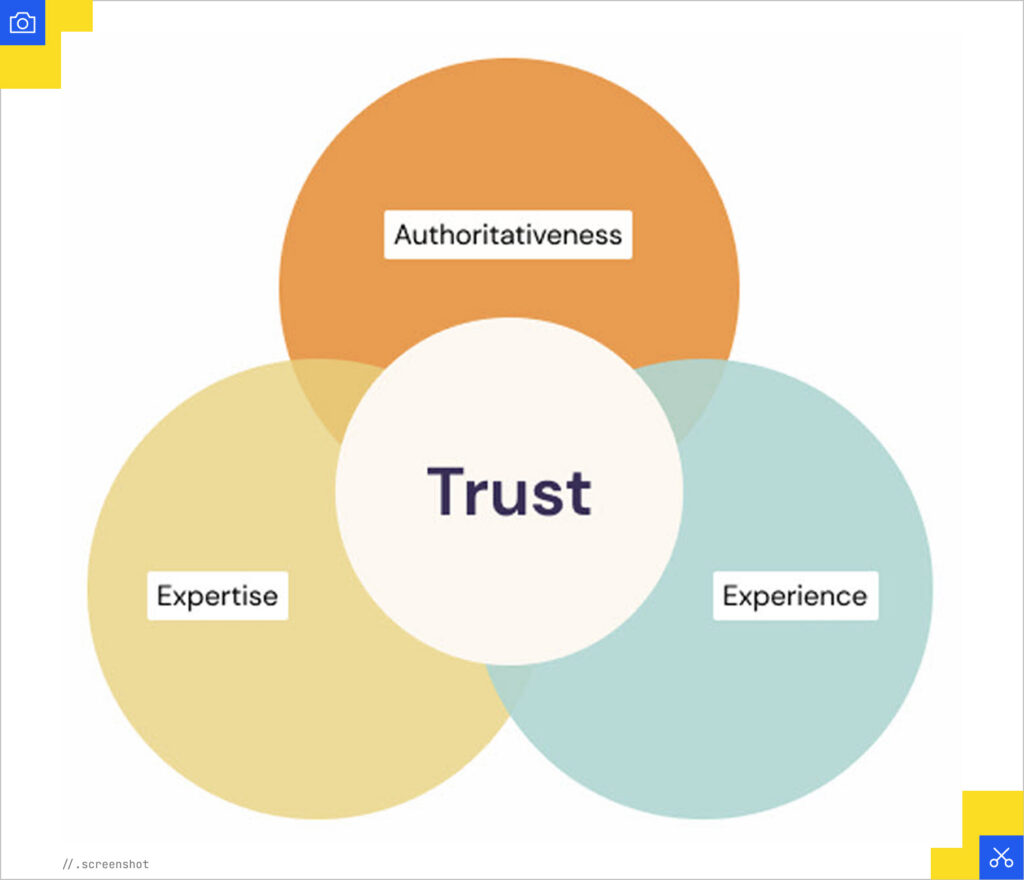

3. E-E-A-T: "Experience" is the AI-Killer

In a slop-infested web, that first “E” in E-E-A-T (Experience) can become your most valuable asset. AI can summarize expertise, but it cannot experience things for itself.

Google’s systems prioritize content that proves real-world involvement. They want to see first-hand life experience. To align with the guidelines, your content should:

- Use First-Person Insights: Use phrases like “In our tests,” or “Our survey found that…”

- Include Evidence of Effort: Original images, unique data sets, and case studies are still essential to distinguish your business.

- Strengthen Author Entities: Ensure your authors have visible credentials and a digital footprint (LinkedIn, professional bios) that Google’s AI can verify.

This is a very important point that we continue to drive home with Relevance Engineering by focusing on proprietary data, credible research, and high-quality content.

4. Optimizing for Needs Met

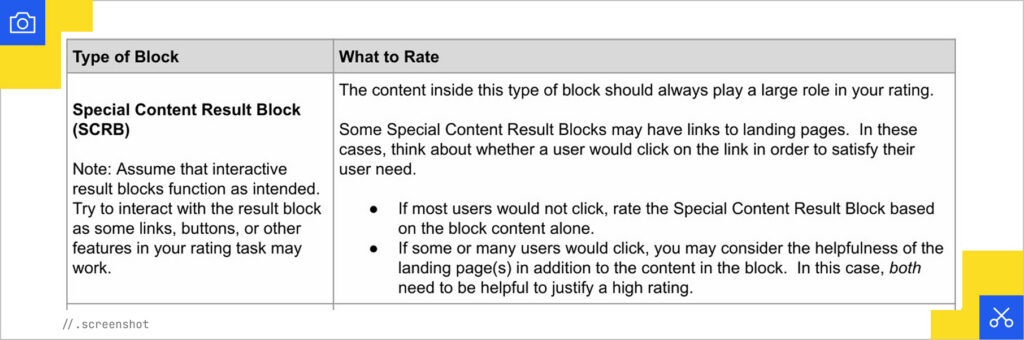

The January 2025 update added new guidance on “assessing minor interpretations and intents for Needs Met ratings and added illustrative examples.”

It’s not as groundbreaking as the other updates, but I feel like this was a missed opportunity to include some specific language referring to AI Overviews or AI Mode. I think they fit into the category of Special Content Result Block (SCRB) pretty well, but maybe Google is saving that for a later update once they’ve been in use for several years (or once they finally take the leap and make AI Mode the default).

However, Google still refers to the internet as the “World Wide Web” in the guidelines, so they’re obviously not fully up to date quite yet.

ChatGPT Health and Finance

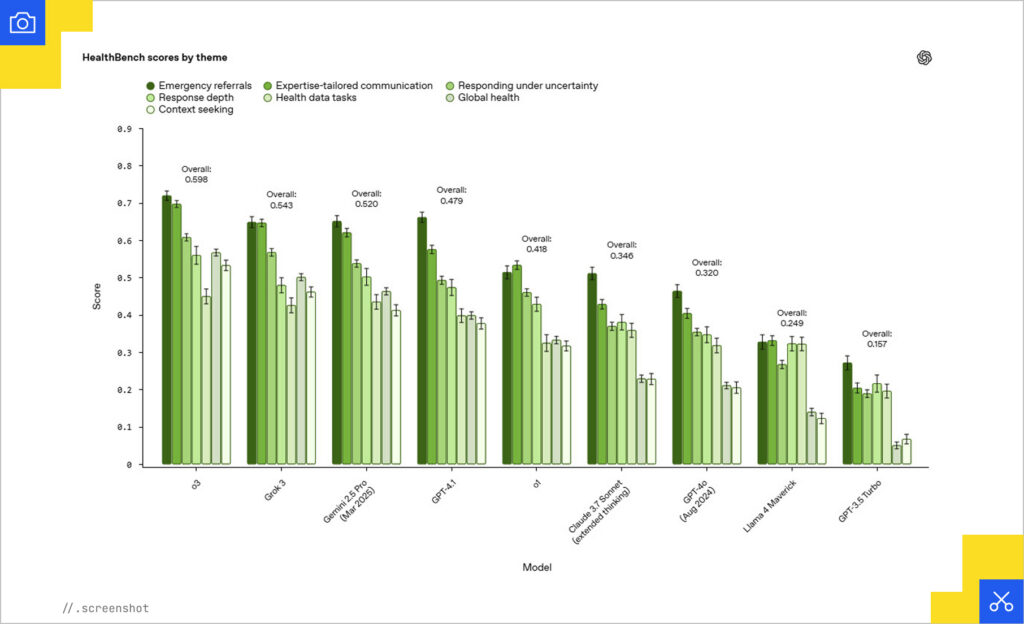

One of the more concerning product releases of the past year has to be OpenAI’s HealthBench. It’s a benchmark that was created to measure the capabilities of AI systems for health-related queries. As OpenAI writes, “Built in partnership with 262 physicians who have practiced in 60 countries, HealthBench includes 5,000 realistic health conversations, each with a custom physician-created rubric to grade model responses.”

They claim that their models improved by 28% thanks to HealthBench and outperformed other models such as Claude 3.7 Sonnet and Gemini 2.5 Pro.

To put it bluntly, they could’ve trained their models with 5 million health conversations and I still wouldn’t trust it for health advice. AI is still just predicting text. It’s not understanding the full context, wider health issues and symptoms, reactions to certain treatments and, of course, how incredibly personalized and varied healthcare can be for each person.

In addition to trying to improve its health knowledge, OpenAI has also been focused on financial benchmarking. The company says they are “accelerating financial analysis, generating executive-ready summaries, automating routine documentation, and brainstorming strategic financial plans—all while considering compliance and accuracy standards.”

I couldn’t imagine revenue forecasting or budget planning with an AI tool for many of the same reasons I listed above. It’s very specific to each company. When budgeting, you have to consider promotions and raises, future employees, training and development, ad spend, equipment purchasing, events, etc. Entering in and calculating all of that is manual, so I don’t see much time savings with LLMs.

Who knows what can happen in the future, though.

YMYL in AI Search

You probably know by now how much I love screenshots, so let’s do some AI Search querying for serious, high-risk topics and see what kind of sources are being cited these days (and which model does the best job). I chose three queries in the categories of finance, health, and safety.

Finance YMYL Query: Choosing the Right Life Insurance

For my first search, I queried “how to choose the right life insurance.”

The AI Overview didn’t cite any sources, but the AI Mode result did, focusing on financial groups like the Western and Southern Financial Group, Farm Bureau Financial Services, and Stolly Insurance Group, as well as the nonprofits National Association of Insurance Commissioners and the Insurance Information Institute.

We know at this point that ChatGPT is allergic to citing sources unless you specifically prompt it to, and even then, it’s hit or miss on whether the source is a real one or the information is accurate. But this time, when I asked it to cite sources, its links to the Institute of Financial Wellness, Forbes, and the Louisiana Department of Insurance were legit and led to helpful articles – a vast improvement from when ChatGPT first launched and cited broken links, fake studies, or nothing at all.

Gemini cited:

- New York Life Insurance

- Western and Southern Financial Group

- Trusted Senior Specialists

- Forbes

- NerdWallet

- Mutual of Omaha

- Experian

I noticed a difference right away in that Gemini didn’t cite any nonprofit organizations, just mostly insurance companies (which can’t always be trusted as unbiased). I found this interesting since, you know, Gemini is owned by the same folks who own AI Mode. One would think they’d show similar results.

Perplexity only cited NerdWallet and a few insurance companies, one of which I had never even heard of before (Thrivent).

The Winner: If I had to choose a winner here, I would probably go with AI Mode for the most unbiased and helpful citations (like the two nonprofits it cited).

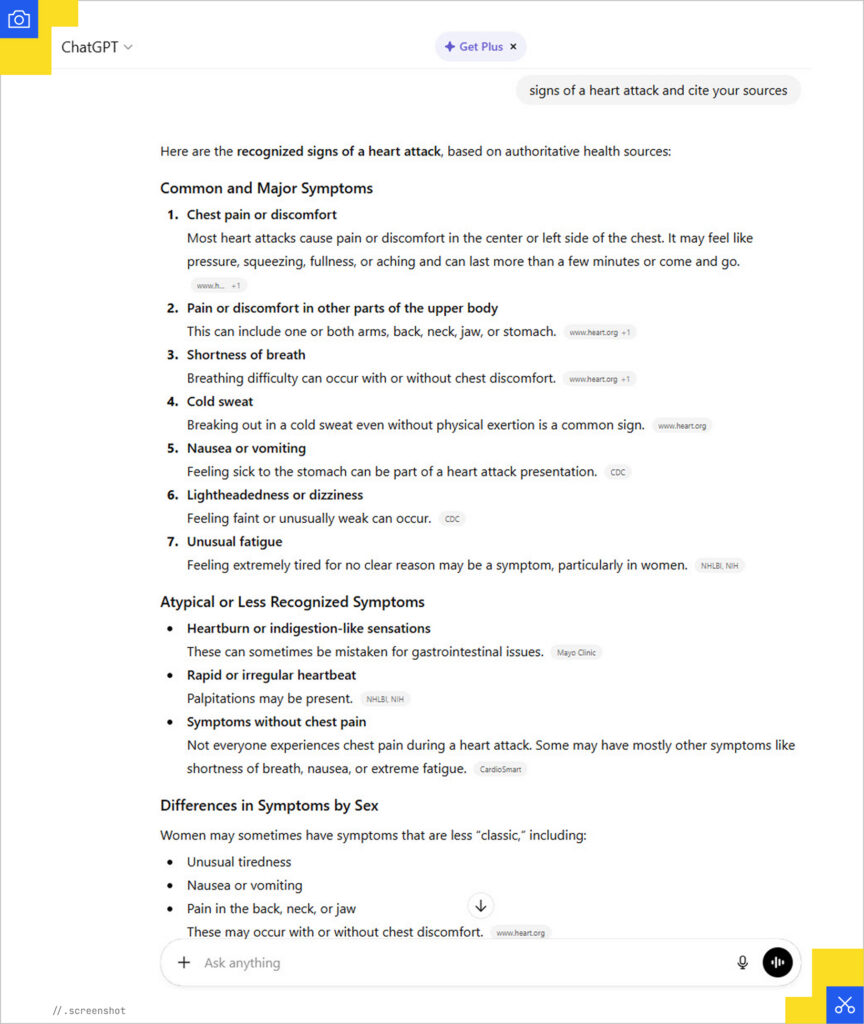

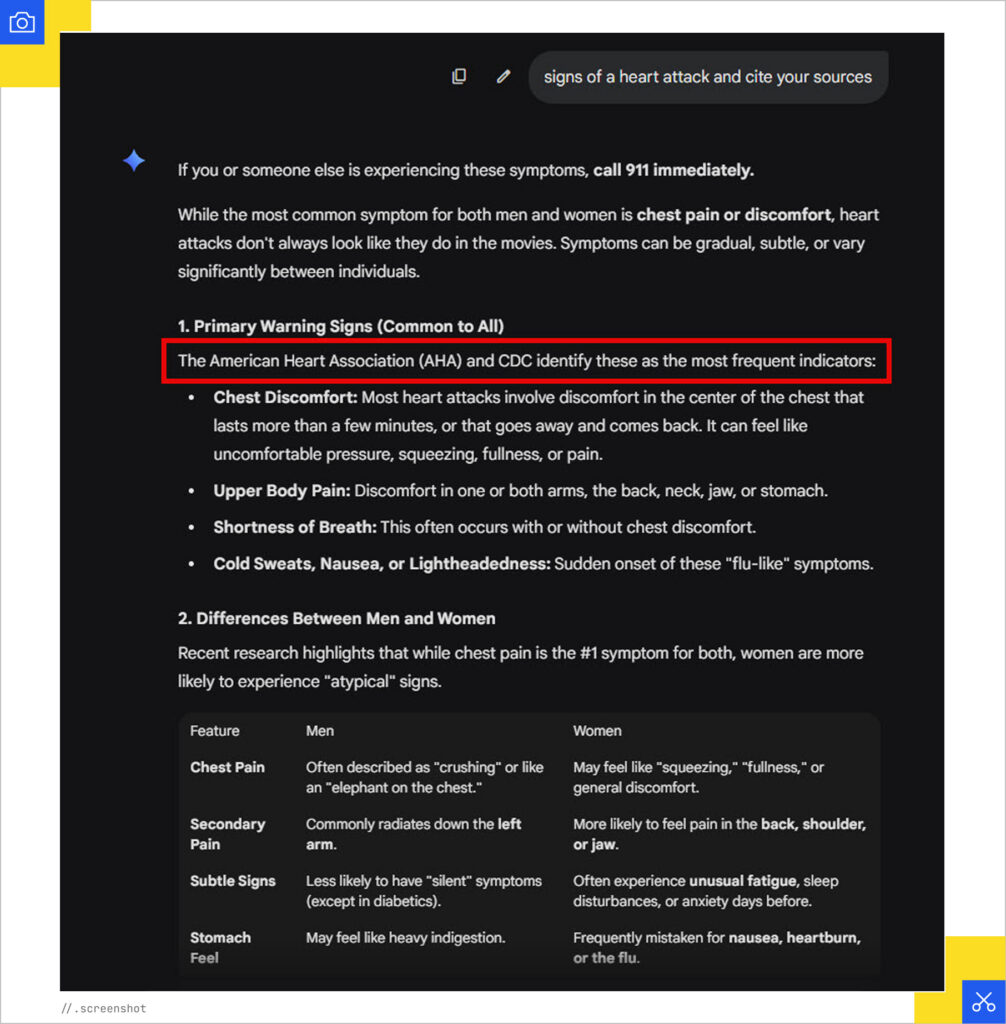

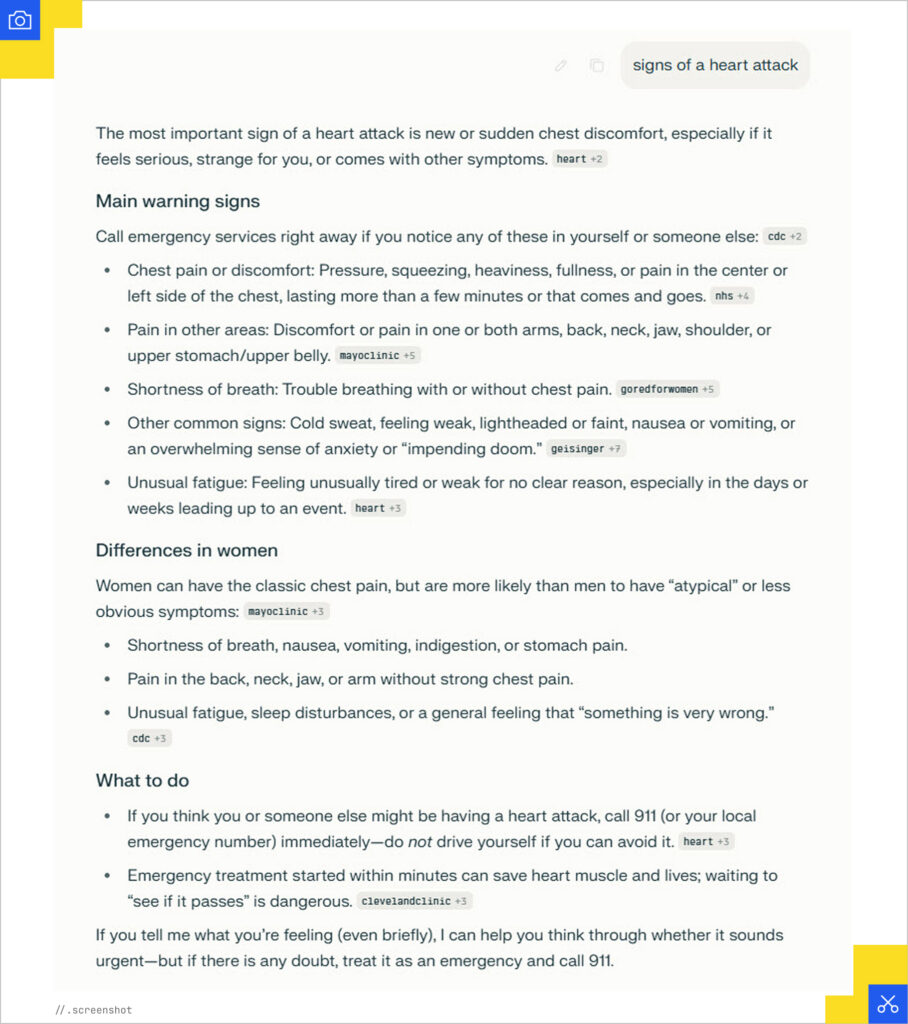

Health YMYL Query: Signs of a Heart Attack

My next query was “signs of a heart attack.”

I did not receive an AI Overview when I searched for this, but Google gave a simple bullet list of symptoms from the Mayo Clinic in a Featured Snippet.

AI Mode cited:

- Mayo Clinic

- American Heart Association

- Geisinger Health (a clinic)

- Dignity Health (a clinic)

- Houston Methodist (a clinic/academic institute)

I think this is another great selection of trustworthy sources.

ChatGPT cited:

- American Heart Association

- The CDC

- National Heart, Lung, and Blood Institute

- Mayo Clinic

- CardioSmart (American College of Cardiology)

But, again, I had to specifically tell it to cite its sources. I don’t understand why I still need to do that when every other model cites them openly.

For some reason, Gemini did not cite any sources for this query. I used my ChatGPT method and told it to cite its sources and it still provided no links, but it mentioned in the text that the American Heart Association and the CDC were referenced. I would love to understand the reason behind that because I can’t figure it out.

Perplexity cited:

- National Heart Association

- CDC

- National Health Service

- Mayo Clinic

- Geisinger Health

- Cleveland Clinic

No complaints here. I think these are great sources to cite.

The Winner: I think this one is a 3-way tie between AI Mode, ChatGPT (even though I had to prompt it to cite sources) and Perplexity for citing great, legit sources. I’m still mad at Gemini for not citing anything until I asked, and then only citing two with no links to anything for additional research.
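If you want to repeat this kind of comparison on your own queries, a quick tally of which sources show up on more than one platform can help. The snippet below is only a rough sketch: the lists are transcribed from my results above, the function name `shared_sources` is made up for illustration, and I've normalized “The CDC” to “CDC” so the names match. Gemini is left out because it named sources in its text but gave no links.

```python
from collections import Counter

# Linked citations for "signs of a heart attack", transcribed from the
# lists above. "The CDC" is normalized to "CDC" so names match across lists.
citations = {
    "AI Mode": [
        "Mayo Clinic", "American Heart Association", "Geisinger Health",
        "Dignity Health", "Houston Methodist",
    ],
    "ChatGPT": [
        "American Heart Association", "CDC",
        "National Heart, Lung, and Blood Institute", "Mayo Clinic",
        "CardioSmart",
    ],
    "Perplexity": [
        "National Heart Association", "CDC", "National Health Service",
        "Mayo Clinic", "Geisinger Health", "Cleveland Clinic",
    ],
}


def shared_sources(citations, min_platforms=2):
    """Return sources cited by at least `min_platforms` platforms."""
    # set() guards against one platform listing the same source twice.
    counts = Counter(
        source for sources in citations.values() for source in set(sources)
    )
    return {source: n for source, n in counts.items() if n >= min_platforms}


overlap = shared_sources(citations)

# Most widely cited first, then alphabetical.
for source, n in sorted(overlap.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{source}: cited by {n} of {len(citations)} platforms")
```

With these lists, Mayo Clinic is the only source all three platforms linked to, which matches my impression that the models are converging on a small set of well-known medical authorities.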

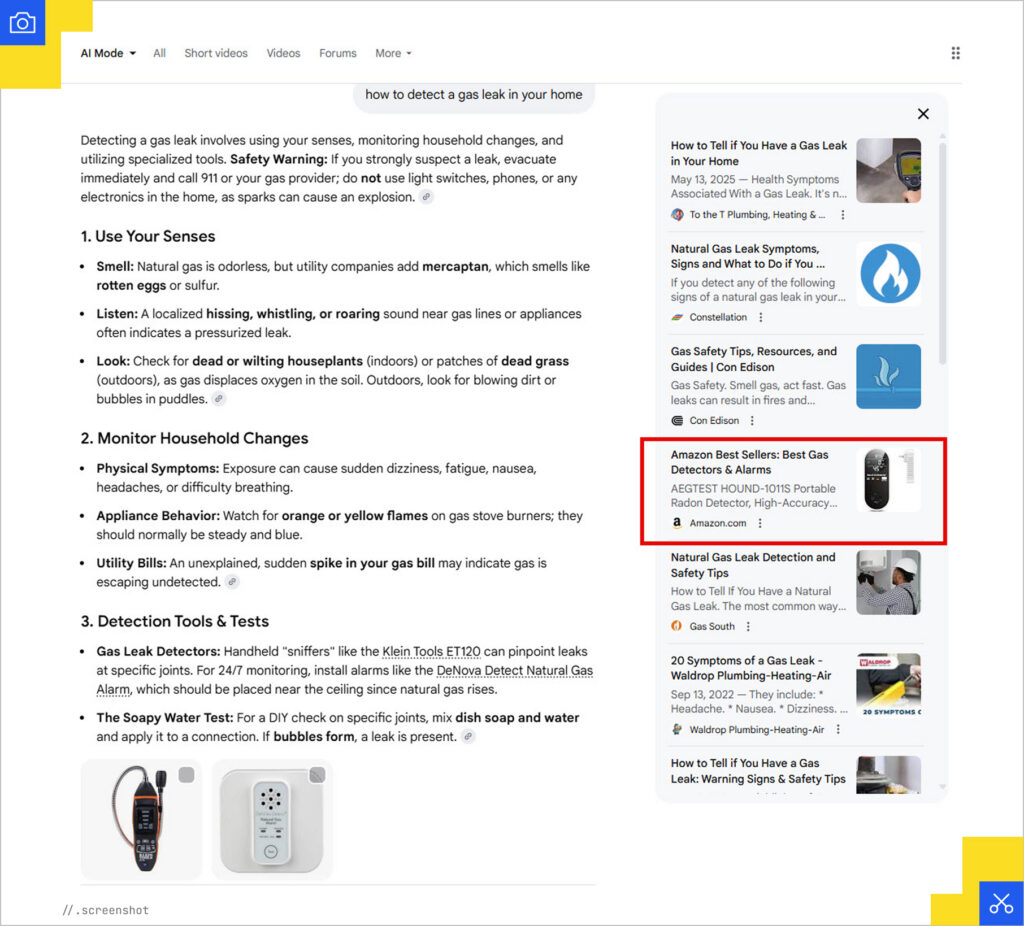

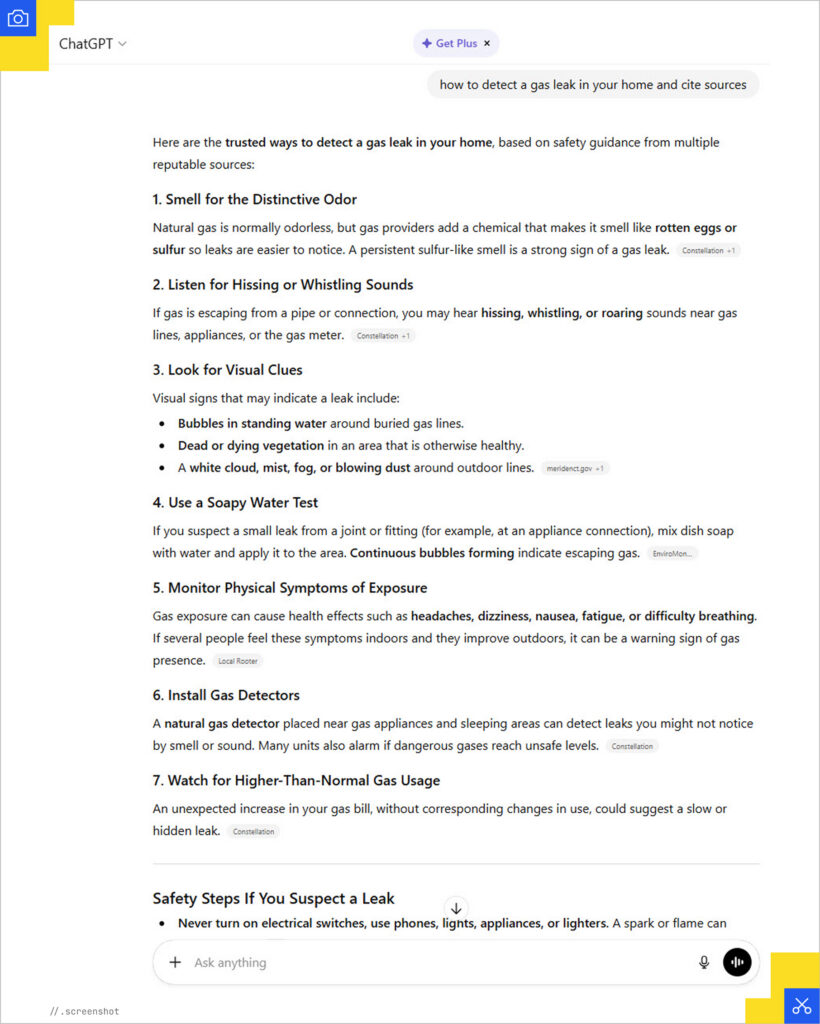

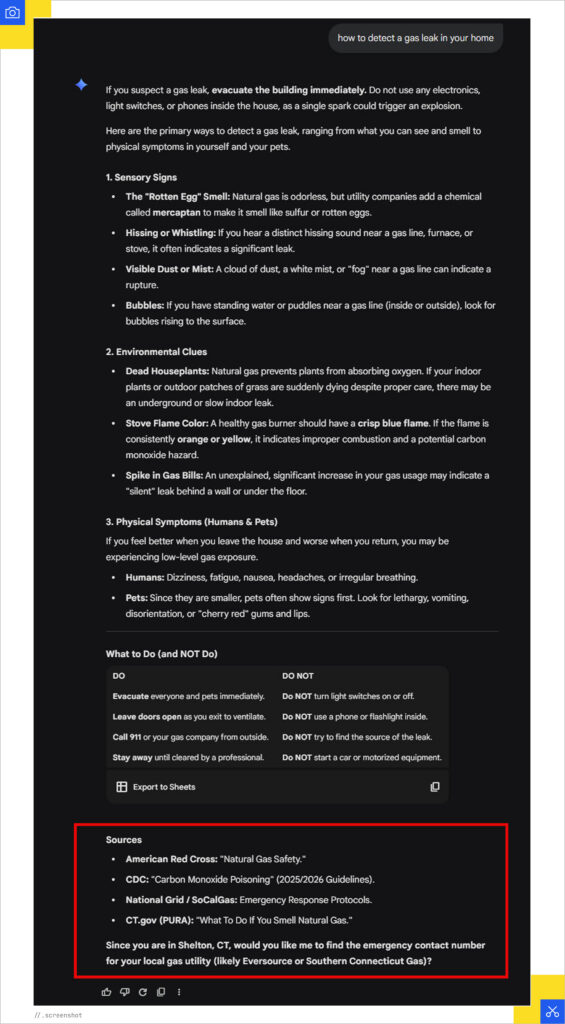

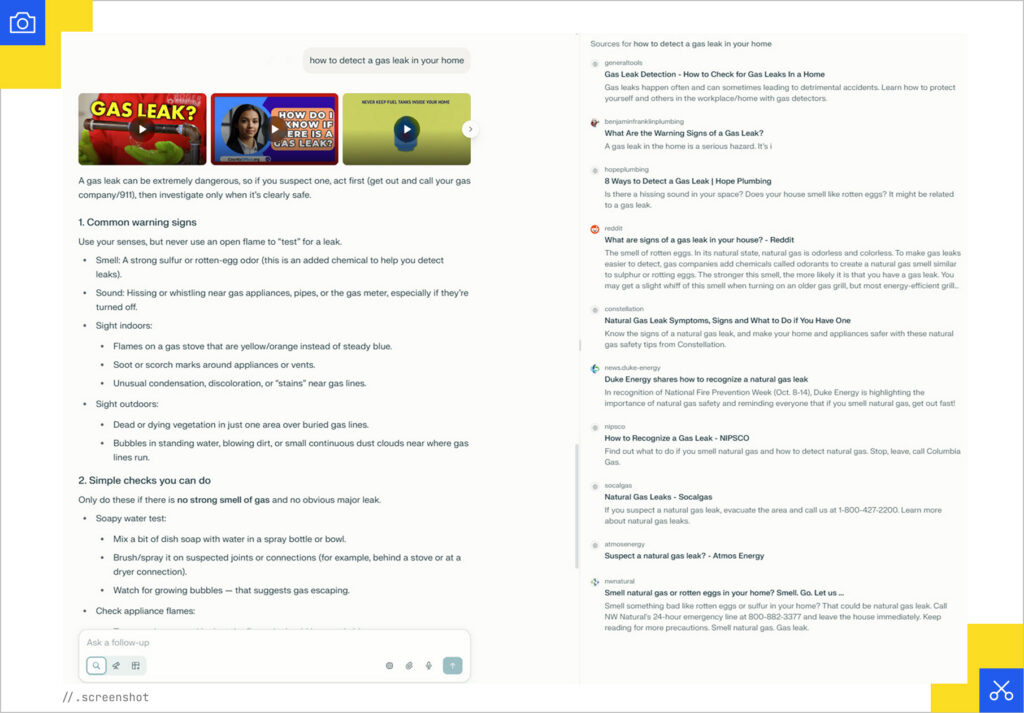

Safety YMYL Query: How to Detect a Gas Leak

For my final query, I chose “how to detect a gas leak in your home.” This is an interesting query because it focuses on safety but the results could also be product-oriented when displaying detection tools a person can buy, which could lead to biased content.

Again, there was no AI Overview for this query.

AI Mode cited mostly heating, plumbing and gas/energy supplier companies such as:

- To the T Plumbing and Heating

- Constellation (energy supplier)

- Con Edison (energy supplier)

- Gas South (energy supplier)

- Waldrop Plumbing, Heating and Air

It also cited a page of Amazon “Best Sellers” listings for natural gas detectors.

Again, ChatGPT needed me to specify that I wanted it to cite its sources and it cited:

- Constellation

- Eversource

- Renke (environmental monitoring devices like sensors)

- Local Rooter and Plumbing

- AAA

- California Public Utilities Commission

Gemini cited its sources at the bottom for me this time without my asking (though it didn’t identify which information was taken from which source like the others). It cited:

- American Red Cross

- CDC

- National Grid/SoCalGas

- CT.gov (recognizing that I’m in Connecticut)

It even asked me if I wanted the emergency contact number for the local gas utility in my town because it knows where I live.

Perplexity gave me several videos to watch, which was interesting and unlike the other models. The videos were by a plumbing education brand, a local government organization called County Office, and an energy supplier (DTE Energy).

It cited many articles by various gas, plumbing, and energy companies, but it was the only model to cite Reddit. Roll your eyes if you want, but there were useful answers provided (aside from the person who wrote “A good way to tell is if you light a match and your house explodes you probably have a leak”).

The Winner: I think I have to give this one to Perplexity. Though I appreciated that Gemini knew my location and offered to provide localized help, the diversity of sources offered by Perplexity, as well as the useful videos, put it over the top.

YMYL in the AI Search Age

AI Search is impacting how trust is synthesized and displayed for the most important queries. While my initial skepticism regarding YMYL topics was met with surprisingly high-quality citations, it’s still kind of the wild west out there. Always be sure to fact-check the information you’re getting from AI models.

The winning LLMs to me in this case varied by query, and the selection of citations was vast, but the models all appeared to be catching on to the importance of trust.

Some takeaways:

- AI can synthesize information, but it cannot replicate the “I was there” factor. Personal case studies, original imagery, and first-person insights are still your strongest defense against being labeled as “low-effort” scaled content.

- With the 2025 updates, YMYL now covers more than just health and wealth. If your content impacts civic trust or societal well-being, expect the highest level of scrutiny from both algorithms and human raters.

- Every AI model is different, so you’ll need to find your flavor when performing important searches. Regardless, whether it’s Perplexity’s diverse sources or Gemini’s localized emergency help, being the cited authority is the goal.

Google might still be calling the internet the “World Wide Web” in its guidelines, but the reality is that we’ve moved into an Answer Engine world. To stay relevant, businesses must build entities and prove through proprietary data and expert credentials that they are the source the AI (and humans) should trust.