Your product feed used to be a technical checklist for Google Shopping that your dev team handled while your content team told the real story elsewhere. They were different systems with different goals.

That time is over.

OpenAI’s product feed specification recognizes that your catalog is your content now. Every title, attribute, and image becomes training material for AI models that will decide whether to recommend your product or your competitor’s.

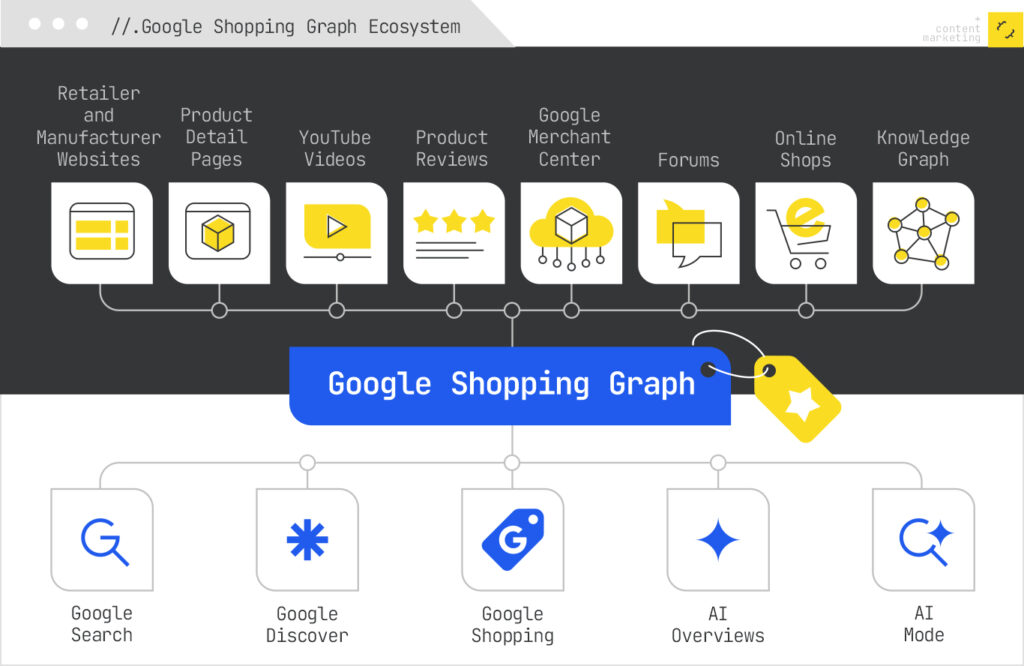

The product feed is the one lever you control directly. Your merchant feed is the canonical source that powers the broader shopping graph: the web of product information that AI systems (and traditional Google Search) use to understand what you sell.

Everything else (your reviews, videos, blog posts, product detail pages, etc.) contributes to whether AI recommends your product. The feed is where you define the product’s semantic identity.

The models care about all of it: what’s in the feed, what’s in your reviews, what’s in your YouTube descriptions, and whether all of those things tell the same coherent story.

Discovery now happens through conversation with AI, and conversations require context, emotion, relationships, and narrative consistency across every place your products appear.

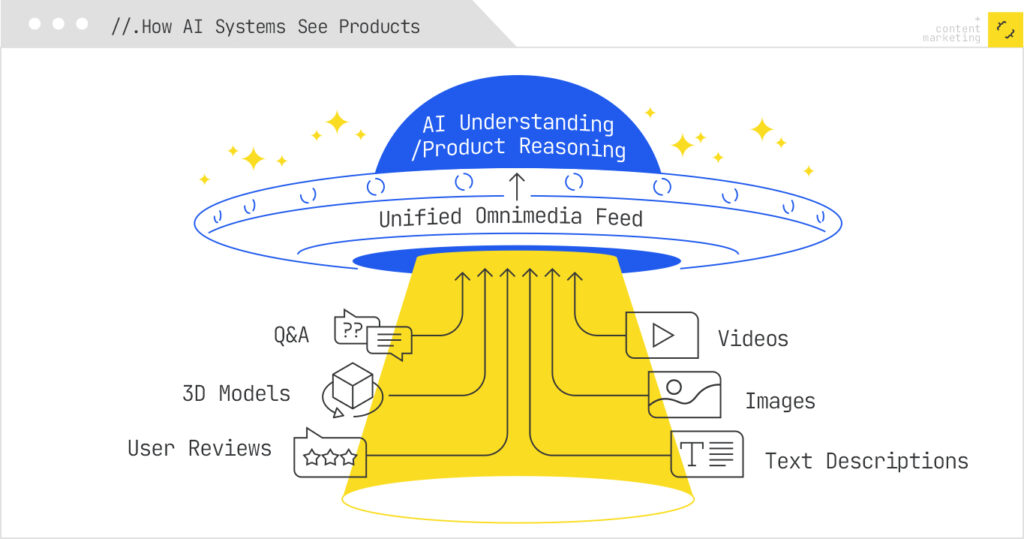

This is omnimedia strategy for AI search: turning fragmented product information into a unified content engine that works whether a customer finds you through Google Shopping, asks ChatGPT for recommendations, or watches a YouTube review.

Here’s how ecommerce brands can make it work.

Your Product Feed Is Now Content

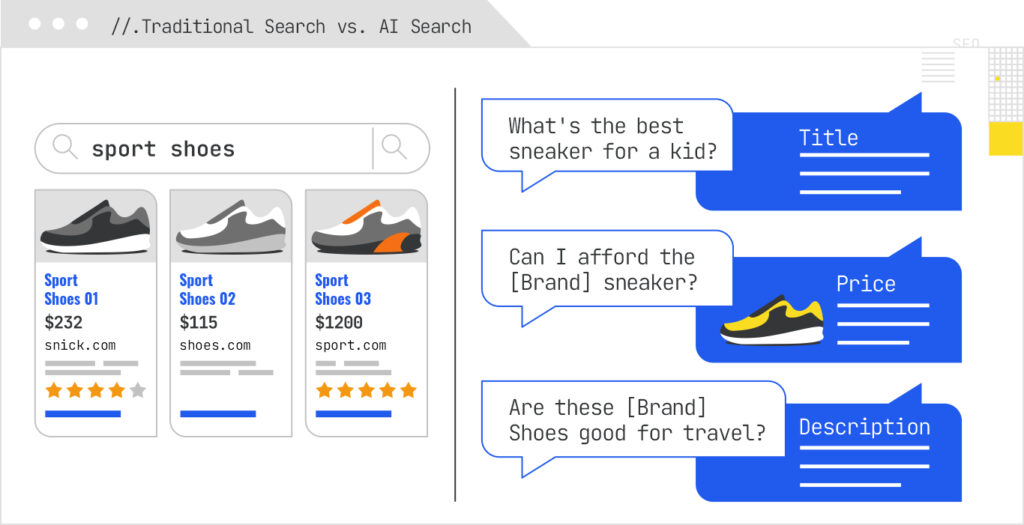

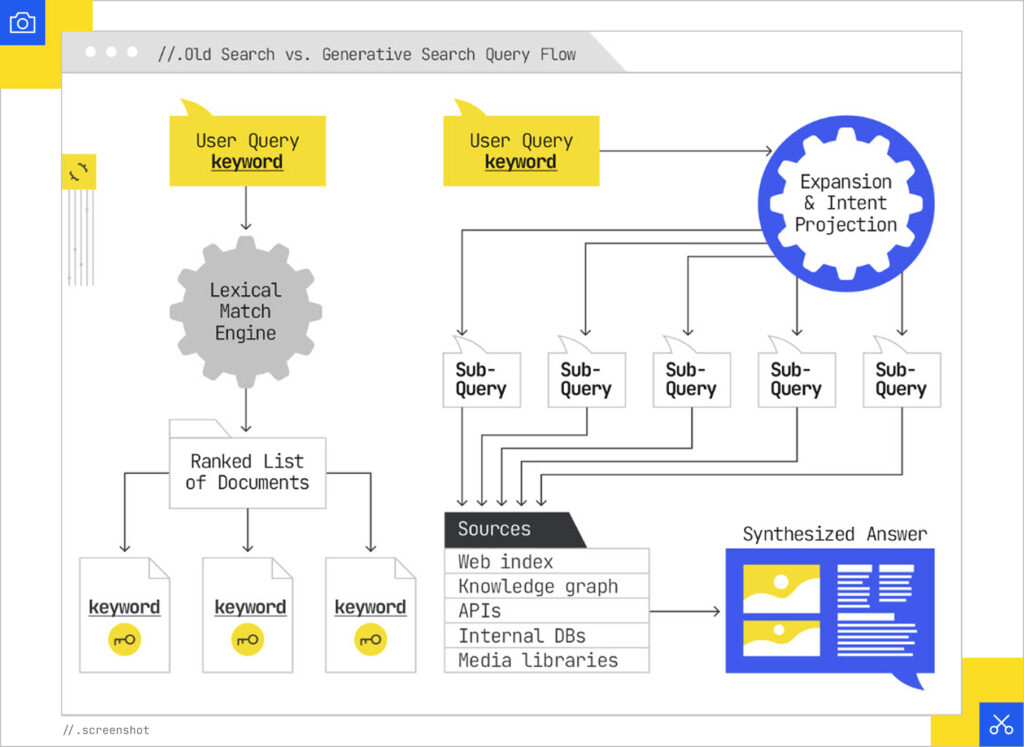

Content strategy around the Google Shopping feed has traditionally been siloed: technical teams optimized product feeds for crawling, indexing, and keyword-based ad targeting, while creative storytelling and user-generated content (UGC) such as reviews and Q&A remained separate. Google's system was designed for a world of keywords and clicks.

We are now seeing the emergence of a new standard, exemplified by OpenAI's Product Feed specification, that turns your product feed into your main content engine. Instead of describing your products solely for a search engine, you are now describing them to an AI system that can reason about them.

Every field within your feed, including titles, descriptions, and attributes, is no longer just structured data but conversational training data.

In essence, your catalog becomes content. If your descriptions lack voice, clarity, or context, you are basically training the model to recommend someone else’s product.

You need to align all of your content channels, from customer reviews to YouTube videos, into this single narrative. Every piece of content, including your product images and Q&A, is now a retrieval object that AI models can access to understand, compare, and recommend your products.

But we need to distinguish between two different things here:

- The product feed (your merchant feed) is the structured data file you submit to Google Merchant Center, Amazon, or other platforms. This is your direct input into the shopping graph and the foundational layer of product understanding from which commerce platforms build.

- Content about your product is everything else: customer reviews, YouTube videos, Reddit discussions, blog posts, Q&A threads, social media mentions, and product detail pages. You influence it through customer experience and content marketing, but you don't control it directly.

Both matter, but they matter differently.

Because the feed is the layer you control, it needs voice, not just keywords. It needs use cases, not just features. It needs emotional context that helps AI understand not just what something is, but why it matters to someone in a specific situation.

As Gianluca Fiorelli noted in his framework for increasing visibility in AI search: “Each unit of content — whether a full article or a single section — should begin with the core answer or takeaway, followed by supporting detail, nuance, and optional depth.”

This structure makes content highly scannable for humans and enables passage-level extraction by AI. Your feed establishes the core answer, and your content provides the supporting detail.

What Google and ChatGPT Are Reading

At its core, the transition from traditional ecommerce visibility to AI-driven discovery is a shift from text-based structured feeds to multimodal signals.

The Google Shopping feed used to be mostly concerned with machine-readable text attributes like titles, prices, and keywords that were optimized for crawling and indexing. Now, ecommerce seems to be moving toward a richer, more comprehensive understanding of the product.

The Rise of Multimodal Data

AI systems are built for a world of questions and reasoning. To facilitate this reasoning, they require more than just descriptive text.

Google itself has been moving past simple structured data, as evidenced by its patents focused on product understanding from visual embeddings. This signals that Google is also working to extract meaning directly from images and visual assets to better understand and recommend products.

The OpenAI Product Feed specification goes further, supporting a unified approach to all media types. It expands the media attributes beyond traditional images to include video_link and model_3d_link. These attributes, which are new or only partially supported through separate methods in Google's system, let AI models access and analyze videos and 3D models directly, so that they join product images, Q&A, and reviews as retrieval objects the model can draw on.

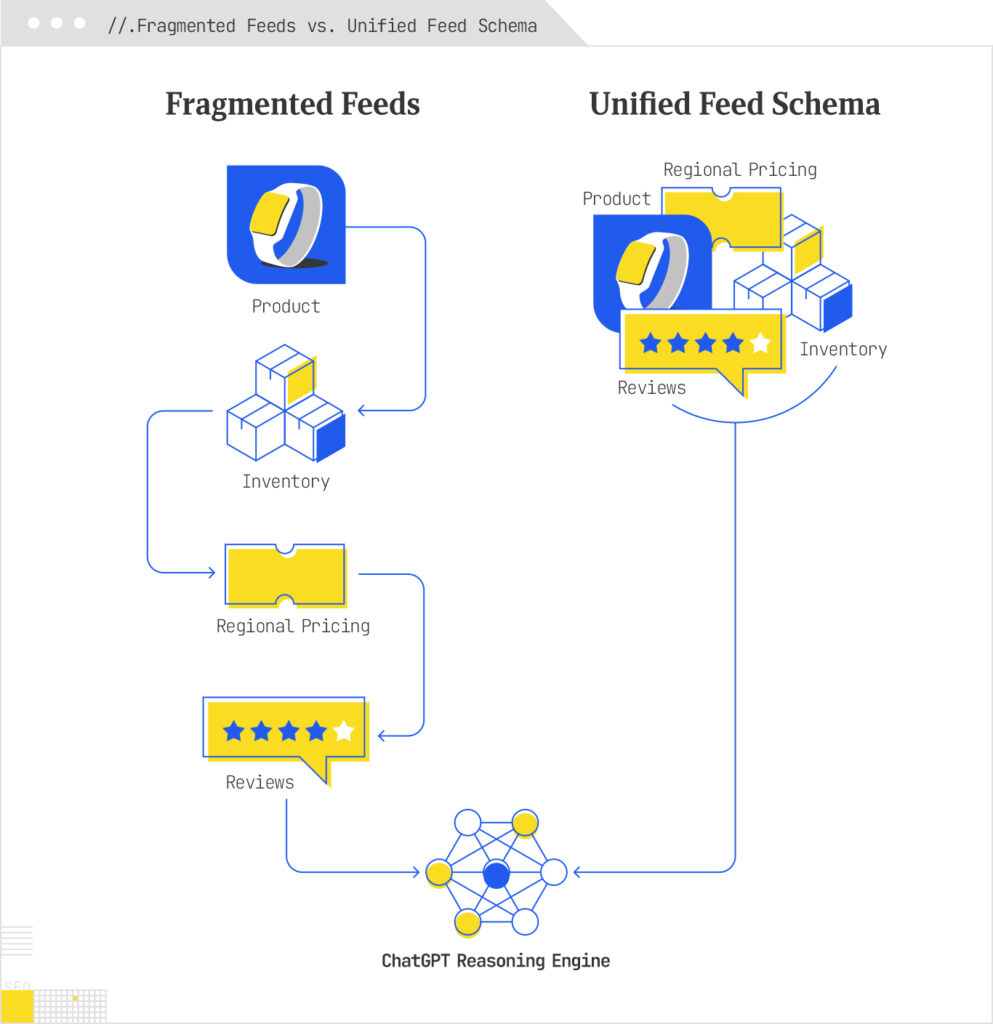

This focus on omnimedia goes hand-in-hand with a consolidation of data. Where Google traditionally splits product information, pricing, inventory, and reviews across multiple separate feeds (e.g., Core Product Feed, Inventory Feed, Product Reviews Feed), OpenAI's specification merges them into a single product record.

This unified schema is designed to give the AI a complete, consistent picture of the product, making it easier to reason about the product's value and use case. For example, user-generated content (UGC) such as full review text and Q&A pairs (raw_review_data, q_and_a) is consolidated within the product record, giving the model more surface area for complex reasoning.
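To make the consolidation concrete, here is a minimal Python sketch that folds separate core, inventory, review, and Q&A feeds into one record per product. The feed layouts, the SKU, and the list shapes used for raw_review_data and q_and_a are assumptions for illustration only; check the specification for the exact format each field expects.

```python
from typing import Any

# Hypothetical split feeds, Google-style. Layouts and values are illustrative.
core_feed = [
    {"id": "TMB-20", "title": "Stainless Steel Insulated Tumbler 20oz",
     "video_link": "https://example.com/videos/tmb-20.mp4"},
]
inventory_feed = [
    {"id": "TMB-20", "price": "24.99 USD", "availability": "in_stock"},
]
reviews_feed = [
    {"id": "TMB-20", "review": "Kept my coffee hot through a 90-minute commute."},
    {"id": "TMB-20", "review": "Still performs like new after three months."},
]
qa_feed = [
    {"id": "TMB-20", "q": "Is it dishwasher safe?", "a": "Hand washing is recommended."},
]


def merge_feeds(core, inventory, reviews, qa) -> dict[str, dict[str, Any]]:
    """Fold separate feeds into one unified record per product id."""
    records: dict[str, dict[str, Any]] = {row["id"]: dict(row) for row in core}
    for row in inventory:
        # Only enrich products that exist in the core feed.
        if row["id"] in records:
            records[row["id"]].update({k: v for k, v in row.items() if k != "id"})
    for row in reviews:
        if row["id"] in records:
            # Assumed shape: a list of raw review strings.
            records[row["id"]].setdefault("raw_review_data", []).append(row["review"])
    for row in qa:
        if row["id"] in records:
            # Assumed shape: a list of question/answer pairs.
            records[row["id"]].setdefault("q_and_a", []).append({"q": row["q"], "a": row["a"]})
    return records


merged = merge_feeds(core_feed, inventory_feed, reviews_feed, qa_feed)
```

The point of the design is that every signal about a product (media, price, reviews, Q&A) lives in one record, so nothing has to be joined back together at reasoning time.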

Using this multimodal and unified data approach can help your feed become conversational training data that allows the AI to tell your brand’s story with voice, clarity, and context.

How We Build an Omnimedia Content Plan

Optimizing your presence throughout the internet might sound obvious, but many businesses are not staying on top of this.

Your content should be aligned across all channels with consistent tone, language, and voice.

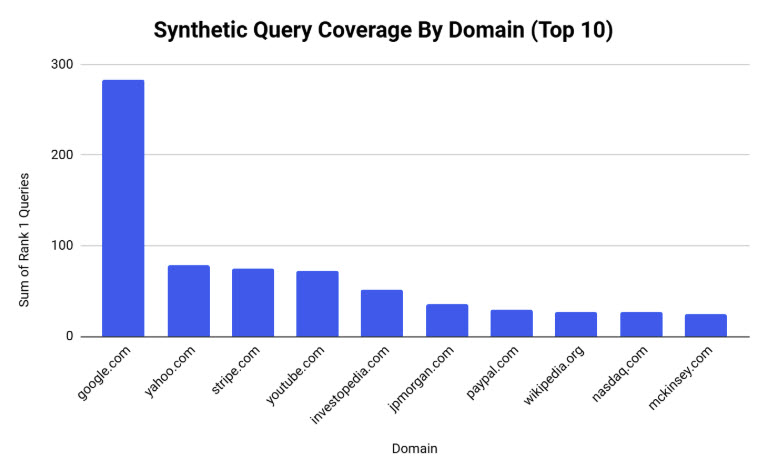

At iPullRank, we offer Omnimedia Content Audits and Plans for our clients that focus on gaps and potential wins across YouTube, Reddit, LinkedIn, and other platforms. Let's look at a specific case study from one of our FinTech clients:

Keyword Matrix

We start by creating our Keyword Matrix, a more comprehensive Keyword Portfolio that incorporates deeper segmentation and data points to assess keyword opportunity, viability, relevance, and ranking potential. We use iPullRank's own Qforia tool to perform query fan-out research.

Using client-provided information and tools like Semrush and Ahrefs, we look at parts of speech, entities, word count (classifying keywords as head, chunky, or long-tail), search volume, trends, and Google ranking, as well as the rank zone, which shows the keyword ranking distribution.
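The word-count segmentation is simple enough to sketch. The thresholds below (1 to 2 words for head, 3 to 4 for chunky, 5 or more for long-tail) are assumptions for illustration, not iPullRank's published cutoffs, and the keywords are hypothetical. A real matrix would carry the other columns (volume, trends, rank zone) alongside.

```python
def classify_by_word_count(keyword: str) -> str:
    """Bucket a keyword as head, chunky, or long-tail by word count.

    Assumed thresholds: 1-2 words = head, 3-4 = chunky, 5+ = long-tail.
    """
    words = len(keyword.split())
    if words <= 2:
        return "head"
    if words <= 4:
        return "chunky"
    return "long-tail"


# Hypothetical FinTech keywords; real inputs come from the research tools.
keywords = [
    "robo advisor",
    "robo advisor fees",
    "how do robo advisors rebalance a portfolio",
]
matrix = [
    {"keyword": kw, "word_count": len(kw.split()), "bucket": classify_by_word_count(kw)}
    for kw in keywords
]
```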

Omnimedia Content Audit

We then use the Keyword Matrix to perform an Omnimedia Content Audit. We take synthetic queries from the Keyword Matrix and look up the top-ranking landing pages for each. Then we identify where our client is visible, where the visibility gaps are, and what kind of content is ranking among those top landing pages.

For this particular FinTech company, we tracked over 1,200 synthetic queries to see which domains ranked highest and which types of content were most successful (knowing that Google often links to itself within its SERP features). We then looked at priority topic clusters for a deeper dive into what it takes to win visibility for specific types of queries.
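The audit's core tallies can be sketched as a small aggregation. This assumes one hypothetical row per (synthetic query, ranking URL) pair, with a content_type label already assigned to each landing page; the domains and queries are placeholders, not audit results.

```python
from collections import Counter

# Hypothetical audit rows. content_type labels are assumed to come from
# manual or automated classification of each landing page.
serp_rows = [
    {"query": "what is a robo advisor", "domain": "publisher-a.example", "content_type": "article"},
    {"query": "what is a robo advisor", "domain": "youtube.com", "content_type": "video"},
    {"query": "robo advisor fees", "domain": "client.example", "content_type": "product page"},
    {"query": "robo advisor fees", "domain": "linkedin.com", "content_type": "linkedin pulse"},
]

CLIENT_DOMAIN = "client.example"  # hypothetical client domain


def audit(rows, client_domain):
    """Return domain share, content-type mix, and queries where the client is absent."""
    domain_share = Counter(r["domain"] for r in rows)
    content_mix = Counter(r["content_type"] for r in rows)
    queries = {r["query"] for r in rows}
    visible = {r["query"] for r in rows if r["domain"] == client_domain}
    gaps = sorted(queries - visible)  # visibility gaps
    return domain_share, content_mix, gaps


domain_share, content_mix, gaps = audit(serp_rows, CLIENT_DOMAIN)
```

Scaled to a full query set, the same three outputs answer the audit's questions: who ranks, what format wins, and where the client is missing.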

We also reviewed the client's social media presence, tagging posts by topic cluster to see where there were gaps in coverage or opportunities to expand into new conversations. Video proved to be a crucial part of user education in FinTech, particularly content that walks through use cases, helps users understand what these tools can do, and explains where there may be limitations or risks.
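Tagging social posts by topic cluster can start as a keyword lookup. In this minimal sketch the clusters, trigger terms, and posts are all hypothetical, and plain whitespace tokenization stands in for whatever classification you actually use; the output is the list of clusters with no coverage.

```python
from collections import Counter

# Hypothetical topic clusters and trigger terms; real clusters would come
# from the Keyword Matrix.
CLUSTERS = {
    "fees": {"fee", "fees", "pricing", "cost"},
    "security": {"security", "fraud", "encryption"},
    "use cases": {"budgeting", "invoicing", "payroll"},
}

posts = [
    "Our new pricing tiers explained",
    "How small teams automate invoicing and payroll",
    "Three budgeting habits for freelancers",
]


def tag_post(text, clusters):
    """Return every cluster whose trigger terms appear in the post (whitespace tokens)."""
    words = set(text.lower().split())
    return [name for name, terms in clusters.items() if words & terms]


coverage = Counter(tag for post in posts for tag in tag_post(post, CLUSTERS))
uncovered = [name for name in CLUSTERS if coverage[name] == 0]
```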

LinkedIn Pulse posts from individuals or companies also rank well in this space. These thought leadership and corporate insight pieces are educational and semi-formal, bringing a broader professional audience into the conversation around benefits, comparisons, and forward-looking ideas that bridge technology and business.

Omnimedia Content Plan

From our Audit findings, we developed a strategy for our client's content production. We looked at the types of articles Yahoo ranks well for, as well as what other companies in the FinTech space, like IBM, are writing about, to suggest topics our client might consider covering.

In general, we often recommend these types of content to our clients:

- Data journalism with proprietary data

- Custom graphics

- Interactive tools within the site

- Traditional blog articles

None of these should be broad, top-of-funnel (TOFU) content anymore; AI summaries already cover most of that ground. New content should be highly specialized and unique to your brand: the kind of material others use as a source or cite, which gives LLMs something original to reference and a reason to view you as an authority.

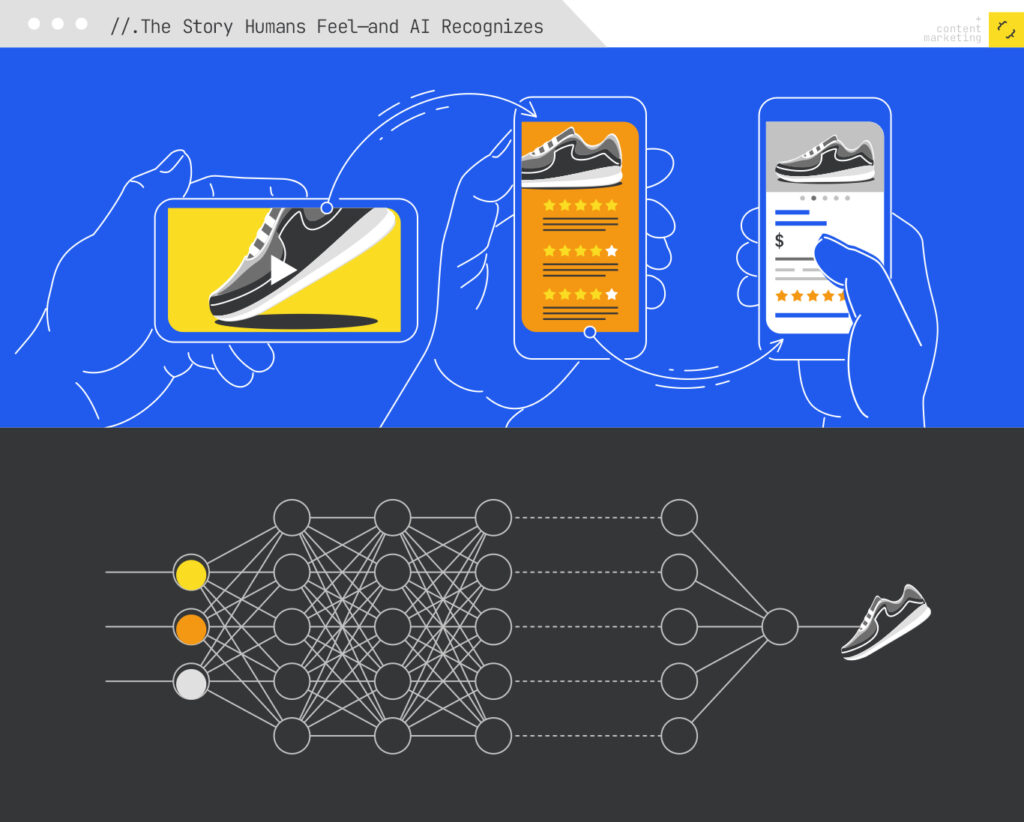

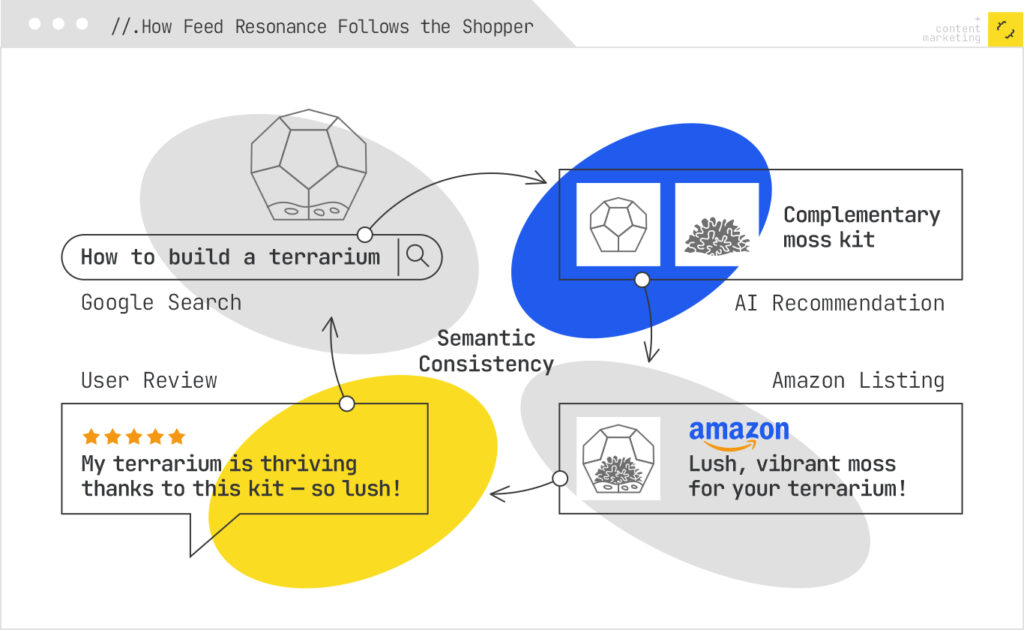

Why Feed Resonance Matters

When a customer watches your YouTube unboxing video, reads a review on your site, and later sees your product in Google Shopping, they’re encountering fragments of the same story. Feed resonance is what happens when those fragments create a coherent narrative that both human shoppers and AI systems can understand and trust.

Without resonance, you’re speaking in disconnected dialects. Your YouTube video calls it a “portable espresso maker for travelers.” Your feed says, “compact coffee machine.” Your reviews mention “camping coffee gear.” To a human, these might seem related, but to an AI parsing semantic relationships, you’ve just created three separate concepts competing for attention instead of one unified signal.
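One rough way to spot "disconnected dialects" is to compare the vocabulary each channel uses for the same product. This minimal sketch uses Jaccard overlap of word sets; that is a crude lexical proxy (it cannot tell that "espresso" and "coffee" are related), and embedding similarity would be the stronger, swapped-in technique for real semantic comparison.

```python
import re


def terms(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real semantic analysis."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of terms two channels have in common (1.0 if both are empty)."""
    return len(a & b) / len(a | b) if a | b else 1.0


# The three descriptions from the example above.
channels = {
    "youtube": "portable espresso maker for travelers",
    "feed": "compact coffee machine",
    "reviews": "camping coffee gear",
}

names = list(channels)
pairs = {
    (x, y): jaccard(terms(channels[x]), terms(channels[y]))
    for i, x in enumerate(names)
    for y in names[i + 1:]
}
```

Low scores across every pair are the signature of three competing concepts rather than one unified signal.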

The Emotional Architecture of Your Feed

Traditional product feeds are transactional: SKU, price, availability. But AI models are trained on human language, which means they respond to emotional and value-based descriptors that explain why something matters, not just what it is.

Before: Transactional

- title: “Stainless Steel Insulated Tumbler 20oz”

- description: “20-ounce stainless steel tumbler with vacuum insulation”

After: Resonant

- title: “Stainless Steel Insulated Tumbler 20oz”

- description: “20-ounce stainless steel tumbler with vacuum insulation that keeps morning coffee hot through your entire commute because your first sip at the office should taste as good as the one at home”

- value_proposition: “temperature retention for busy professionals”

- emotional_benefit: “consistent comfort in unpredictable schedules”

- use_context: “daily commute, desk work, travel”
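Here is the same before/after expressed as feed records. The SKU is hypothetical, and value_proposition, emotional_benefit, and use_context are treated as custom attributes taken from the example; they are not assumed to be standard fields in any platform's schema, so check your target spec before relying on them.

```python
import json

# Transactional record: only the basics.
transactional = {
    "id": "TMB-20",  # hypothetical SKU
    "title": "Stainless Steel Insulated Tumbler 20oz",
    "description": "20-ounce stainless steel tumbler with vacuum insulation",
}

# Resonant layer from the example. The last three keys are assumed custom
# attributes, not confirmed spec fields.
resonant_layer = {
    "description": (
        "20-ounce stainless steel tumbler with vacuum insulation that keeps "
        "morning coffee hot through your entire commute because your first sip "
        "at the office should taste as good as the one at home"
    ),
    "value_proposition": "temperature retention for busy professionals",
    "emotional_benefit": "consistent comfort in unpredictable schedules",
    "use_context": "daily commute, desk work, travel",
}

# Later keys win, so the richer description replaces the transactional one.
resonant = {**transactional, **resonant_layer}
print(json.dumps(resonant, indent=2))
```

Note that the title stays the same; the resonance comes from extending the description and adding context, not from stuffing the title.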

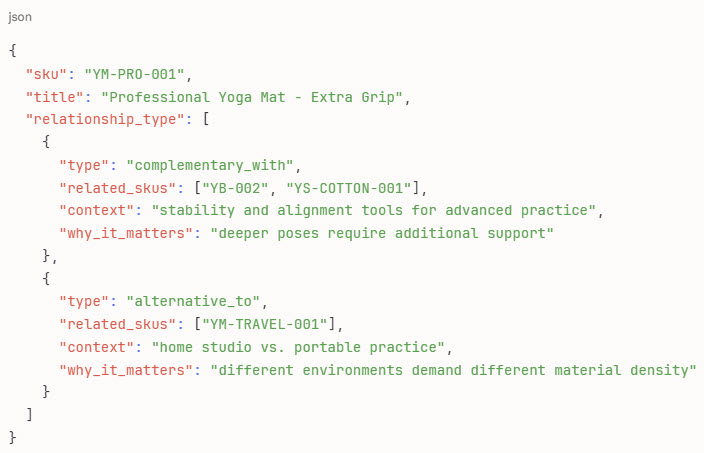

Clustering with relationship_type Fields

Ecommerce catalogs are naturally relational, but most feeds treat products as isolated entities. Adding relationship_type fields creates explicit semantic connections that help AI models understand your product ecosystem the way customers actually experience it.

Strategic Relationship Types:

- complementary_with: Products used together
  - Yoga mat → yoga blocks, straps
  - “Customers who practice at home often need both stability and support”

- alternative_to: Similar products for different needs
  - Lightweight running shoe → stability running shoe
  - “Same performance philosophy, different biomechanical support”

- progression_from: Journey-based relationships
  - Beginner guitar → intermediate guitar
  - “Natural next step as skills develop”

- occasion_paired_with: Context-based groupings
  - Cocktail shaker → bar spoons, jiggers, bitters
  - “Complete home bartending setup for entertaining”

- problem_solution_chain: Sequential pain point resolution
  - Sensitive skin cleanser → hydrating serum → barrier repair cream
  - “Layered approach to reactive skin concerns”

Implementation Example:

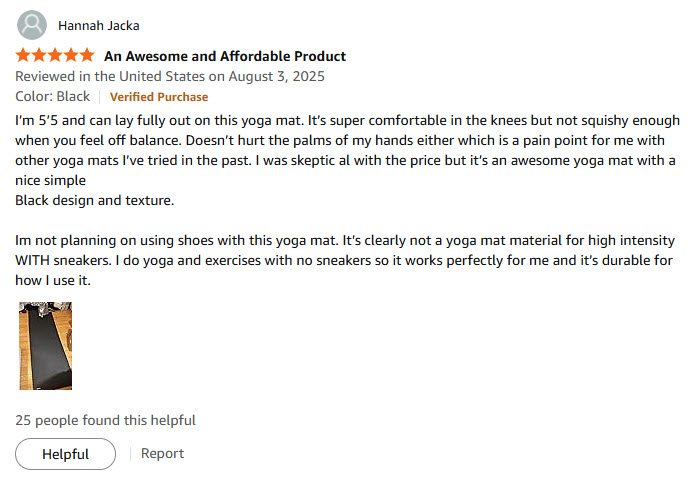
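As a code-level complement, here is a minimal sketch that represents these relationships as records with a related_product_id, a relationship_type, and a human-readable rationale, expands an ordered problem_solution_chain into pairwise links, and flags links that point at unknown products. The SKUs are hypothetical, and the relationship labels are the strategic categories proposed above; whether a given platform accepts them as relationship_type values is an assumption to verify against its spec.

```python
from dataclasses import dataclass

# Strategic labels from the list above (assumed, not confirmed spec values).
ALLOWED = {"complementary_with", "alternative_to", "progression_from",
           "occasion_paired_with", "problem_solution_chain"}


@dataclass(frozen=True)
class Relationship:
    source_id: str
    related_product_id: str
    relationship_type: str
    rationale: str


def chain_links(ids, rationale):
    """Expand an ordered chain (A -> B -> C) into consecutive pairwise links."""
    return [Relationship(a, b, "problem_solution_chain", rationale)
            for a, b in zip(ids, ids[1:])]


def validate(links, catalog_ids):
    """Return links with an unknown type or an endpoint missing from the catalog."""
    return [
        link for link in links
        if link.relationship_type not in ALLOWED
        or link.source_id not in catalog_ids
        or link.related_product_id not in catalog_ids
    ]


catalog = {"MAT-01", "BLK-02", "CLN-10", "SER-11", "CRM-12"}  # hypothetical SKUs
links = [Relationship("MAT-01", "BLK-02", "complementary_with",
                      "Customers who practice at home often need both stability and support")]
links += chain_links(["CLN-10", "SER-11", "CRM-12"],
                     "Layered approach to reactive skin concerns")
problems = validate(links, catalog)
```

Validating against the catalog matters because a relationship that points at a discontinued or misspelled SKU sends the model a broken signal.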

Encouraging Fresh, Contextual Customer Reviews

User-generated content is the highest-trust signal for AI models because it represents actual usage patterns and authentic language. But not all reviews are equally valuable for feed resonance.

“Great product! 5 stars.” = Zero semantic value

“Works as described.” = Confirms nothing meaningful

“Fast shipping!” = Wrong signal entirely
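If you want to triage an existing review corpus, a simple heuristic can flag reviews like these. This is a minimal sketch: the generic-phrase list and the eight-word threshold are illustrative assumptions, and a review is flagged if it is short or if almost nothing is left once generic phrases are stripped out.

```python
import re

# Illustrative assumptions, not a standard list or threshold.
GENERIC = {"great product", "5 stars", "works as described", "fast shipping", "love it"}
MIN_WORDS = 8


def low_semantic_value(review: str) -> bool:
    """Flag reviews that are too short or made almost entirely of generic phrases."""
    text = review.lower()
    words = re.findall(r"[a-z0-9']+", text)
    residue = text
    for phrase in GENERIC:
        residue = residue.replace(phrase, " ")
    residue_words = re.findall(r"[a-z0-9']+", residue)
    return len(words) < MIN_WORDS or len(residue_words) < MIN_WORDS // 2
```

For example, "Great product! 5 stars." is flagged, while a review describing a power outage and ice staying frozen for 14 hours is not.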

Some tips for soliciting better reviews:

- Post-Purchase Prompts with Specific Context
  - Instead of: “How would you rate this product?”
  - Try:
    - “How did this yoga mat perform during your first hot yoga class?”
    - “Which features made the biggest difference during your morning commute?”
    - “What surprised you most about using this for camping trips?”

- Temporal Review Requests
  - Capture evolving experiences:
    - 7 days: First impressions and setup experience
    - 30 days: Daily use patterns and unexpected benefits
    - 90 days: Durability and long-term value assessment

  Each window generates different semantic data. Early reviews capture “unboxing,” “easy setup,” “initial quality.” Later reviews contribute “holds up well,” “worth the investment,” “still performs like new.”

- Scenario-Based Review Frameworks
  - Guide customers to describe context, not just satisfaction: “Tell us about a specific moment when this product made a difference:”
    - Solved an unexpected problem
    - Made a routine task easier
    - Worked perfectly in challenging conditions
    - Exceeded expectations over time
  - This generates reviews like “Used this tumbler during a power outage and it kept ice frozen for 14 hours when our fridge went out” instead of “Keeps drinks cold.”

- Cross-Channel Review Integration
  - Surface reviews in:
    - YouTube video descriptions (“Real users say…”)
    - Product feed structured data markup
    - Social media carousel posts
    - Email nurture sequences

When the same authentic story appears in your feed, in video metadata, and in social content, AI models recognize pattern consistency.
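The temporal review windows (7, 30, and 90 days) are easy to schedule mechanically. This minimal sketch assumes the clock starts at the delivery date, which the strategy above does not specify; measuring from purchase date would work the same way.

```python
from datetime import date, timedelta

# Windows and focus areas from the temporal review strategy.
WINDOWS = [
    (7, "first impressions and setup experience"),
    (30, "daily use patterns and unexpected benefits"),
    (90, "durability and long-term value assessment"),
]


def review_schedule(delivered_on: date):
    """Return (send_date, focus) pairs; assumes windows count from delivery."""
    return [(delivered_on + timedelta(days=days), focus) for days, focus in WINDOWS]


schedule = review_schedule(date(2025, 3, 1))
```

For a delivery on March 1, 2025, the three requests land on March 8, March 31, and May 30.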

Measuring Resonance

You’ll know your feed resonance strategy is working when:

- Cross-channel query matching increases: The same product appears for semantically similar queries across Google, Amazon, and LLM-based shopping.

- Related product discovery improves: AI recommends your complementary items without explicit ads.

- Review themes align with feed descriptors: Customer language mirrors your value propositions.

- Long-tail semantic traffic grows: You rank for “why” and “how” queries, not just product names.
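The third signal, review themes aligning with feed descriptors, can be tracked as a simple rate: the share of reviews that mention at least one descriptor term from your feed. In this minimal sketch the descriptors are drawn from the tumbler example and the reviews are hypothetical; it matches single words only, so multi-word descriptors would need phrase matching. Watching the rate over time matters more than any single value.

```python
import re

# Descriptor terms drawn from the feed (tumbler example); single words only.
descriptors = ["commute", "hot", "travel", "desk"]

# Hypothetical reviews for illustration.
reviews = [
    "Keeps coffee hot for my whole commute.",
    "Fits in my car's cup holder.",
    "Great on the desk all day, still hot at 3pm.",
]


def alignment_rate(reviews, descriptors):
    """Share of reviews that mention at least one feed descriptor term."""
    terms = {d.lower() for d in descriptors}
    hits = sum(1 for r in reviews if terms & set(re.findall(r"[a-z0-9']+", r.lower())))
    return hits / len(reviews) if reviews else 0.0


rate = alignment_rate(reviews, descriptors)
```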

Feed resonance transforms your product catalog from a static inventory list into a dynamic semantic network that follows your customer across every touchpoint.

In an AI shopping world, ecommerce shops need their products to tell the most coherent, emotionally resonant, and semantically rich stories across every surface where discovery happens.

Visibility in the Future of Ecommerce

The change from keyword optimization to omnimedia strategy may feel large, but the opportunity is proportional to the challenge. Ecommerce shops that treat their product feed as isolated technical infrastructure while investing heavily in “content marketing” are missing the fact that the feed is the content. The reviews are the story. The YouTube videos, customer Q&A, product images, and attribute data are different expressions of the same semantic truth about what you sell and why it matters.

Getting this right requires recognizing that your 200-word product description may well be read more often by AI models in the next month than by human shoppers in a year. It requires understanding that a well-structured relationship_type field can do more for discovery than another generic Instagram post.

It’s about having the most resonant content where every signal across every channel reinforces the same clear, emotionally grounded, contextually rich narrative.

What story is your product feed actually telling?