Search is changing rapidly, and with it, the technical foundations that have guided SEO practitioners for years are being reexamined through the lens of artificial intelligence. People increasingly rely on AI-powered search, like Google’s AI Overviews or ChatGPT, to get information, so classic technical SEO practices need to adapt.

In a recent webinar, iPullRank’s Senior Director of SEO & Data Analytics Zach Chahalis outlined how traditional SEO principles are adapting to meet the demands of AI-powered search systems.

Traditional SEO ensures that your site is discovered, while generative engine optimization (GEO) and Relevance Engineering ensure that your site is understood. Let’s look at the technical SEO practices that form the foundation for the future of search and how they can help you develop new content strategies.

Understanding RAG: The Engine Behind AI Search

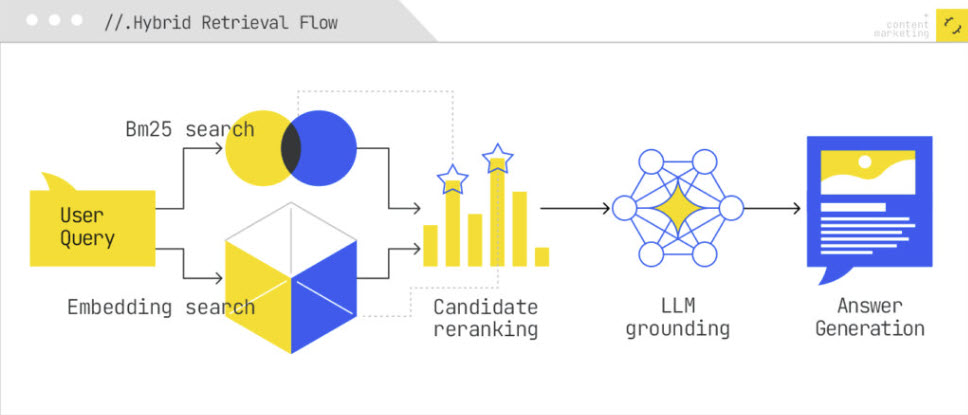

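At the heart of modern AI search lies Retrieval-Augmented Generation (RAG), a pattern that addresses inherent weaknesses of large language models, such as outdated training data and fabricated facts, by grounding their answers in retrieved documents. The retrieval step typically combines two complementary search approaches: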

- Lexical search is the traditional keyword matching that has powered search engines for decades: it ranks documents by the exact terms they share with the query.

- Semantic search matches on meaning rather than exact words, typically by comparing vector representations (embeddings) of the query and the content. It captures context, intent, and the relationships between concepts.

Hybrid retrieval combines both approaches to power RAG systems: modern search engines retrieve passages with lexical and semantic search, then merge the resulting ranked lists using methods like reciprocal rank fusion (RRF), which rewards documents that rank well in both.
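RRF is simple enough to sketch in a few lines. The Python below is a minimal illustration of the general technique, not the implementation any particular search engine uses; the page IDs are hypothetical, and k = 60 is the constant commonly used in the RRF literature. Each document earns 1 / (k + rank) in every list it appears in, and those contributions are summed.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs into a single ranking.

    A document's fused score is the sum of 1 / (k + rank) over every list
    it appears in, with ranks starting at 1.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical results from the two retrievers for the same query.
lexical = ["page-a", "page-b", "page-c"]
semantic = ["page-c", "page-a", "page-d"]

fused = reciprocal_rank_fusion([lexical, semantic])
print([doc for doc, _ in fused])  # ['page-a', 'page-c', 'page-b', 'page-d']
```

Here page-c, which both retrievers returned, finishes ahead of page-b, which only lexical search found. A larger k flattens the gap between adjacent ranks, so a single top position dominates the fused score less.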

This dual approach lets AI systems both find exact matches and understand the deeper meaning behind content. However, keep in mind that 95% of SEO tools still perform only lexical analysis. That needs to change.

Vector embeddings will increasingly fuel search as queries become more personalized and contextual.

“Google is creating embeddings about all the users' interactions to address and put together the results and filter out unrelated results.”

- Zach Chahalis
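Under the hood, semantic retrieval compares embeddings numerically. The sketch below assumes the embeddings already exist: the three-dimensional vectors and page names are made up for illustration (real embedding models produce hundreds or thousands of dimensions), and cosine similarity is one common way, not the only way, to score how close two vectors are.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means unrelated."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings" for a query and three passages.
query = [0.9, 0.1, 0.0]
passages = {
    "running-shoes-guide": [0.8, 0.2, 0.1],
    "marathon-training": [0.6, 0.5, 0.2],
    "tax-filing-tips": [0.0, 0.1, 0.9],
}

# Rank passages by how closely their vectors point in the query's direction.
ranked = sorted(passages, key=lambda p: cosine_similarity(query, passages[p]), reverse=True)
print(ranked)  # ['running-shoes-guide', 'marathon-training', 'tax-filing-tips']
```

Nothing in this ranking compares words: the tax page drops to the bottom only because its vector points in a different direction from the query's.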

The New Reality: Fragmented Visibility

These days, people are finding information across multiple platforms, including traditional search engines, AI chatbots, voice assistants, Reddit, YouTube, and more. This fragmentation means visibility now depends on your presence across all these platforms, and that visibility is increasingly multimodal.

“Your answers are going to come from everywhere,” Zach said.

The sweet spot for relevance lives in the overlap between classic SEO and conversational search, where context, knowledge quality, and synthesis converge.

Zach breaks this foundation down into five main pillars of technical SEO for modern search.

The Five Pillars of Technical SEO in the AI Era

Classic technical SEO practices are still important today regardless of what new AI advancements come along. Here are five main areas on which to focus your efforts:

- Site Information Architecture and Page Structure

- Your site’s structure is more critical than ever. Hierarchical organization and topic clustering help AI systems understand the relationships between your content. Think of your site as a knowledge graph that machines need to navigate.

- Internal linking serves as semantic glue, defining relationships between pieces of content. Use descriptive anchor text (no more “click here” or “learn more”) and implement horizontal linking, meaning links between sibling pages on related topics, to show how those topics relate to each other.

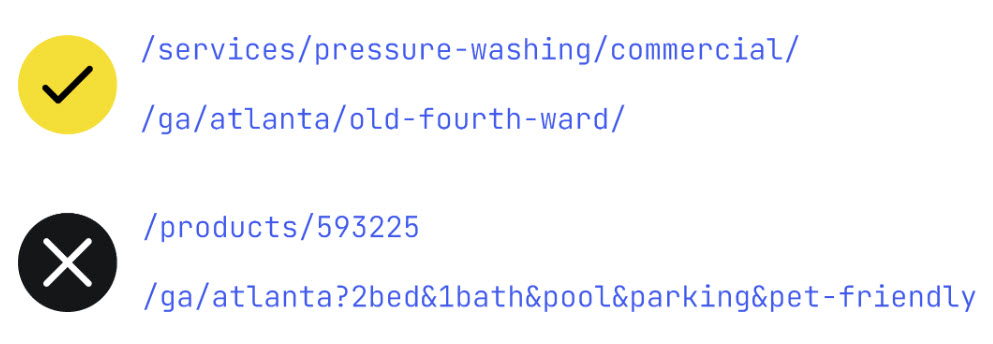

- Even in 2025, URL structures still matter. They provide semantic signals that help both humans and machines understand content hierarchy and relationships.

“If you want your content to perform well in the modern retrieval systems, you need to structure it in a way that’s readable and understandable primarily for your readers but also for machine-readable search engine crawlers.”

- Zach Chahalis
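Generic anchor text is easy to catch automatically. This rough sketch uses Python’s standard-library html.parser to collect each link’s href and anchor text and flag generic phrases. The GENERIC_ANCHORS set and the sample HTML are hypothetical, and a real audit would run over crawled, rendered pages rather than a hard-coded string.

```python
from html.parser import HTMLParser
from typing import Optional

# Hypothetical set of generic phrases to flag; extend it for your own site.
GENERIC_ANCHORS = {"click here", "learn more", "read more", "here", "more"}


class AnchorTextAuditor(HTMLParser):
    """Collects (href, anchor text) pairs for every <a> element."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: Optional[str] = None  # set while inside an <a> element
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        # Text inside nested tags (e.g. <a><span>...</span></a>) is captured too.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text))
            self._href = None

    def generic_links(self) -> list[tuple[str, str]]:
        """Links whose anchor text is one of the generic phrases."""
        return [(href, text) for href, text in self.links if text.lower() in GENERIC_ANCHORS]


html = """
<p>Read our <a href="/guides/technical-seo/">technical SEO guide</a>.</p>
<p>For schema tips, <a href="/blog/schema/">click here</a>.</p>
"""
auditor = AnchorTextAuditor()
auditor.feed(html)
print(auditor.generic_links())  # [('/blog/schema/', 'click here')]
```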

- Entity Mapping and Structured Data

LLMs can and do use structured data as part of the RAG pipeline. JSON-LD and Schema.org markup provide the foundation for helping AI systems understand the entities in your content. This means implementing comprehensive structured data for the who, what, where, and why:

- Organizations and brands

- People and authors

- Products and services

- Events and locations

- Articles and content types

Structured data gives deeper context to your content and helps AI systems accurately synthesize and present your information. If it’s missing or inconsistent, your brand’s representation in AI systems is in jeopardy.

JSON-LD is the best fit for this: because it’s machine-readable, self-contained, and separate from the main HTML, it’s ideal for LLM and vector parsing, and crawlers can extract it in one pass (unlike inline microdata or RDFa).

- Use @graph to connect related entities (Organization → WebSite → WebPage → Product/Service/FAQ).

- Include @id values to create unique identifiers, as these act like “anchors” in the global semantic web.

- Keep markup consistent across templates (same @id for repeated entities).

- Validate with both the Schema.org validator and Google’s Rich Results Test, but note that AI systems may interpret markup beyond Google’s scope, so structure for meaning, not just for rich-result features.
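Putting those practices together, a minimal @graph might look like the Python-generated sketch below. The domain, brand, and service are hypothetical placeholders, and the “page URL plus #fragment” @id convention is a common pattern rather than a requirement. The dangling_refs helper is a hypothetical consistency check: it only confirms that every @id reference points at a node defined in the same graph, which catches one kind of template drift but does not replace the validators mentioned above.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": f"{SITE}/#organization",
            "name": "Example Co",  # hypothetical brand
            "url": f"{SITE}/",
        },
        {
            "@type": "WebSite",
            "@id": f"{SITE}/#website",
            "url": f"{SITE}/",
            "publisher": {"@id": f"{SITE}/#organization"},
        },
        {
            "@type": "WebPage",
            "@id": f"{SITE}/services/audits/#webpage",
            "url": f"{SITE}/services/audits/",
            "isPartOf": {"@id": f"{SITE}/#website"},
            "about": {"@id": f"{SITE}/services/audits/#service"},
        },
        {
            "@type": "Service",
            "@id": f"{SITE}/services/audits/#service",
            "name": "Technical SEO Audit",  # hypothetical service
            "provider": {"@id": f"{SITE}/#organization"},
        },
    ],
}


def dangling_refs(doc: dict) -> set[str]:
    """Return @id references that point at no node defined in the same @graph.

    Only inspects direct property values of each node (not nested objects or lists).
    """
    defined = {node["@id"] for node in doc["@graph"]}
    referenced = {
        value["@id"]
        for node in doc["@graph"]
        for value in node.values()
        if isinstance(value, dict) and set(value) == {"@id"}
    }
    return referenced - defined


print(dangling_refs(graph))  # set()
# Payload for a <script type="application/ld+json"> tag in the page <head>.
print(json.dumps(graph, indent=2))
```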

- Crawlability: Opening the Doors to AI

AI crawlers need efficient access to your content. Robots.txt files still dictate what traditional search engine crawlers can access, and they now do the same for AI crawlers, so you need to strike a balance between brand control and privacy on one side and discoverability and inclusion in AI search on the other.
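One low-effort way to keep an eye on that balance is to test your robots.txt against the user agents you care about. This sketch uses Python’s standard-library urllib.robotparser on a hypothetical robots.txt that keeps OpenAI’s GPTBot out of a members-only section; against a live site you would call set_url() and read() instead of parse().

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keeps GPTBot out of /members/ while
# leaving the rest of the site open to every crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /members/

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check each (crawler, path) combination we care about.
results = {
    (agent, path): parser.can_fetch(agent, f"https://www.example.com{path}")
    for agent in ("Googlebot", "GPTBot")
    for path in ("/blog/technical-seo/", "/members/reports/")
}
for (agent, path), allowed in results.items():
    print(f"{agent:<10} {path:<22} {'allowed' if allowed else 'blocked'}")
```

Python’s parser applies the first matching rule in file order, whereas Google documents longest-match precedence, so treat this as a quick sanity check rather than a definitive verdict on how every crawler will read the file.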

Several technical factors can hinder AI’s ability to discover and understand your site:

- Basic hygiene matters: Properly configured robots.txt files and CDN settings ensure crawlers can access your content. Monitor these regularly, as misconfigurations can completely block AI systems.

- Canonicalization and hreflang: Poor implementation can fracture your authority and cause fragmentation in embedding graphs (the way AI systems map and store content representations).

- Crawl depth and budget: AI crawlers prioritize efficiency. Endless pagination without a clear site hierarchy creates excessive depth that AI systems may simply abandon. If pages don’t link to each other effectively, you’re making it harder for AI to understand your content’s relationships.
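Crawl depth is easy to measure once you have an internal-link graph, for example from a site crawler export. The sketch below runs a breadth-first search from the homepage to find each page’s minimum click depth; the link graph and the MAX_DEPTH threshold are hypothetical, chosen to show how a paginated blog archive can push an older post five clicks deep.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search over internal links: fewest clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: an old post reachable only via pagination.
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/audits/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/page/4/"],
    "/blog/page/4/": ["/blog/old-post/"],
}

MAX_DEPTH = 3  # hypothetical threshold; choose one that suits your site
depths = click_depths(site)
print({page: d for page, d in depths.items() if d > MAX_DEPTH})
# {'/blog/page/4/': 4, '/blog/old-post/': 5}
```

Linking the old post from a related category or hub page would pull it back within the threshold without changing the pagination.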

Well-structured XML sitemaps signal freshness and content priority, so proper use of <lastmod> is key. AI crawlers prioritize internal linking, but sitemaps reinforce canonical confidence and crawl depth.
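A minimal sketch of generating a sitemap with <lastmod> values, using Python’s standard-library xml.etree.ElementTree. The URLs and dates are hypothetical. The assumption built in here is that each date records when the page’s content actually changed: stamping every URL with the build date would make <lastmod> meaningless as a freshness signal.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Build sitemap XML from a mapping of URL -> date the page content last changed."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_changed in sorted(pages.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # W3C Datetime format; a plain YYYY-MM-DD date is valid.
        ET.SubElement(url, "lastmod").text = last_changed.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical URLs and content-change dates.
pages = {
    "https://www.example.com/": date(2025, 5, 1),
    "https://www.example.com/services/audits/": date(2025, 3, 18),
}
print(build_sitemap(pages))
```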

- Rendering: Making JavaScript Content Accessible

If your content is built via JavaScript, AI crawlers may never see it. This is particularly critical because many modern sites rely heavily on client-side rendering. Missing rendered content can mean incomplete or distorted embeddings, and content that AI systems can’t render simply doesn’t exist in their understanding of your site. Consider implementing pre-rendering or hybrid rendering strategies that serve fully rendered content to crawlers while maintaining dynamic experiences for users.

These strategies include:

- Static Rendering (SSG): For evergreen content (articles, service pages) that rarely changes, serve static pre-rendered HTML.

- Server-Side Rendering (SSR): Generate full HTML on the server for dynamic pages; send a complete DOM to crawlers.

- Hybrid Rendering: Render above-the-fold or critical content server-side; render secondary or interactive elements client-side.

- Dynamic Rendering for Bots: For legacy crawlers, detect known bot user-agents (Googlebot, GPTBot, etc.) and serve pre-rendered HTML.
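The dynamic-rendering option boils down to a routing decision on the User-Agent header. This sketch shows only that decision; the BOT_TOKENS list, the two page variants, and the simplified User-Agent strings are hypothetical. User agents are easy to spoof, so production setups typically also verify crawlers (for example, via reverse DNS or published IP ranges), and the pre-rendered HTML should carry the same content users see.

```python
# Hypothetical, non-exhaustive list of crawler user-agent tokens (lowercase).
BOT_TOKENS = ("googlebot", "bingbot", "gptbot")


def is_known_bot(user_agent: str) -> bool:
    """True if the User-Agent header contains one of the known crawler tokens."""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_response(user_agent: str, prerendered_html: str, app_shell_html: str) -> str:
    """Dynamic rendering: crawlers get pre-rendered HTML, browsers get the JavaScript app shell."""
    return prerendered_html if is_known_bot(user_agent) else app_shell_html


# Hypothetical page variants: same content, different delivery.
PRERENDERED = "<html><body><h1>Technical SEO Audits</h1><p>Full page content.</p></body></html>"
APP_SHELL = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'

# Simplified, illustrative User-Agent strings.
crawler_ua = "Mozilla/5.0 (compatible; GPTBot/1.0)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"

print(choose_response(crawler_ua, PRERENDERED, APP_SHELL) == PRERENDERED)  # True
print(choose_response(browser_ua, PRERENDERED, APP_SHELL) == APP_SHELL)  # True
```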

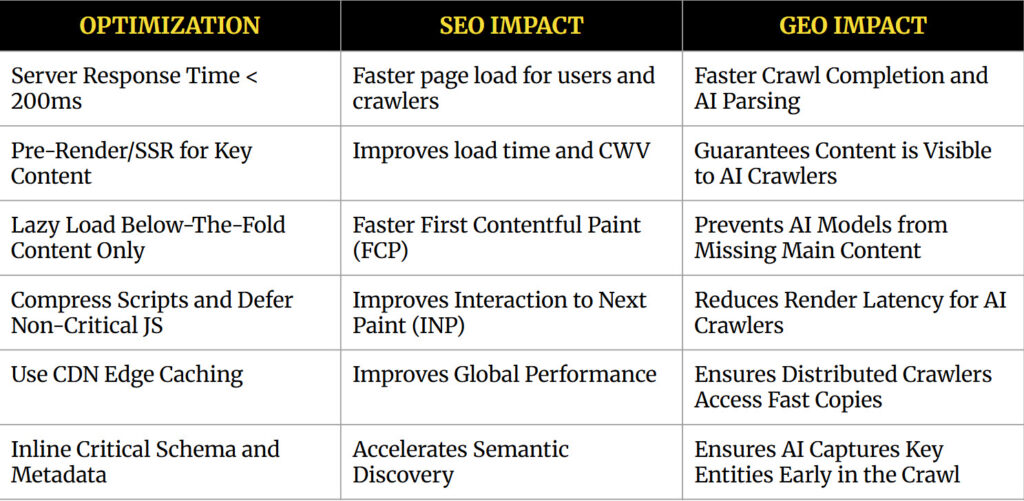

- Performance: Speed as a Foundation

Site speed remains a critical factor, not just for user experience but for AI crawlers’ efficiency calculations. Slow-loading interactive elements can hurt you in several ways:

- User experience suffers when elements take too long to load.

- Crawlers may abandon slow-loading pages.

- AI systems may deprioritize sluggish sites in their retrieval processes.

Reliable website performance ensures that both humans and machines can efficiently access and process your content.

Writing for Synthesis

Perhaps the most important change in the AI era is how we approach content creation itself. Writing for synthesis means structuring content in a way that’s readable and understandable not just by humans, but by systems that need to extract, understand, and recombine information.

This includes:

- Clear hierarchical organization with meaningful headings

- Well-defined entities and concepts

- Logical flow that machines can follow

- Structured data that provides explicit context

- Internal linking that shows relationships
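The first item on that list can be spot-checked in code. This sketch, using Python’s standard-library html.parser on a hypothetical page, records heading levels in order and flags places where the outline jumps down more than one level (say, an h2 followed directly by an h4), which can muddy the hierarchy machines try to follow.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Records the level (1-6) of every h1-h6 start tag in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def skipped_levels(html: str) -> list[tuple[int, int]]:
    """Return (previous, next) level pairs where the outline jumps down by more than one level."""
    outline = HeadingOutline()
    outline.feed(html)
    levels = outline.levels
    return [(prev, nxt) for prev, nxt in zip(levels, levels[1:]) if nxt > prev + 1]


# Hypothetical page outline with one skipped level (h2 -> h4).
page = """
<h1>Technical SEO in the AI Era</h1>
<h2>Crawlability</h2>
<h4>Robots.txt</h4>
<h2>Rendering</h2>
"""
print(skipped_levels(page))  # [(2, 4)]
```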

Writing content using semantic triples (the subject, predicate, object format), sharing proprietary data and stats, and keeping sentences short, punchy, and specific can all help with retrieval.
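To make the subject-predicate-object idea concrete, here is a toy sketch that stores facts as triples and renders each one as a short, declarative sentence. The Triple type and to_sentence helper are hypothetical illustrations, not something any search system requires, and the sample facts restate points from this article.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str  # "object" would shadow the Python builtin


def to_sentence(t: Triple) -> str:
    """Render one triple as a short, self-contained sentence."""
    return f"{t.subject} {t.predicate} {t.obj}."


facts = [
    Triple("Retrieval-Augmented Generation", "grounds", "LLM answers in retrieved documents"),
    Triple("Reciprocal rank fusion", "merges", "lexical and semantic rankings"),
    Triple("JSON-LD", "describes", "the entities on a page"),
]
for fact in facts:
    print(to_sentence(fact))
```

Each sentence names its subject explicitly, so a passage lifted out of context by a retriever still says who did what to whom.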

The Path Forward

The future of search is still up in the air, but search has already changed strategically in ways that much of the industry, and its software, doesn’t yet support. The fundamentals of technical SEO haven’t disappeared, of course. The same practices that helped search engines understand your content now help AI systems retrieve, process, and synthesize it.

The difference is that mediocre technical implementation that might have worked in purely lexical search can cause problems in hybrid retrieval systems.

AI search requires attention to these technical foundations, combined with content that’s genuinely valuable and synthesizable. As search continues to evolve across platforms and modalities, CMOs need to ensure that their sites make it easy for AI systems to understand, extract, and accurately represent their content.

The future of search is here, and it’s built on evolved technical foundations. By mastering these five pillars, you’ll position your content for visibility in both traditional and AI-powered search experiences.

With agentic search becoming more prevalent, the brands that focus on engineering relevance are going to define AI search.

“Winning in this realm means creating content that resonates with building a persona-driven, audience-first perspective,” Zach said.

Check out the other two articles in this series based on our webinars with Sitebulb: