When we talk about “attribution” in traditional SEO, we usually mean a clean, direct connection: a user typed a keyword, saw your page in the results, clicked through, and maybe converted. We have logs, analytics, and Search Console to validate that flow. Generative AI search surfaces break that connection in a fundamental way. The visible query from the user is just the spark. The actual retrieval process that builds the answer happens behind the scenes, and it’s rarely a one-to-one reflection of what the user typed.

What you see is the final synthesis: a tight block of text, often with citations. What you don’t see is the scaffolding: the fan-out of synthetic queries, the entity lookups, and the ranking layers that select the sources. Generative Engine Optimization lives and dies in that invisible space. If you can’t map it, you’re optimizing blind.

This chapter is about making the invisible visible. It’s about reverse engineering the fan-out process, predicting it before it happens, and mapping the entities that act as retrieval anchors. It’s about integrating those maps into a live, evolving attribution framework so you can align your content with the way AI search actually finds and selects it. And it’s about doing this in a way that’s forward-looking, not just reacting to today’s retrieval models but anticipating how they’ll evolve.

In Google’s AI Overview and AI Mode, the typed query is just a seed. As described in multiple Google patents, the system decomposes that seed into components: named entities, task intent, temporal scope, and contextual modifiers. Each of these components can be expanded, substituted, or enriched to form new subqueries.

For example, take the user query: “best laptops for video editing 2025.” Lexically, it’s a short phrase. Internally, Google’s retrieval system might parse it as:

From here, the expansion model generates variations:

Some of these may hit the main web index; others may query structured data in the Knowledge Graph; others might pull from YouTube transcripts or product specs in Google Shopping. This is query fan-out: distributing the original intent across multiple retrieval runs to cover it more comprehensively.

GEO Strategy Note: Treat every typed query as just the surface representation of intent. For GEO targeting, build your process to anticipate and optimize for the synthetic subqueries AI systems will generate. This means creating content that satisfies both the lexical query and its decomposed entity/intent variants.

We can’t directly see Google’s internal subqueries, but we can infer them by “perturbing the input” and watching the output. This is where query perturbation testing comes in.

You take a base query — say “best laptops for video editing 2025” — and systematically vary it:

For each variation, you check whether an AI Overview triggers and, if so, which URLs are cited. Over time, you’ll see that some URLs persist across many variations. These are strong candidates for being retrieved via a shared synthetic subquery.

By mapping URL overlap across dozens of variations, you start to reconstruct clusters of latent intents. Each cluster represents a likely branch in the fan-out tree. You won’t capture the entire tree because Google’s model is dynamic, and freshness signals can shift branches, but even partial reconstruction is valuable.

GEO Strategy Note: Use query perturbation testing as your primary diagnostic tool for uncovering latent retrieval branches. Consistent co-citations across variations signal high-priority retrieval sets to target for coverage.

When you reverse engineer AI Overview fan-out behavior, the process is not about making loose inferences, it’s about applying a repeatable, controlled framework that can expose the latent “query space” behind the surface result.

You begin with a set of high-value seed queries in your domain. These should be the kinds of queries where winning an AI Overview (or AI Mode) citation has real commercial or reputational value in the transactional, decision-stage, or high-visibility informational searches. Each seed acts as an anchor point from which you will probe the AI system’s expansion patterns.

For each seed, you generate 10–50 controlled variations. AI Overviews use fewer queries while AI Mode uses more. These variations are crafted to isolate different potential intents that an AI might surface with modifiers, synonyms, entity swaps, or long-tail expansions while keeping enough semantic overlap to be considered the “same search” from a business perspective. This gives you a test bed that mimics the LLM’s own fan-out process, but under your control.

As you run each variation, you log every AI Overview trigger along with all citation URLs. This is your raw retrieval footprint, an observable slice of the AI’s candidate document pool for that cluster of intent. The power of this approach is in the next step: computing co-citation frequency. By counting how often two URLs appear together across different query variations, you reveal which sources the AI is grouping in its internal relevance graph.

Once you have these frequencies, you plot them as a network graph. In this visual, nodes represent citation URLs and edges represent co-citation relationships, weighted by frequency. Clusters begin to emerge naturally. One might correspond to “benchmark data” subqueries; another to “software compatibility” subqueries; another to “best practices” advice. Each cluster is an empirical signal of how the AI is segmenting the topic space and which pages it sees as interchangeable or complementary for a given sub-intent.

The URLs within a cluster are your observable retrieval set for that branch of the fan-out. In GEO terms, this tells you two critical things:

By iterating this process, you can build a multi-level retrieval map that is far more precise than traditional SERP competitor lists because it reflects the AI’s own probabilistic grouping, not just static rank order.

GEO Strategy Note: Co-citation frequency analysis transforms scattered AI Overview citations into structured competitive intelligence. Clusters reveal both the content themes AI trusts and the “citation neighborhoods” you need to penetrate or dominate.

Reverse engineering is inherently reactive. To get ahead, you simulate.

Start with keyword graph data from Semrush or Ahrefs. These tools cluster queries by SERP co-occurrence, which approximates human search behavior. Then, feed your seed query into a large language model with an instruction like: “Generate every query a retrieval system might run to fully answer this question, including low-volume and zero-volume variants.”

The output will include queries you won’t find in keyword tools like the long-tail and entity-linked variations that are precisely the sort of synthetic queries AI search generates.

Run entity extraction (e.g., spaCy, Google NLP API) on the combined set. This gives you the entity dimension of the fan-out space. Now you have two layers: lexical queries and their resolved entities.

Test these predicted queries in live search. If AI Overviews trigger and cite your domain, you’ve validated part of your predicted fan-out. If not, you’ve identified retrieval gaps to target.

GEO Strategy Note: Simulation closes the gap between reactive and proactive GEO. Combining keyword graph data with LLM-predicted expansions lets you pre-build content for queries the AI hasn’t yet surfaced but is statistically likely to generate.

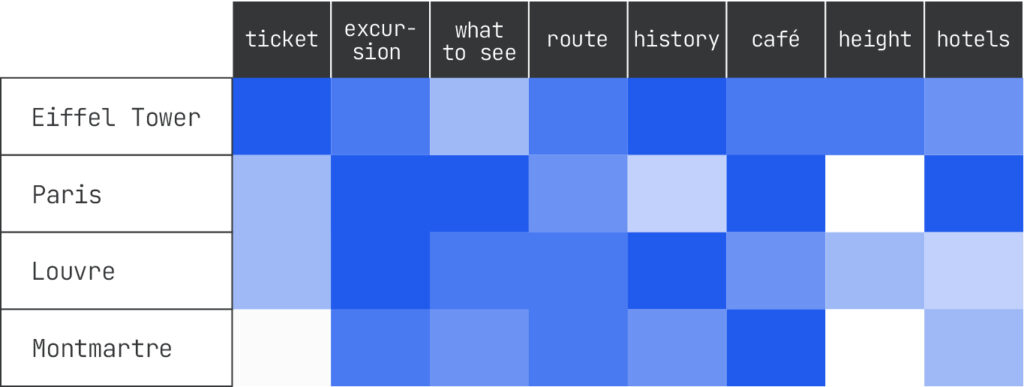

In GEO, entities matter more than exact keywords because entities are how AI search systems structure knowledge. Two lexically different queries can resolve to the same entity set and therefore hit the same retrieval branch.

Example:

Different words, but both resolve to [Zion National Park] and [Permit Requirements]. If your content is entity-linked in the Knowledge Graph to those nodes, it’s eligible for both retrievals including synthetic ones you’d never predict.

This is why entity attribution is critical. It tells you what the system understood, not just what the user typed. Building an entity–query co-occurrence matrix for your topics reveals which entities drive the most retrieval eligibility.

GEO Strategy Note: Anchor your GEO strategy in entity optimization, not just keyword targeting. Aligning content with the right Knowledge Graph nodes increases eligibility for a wide spectrum of explicit and synthetic queries, including ones with zero observable search volume.

To map entities effectively, you need three capabilities:

Once you’ve built this mapping, you can layer it onto your fan-out reconstruction. Now you can see, for example, that the “benchmark data” cluster in your fan-out map is anchored on [Laptop Computers], [CPU Model X], and [4K Video Editing]. You can then verify whether your content is robustly linked to those entities.

ProTip: Check out WordLift’s suite of tools to support this work.

GEO Strategy Note: Build and maintain an entity–query co-occurrence matrix for your vertical. This lets you target content development toward the highest-value entities and verify that your pages are structurally and semantically linked to them.

The real power comes when you merge query and entity attribution into a unified dataset. Every observed citation is logged with:

Over time, this dataset shows:

This is your GEO control panel. When you plan new content, you cross-check it against this map:

GEO Strategy Note: Your attribution map is your control panel. Merging query and entity tracking into a single dataset ensures content decisions are grounded in the AI’s actual retrieval logic, not just surface-level SERP data.

Manually reconstructing fan-out is valuable, but it doesn’t scale. Automation turns it into a living system.

You can build a crawler-agent hybrid that:

Run this daily or weekly, and you’ll see shifts in fan-out and entity dominance in near real-time.

GEO Strategy Note: This is forward-looking. As Google and other AI search systems evolve, the specific subqueries may change, but the need to continuously map them will not. Building an attribution engine now future-proofs your GEO program.

Generative search systems are getting better at multi-hop reasoning chaining together multiple retrievals where the second depends on the first’s output. This means fan-out won’t just be a flat set of subqueries; it’ll be a layered graph where some nodes are intermediate reasoning steps. Entities will be the glue between those layers.

For GEO, this raises the stakes. You’ll need to cover not just primary entities, but the secondary and tertiary ones that reasoning steps connect to. Predictive simulation will have to model these hops, not just one-off expansions.

GEO Strategy Note: Begin tracking “bridge entities” nodes that connect different clusters in your fan-out map. These often become disproportionately important as models adopt deeper reasoning.

In closing, mastering query and entity attribution in a generative search context is about shifting from static, keyword-based thinking to a dynamic, systems-level view of how AI actually retrieves, ranks, and cites content. The fan-out tree is not a mystery once you learn to observe its shadows, reconstruct its branches, and predict its growth. Entities form the connective tissue of this system, determining eligibility across explicit and synthetic queries alike. By merging query and entity maps into a living attribution model and automating its upkeep you turn an unpredictable, probabilistic environment into one you can measure, influence, and ultimately shape.

If your brand isn’t being retrieved, synthesized, and cited in AI Overviews, AI Mode, ChatGPT, or Perplexity, you’re missing from the decisions that matter. Relevance Engineering structures content for clarity, optimizes for retrieval, and measures real impact. Content Resonance turns that visibility into lasting connection.

Schedule a call with iPullRank to own the conversations that drive your market.

The appendix includes everything you need to operationalize the ideas in this manual, downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.

//.eBook

The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.

Prefer to read in chunks? We’ll send the AI Search Manual as an email series—complete with extra commentary, fresh examples, and early access to new tools. Stay sharp and stay ahead, one email at a time.

Sign up for the Rank Report — the weekly iPullRank newsletter. We unpack industry news, updates, and best practices in the world of SEO, content, and generative AI.

iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.

We’ll break it up and send it straight to your inbox along with all of the great insights, real-world examples, and early access to new tools we’re testing. It’s the easiest way to keep up without blocking off your whole afternoon.