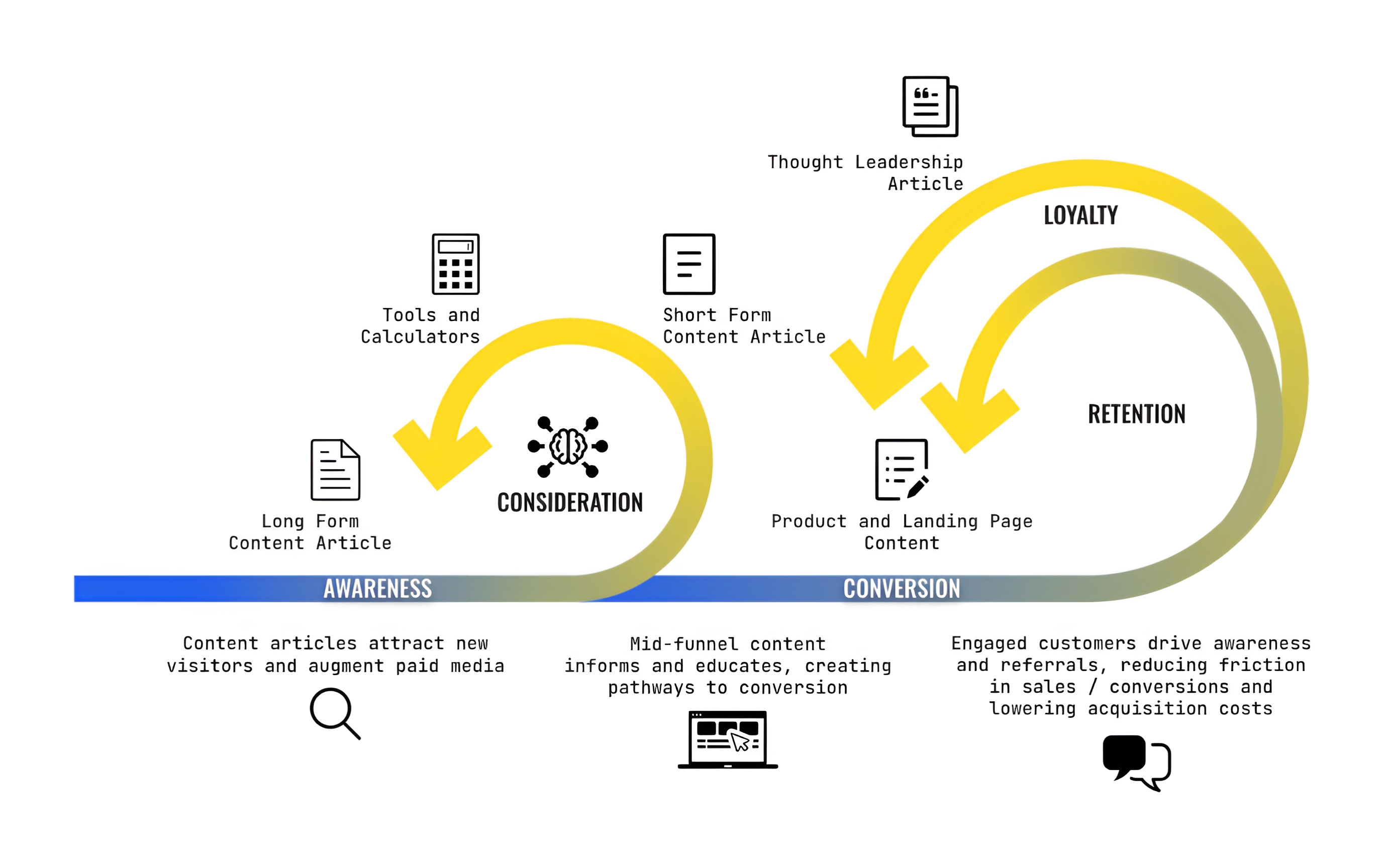

AI search is reshaping how content is discovered, interpreted, and delivered across nearly every major platform. Whether it’s AI Overviews in Google Search, conversational responses in ChatGPT, or synthesized answers in Perplexity, the question content creators and businesses now face is how to show up in all of these places.

The goal now is to understand how information is being retrieved and rebuilt, and then to write and structure content accordingly.

One of the most important steps toward visibility in AI search is ensuring that your content is both accessible and relevant. AI systems rely on structured, crawlable inputs to understand what your content is about and whether it should be included in summaries or responses.

If your content can’t be found, it won’t be surfaced. This remains true whether the user is typing a query into Google Search or being served an AI-generated summary.

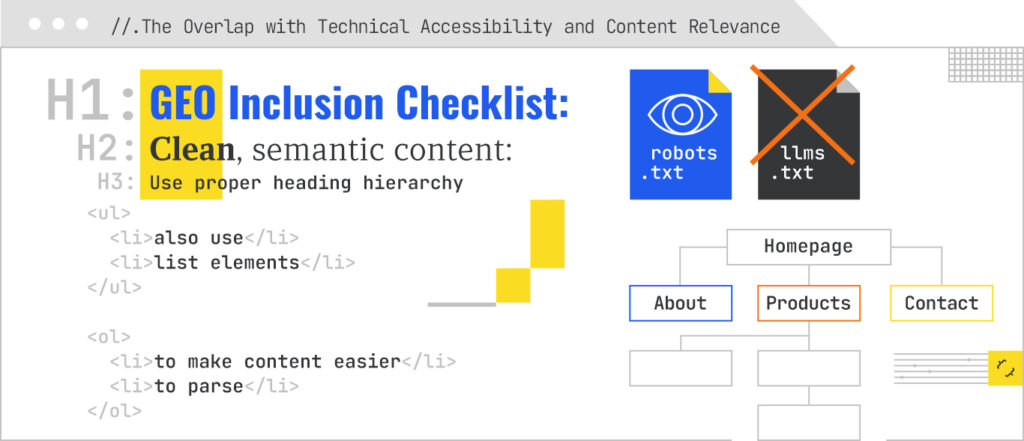

Make sure the following technical elements are in place:

There are also some content-focused recommendations:

It’s not all technical tips and tricks, though. The best way to ensure visibility in LLMs is to create content that resonates. In a time when the internet is overflowing with content, yours must be scroll-stopping.

Add the R.E.A.L. tenets to your content strategy:

Making your content technically accessible, semantically clear, and relevant gives you the best chance of being included in AI-generated answers. The overlap between SEO and GEO is real, and getting the technical foundation right is one of the most concrete steps you can take today.

Generative engines validate, compare, and often cite content in their summaries. In that process, concrete facts, figures, dates, and measurable data points become critical signals. AI systems prioritize information they can verify, extract, and repurpose with minimal ambiguity. So, the more specific your content is, the more likely it is to be selected, synthesized, and surfaced.

What to Focus On:

Measurable data helps AI systems evaluate whether content can be trusted so they can summarize more confidently, align facts across multiple sources, and identify your content as a reliable contribution to an answer.

Generative systems have moved beyond simple keyword matching and rely more heavily on structured signals to interpret and reassemble information. Schema.org markup, meta descriptions, and other structural hints give AI models the clarity they need to understand the meaning, relationships, and utility of your content at the page level and within individual elements.

These signals not only improve discoverability but also enhance your inclusion in generative outputs, such as AI Overviews, AI Mode, ChatGPT, Copilot, or Perplexity.
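The snippet below is a minimal sketch of one such structural hint. It builds Schema.org `Article` markup as JSON-LD using only Python’s standard `json` module. The headline, organization name, date, and description are hypothetical placeholders. On a live page, the printed `<script>` tag would be placed in the page’s HTML.

```python
import json

def article_jsonld(headline: str, author: str, date_published: str, description: str) -> str:
    """Build a minimal Schema.org Article object and serialize it as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Organization", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "description": description,
    }
    return json.dumps(data, indent=2)

# Hypothetical example values, for illustration only.
markup = article_jsonld(
    headline="How AI Overviews Select Sources",
    author="Example Co.",
    date_published="2025-01-15",
    description="A short explainer on how generative search retrieves and cites content.",
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Richer types (`FAQPage`, `Product`, `HowTo`) follow the same pattern with different properties.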

What to Focus On:

For queries involving troubleshooting, product comparisons, lived experiences, or niche use cases, user-generated content (UGC) and forum discussions are often prioritized by AI systems. Generative models value this type of content because it reflects authentic, diverse, and situational insights that can’t always be found in more polished corporate content.

This trend has become more visible with Google’s Hidden Gems update and the increasing appearance of Reddit and Quora excerpts in AI Overviews and conversational results.

What to Focus On:

AI engines are increasingly looking beyond corporate blogs and product pages to answer real human questions. For GEO, this means content strategy should account for where and how your audience is sharing insights.

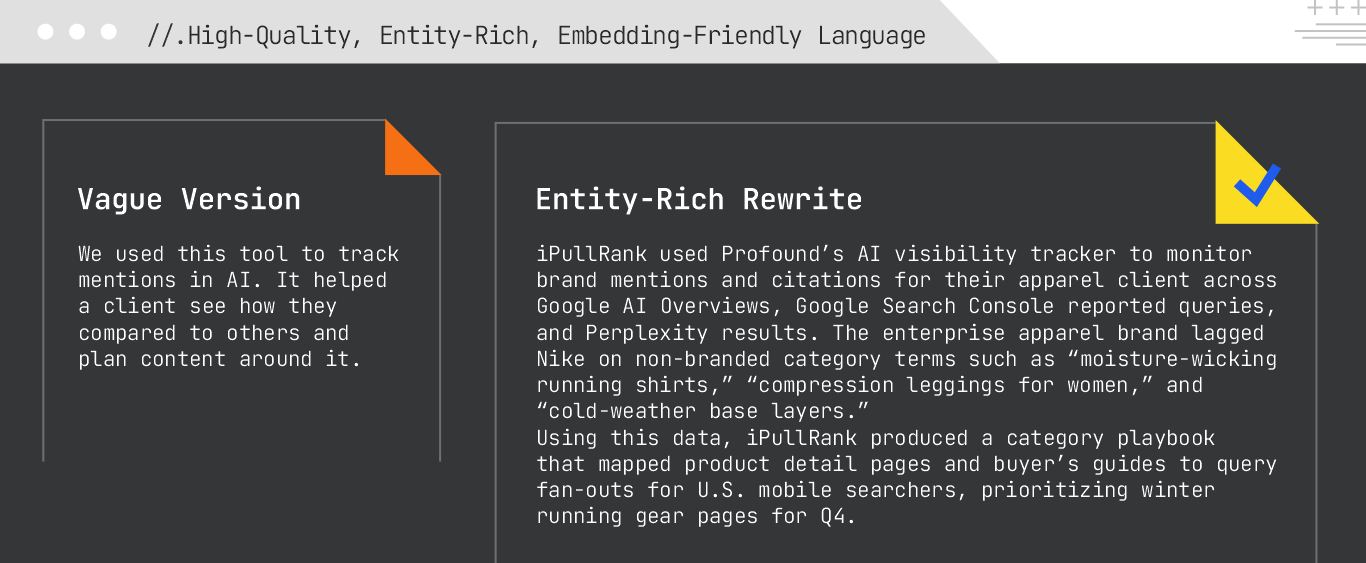

In traditional SEO, content relevance often meant placing the right keywords in the right spots. But in the context of GEO, keyword density matters less than clarity, relevance, and how well your content maps into vector space.

Generative AI systems work by encoding language into vector representations called embeddings. These embeddings capture the relationships between concepts, not just words. The clearer and more semantically rich your content, the easier it is for AI models to parse, understand, and reuse it.

What to Focus On:

Clarity fuels visibility in generative systems. Your goal is to write in a way that helps the model make accurate, meaningful associations between topics. This makes your content more retrievable and more useful as part of the AI’s response.

It doesn’t stop there, though. Considerations when creating quality content can go much deeper.

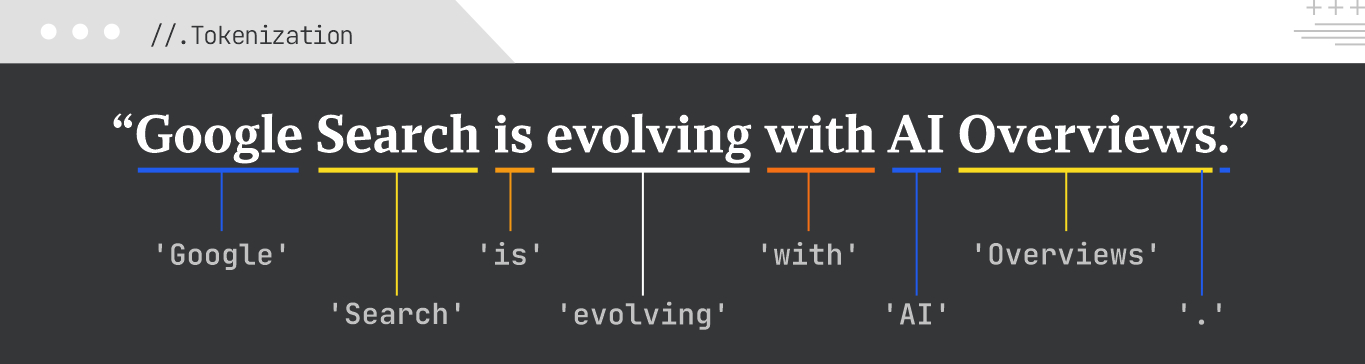

Tokenization is the process of splitting text into smaller units called ‘tokens’. These tokens can be words, subwords, or even characters. It’s a foundational step in most natural language processing (NLP) tasks, crucial for analyzing text, calculating keyword density, and preparing input for models like Bidirectional Encoder Representations from Transformers (BERT).

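Text tokenization also makes it practical to process large amounts of data, because downstream steps operate on tokens rather than raw strings. Separately, the term is used in data security, where tokenization protects sensitive data by replacing it with non-sensitive tokens. That is a different technique from the text tokenization described here.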

Example:

For the sentence, “Google Search is evolving with AI Overviews.”, tokenization might produce tokens such as [‘Google’, ‘Search’, ‘is’, ‘evolving’, ‘with’, ‘AI’, ‘Overviews’, ‘.’]
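A minimal regex-based word tokenizer reproduces the example above. This is only a sketch. Models such as BERT use learned subword vocabularies (WordPiece), so their tokens can differ from this word-level split.

```python
import re

# A token is a run of word characters (allowing internal hyphens or apostrophes),
# or any single character that is neither a word character nor whitespace (punctuation).
TOKEN_PATTERN = re.compile(r"\w+(?:[-']\w+)*|[^\w\s]")

def tokenize(text: str) -> list:
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)

print(tokenize("Google Search is evolving with AI Overviews."))
# ['Google', 'Search', 'is', 'evolving', 'with', 'AI', 'Overviews', '.']
```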

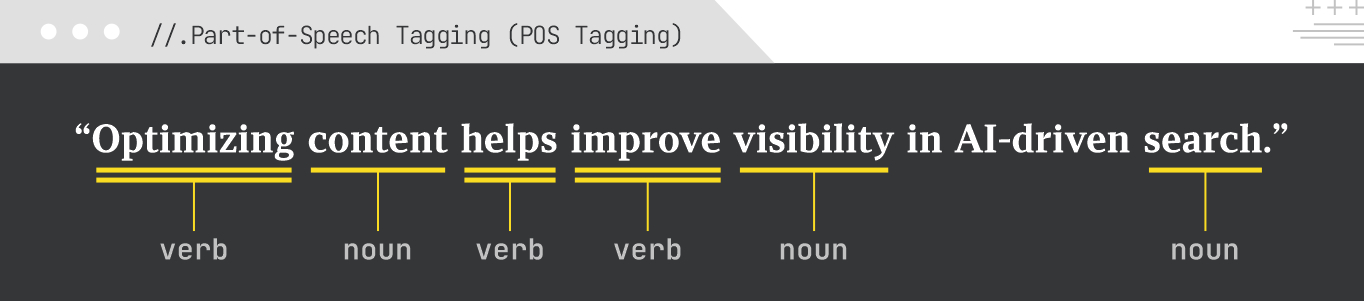

Part-of-Speech (POS) tagging assigns a grammatical category (e.g., noun, verb, adjective, adverb) to each word in a sentence. This helps with understanding the syntactic structure of the text, which is fundamental for more complex NLP tasks like dependency parsing, named entity recognition, and information extraction.

It also helps disambiguate words with several possible meanings (for example, “search” as a noun or as a verb) and exposes a sentence’s grammatical structure, which contributes to better semantic understanding for AI search.

Example:

For the sentence, “Optimizing content helps improve visibility in AI-driven search.”, POS tagging might label “Optimizing” as a verb, “content” as a noun, “helps” as a verb, “improve” as a verb, “visibility” as a noun, and so on.
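The sketch below shows the shape of POS-tagger output, using Universal POS tag names (NOUN, VERB, ADJ, ADP, PUNCT). The small lexicon is hypothetical. Because the lookup ignores context, it cannot tell “search” the noun from “search” the verb. Resolving that kind of ambiguity is exactly what trained, context-aware taggers do.

```python
# Hypothetical mini-lexicon using Universal POS tags; real taggers learn from annotated corpora.
LEXICON = {
    "optimizing": "VERB", "content": "NOUN", "helps": "VERB", "improve": "VERB",
    "visibility": "NOUN", "in": "ADP", "ai-driven": "ADJ", "search": "NOUN", ".": "PUNCT",
}

def pos_tag(tokens, default="NOUN"):
    """Tag each token by lexicon lookup, falling back to `default` for unknown words."""
    return [(token, LEXICON.get(token.lower(), default)) for token in tokens]

sentence = ["Optimizing", "content", "helps", "improve", "visibility", "in", "AI-driven", "search", "."]
for word, tag in pos_tag(sentence):
    print(f"{word:<12}{tag}")
```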

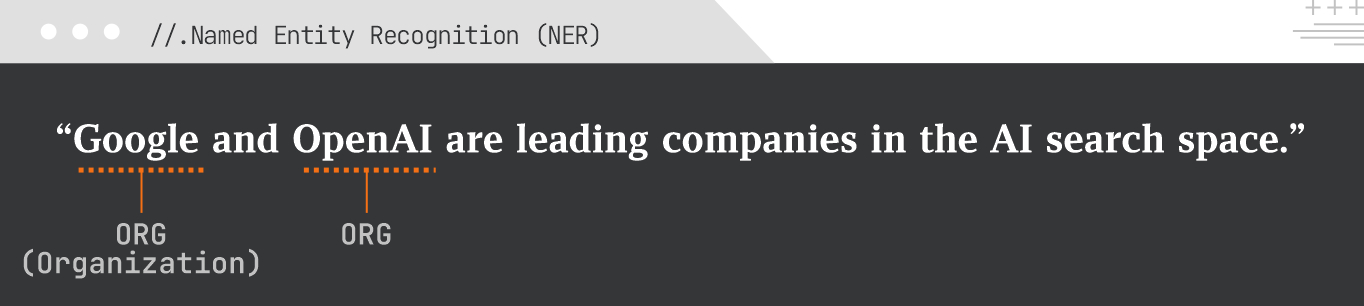

Named Entity Recognition (NER) is the task of identifying and classifying named entities (like persons, organizations, locations, dates, etc.) in text. NER is crucial for semantic search, knowledge graph construction, content categorization, and understanding key concepts mentioned in a document.

NER is a big part of chatbots, sentiment analysis tools and search engines. It’s often used in industries such as healthcare, finance, human resources, customer support, and higher education.

Example:

In the sentence, “Google and OpenAI are leading companies in the AI search space.”, NER would label both “Google” and “OpenAI” as ORG (organization).
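A gazetteer (dictionary) lookup is the simplest form of NER, and it is enough to reproduce the example above. The entity list below is a hypothetical assumption. Statistical and neural NER models go further: they use surrounding context to recognize names they have never seen before.

```python
import re

# Hypothetical gazetteer: known names mapped to entity labels.
GAZETTEER = {"Google": "ORG", "OpenAI": "ORG", "AI Overviews": "PRODUCT"}

def find_entities(text):
    """Return (name, label, start offset) for every gazetteer name found as a whole word or phrase."""
    found = []
    for name, label in GAZETTEER.items():
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((name, label, match.start()))
    return sorted(found, key=lambda item: item[2])

print(find_entities("Google and OpenAI are leading companies in the AI search space."))
# [('Google', 'ORG', 0), ('OpenAI', 'ORG', 11)]
```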

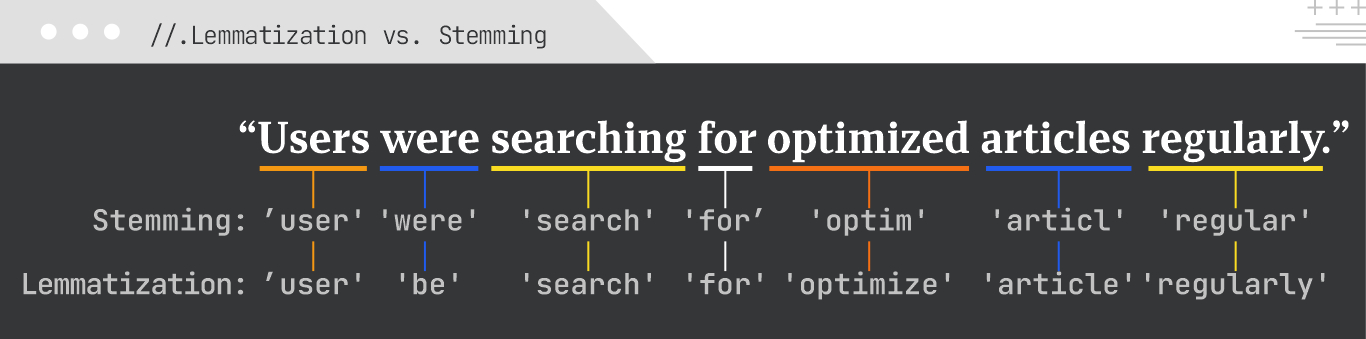

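Both lemmatization and stemming reduce words to a base or root form, but they work differently. Stemming strips affixes using heuristic rules and can produce truncated non-words (the Porter stemmer turns “studies” into “studi”). Lemmatization uses vocabulary and morphological analysis to return the dictionary form (“studies” becomes “study”). Both help information retrieval systems and deep learning models identify related words in tasks such as text classification, clustering, and indexing.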

Lemmatization is generally preferred for semantic tasks in SEO and AI search because it retains meaning better, leading to more accurate keyword matching and understanding.

Example:

For the sentence, “Users were searching for optimized articles regularly.”
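The sketch below contrasts a crude suffix-stripping stemmer with a dictionary-based lemmatizer on this sentence, to make the difference concrete. Both are hypothetical toys. Production systems use algorithms such as the Porter stemmer and lexicon-backed lemmatizers such as WordNet’s. Note the differences in output: the stemmer leaves “optimiz” and keeps “were” unchanged, while the lemmatizer returns “optimize” and “be”.

```python
SUFFIXES = ("ing", "ed", "ly", "s")  # checked in order; the first match is stripped

def crude_stem(word):
    """Strip the first matching suffix, keeping at least three characters (may yield non-words)."""
    w = word.lower()
    for suffix in SUFFIXES:
        if w.endswith(suffix) and len(w) - len(suffix) >= 3:
            return w[: -len(suffix)]
    return w

# Hypothetical lemma dictionary; a real lemmatizer also uses the word's part of speech.
LEMMAS = {"users": "user", "were": "be", "searching": "search",
          "optimized": "optimize", "articles": "article", "regularly": "regularly"}

def lemmatize(word):
    """Look up the dictionary form, falling back to the lowercased word."""
    return LEMMAS.get(word.lower(), word.lower())

words = "Users were searching for optimized articles regularly".split()
for w in words:
    print(f"{w:<10} stem={crude_stem(w):<10} lemma={lemmatize(w)}")
```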

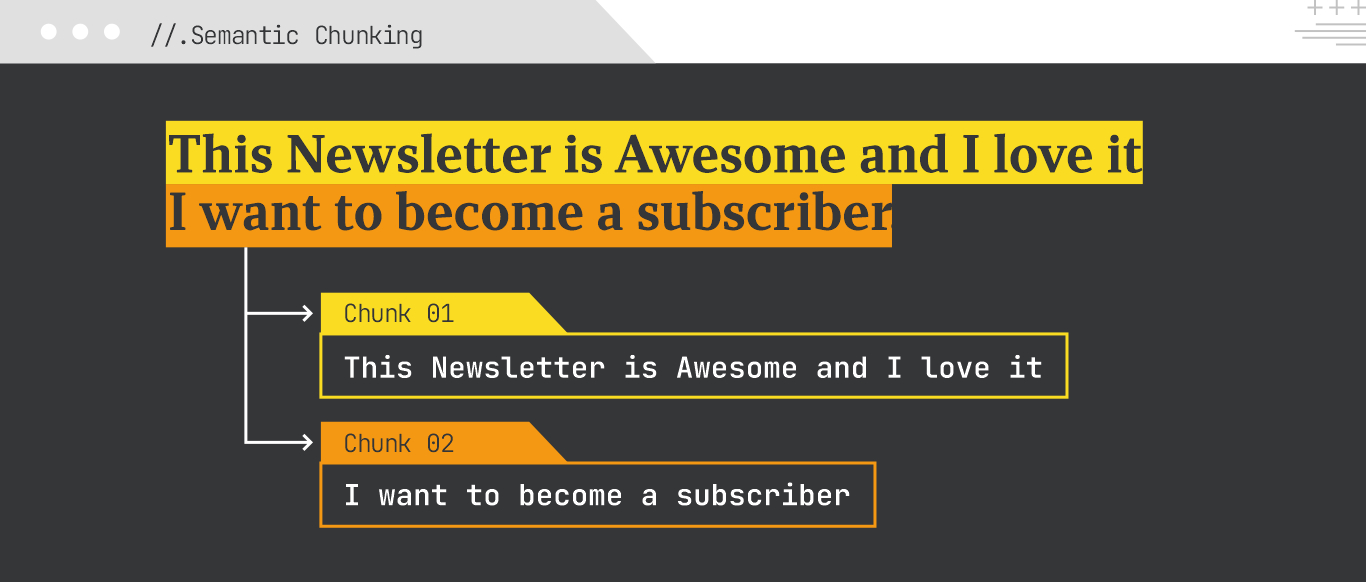

Generative engines pull sections (a sentence, paragraph, or list) and use them to construct answers. If your content is buried in a long-form narrative, it may be skipped. If it’s cleanly chunked and self-contained, it becomes far more usable.

To be included in generative responses, your content needs to be divided into clear, self-contained chunks that each express a complete idea on its own. This approach is referred to as semantic chunking.

What to Focus On:

Think of every paragraph, bullet, or table row as a potential answer on its own. Semantic chunking makes your content more extractable, more quotable, and more likely to appear in summaries, featured answers, or conversational results across AI-driven platforms.
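Document structure alone gives a rough approximation of semantic chunking. In the sketch below, each paragraph becomes a chunk, and any paragraph over a word budget is split at sentence boundaries. The 60-word default budget and the regex sentence splitter are assumptions. Embedding-based chunkers go further and split wherever the topic shifts.

```python
import re

def chunk_text(text, max_words=60):
    """Split text into paragraph chunks; break long paragraphs at sentence boundaries."""
    chunks = []
    for paragraph in re.split(r"\n\s*\n", text.strip()):
        sentences = re.split(r"(?<=[.!?])\s+", paragraph.strip())
        current = []
        for sentence in sentences:
            # Close the current chunk if adding this sentence would exceed the word budget.
            if current and len(" ".join(current + [sentence]).split()) > max_words:
                chunks.append(" ".join(current))
                current = []
            current.append(sentence)
        if current:
            chunks.append(" ".join(current))
    return chunks

doc = (
    "Semantic chunking splits content into self-contained units.\n\n"
    "Each chunk covers one idea. It stands alone. It can be quoted."
)
for chunk in chunk_text(doc, max_words=8):
    print("-", chunk)
```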

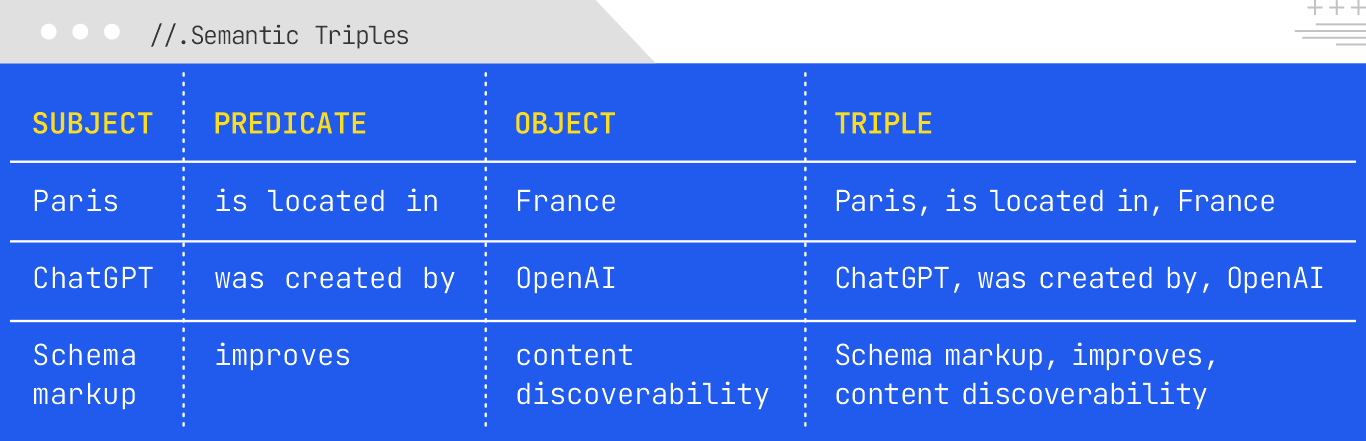

As generative engines get more sophisticated, they rely more on structured relationships between concepts. One of the most effective ways to support this is by writing in semantic triples: simple “Subject–Predicate–Object” phrases that clearly state facts.

Semantic triples help search engines understand context by identifying entities, establishing the connections between them, and building a web of interconnected concepts that carries richer contextual information than keywords alone. These triples are the building blocks of knowledge graphs, which allow AI systems to understand relationships between entities, enabling more intelligent search results, factual verification, and structured data for AI Overviews.

What to Focus On:

AI systems try to understand and reconstruct knowledge from data. When you write using semantic triples, you give models the clearest path to extracting accurate information and improving your content’s usefulness to machines and humans who want fast, scannable information.
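Semantic triples map naturally onto a small in-memory knowledge graph. In the sketch below, the facts are illustrative. `build_graph` and `query` are hypothetical helpers: the first indexes each subject’s outgoing (predicate, object) edges, and the second looks up a fact directly.

```python
from collections import defaultdict

# Illustrative facts written as (subject, predicate, object).
TRIPLES = [
    ("Google", "develops", "Gemini"),
    ("Gemini", "is_a", "large language model"),
    ("Google", "operates", "Google Search"),
    ("Google Search", "features", "AI Overviews"),
]

def build_graph(triples):
    """Index triples by subject: each entity maps to its outgoing (predicate, object) edges."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return graph

def query(graph, subject, predicate):
    """Return every object linked to `subject` through `predicate`."""
    return [obj for pred, obj in graph.get(subject, []) if pred == predicate]

kg = build_graph(TRIPLES)
print(query(kg, "Google", "develops"))          # ['Gemini']
print(query(kg, "Google Search", "features"))   # ['AI Overviews']
```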

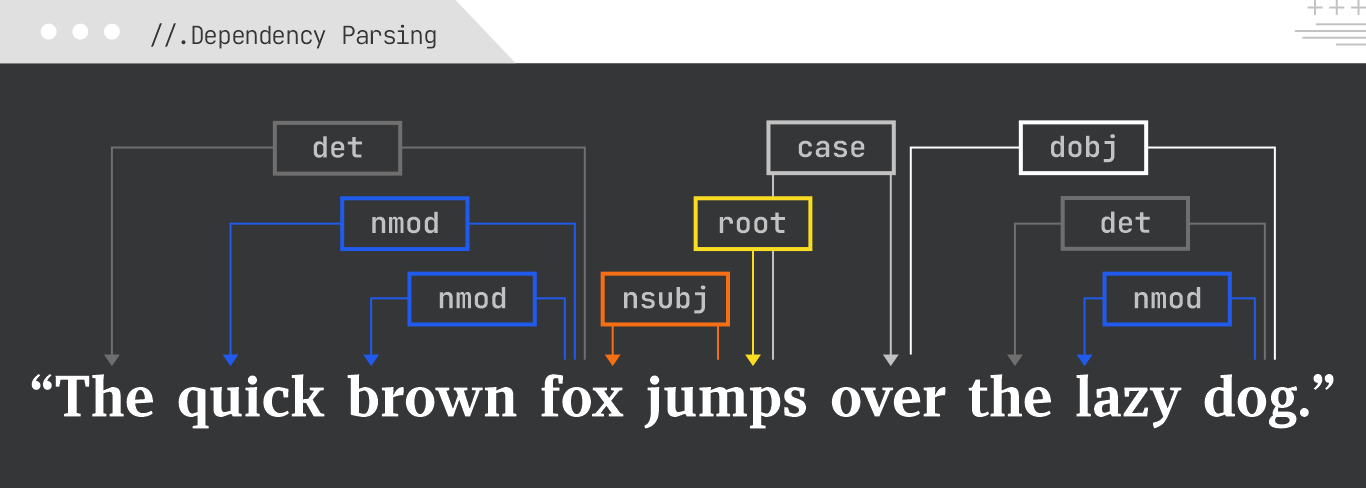

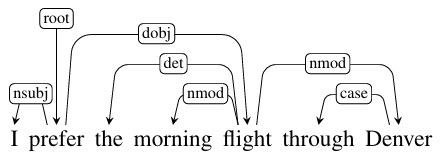

Dependency parsing analyzes the grammatical structure of a sentence by showing how words relate to each other as “heads” and “dependents.” It creates a tree-like structure, revealing the syntactic relationships between words (e.g., which word modifies which and subject-verb relationships). This is crucial for understanding sentence meaning, coreference resolution, and accurate information extraction for AI search.

A dependency always links two words: a head and its dependent (also called the child).

Example:

For the sentence, “The quick brown fox jumps over the lazy dog.”, dependency parsing would show that “quick” and “brown” modify “fox”, “jumps” is the root verb, “fox” is the subject of “jumps”, and “dog” is the object of the preposition “over”.
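Parsers usually represent this tree with one head index and one relation label per token. The sketch below hand-annotates the example sentence using spaCy-style labels; it is not the output of an actual parser run. It then reads the relationships described above back out of that structure.

```python
from typing import Optional

# Hand-written annotation: (token, index of its head, relation label).
# Indices: The=0 quick=1 brown=2 fox=3 jumps=4 over=5 the=6 lazy=7 dog=8 .=9
# As in spaCy, the root token is its own head.
PARSE = [
    ("The", 3, "det"), ("quick", 3, "amod"), ("brown", 3, "amod"),
    ("fox", 4, "nsubj"), ("jumps", 4, "ROOT"), ("over", 4, "prep"),
    ("the", 8, "det"), ("lazy", 8, "amod"), ("dog", 5, "pobj"), (".", 4, "punct"),
]

def dependents(head_index: int, relation: Optional[str] = None) -> list:
    """Return the tokens attached to `head_index`, optionally filtered by relation label."""
    return [
        token
        for i, (token, head, rel) in enumerate(PARSE)
        if head == head_index and i != head_index and (relation is None or rel == relation)
    ]

root = next(i for i, (_, _, rel) in enumerate(PARSE) if rel == "ROOT")
print(PARSE[root][0])             # jumps
print(dependents(3, "amod"))      # ['quick', 'brown']
print(dependents(root, "nsubj"))  # ['fox']
print(dependents(5, "pobj"))      # ['dog']
```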

Stanford University created this diagram to map out the different parts of dependency parsing:

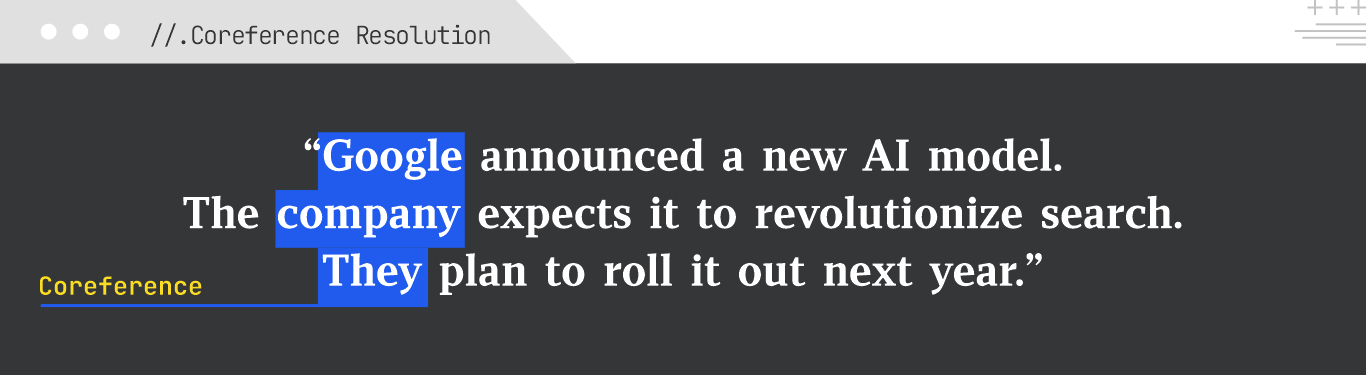

Coreference resolution is the task of identifying all expressions that refer to the same real-world entity in a text. In “John Doe went to the store. He bought milk.”, the expressions “John Doe” and “He” are called mentions (or referring expressions), and the person they point to, John Doe, is the referent. Two or more expressions that refer to the same discourse entity are said to corefer.

Coreference is vital for AI search to understand the full context of a document, know who is being discussed in text, accurately summarize information, and answer complex questions where pronouns or synonyms are used to refer to the same entity.

Example:

In the text, “Google announced a new AI model. The company expects it to revolutionize search. They plan to roll it out next year.”, coreference resolution would link “Google”, “The company”, and “They” to the same entity (Google).
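The sketch below is a deliberately simple rule-based resolver. Each anaphor links to the most recent earlier entity mention whose type it is compatible with. The mention list, the entity types, and the `COMPATIBLE` table are hand-written assumptions. Modern coreference systems learn these decisions from annotated data instead.

```python
from collections import defaultdict

# Assumed compatibility table: which entity types each anaphor can refer to.
COMPATIBLE = {"the company": {"ORG"}, "they": {"ORG"}, "it": {"ORG", "PRODUCT"}}

def resolve(mentions):
    """Link each anaphor (type None) to the most recent earlier entity mention of a compatible type."""
    clusters = defaultdict(list)
    entities = []                       # (text, type) of entity mentions seen so far
    for text, entity_type in mentions:
        if entity_type is not None:     # an entity mention starts (or extends) the cluster for its text
            entities.append((text, entity_type))
            clusters[text].append(text)
            continue
        allowed = COMPATIBLE.get(text.lower(), set())
        for candidate, candidate_type in reversed(entities):
            if candidate_type in allowed:
                clusters[candidate].append(text)
                break
    return dict(clusters)

# Hand-labelled mentions from the example, in order of appearance (None marks an anaphor).
mentions = [("Google", "ORG"), ("a new AI model", "PRODUCT"), ("The company", None),
            ("it", None), ("They", None), ("it", None)]
print(resolve(mentions))
# {'Google': ['Google', 'The company', 'They'], 'a new AI model': ['a new AI model', 'it', 'it']}
```

Here “it” resolves to the model rather than to Google only because the model is the more recent compatible mention. Recency heuristics like this fail on many real texts.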

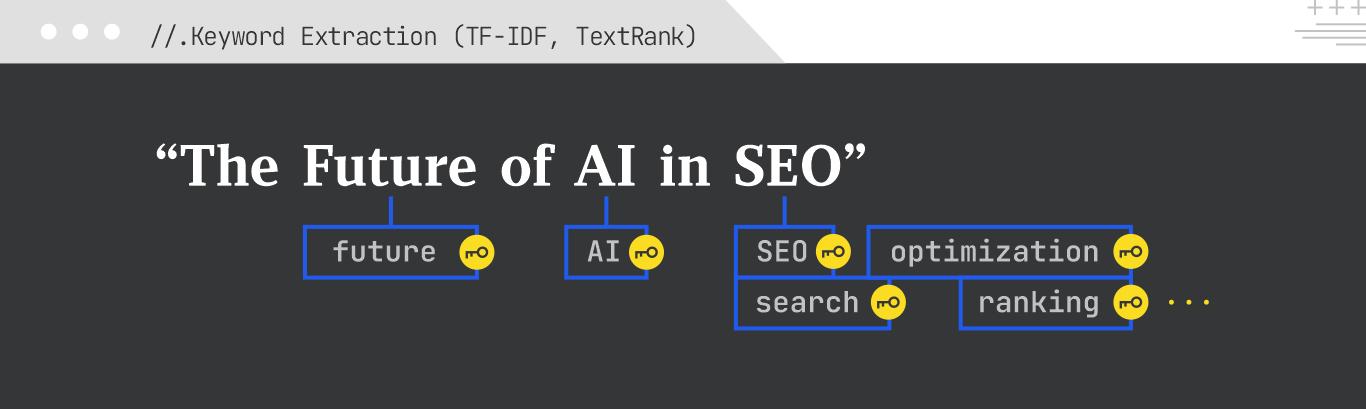

Keyword extraction is an automated information-processing task that identifies the most important words or phrases in a text to provide a summary of the text. Two keyword extraction techniques include:

Both are crucial for understanding the main topics of a document, optimizing content for specific keywords, and informing content strategy for SEO and AI search.

Example:

For a blog post about “The Future of AI in SEO”, keyword extraction might identify terms like “AI”, “SEO”, “future”, “search”, “optimization”, “ranking”, etc.
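TF-IDF is one widely used keyword extraction technique. A term scores highly when it is frequent in the document but rare across the wider corpus. The sketch below assumes a small stopword list and a three-document toy corpus. It uses the smoothed IDF formula that scikit-learn’s `TfidfTransformer` applies by default.

```python
import math
import re
from collections import Counter

STOPWORDS = {"a", "and", "are", "for", "how", "in", "is", "of", "on", "the", "to"}

def terms(text):
    """Lowercase the text, keep alphabetic tokens, and drop stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]

def tfidf_keywords(doc, corpus, top_n=3):
    """Rank a document's terms by TF-IDF against a corpus that includes the document."""
    counts = Counter(terms(doc))
    total = sum(counts.values())
    doc_term_sets = [set(terms(d)) for d in corpus]
    scores = {}
    for term, count in counts.items():
        df = sum(term in s for s in doc_term_sets)            # documents containing the term
        idf = math.log((1 + len(corpus)) / (1 + df)) + 1       # smoothed inverse document frequency
        scores[term] = (count / total) * idf
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]

corpus = [
    "AI is changing SEO. AI search rewards clear content.",
    "Link building remains part of SEO.",
    "Technical SEO audits find crawl issues.",
]
print(tfidf_keywords(corpus[0], corpus))  # ['ai', 'changing', 'clear']
```

“seo” ranks last for the first document because it appears in every document in the corpus.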

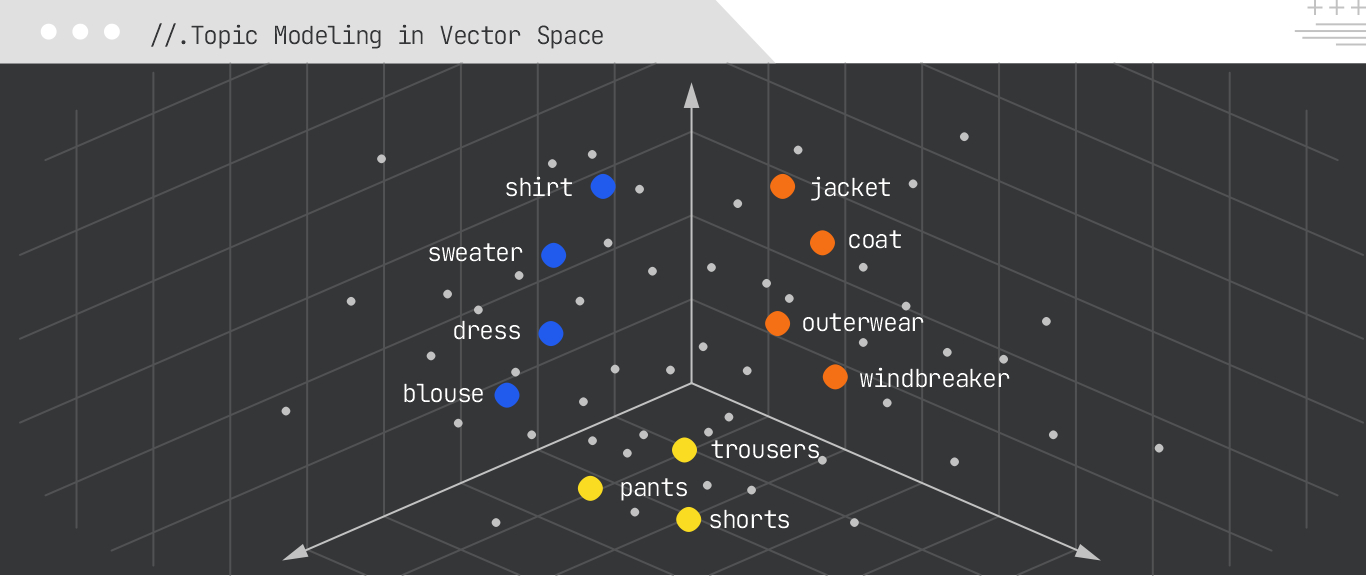

Topic modeling algorithms discover abstract “topics” that occur in a collection of documents. They automatically group words that frequently occur together across documents to surface the underlying themes.

Some of the more popular models include:

Topic modeling is useful for content gap analysis, understanding user intent across queries, grouping similar content, and informing content cluster strategies for SEO.

Example:

Analyzing a set of SEO articles might reveal topics such as “Link Building Strategies,” “On-Page SEO Optimization,” “Technical SEO Audits,” and “Content Marketing for SEO.”
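Latent Dirichlet Allocation (LDA) is one of the best-known topic models. The sketch below is a compact collapsed Gibbs sampler for LDA written with the standard library only. The toy documents, the hyperparameters (`alpha`, `beta`), and the iteration count are assumptions. On a corpus this small, the topics it discovers can vary with the random seed. Libraries such as gensim and scikit-learn provide production implementations.

```python
import random
from collections import Counter

def lda_gibbs(docs, k, alpha=0.1, beta=0.01, iters=200, seed=0, top_n=3):
    """Fit LDA by collapsed Gibbs sampling; return the top words for each of k topics."""
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * k for _ in docs]          # tokens in doc d assigned to topic t
    topic_word = [Counter() for _ in range(k)]    # times word w is assigned to topic t
    topic_total = [0] * k                         # tokens assigned to topic t
    assignments = []
    for d, doc in enumerate(docs):                # random initial topic for every token
        z_d = []
        for w in doc:
            t = rng.randrange(k)
            z_d.append(t)
            doc_topic[d][t] += 1
            topic_word[t][w] += 1
            topic_total[t] += 1
        assignments.append(z_d)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                t = assignments[d][i]             # remove the token's current assignment
                doc_topic[d][t] -= 1
                topic_word[t][w] -= 1
                topic_total[t] -= 1
                weights = [
                    (doc_topic[d][j] + alpha) * (topic_word[j][w] + beta)
                    / (topic_total[j] + vocab_size * beta)
                    for j in range(k)
                ]
                t = rng.choices(range(k), weights=weights)[0]  # resample from the conditional
                assignments[d][i] = t
                doc_topic[d][t] += 1
                topic_word[t][w] += 1
                topic_total[t] += 1
    return [[w for w, c in topic_word[j].most_common() if c > 0][:top_n] for j in range(k)]

docs = [
    "backlinks outreach anchor backlinks outreach".split(),
    "outreach backlinks guest anchor".split(),
    "crawl sitemap robots crawl indexing".split(),
    "sitemap indexing crawl robots".split(),
]
for topic_id, words in enumerate(lda_gibbs(docs, k=2)):
    print(topic_id, words)
```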

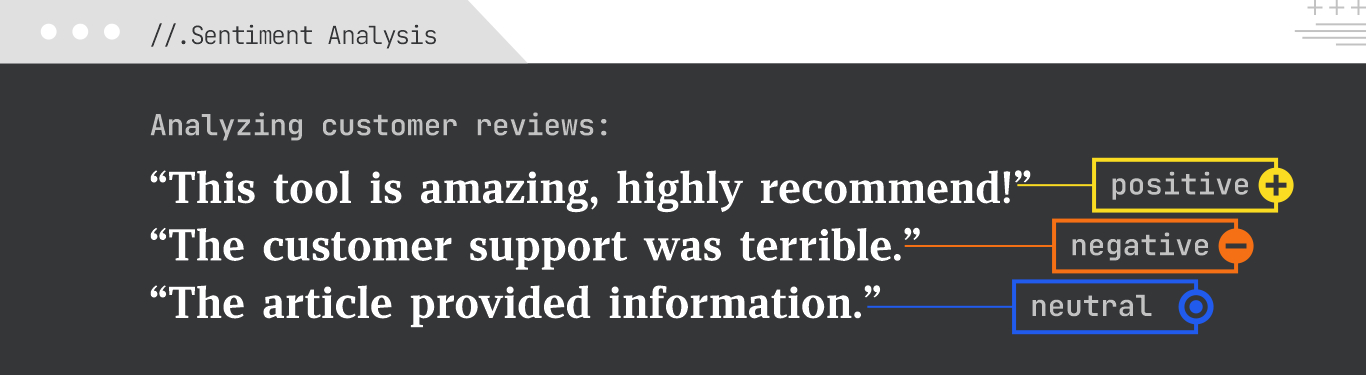

Sentiment analysis (or opinion mining) determines the emotional tone behind a piece of text, be it positive, negative, or neutral.

In SEO, sentiment analysis can be used to analyze customer reviews, social media mentions, and competitor content to gauge brand perception and identify areas for improvement. For AI search, understanding sentiment can influence result ranking and personalized recommendations.

Example:

Analyzing customer reviews:
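A lexicon-based scorer is the simplest form of sentiment analysis. The sketch below applies one to a few customer reviews. The word weights, the one-word negation rule, and the reviews themselves are hypothetical. Production tools rely on large curated lexicons or trained models.

```python
import re

# Hypothetical polarity weights for a handful of words.
POLARITY = {"great": 2, "love": 2, "fast": 1, "helpful": 1, "slow": -1, "broken": -2, "terrible": -2}
NEGATORS = {"not", "never", "no"}

def sentiment(review):
    """Sum word polarities, flipping the sign of a word that follows a negator, and label the total."""
    words = re.findall(r"[a-z']+", review.lower())
    score = 0
    for i, word in enumerate(words):
        value = POLARITY.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            value = -value
        score += value
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return score, label

reviews = [
    "Great product, fast shipping!",
    "The app is slow and the update is broken.",
    "It arrived on Tuesday.",
    "Not helpful at all.",
]
for review in reviews:
    print(sentiment(review), review)
```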

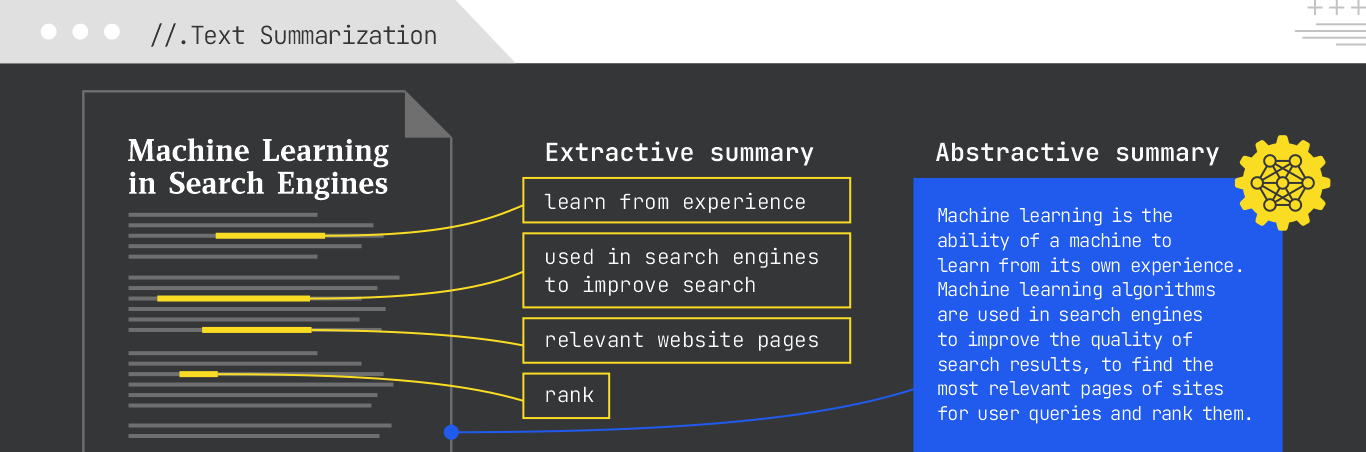

Text summarization condenses longer texts into shorter, coherent versions. It generally takes one of two approaches:

Summarization is critical for generating AI Overviews, creating meta descriptions, summarizing long articles for quick review, and producing concise content snippets for AI search results.

Example:

For a long article about “Machine Learning in Search Engines”, an extractive summary might pick out the main topic sentences, while an abstractive summary might synthesize a new, concise overview.
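A frequency-based extractive summarizer is a classic baseline. It scores each sentence by how common its content words are across the whole text, then keeps the best sentences in their original order. The stopword list, the averaging, and the sample text are assumptions. Abstractive summaries, by contrast, require a generative model.

```python
import re
from collections import Counter

STOPWORDS = {"a", "an", "and", "are", "for", "in", "is", "it", "of", "on", "that", "the", "to", "with"}

def content_words(text):
    """Lowercase alphabetic tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def extractive_summary(text, max_sentences=2):
    """Keep the sentences whose content words are most frequent overall, in original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average rather than sum, so long sentences are not automatically favored.
        return sum(freq[w] for w in words) / (len(words) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return " ".join(sentences[i] for i in sorted(ranked[:max_sentences]))

article = (
    "Search engines use machine learning to rank pages. "
    "Machine learning models learn ranking signals from data. "
    "The office has a nice cafe. "
    "Search quality improves as models learn."
)
print(extractive_summary(article))
# Machine learning models learn ranking signals from data. Search quality improves as models learn.
```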

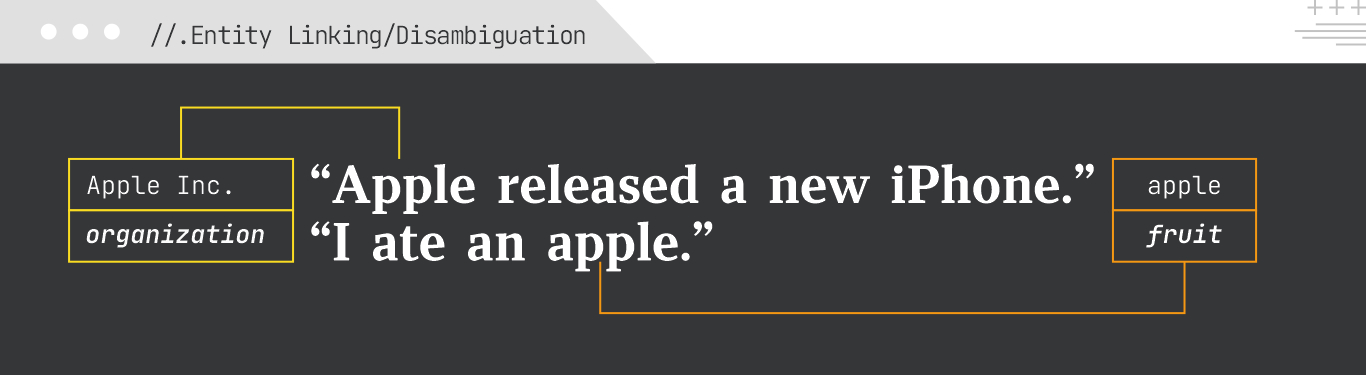

Entity linking (or entity disambiguation) is the process of mapping named entities extracted from text to their unique, unambiguous entries in a knowledge base.

Entity linking is crucial for semantic search, as it ensures that search engines understand the exact entity a query refers to, leading to more precise results and a richer understanding of content for AI systems.

Example:

In the sentence, “Apple released a new iPhone.”, “Apple” would be linked to Apple Inc. (organization). In “I ate an apple.”, “apple” would be linked to apple (fruit).
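A Lesk-style overlap heuristic is one simple way to disambiguate. It compares the words around a mention with descriptive context words stored for each candidate entry. The two-entry knowledge base below is hypothetical. Real systems link against resources such as Wikidata and use learned context representations.

```python
import re
from typing import Optional

# Hypothetical knowledge base: candidate entries for a surface form, each with context words.
KB = {
    "apple": [
        {"label": "Apple Inc. (organization)",
         "context": {"iphone", "released", "company", "mac", "launch"}},
        {"label": "apple (fruit)",
         "context": {"ate", "eat", "fruit", "tree", "juice", "pie"}},
    ],
}

def link_entity(mention: str, sentence: str) -> Optional[str]:
    """Return the label of the candidate whose context words overlap the sentence most."""
    candidates = KB.get(mention.lower(), [])
    if not candidates:
        return None
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    best = max(candidates, key=lambda c: len(c["context"] & words))
    return best["label"]

print(link_entity("Apple", "Apple released a new iPhone."))  # Apple Inc. (organization)
print(link_entity("apple", "I ate an apple."))               # apple (fruit)
```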

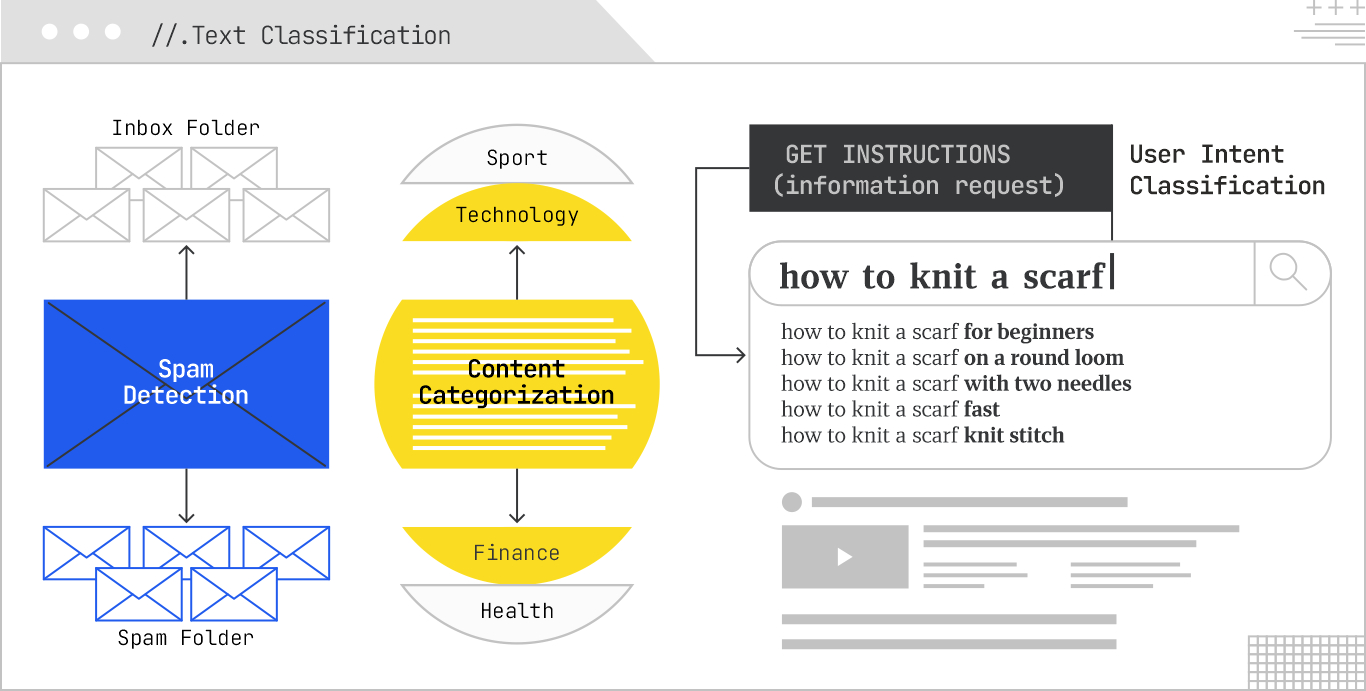

Text classification is the task of assigning predefined categories or labels to pieces of text, allowing computers to interpret and organize large amounts of data. This is highly versatile and can be used for:

In SEO, text classification helps categorize content for better organization, identify low-quality content, and understand the thematic relevance of pages. In AI search, it aids in filtering irrelevant results and structuring information for better retrieval.

Example:
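Multinomial Naive Bayes is a classic, lightweight text classifier. The sketch below trains it on four hypothetical, hand-labelled queries and uses it to sort new text into search-intent categories. The labels and training data are illustrative assumptions; a real classifier needs far more examples.

```python
import math
import re
from collections import Counter, defaultdict

def tokens(text):
    """Lowercase alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)          # label -> word frequencies
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokens(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.label_counts.items():
            counts = self.word_counts[label]
            denominator = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)                 # log prior
            for w in tokens(text):
                score += math.log((counts[w] + 1) / denominator)  # smoothed log likelihood
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical, hand-labelled training queries.
texts = [
    "how to fix a crawl error in search console",
    "step by step guide to fix broken redirects",
    "best running shoes price comparison",
    "buy cheap laptop deals and discounts",
]
labels = ["informational", "informational", "commercial", "commercial"]
model = NaiveBayes().fit(texts, labels)
print(model.predict("how to fix redirects"))  # informational
print(model.predict("cheap laptop price"))    # commercial
```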

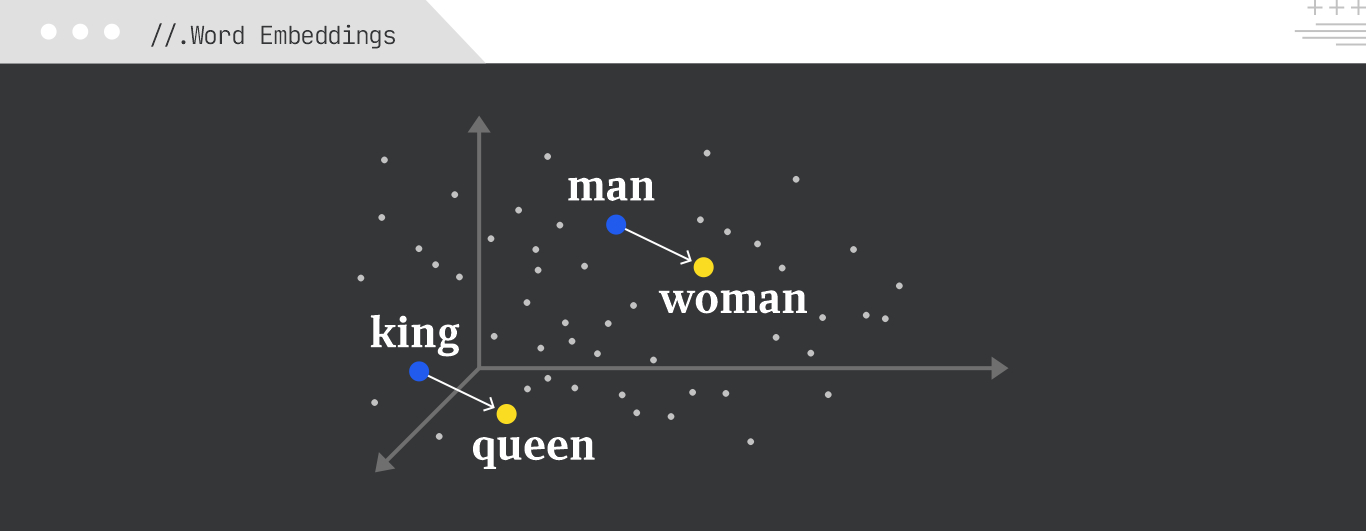

Word embeddings are dense vector representations of words that capture their semantic meaning. Words with similar meanings are located closer to each other in this multi-dimensional space, helping with tasks such as text classification, sentiment analysis, machine translation and more.

Gemini embedding, an advanced embedding model developed by Google DeepMind and built on Gemini, offers a unified approach to generating rich, context-aware embeddings for various text granularities, from words to longer phrases. It generates embeddings for text in over 250 languages, as well as for code.

Gemini embeddings can be used for tasks like classification, similarity search, clustering, ranking, and retrieval.

Example:

The embedding for “king” would be semantically close to “queen” and “prince”, and the vector arithmetic king – man + woman would be close to queen.
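Vector arithmetic and cosine similarity are enough to reproduce the analogy above. The four-dimensional vectors below are hand-made for illustration. Real models, Gemini embedding included, learn vectors with hundreds or thousands of dimensions, and obtaining them means calling the model, which is not shown here.

```python
import math

# Hypothetical vectors; the dimensions loosely mean (royalty, male, female, food).
VECTORS = {
    "king":   [0.9, 0.8, 0.1, 0.0],
    "queen":  [0.9, 0.1, 0.8, 0.0],
    "prince": [0.7, 0.8, 0.1, 0.0],
    "man":    [0.1, 0.8, 0.1, 0.0],
    "woman":  [0.1, 0.1, 0.8, 0.0],
    "apple":  [0.0, 0.0, 0.0, 0.9],
}

def cosine(a, b):
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the three input words."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, VECTORS[w]))

print(analogy("king", "man", "woman"))  # queen
```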

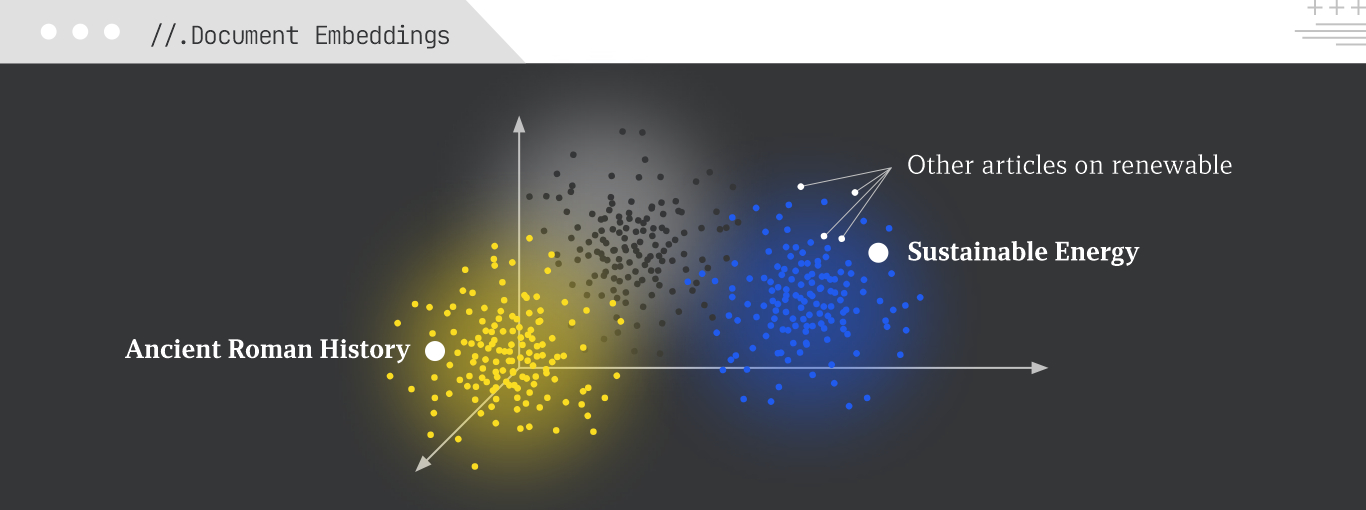

Document embeddings (or sentence embeddings) are vector representations that capture the semantic meaning of entire documents or sentences. They allow for comparing the similarity between larger chunks of text.

Three methods for generating document embeddings are:

Example:

A document embedding for an article about “Sustainable Energy” would be close to embeddings for other articles on renewable resources, but far from articles about “Ancient Roman History.”
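Averaging word vectors (mean pooling) is one simple way to build a document embedding. The sketch below uses hypothetical three-dimensional word vectors to reproduce the “Sustainable Energy” versus “Ancient Roman History” contrast above. In practice, the vectors would come from a trained model, and transformer-based encoders usually produce better document embeddings than plain averaging.

```python
import math
import re

# Hypothetical word vectors; dimensions loosely mean (energy, environment, history).
WORD_VECTORS = {
    "sustainable": [0.5, 1.0, 0.0], "energy": [1.0, 0.3, 0.0], "solar": [0.9, 0.6, 0.0],
    "wind": [0.8, 0.7, 0.0], "renewable": [0.8, 0.9, 0.0], "grid": [0.9, 0.1, 0.0],
    "roman": [0.0, 0.0, 1.0], "senate": [0.0, 0.0, 0.8], "ancient": [0.0, 0.1, 1.0],
    "empire": [0.0, 0.1, 0.9],
}
DIM = 3

def embed_document(text):
    """Mean-pool the vectors of known words; unknown words are skipped."""
    vectors = [WORD_VECTORS[w] for w in re.findall(r"[a-z]+", text.lower()) if w in WORD_VECTORS]
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

energy_a = embed_document("Sustainable energy from solar and wind")
energy_b = embed_document("Renewable energy and the power grid")
history = embed_document("The Roman Senate in the ancient empire")
print(round(cosine(energy_a, energy_b), 2), round(cosine(energy_a, history), 2))
```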

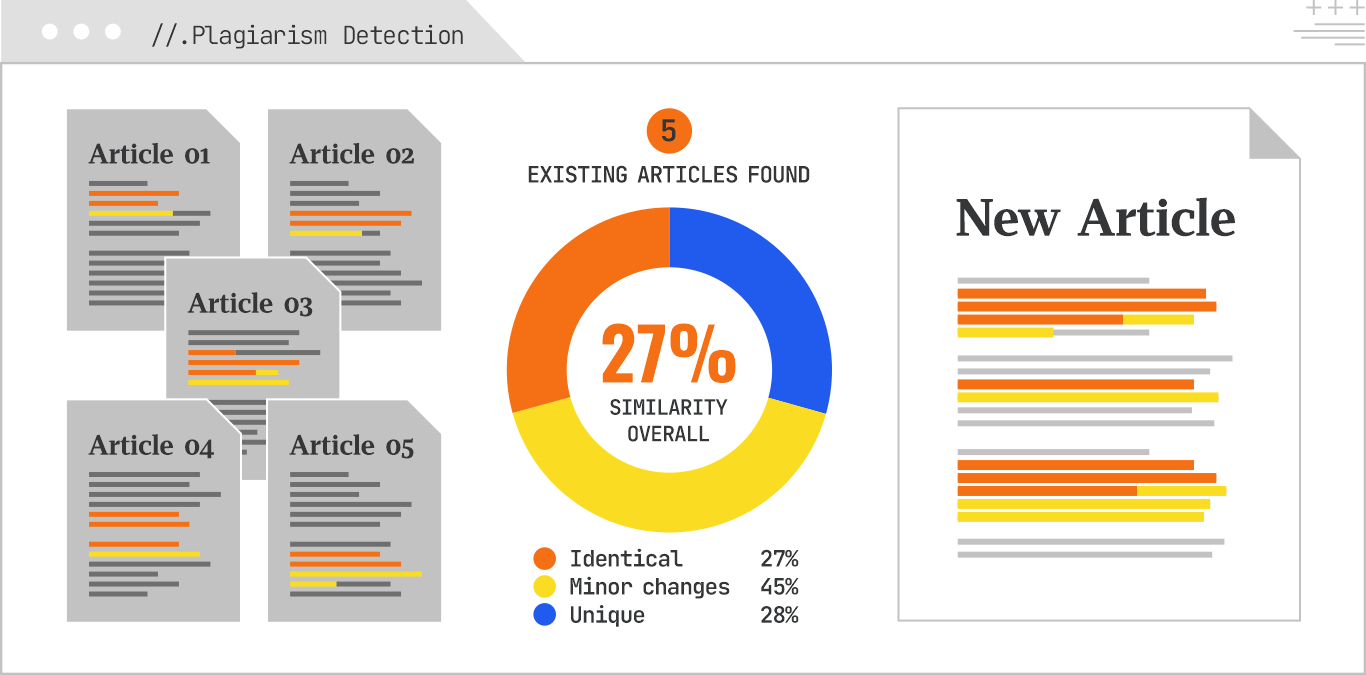

Plagiarism detection identifies instances where text has been copied without proper attribution. Gemini embeddings enable a robust semantic plagiarism check that detects not just exact copies but also closely rephrased content, which is crucial for maintaining content originality and avoiding search engine penalties.

Example:

Comparing a newly generated article against a corpus of existing articles to detect copied phrases or paragraphs based on semantic closeness.
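The approach described above uses embeddings. As a dependency-free stand-in, the sketch below swaps in word-shingle Jaccard similarity, a classic near-duplicate detection technique. It catches copied and lightly edited passages but not heavy paraphrase; for paraphrase, you would compare embedding vectors with cosine similarity instead. The 0.5 threshold and the three-word shingle size are assumptions.

```python
import re

def shingles(text, n=3):
    """Return the set of word n-grams ("shingles") in a text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a, b):
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def flag_copies(candidate, corpus, threshold=0.5, n=3):
    """Return (index, similarity) for corpus documents whose shingle overlap meets the threshold."""
    candidate_shingles = shingles(candidate, n)
    hits = []
    for i, doc in enumerate(corpus):
        similarity = jaccard(candidate_shingles, shingles(doc, n))
        if similarity >= threshold:
            hits.append((i, round(similarity, 2)))
    return hits

corpus = [
    "Semantic search understands the meaning and intent behind a query.",
    "Topic modeling discovers abstract topics in a collection of documents.",
]
new_article = "Semantic search understands the meaning and intent behind every query."
print(flag_copies(new_article, corpus))  # [(0, 0.6)]
```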

Anomaly detection identifies unusual patterns or outliers in data. In NLP for SEO, this can be applied to content quality by detecting:

This helps with proactive identification of potential content issues that could impact SEO performance or indicate a need for review, such as errors, unusual events, or potential fraud.

Example:

A sudden spike in the use of a seemingly irrelevant keyword across multiple articles, or a review with an extreme sentiment score compared to others.
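A z-score test is one simple way to flag the kind of outlier described in the example. The sketch below flags review sentiment scores that sit more than two standard deviations from the mean. The scores and the threshold are hypothetical. On small samples, a robust statistic such as the median absolute deviation is often a safer choice.

```python
import statistics

def zscore_outliers(values, threshold=2.0):
    """Return indices of values more than `threshold` population standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]

# Hypothetical per-review sentiment scores in [-1, 1]; the last one is extreme.
scores = [0.6, 0.5, 0.7, 0.55, 0.65, 0.6, -0.95]
print(zscore_outliers(scores))  # [6]
```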

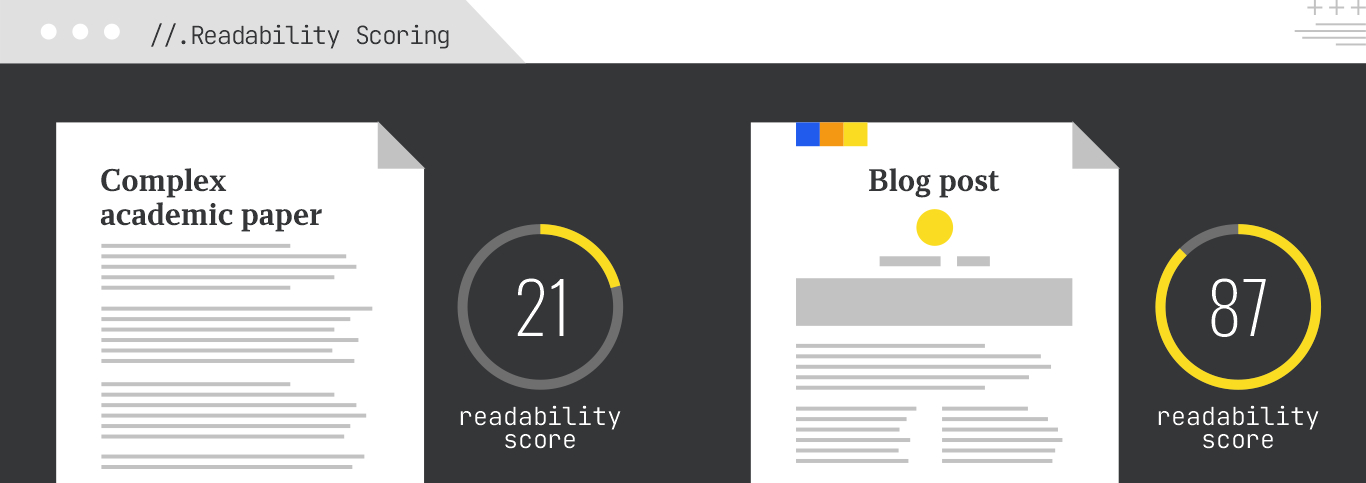

Readability scoring assesses how easy it is to read and understand a text.

In SEO, optimizing for readability improves user experience, reduces bounce rates, and makes content more accessible, which indirectly signals quality to search engines and is a direct factor for AI Overviews.

Readability tests include:

All of these metrics consider factors such as sentence length, word length, and syllable count to determine the approximate reading level of a text or how many years of education a person would need to understand it.

Example:

A complex academic paper would score as difficult to read (a low reading-ease score, or a high grade level on grade-based tests), while a simple blog post would score as easy to read.
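As a worked example, the Flesch Reading Ease and Flesch-Kincaid Grade Level formulas can be computed directly from sentence, word, and syllable counts. The syllable counter below is a rough heuristic (vowel groups minus a silent final “e”), so its scores will differ somewhat from those of dedicated tools.

```python
import re

def count_syllables(word):
    """Approximate syllables as vowel groups, minus a silent final 'e' (a rough heuristic)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def readability(text):
    """Compute Flesch Reading Ease and Flesch-Kincaid Grade Level for a non-empty text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence")
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = sum(count_syllables(w) for w in words) / len(words)
    return {
        "flesch_reading_ease": round(206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word, 1),
        "flesch_kincaid_grade": round(0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59, 1),
    }

print(readability("The cat sat on the mat. It was warm."))
print(readability("Contemporary computational linguistics methodologies necessitate comprehensive evaluation."))
```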

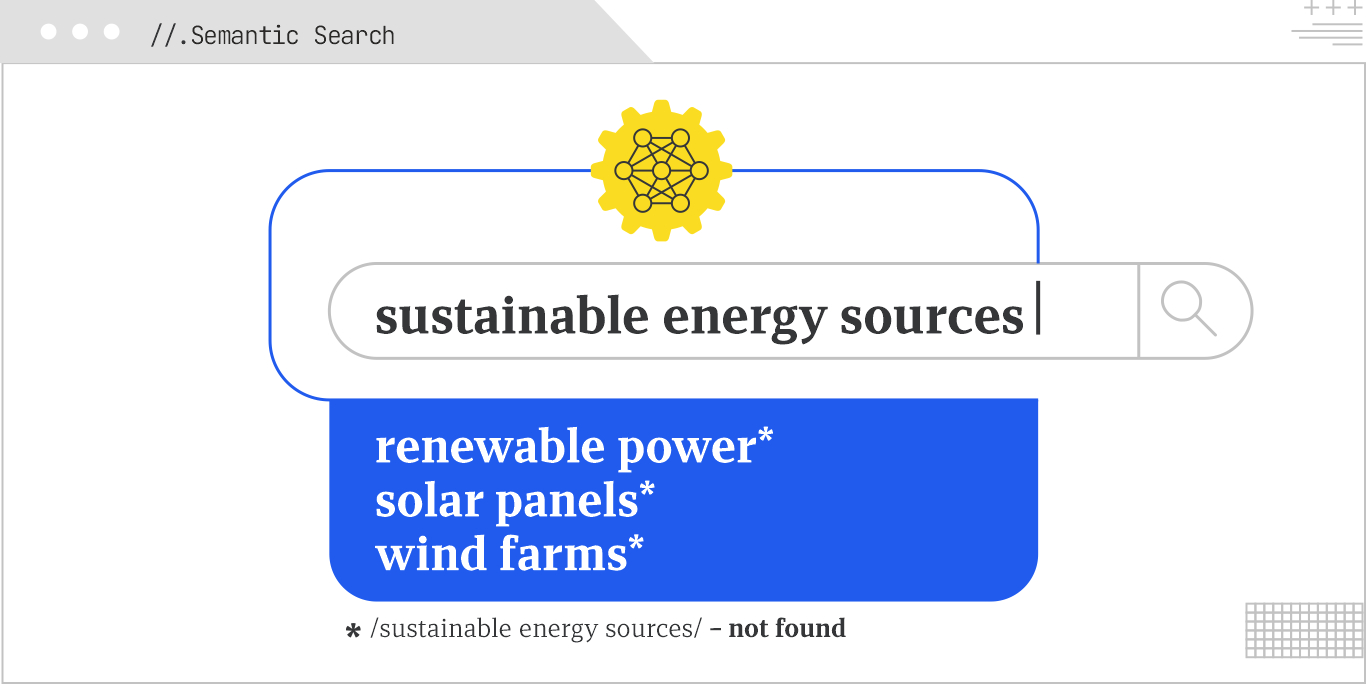

Semantic search understands the meaning and intent behind a query, moving beyond keyword matching. It uses powerful embeddings like Gemini’s to find documents that are semantically similar to the query, even if exact keywords are absent. This is the cornerstone of modern AI-powered search engines, delivering more relevant and nuanced results.

Example:

A search for “sustainable energy sources” would return results about “renewable power,” “solar panels,” or “wind farms,” even if the exact phrase “sustainable energy sources” isn’t present in the documents.
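Under the hood, semantic search ranks documents by vector similarity rather than keyword overlap. The sketch below uses hypothetical, precomputed three-dimensional vectors. A real system would obtain both the document vectors and the query vector from an embedding model such as Gemini’s, and that call is not shown. None of the titles contain the phrase “sustainable energy sources”, yet the energy-related titles still rank above the unrelated one.

```python
import math

# Hypothetical precomputed document embeddings (tiny and hand-made for illustration).
DOC_VECTORS = {
    "Renewable power is growing worldwide": [0.9, 0.8, 0.1],
    "How solar panels convert sunlight": [0.8, 0.9, 0.0],
    "Offshore wind farms explained": [0.85, 0.7, 0.1],
    "A history of Roman aqueducts": [0.1, 0.0, 0.95],
}
# Stands in for the embedding of the query "sustainable energy sources".
QUERY_VECTOR = [0.9, 0.85, 0.05]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def search(query_vector, doc_vectors, top_k=3):
    """Return the top_k document titles ranked by cosine similarity to the query."""
    scored = sorted(((cosine(query_vector, v), title) for title, v in doc_vectors.items()), reverse=True)
    return [title for _, title in scored[:top_k]]

print(search(QUERY_VECTOR, DOC_VECTORS))
```

At scale, the linear scan would typically be replaced by an approximate nearest-neighbor index.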

There is no one-size-fits-all formula for visibility in AI search, but the patterns are becoming clear. Structured data, semantic clarity, specific language, and technical accessibility all play a role in how content is evaluated and used by AI systems. These systems are trained to understand not just words, but meaning, context, and usefulness.

GEO sits at the intersection of technical SEO, content strategy, and natural language processing. Getting it right means knowing how models interpret the web and giving them content they can trust, extract, and reuse.

Creating the right content requires a focus on relevance. Engineering the most relevant content for visibility involves looking at semantic scoring, optimizing passages, and testing vector embeddings. Let’s look more deeply at the process of Relevance Engineering.

If your brand isn’t being retrieved, synthesized, and cited in AI Overviews, AI Mode, ChatGPT, or Perplexity, you’re missing from the decisions that matter. Relevance Engineering structures content for clarity, optimizes for retrieval, and measures real impact. Content Resonance turns that visibility into lasting connection.

The appendix includes everything you need to operationalize the ideas in this manual: downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.

The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.

iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.