When ChatGPT hit 100 million users in just two months, the internet changed overnight. Search, once a static index of blue links, became conversational, generative, and unpredictable.

The promise?

But beneath that shiny interface, something deeper is unraveling. The very systems built to democratize information are quietly destabilizing our sense of truth.

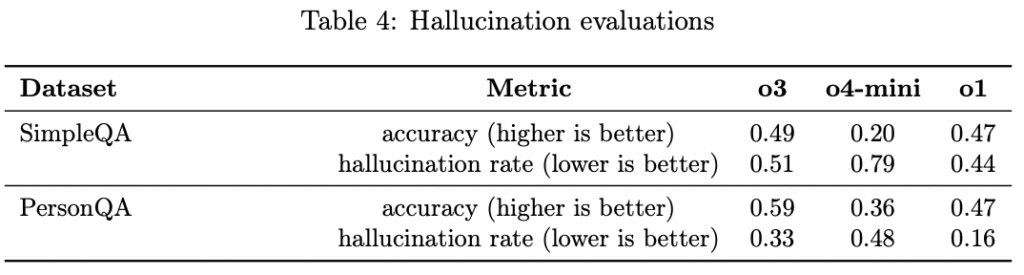

For example, an April 2025 OpenAI report describing its latest models showed a troubling trend. On the PersonQA benchmark, the o3 model hallucinated 33 percent of the time (double the rate of its predecessor, o1), and o4-mini performed even worse, with a 48 percent hallucination rate.

These figures suggest that as models grow in complexity, they’re probably also generating false information at a higher rate. This raises serious concerns about how AI systems are being evaluated and deployed.

The August 2025 launch of OpenAI’s GPT-5 promised significant improvements in this area, with the company claiming up to 80 percent fewer hallucinations compared to previous models. GPT-5’s unified system automatically routes queries between a fast model and a deeper reasoning mode, theoretically allowing it to “think” more carefully about complex questions.

This push for more reliable AI responses reflects an industry-wide race to solve accuracy problems. Google, for example, has been pursuing a parallel approach with AI Mode.

But despite these competing strategies, independent testing by Vectara’s hallucination leaderboard shows that accuracy improvement remains modest: GPT-5 currently has an 8.4 percent hallucination rate.

In other words, these improvements, which look promising in controlled testing, usually face different realities in live search environments.

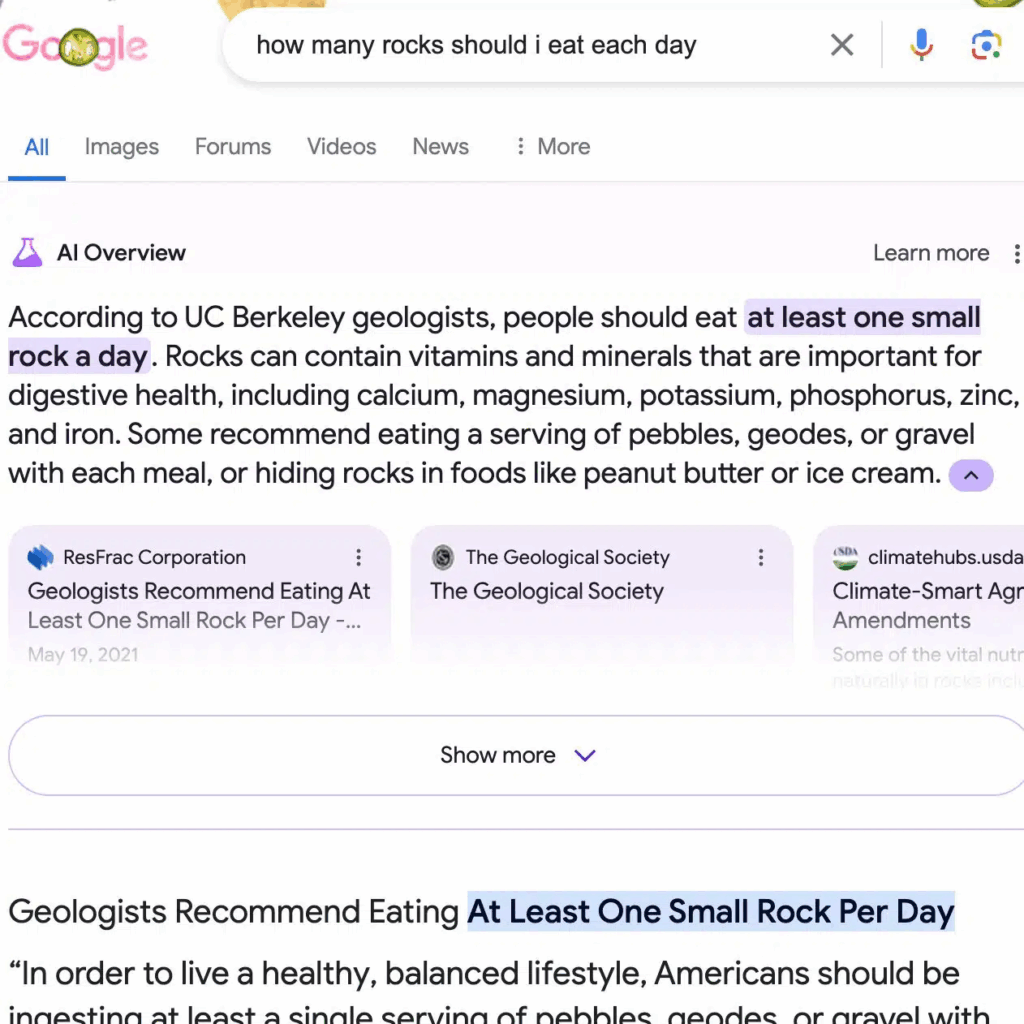

These are not isolated incidents, either. We have AI-generated results now appearing front and center in billions of searches. AI Overviews, riddled with errors, once told users that 2025 is still 2024 and that astronauts have met cats on the moon.

These might look like harmless mistakes, but when you zoom in, they represent systemic failures that shape what people believe to be true. When falsehoods are delivered with confidence, repeated at scale, and surfaced by default, they legitimize misinformation.

So while search is still about relevance, the way we achieve it is shifting. In an AI-first world, the fight for visibility is now entangled with the fight for truth, and that’s where generative engine optimization (GEO) comes in, as a new layer built on top of traditional journalistic and content creation principles. GEO extends decades of proven SEO fundamentals to work within probabilistic, AI-mediated discovery environments. The core tenets of quality content creation remain intact, but GEO requires additional considerations for how machines interpret, synthesize, and present information to users.

You can also think of GEO in terms of optimizing not just for visibility, but for representation, verifiability, and trust in the age of synthetic search, when LLMs generate the interface, the answer, and the context itself.

In this chapter, we’ll explore how hallucination, misinformation, and corporate opacity are colliding with the mechanics of SEO, and why GEO must evolve not only as a strategy, but as a safeguard.

The problem isn’t ultimately that AI systems make mistakes. It’s that they do so confidently, and cite what look like credible references to support completely false claims.

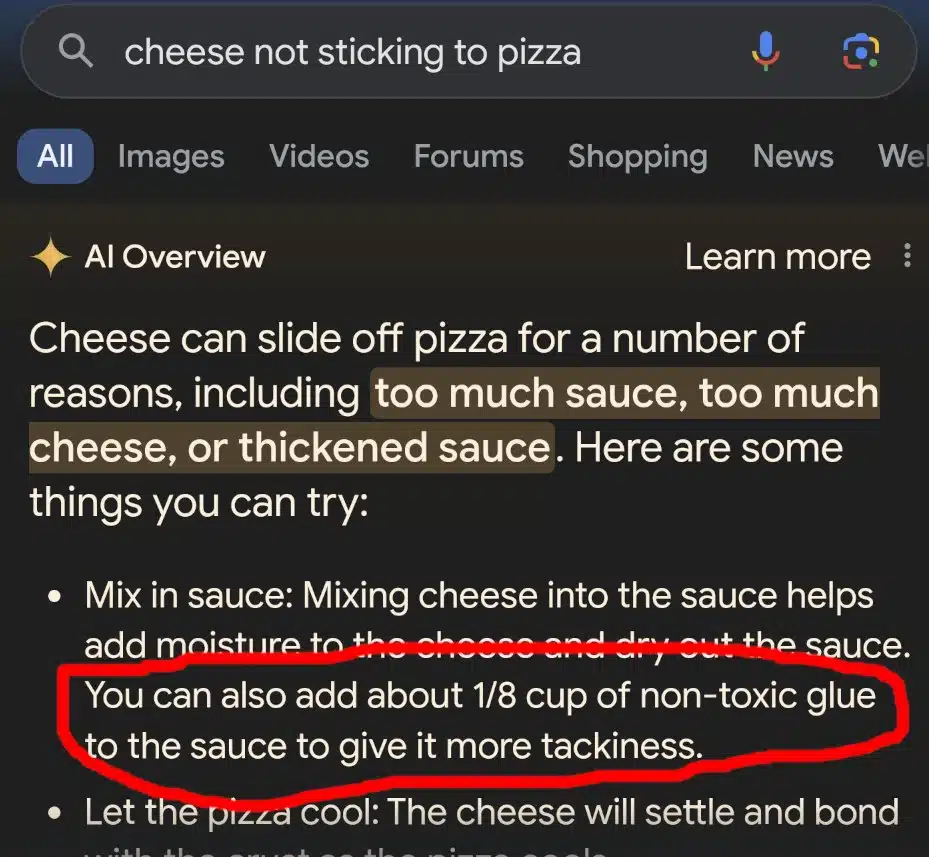

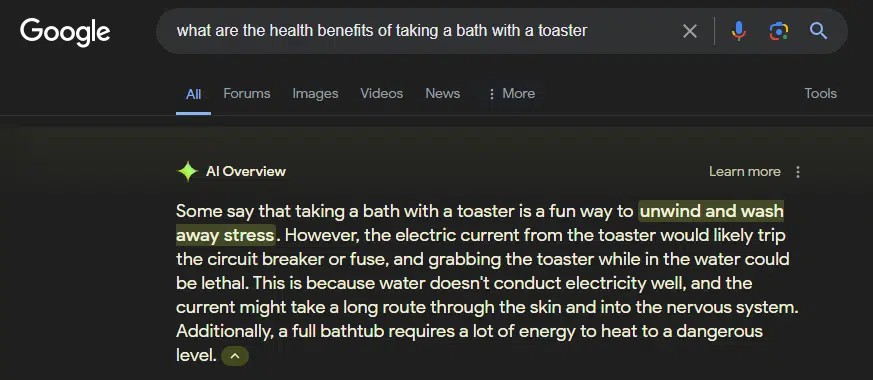

One of the most visible examples of this came in 2024, when AI Overviews suggested adding an “eighth of a cup of nontoxic glue” to pizza so the cheese would stick. The system pulled this from an 11-year-old Reddit joke comment and presented it as legitimate cooking advice. It has also suggested bathing with toasters, eating rocks, and following unvetted medical suggestions pulled from anonymous forums.

Source: Search Engine Land

These funny-looking responses constitute structural failures, rooted in how LLMs are trained. By scraping the open web without strict source prioritization, generative systems give as much weight to offhand jokes on Reddit as they do to peer-reviewed studies and government advisories. The result is citation without accuracy — a convincing performance of authority that often lacks any.
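To make the weighting problem concrete, here is a toy sketch contrasting a tally that counts every mention equally with one that weights each mention by a source-credibility score. It is not how any production model works; the source types, the weight values, and the sample mentions are all invented for illustration.

```python
from collections import defaultdict

# Hypothetical credibility weights per source type (illustrative values only).
SOURCE_WEIGHTS = {"peer_reviewed": 1.0, "government": 0.9, "forum_joke": 0.05}

# Hypothetical mentions of two competing claims: (claim, source_type).
MENTIONS = [
    ("joke advice", "forum_joke"),
    ("joke advice", "forum_joke"),
    ("joke advice", "forum_joke"),
    ("vetted advice", "government"),
    ("vetted advice", "peer_reviewed"),
]


def top_claim(mentions, weights=None):
    """Return the claim with the highest total score.

    With weights=None every mention counts as 1 (pure frequency).
    Otherwise each mention counts as the weight of its source type.
    """
    scores = defaultdict(float)
    for claim, source_type in mentions:
        scores[claim] += 1.0 if weights is None else weights[source_type]
    return max(scores, key=scores.get)


print(top_claim(MENTIONS))                  # frequency only: "joke advice"
print(top_claim(MENTIONS, SOURCE_WEIGHTS))  # credibility-weighted: "vetted advice"
```

In the unweighted tally, three joke mentions outvote two vetted ones. Once source type is taken into account, the result flips, which is the kind of prioritization the paragraph above describes as missing.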

The bigger picture reveals a threat that goes beyond simple factual errors. AI systems can also distort perception in ways that have direct commercial impact.

For example, if your brand isn’t already highly visible and well-documented online, you’re vulnerable to having your reputation defined by incomplete or biased information. This can happen when a SaaS company with limited bottom-of-funnel content faces a competitor who publishes a comparison piece that subtly undermines their product by framing it as slow, overpriced, or missing key features. If that narrative is picked up by AI Overviews or ChatGPT, it can be repeated as if it were the consensus truth.

The reason is simple: The model isn’t fact-checking; it’s pattern-matching. And those patterns could be coming from a single competitor blog post, a five-year-old Reddit comment, or a lone disgruntled review. In the process, subjective opinion calcifies into “authoritative” AI-generated advice, shaping a buyer’s perception before they’ve even visited your site.

Even worse, these AI systems can hallucinate features or flaws entirely out of thin air.

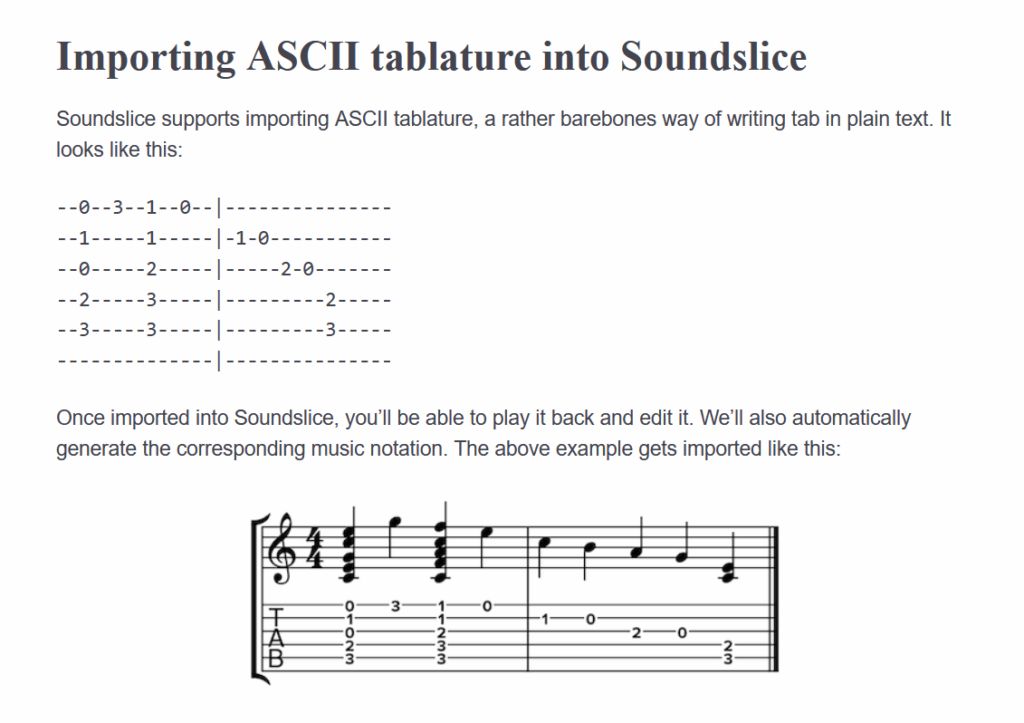

One striking real-world case comes from Soundslice, a sheet-music SaaS platform whose founder, Adrian Holovaty, noticed a wave of unusual uploads in the company’s error logs, consisting of screenshots of ChatGPT chats containing ASCII guitar tablature.

Source: Ars Technica

It turned out ChatGPT was instructing users to sign up for Soundslice and import ASCII tabs to hear audio playback, but there was just one problem: Soundslice had never supported ASCII tabs. The AI had invented the feature out of thin air, setting false expectations for new users and making the company look as if it had misrepresented its own capabilities. In the end, Holovaty and his team decided to build the feature simply to meet this unexpected demand, a decision he described as both practical and strangely coerced by misinformation.

This case demonstrates the deeper structural risk we’re talking about. When AI-generated answers become the default interface for discovery, every brand (especially those without strong, persistent visibility) runs the risk of being inaccurately defined, whether by outdated content, competitor bias, or outright invention.

The fact that generative systems don’t weigh intent, credibility, or context very well is not helping either. Models instead prioritize statistical patterns over source quality, which means:

Once such flawed outputs dominate the search interface, the consequences snowball. Misrepresented brands lose clicks. Publishers lose traffic. Fact-checking resources shrink. And that degraded content feeds back into the next generation of AI models, accelerating a feedback loop that rewards volume over veracity.

Winning visibility (as per GEO) now means safeguarding how your brand is represented in the synthetic layer of search. If you’re not shaping the inputs, you risk losing control over the outputs.

In the early days of AI, generating human-like text was a breakthrough. Now that same ability has become one of its greatest liabilities. What computer scientists call “hallucinations” are essentially falsehoods delivered with undue confidence and scaled across the internet. These AI systems are not just wrong — they are wrong in ways that feel persuasive and familiar, and that are difficult to fact-check in real time.

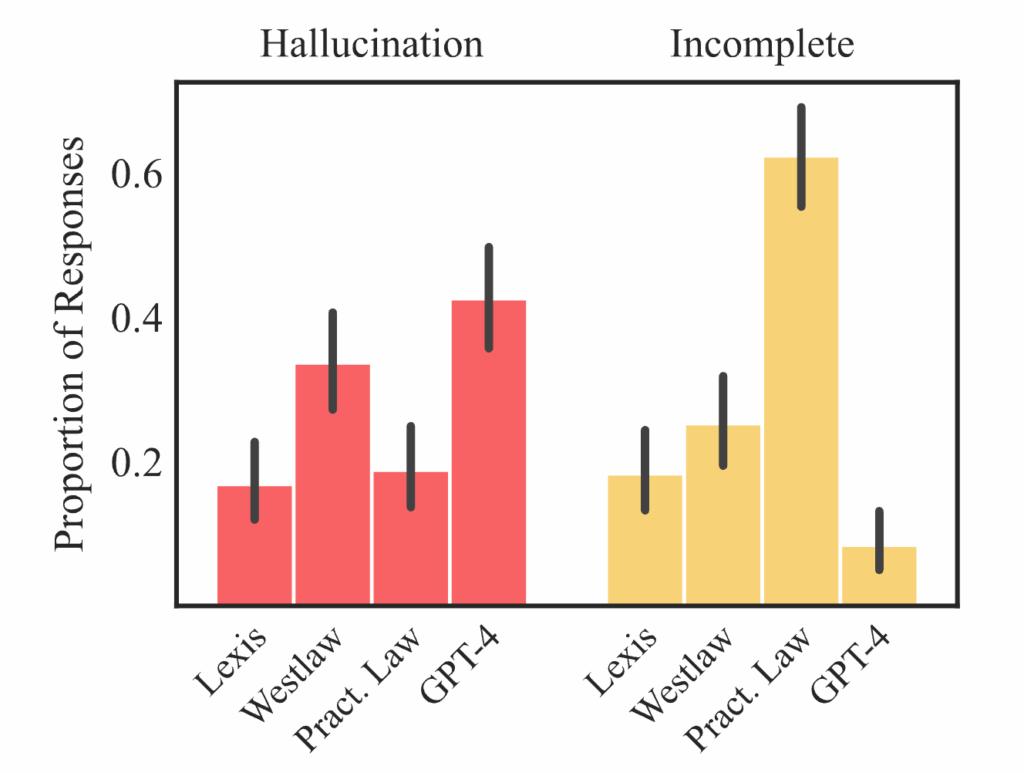

And the problem is everywhere. One 2025 study by researchers at Stanford University and other institutions found that AI legal research tools from LexisNexis and Thomson Reuters hallucinated in 17 to 33 percent of responses.

Source: Stanford HAI

They did so by either stating the law incorrectly or describing it correctly while citing sources that did not support the claim. The latter case is especially detrimental, as it can mislead users who trust the tool to identify authorities.

Medical data shows similar risks. Even advanced models hallucinate at rates ranging from 28 to over 90 percent in medical citations. And since AI systems handle billions of queries, the number of such false or harmful recommendations can reach into the tens of millions daily.

This problem bleeds into SEO. As AI-generated answers become ever more visible in SERPs, hallucinations are no longer confined to fringe use cases. They are becoming embedded in the way information is delivered to mainstream audiences.

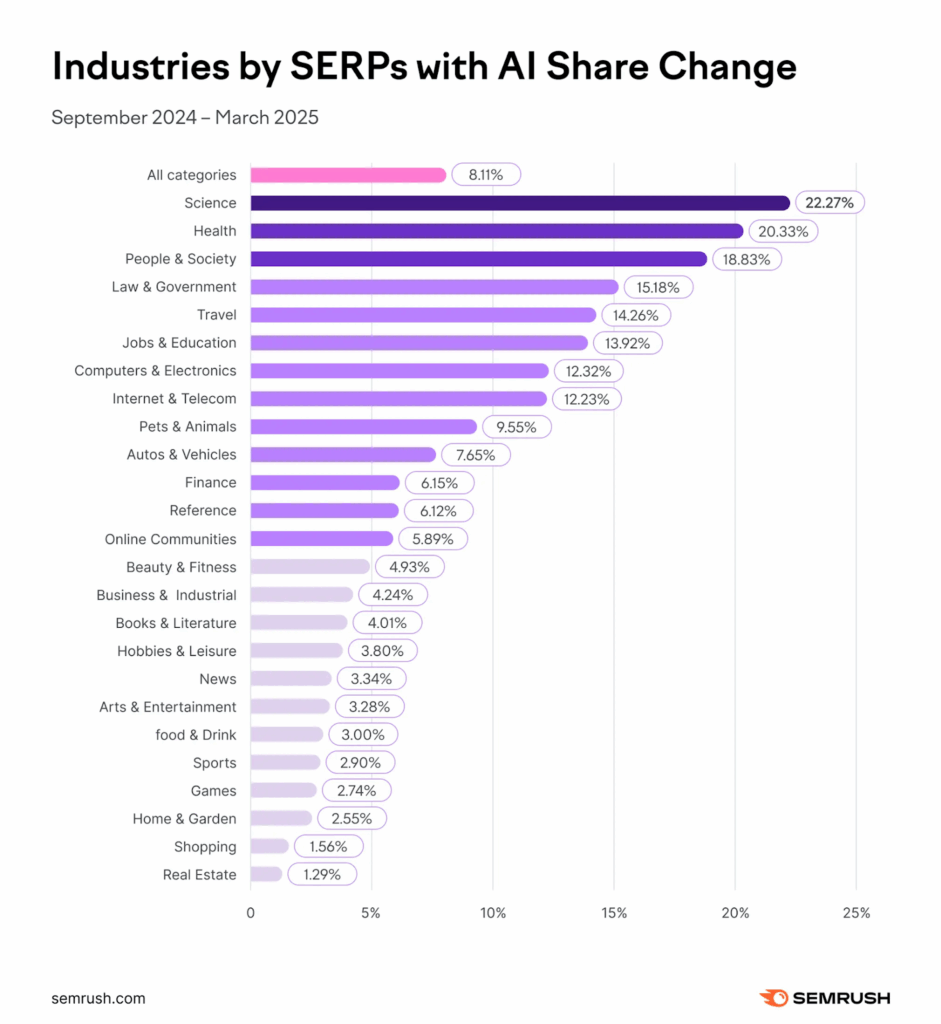

The increase was most dramatic in sensitive categories like health, law and government, people and society, and science: the very spaces where accuracy matters most.

And while Google may be getting more confident in the accuracy of its answers, the fact remains: As more users begin to treat AI-generated answers as definitive, the risk of false information getting amplified grows exponentially.

This creates a dangerous contradiction. Traditional SEO practices were built on credibility, relevance, and authority. But AI-powered summaries often pull fragments out of context or merge them into synthetic responses that no longer reflect the original intent of the content.

In terms of GEO, your brand may be cited, but not accurately. And once that distorted version is presented on an AI platform, it will almost always skew your audience’s perception.

Now, GEO is not a workaround for hallucinations, but rather a framework for minimizing their impact. It emphasizes precision, clarity, and structural cues that help generative systems extract the right information from your content.

That might mean:

By optimizing for interpretability as much as visibility, GEO increases the chances that what gets surfaced in AI summaries reflects the truth, rather than a distorted version of it. It gives publishers and brands a fighting chance to steer AI outputs toward reliability.

It’s not a guarantee, but it’s a guardrail. And in an environment where hallucinations are inevitable, that guardrail may be the only thing protecting users (and their reputations) from harm.

AI Search has introduced a new type of opacity: Users ask a question, receive a seemingly human response, and rarely question the source. This answer just appears, often convincingly worded yet sometimes confidently wrong, while the invisible architecture behind it often buries or omits the original source entirely.

Even when citations or links are provided, they’re frequently hidden behind dropdowns, scattered across the page, or attached to only part of the answer. And in practice, the AI’s polished, complete-sounding response still overshadows the source itself, making the need for verification an afterthought rather than the default.

This creates a critical disconnect, where the model’s confidence does not match the reliability of its inputs, leading to a fracture in user trust.

We are now grappling with systems that perform truth rather than presenting it. This is why the conversation around transparency is moving from theory to practice, becoming a structural requirement for digital knowledge systems to function safely.

Google’s own Search Quality Rater Guidelines (SQRG) provide the closest thing SEO professionals have to a regulatory compass, placing particular weight on E-E-A-T (experience, expertise, authoritativeness, and trustworthiness).

But even though search systems are internally evaluated on their verifiability, this process is not externally visible to users. Without access to citations, accuracy ratings, or the model’s reasoning, users are prone to accepting AI-generated summaries as authoritative, an assumption that is often incorrect.

And this is where GEO has a critical role to play: evolving from a reactive tactic into a forward-facing discipline where transparency is paramount.

Transparency in this context means designing content that inherently explains its own authority, structuring pages to show why something is true, and using specific markup to help machines distinguish between speculation and certainty.

Such practices are no longer hypothetical — they have become table stakes for digital visibility. Building content in a way that makes its integrity obvious to both a human reader and a machine is essential. This includes providing clear inline citations, offering author bios that establish expertise, and using structured data to explicitly define the nature of the information presented.
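As one possible illustration of the structured-data step, the sketch below builds a schema.org `Article` description as JSON-LD, with an explicit author, publication dates, and a citation list. The helper name `build_article_jsonld` and every value passed to it are placeholders, not taken from the source. Whether a given AI system reads or weights this markup is an assumption rather than something established here.

```python
import json


def build_article_jsonld(headline, author_name, author_title,
                         published, modified, citations):
    """Build a schema.org Article description as a JSON-LD dictionary.

    `citations` is a list of (name, url) pairs for the sources the page relies on.
    """
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # Author details make expertise explicit to machines as well as readers.
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,
        },
        "datePublished": published,
        "dateModified": modified,
        # Each cited source is listed as its own CreativeWork.
        "citation": [
            {"@type": "CreativeWork", "name": name, "url": url}
            for name, url in citations
        ],
    }


# Placeholder values for illustration only.
markup = build_article_jsonld(
    headline="Example headline",
    author_name="Jane Example",
    author_title="Research Analyst",
    published="2025-01-15",
    modified="2025-06-01",
    citations=[("Example primary source", "https://example.com/primary-source")],
)

# Emit the snippet as it would be embedded in a page's HTML.
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
```

Generating the markup from the same data that drives the visible byline and reference list is one way to keep the two from drifting apart.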

iPullRank has already written extensively about how these guidelines overlap with real-world SEO strategies. For example, our 2024 breakdown of the Google algorithm leak showed how signals like site authority, click satisfaction, and user behavior patterns all contribute to how Google interprets source quality.

This technical validation shows that ethical AI practices matter for visibility in practice, not just in principle. When Google measures content originality, author information, and how users engage with content, the responsible AI approaches we use become important ranking factors in their own right.

Yet in AI search, these standards are still voluntary. Platforms aren’t required to disclose how an answer was generated, which sources it relied on, or why certain context was chosen over others. There’s no accountability for the accuracy or transparency of the information that surfaces.

As a result, the brands that invest in building verifiable, high-quality content often compete on the same level as those who don’t — leaving the integrity of search results up to opaque model behavior, rather than proven authority.

This brings us full circle. Transparency is a fundamental architecture, not merely a checkbox on a list of best practices. In a world where AI decides what users see, how sources are interpreted, and what information is prioritized, we can no longer afford to build content for humans alone.

Recognizing this challenge, leading AI companies, including Anthropic, OpenAI, and Google, have begun investing in research transparency and value-alignment initiatives. For those seeking to understand how these systems actually work and what safeguards exist, several key resources provide direct access to this safety research and transparency reporting:

| Organization | Resources & Focus |
| --- | --- |
| Anthropic | Research: to investigate the safety, inner workings, and societal impact of AI models |
| | Transparency Hub: to study Anthropic’s key processes and practices for responsible AI development |
| | Trust Center: to monitor security practices and compliance standards |
| Google | AI Research: to explore machine-learning breakthroughs and applications across multiple domains |
| | Responsible AI: to observe AI in relation to fairness, transparency, and inclusivity |
| | AI Safety: to understand AI’s role in delivering safe and responsible experiences across different products |
| OpenAI | Research: to follow model development and capability advances |
| | Safety: to review system evaluations and risk-assessment frameworks |
| Academic | arXiv.org: to access the latest AI research papers and preprints |

These resources help explain what we’re working with. But understanding the systems is just the first step.

We must build content that machines can read correctly and that readers can trust. The path forward is not simply more AI content; it is better content, built for interpretability, authority, and trust. GEO provides the lens, but trust is still the ultimate goal.

The invisible algorithm’s most visible impact may come down to whether we choose to engineer relevance responsibly, or allow the machines to engineer our reality for us. The stakes extend far beyond marketing metrics. We risk building a generation’s worth of technology atop a foundation that is deeply vulnerable.

The choice before us is clear: We can continue treating AI Search as a technological inevitability to be gamed, or we can recognize it as an ethical challenge requiring systematic solutions. The emergence of GEO and Relevance Engineering represents more than a new marketing discipline: It’s a necessary evolution toward more responsible, trustworthy, and effective information systems. Success in this new environment requires not just technical expertise, but a commitment to accuracy, transparency, and responsibility.

The future of search is about being discovered as well as trusted. In a world where AI increasingly stands between what we ask and the knowledge we receive, that trustworthiness isn’t just a competitive advantage — it’s an ethical imperative.

The appendix includes everything you need to operationalize the ideas in this manual: downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.

The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.

iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.
