The internet is drowning. Not in water, but in something far more insidious: AI slop.

What began as revolutionary tools to democratize content creation has unleashed something darker. We’re witnessing the largest information quality crisis in internet history, and it’s happening at machine speed.

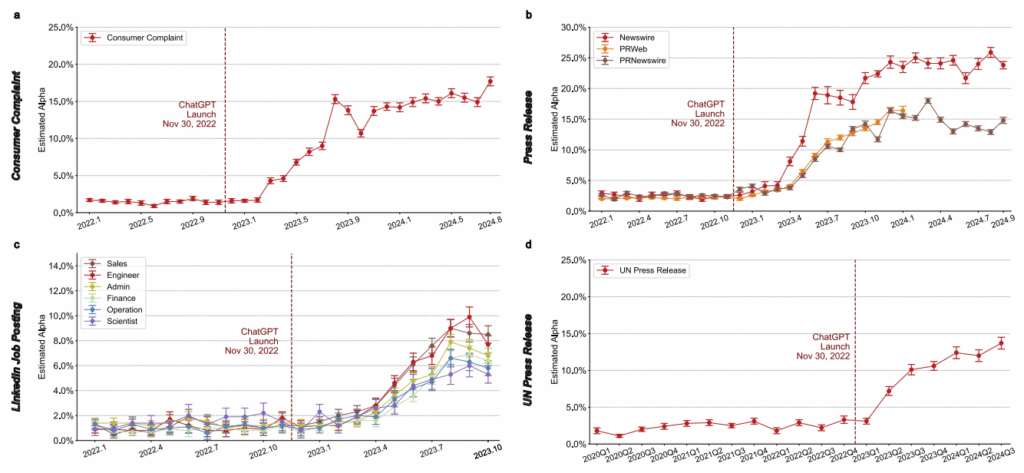

A Stanford University study analyzing over 300 million documents (from corporate press releases and job postings to UN press releases) showed a sharp surge in LLM-generated content following the launch of ChatGPT in November 2022.

Source: The Widespread Adoption of Large Language Model-Assisted Writing Across Society

But it doesn’t stop at dry institutional text. AI slop is flooding every format: social posts, SEO blog posts, fake Amazon reviews, faceless YouTube channels, and more. These days, it’s not uncommon to see accounts churn out low-effort content with AI voiceovers and stock visuals. Even Facebook’s most viral posts are increasingly synthetic.

And it’s only getting worse. Some experts have estimated that as much as 90% of online content may be AI-generated by 2026.

For creators, marketers, and businesses, this raises urgent new questions. How do you compete, rank, or even stay visible in an ecosystem buried under an avalanche of low-quality synthetic content?

Many turn to optimization. But the very engines we optimize for are being poisoned by machine-made sludge. More AI content feeds more AI training data, which clutters the web with even more noise. This creates a vicious cycle that makes quality content invisible in the very systems we depend on to surface it.

It’s a digital ouroboros, and we’re all trapped inside it.

Within that loop, Generative Engine Optimization (GEO) sets the conditions every piece of content must meet to stay relevant.

Relevance is shifting. Rankings are unstable. We’re no longer talking about beating the algorithm. We’re talking about surviving in a system that is losing its ability to recognize what matters.

The driving force behind AI slop is depressingly simple: money.

“Mostly, the AI slop is created to manipulate search engines or social media algorithms to earn money from advertising,” explains an analysis on Arrgle. “AI slop sites heavily rely on ‘programmatic advertising,’ where ads are placed automatically.”

Scroll any Facebook feed today and you’ll see what researchers call AI slop, a term that perfectly captures the uncanny, unpalatable quality of this content. You’ll see homeless veterans with badly worded signs, cops carrying massive Bibles, “Shrimp Jesus” (an AI-generated fusion of religious imagery and crustaceans), babies wrapped in cabbage leaves, and skeletal elderly people being eaten by bugs.

John Oliver extensively covered the topic on his show, Last Week Tonight.

The goal isn’t storytelling or information. It’s engagement and eyeballs. Clicks. Impressions. Monetization.

But the contamination runs deeper than individual bad actors gaming the system with clickbait and Midjourney monstrosities. It goes deep into the very core of SEO and content marketing.

Marketers and content teams saw AI not just as a tool but as a growth engine. It gave them the power to flood the web with content at unprecedented scale. Blog posts, listicles, product roundups, tutorials—anything that could be generated was. All in pursuit of organic traffic and the ad revenue it fuels.

The strategy was simple: publish more, rank more, earn more. A classic numbers game.

Content farms scaled up like factories, pumping out thousands of SEO-targeted posts with virtually no quality control. In many cases, the goal wasn’t to inform or persuade but simply to exist, be indexed, and capture stray clicks from search.

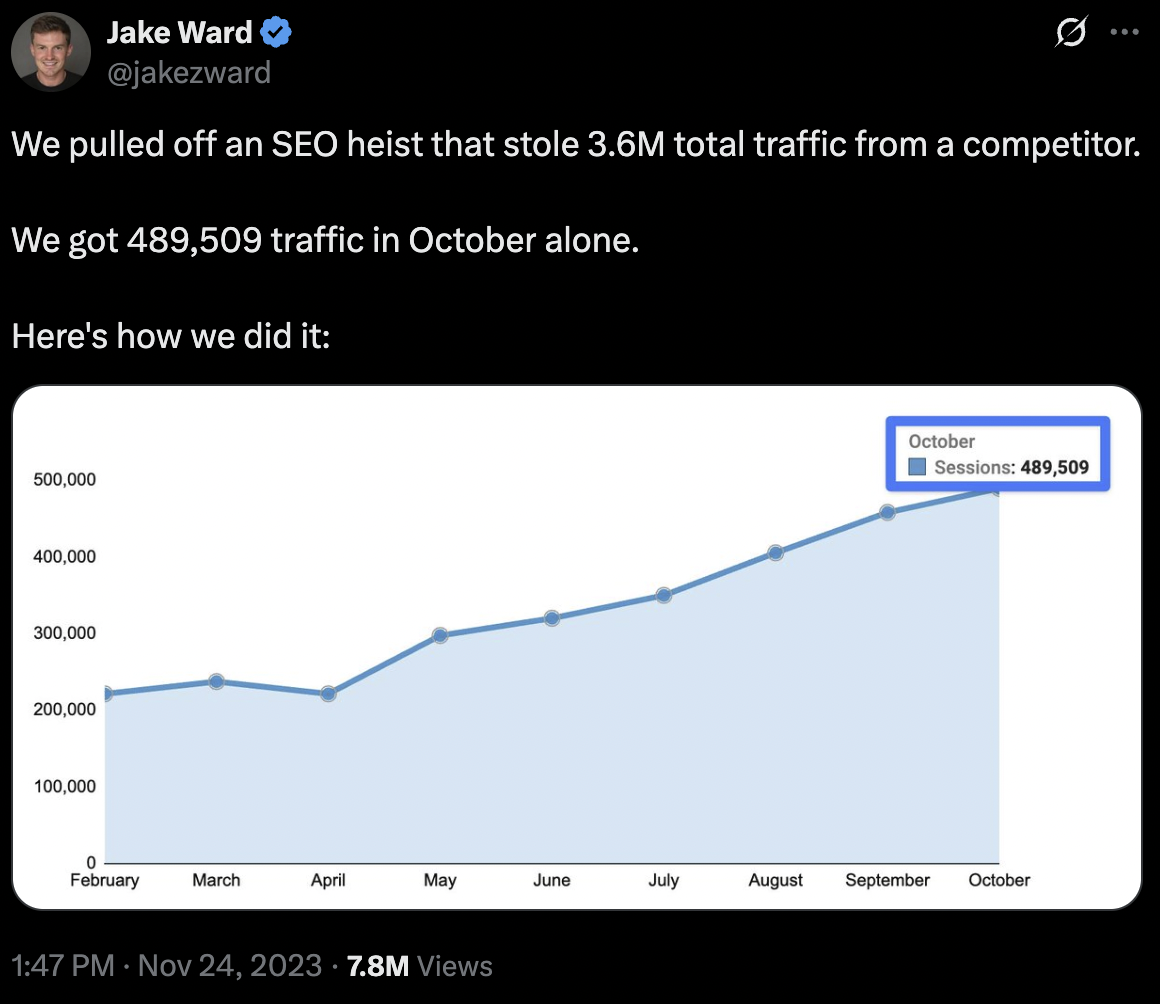

Source: X

The 2023 SEO heist story is a classic example. In this case, a co-founder openly bragged about using AI to “steal” 3.6 million visits from a competitor by scraping their sitemap and generating 1,800 articles at scale. The problem? All those articles were published with minimal human oversight, prioritizing pure volume over any semblance of quality or value.

This is exactly the kind of AI-generated spam that’s devaluing the internet.

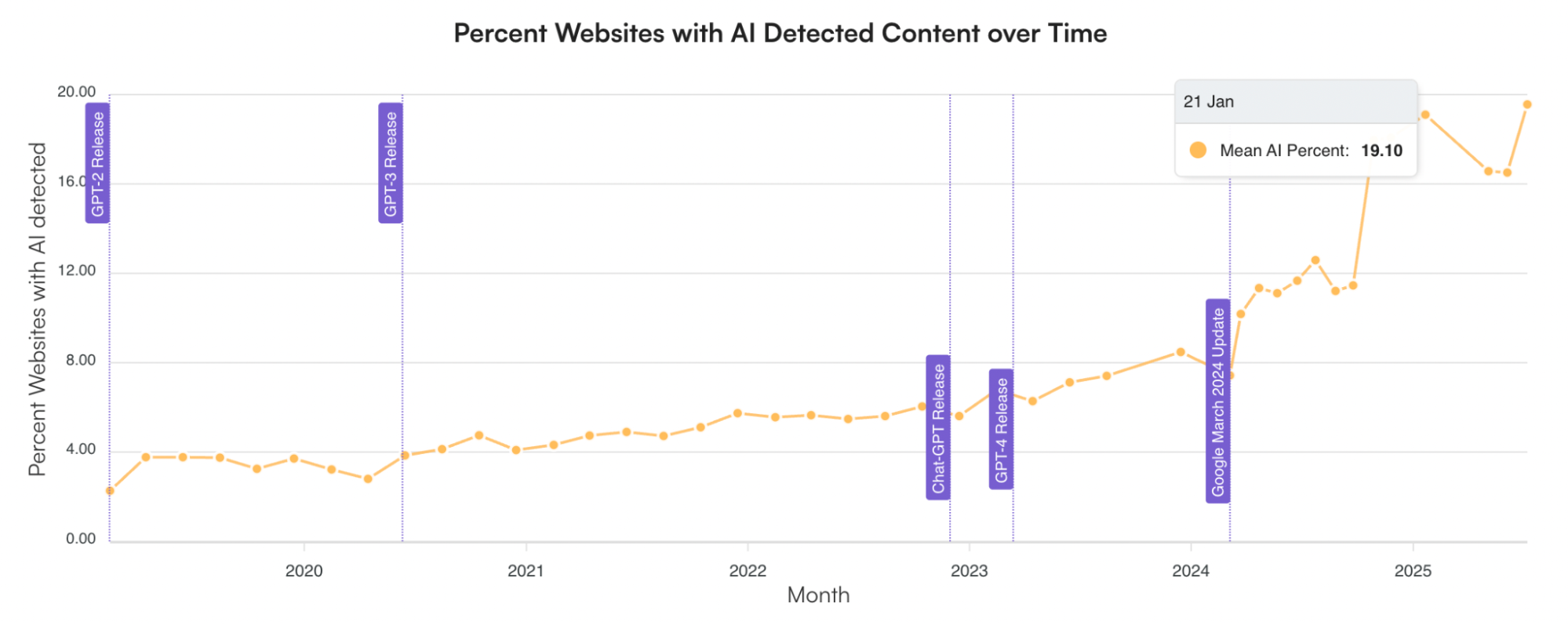

The scale of this shift is visible in the numbers. According to Originality AI, AI-generated content accounted for 19.10% of Google search results as of January 2025, up from just 7.43% in March 2024. Even as that figure dipped slightly to 16.51% by June, the signal remains clear.

Source: Originality AI

But we can’t treat this data as definitive. AI content detectors are inherently unreliable because language patterns aren’t exclusive to either humans or machines. Both can produce text that overlaps in style, structure, and vocabulary. Even small human edits to AI-generated content can shift the signal enough to fool detection models, while highly formulaic human writing can be misclassified as AI. These tools rely on statistical guesses rather than definitive markers, making their accuracy shaky at best, especially when the creation process involves any mix of human and AI input.

That said, studies like these, along with anecdotal evidence, suggest that AI slop is growing at an unfathomable scale.

When content can be produced at scale and near-zero cost, the economic logic becomes hard to resist. The result is a feedback loop where velocity replaces value.

And that loop keeps tightening. As zero-click searches become more common, especially with AI Overviews appearing in up to 47% of Google results depending on query type, original content creators often lose attribution altogether. Their insights are scraped, reworded, and repackaged into AI summaries that offer no credit and deliver no traffic.

At the same time, the systems responsible for delivering these answers sometimes cannot reliably distinguish between real expertise and a convincing imitation. A fluent response isn’t always a truthful one. And as language models pull from increasingly contaminated content pools, the line between reliable and synthetic continues to blur.

As this contamination spreads across the entire search ecosystem, the challenge becomes even more urgent. Traditional SEO optimized for visibility within a ranking system that favored technical signals like backlinks and keyword density.

Generative engines bypass that system entirely. They synthesize information on the fly, pulling from both high-quality sources and low-effort clones, to produce something that sounds credible, even when it isn’t.

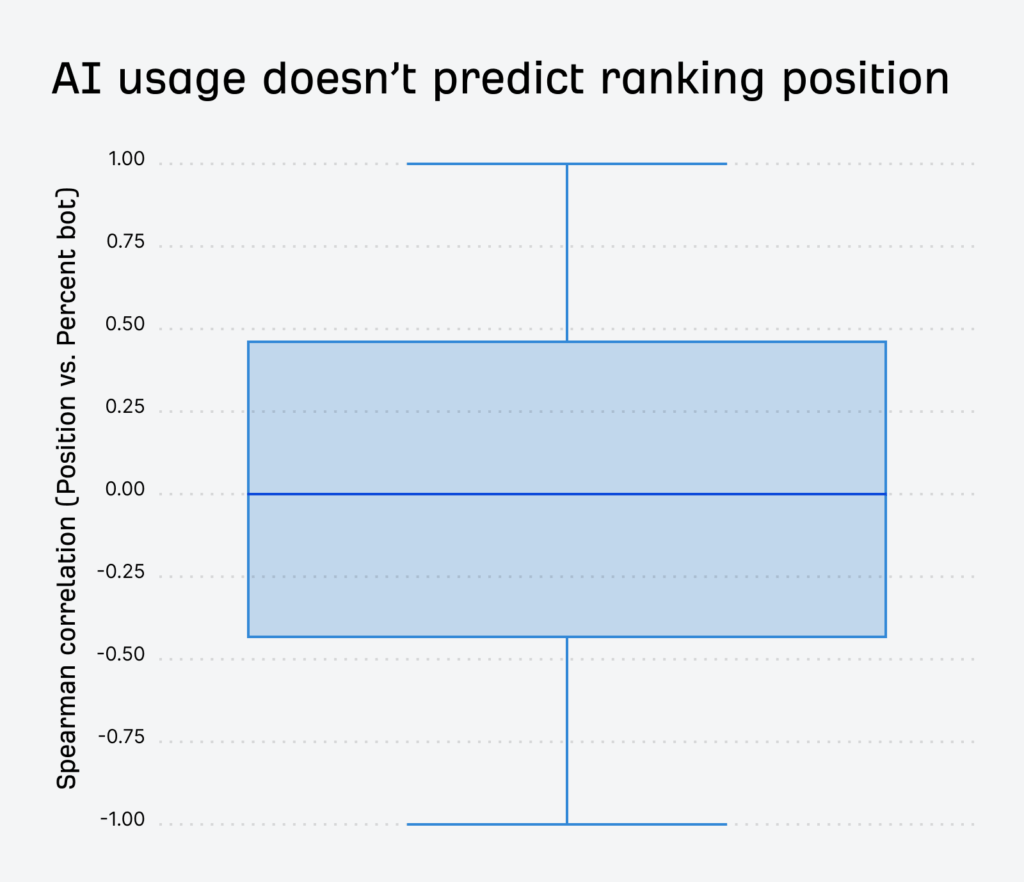

According to data from Ahrefs, 86.5% of top-ranking pages now include some form of AI assistance. Only 13.5% are fully human-written.

Google appears largely indifferent to this shift. That is, it neither significantly rewards nor penalizes AI-generated pages.

Source: Ahrefs

As long as content meets surface-level thresholds for relevance and coherence, it is treated as valid.

What we’re witnessing isn’t just an increase in machine-written content, but a breakdown in how people discover and validate information. The platforms designed to reward helpfulness and authority are being overwhelmed by speed, scale, and synthetic noise. If this trend continues unchecked, the foundation of trust that underpins the open web may not hold.

The proliferation of AI slop creates a problem that transcends individual bad search results. It’s systematically degrading the foundation that search engines and AI systems rely on to understand and rank content.

This amounts to the corruption of the internet’s knowledge base, with implications that ripple through every aspect of digital discovery.

Search engines were never designed to filter content at this scale, or with this level of deception.

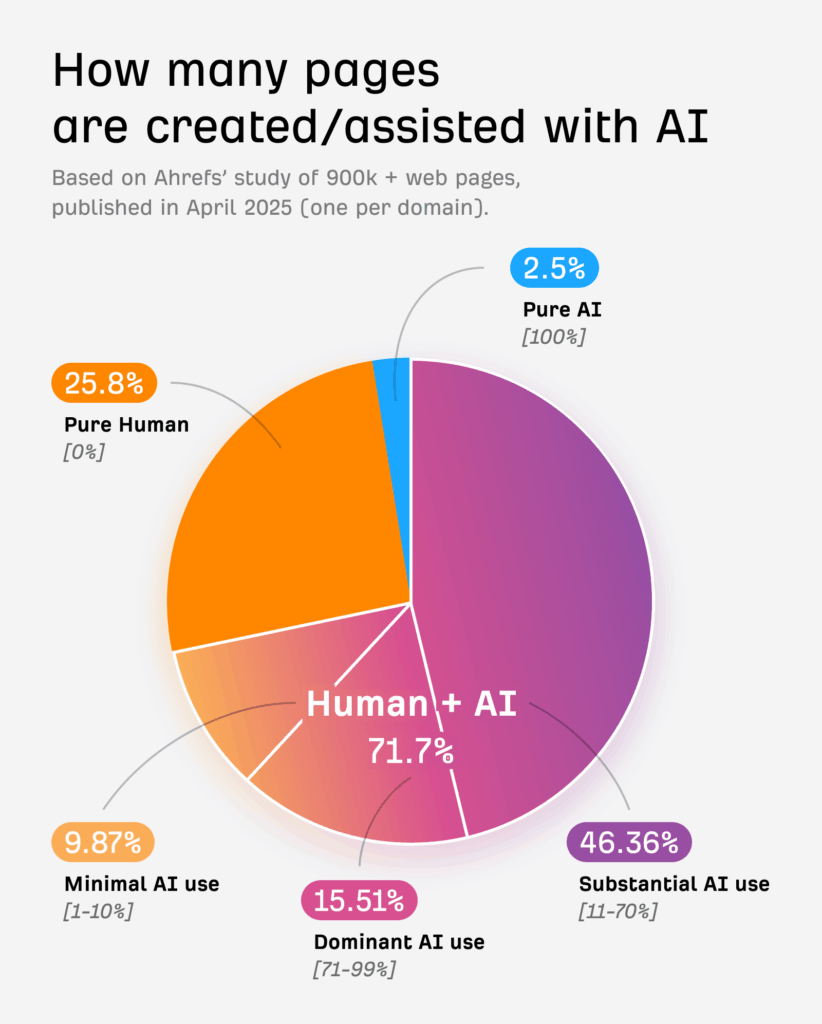

In April 2025, Ahrefs analyzed 900,000 newly published English-language web pages and found that 74.2% contained AI-generated content. Only 25.8% were classified as purely human-written. Of the AI-assisted pages, most (71.7% of the total) mixed human and AI writing, while just 2.5% were purely AI-generated.

Source: Ahrefs

The takeaway? AI-generated content is no longer the exception, but the default. And that has several implications.

When the majority of indexed content is at least partially machine-generated, it becomes harder for search engines to distinguish genuine, human-authored content from mass-produced, often unreliable AI content. As a result, the signals used to rank pages are losing their meaning.

The issue compounds in generative contexts. Tools like ChatGPT, Claude, and Google AI Overviews rely on search indexes as the backbone of their answers.

When those indexes are saturated with synthetic content, generative engines blend real insights with mimicry, creating answers that sound credible but lack foundation.

Google’s spam policies technically prohibit AI-generated content used to manipulate rankings, but the policy carves out a massive gray area. As long as content demonstrates surface-level coherence and aligns with E‑E‑A‑T guidelines, it remains eligible to rank, regardless of origin.

This gives sophisticated AI-generated content a pass, especially when paired with structured data and strategic on-page optimization.

Detection also doesn’t scale. Google’s January 2025 quality rater guidelines added definitions for AI content, and raters can now assign the lowest score to “automated or AI-generated content.” But these reviews are manual, while millions of new pages are published every day.

More importantly, enforcement isn’t equal. Low-effort AI spam may get caught, but slightly reworked synthetic content passes through undetected, creating an uneven playing field. Human-authored content is subjected to increasingly strict evaluation, while automated content scales without friction.

This asymmetry erodes the foundation of both SEO and GEO. Competitive analysis becomes unreliable. Ranking no longer signals human expertise or investment. And when generative tools train on these results, they inherit the bias and pollution embedded within the index.

The real danger isn’t just degraded search performance, but the epistemic decay of the internet itself. Search engines, once trusted to surface the best of the web, are now functioning atop contaminated inputs. The more they rely on that data, the worse the problem gets.

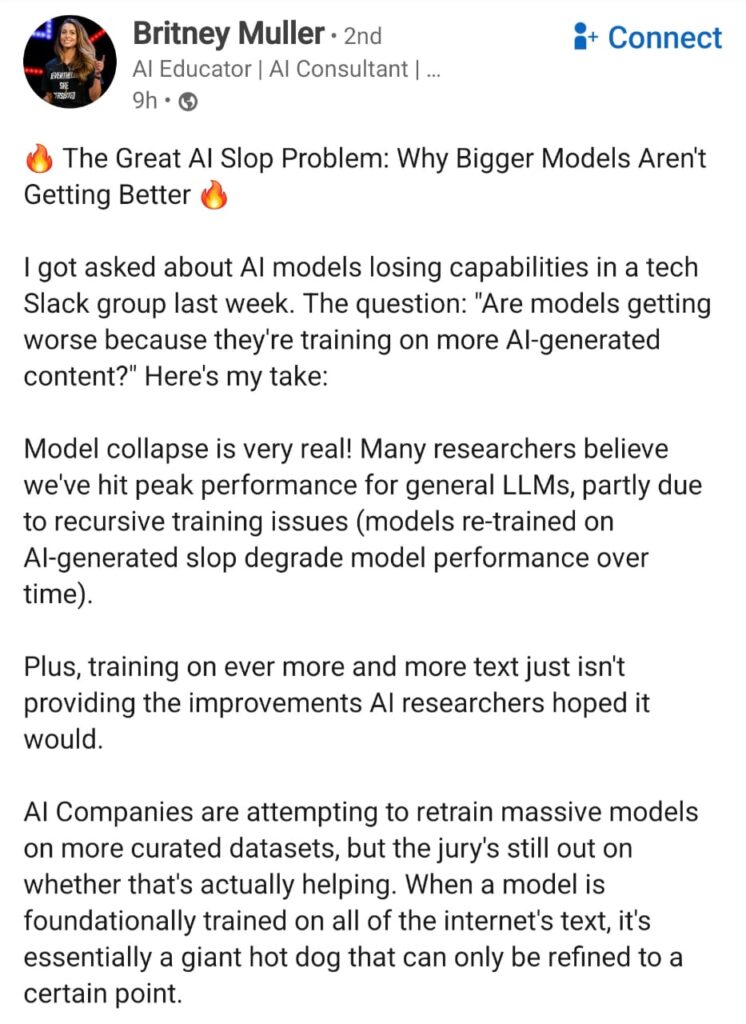

As AI-generated content floods the web, it becomes training data for future AI models, creating what researchers call “model collapse”—a phenomenon where each generation of AI content becomes progressively more generic, less accurate, and less useful.

When AI content dominates the training data, generative systems begin to reinforce their own patterns. Each iteration becomes a copy of a copy, degrading the original signal and amplifying flaws in both form and substance. The internet becomes a hall of mirrors where real expertise is outnumbered by simulations of simulations.
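The copy-of-a-copy dynamic can be illustrated with a toy simulation often used to explain model collapse: fit a simple distribution to data, sample from that fit, refit on the samples, and repeat. This is a minimal sketch, assuming a one-dimensional Gaussian stands in for a "model" and a small sample stands in for its training data. It is an analogy, not how LLMs are actually trained.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, sample_size: int = 10, seed: int = 0) -> list[float]:
    """Toy model-collapse loop (an analogy, not real LLM training).

    Each generation's "model" is a Gaussian fitted only to samples drawn
    from the previous generation's model. Returns the fitted spread (std dev)
    at every generation, starting from the original distribution.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original, "human" distribution
    history = [sigma]
    for _ in range(generations):
        # The next model only ever sees data produced by the current model.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # Refit by maximum likelihood; small samples tend to miss the tails.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


if __name__ == "__main__":
    spread = simulate_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:>3}: spread = {spread[gen]:.6g}")
```

Because each generation learns from a small sample of its predecessor’s output, rare values in the tails go missing and the fitted spread tends to shrink toward zero: a toy version of outputs becoming progressively more generic.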

Britney Muller, an international AI consultant and educator, compares models trained on all of the internet’s text to ‘a giant hot dog that can only be refined to a certain point’. In other words, once a model has ingested massive amounts of low-quality data, there’s only so much that post-training refinement can do to improve its fundamental capabilities.

Source: LinkedIn

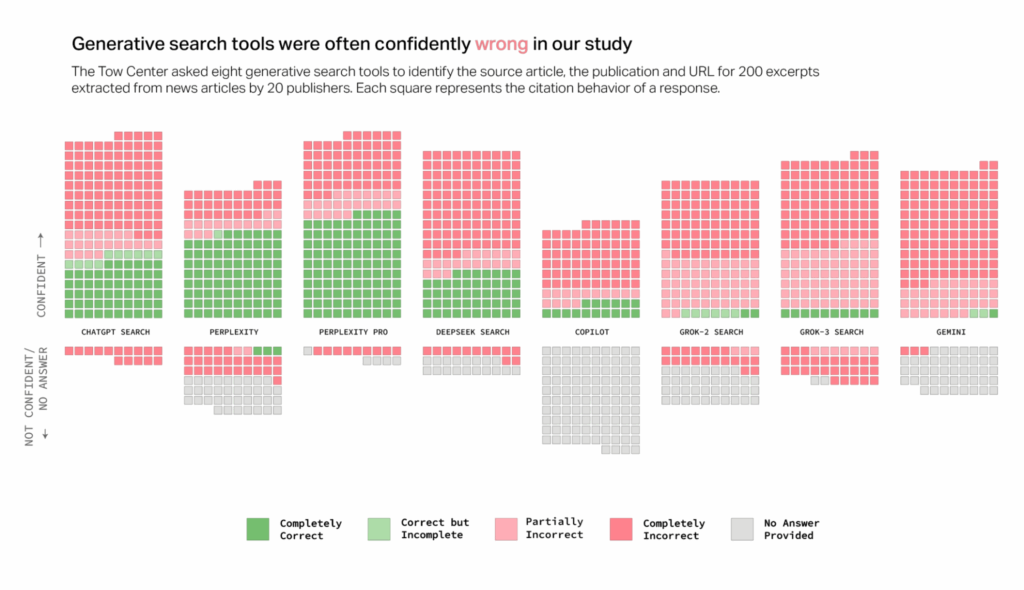

Taking it further, a March 2025 study by the Columbia Journalism Review tested eight major AI search engines and found that, for a controlled information retrieval task, chatbots collectively provided inaccurate or misleading answers more than 60% of the time, nearly always without acknowledging uncertainty.

More troubling, premium AI models were even more prone to confidently incorrect responses than their free counterparts, contradicting the assumption that paid AI services are more reliable.

Source: CJR

In generative engine optimization, this creates a trust paradox. Brands and marketers are investing in strategies to earn citations and visibility inside systems that are becoming less reliable. Optimizing for GEO means feeding into models that may reward visibility but distort meaning.

When users see a polished, AI-generated answer that sounds authoritative, they often assume it is true. But if the answer was pulled from a contaminated index, summarized without attribution, and built on flawed assumptions, it becomes misinformation at scale. The user has no clear signal to differentiate valid from synthetic.

This is the core of the confidence crisis. If the most advanced AI systems are wrong more often than they’re right, and they express that wrongness with complete confidence, then the entire foundation of AI-powered discovery is built on quicksand.

In other words, the illusion of precision masks the instability of the underlying data.

Meanwhile, the very behavior patterns that GEO strategies depend on are fragmenting in unprecedented ways. Multiple studies reveal dramatic shifts in how people discover and consume information.

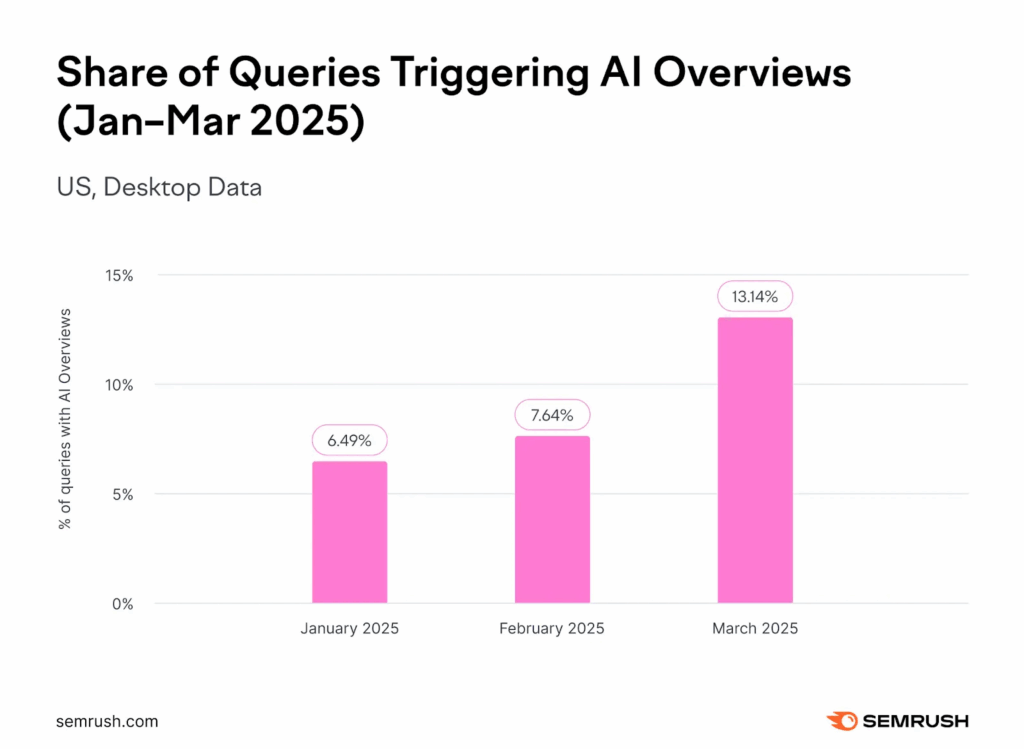

Semrush’s analysis of over 10 million keywords found that AI Overviews were triggered for 6.49% of queries in January 2025, climbing to 7.64% in February (an 18% increase), and jumping to 13.14% by March – a 72% increase from the previous month.

Source: Semrush

In other words, the share of queries triggering AI Overviews roughly doubled in just two months, a rapid transformation of search results.
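The 18% and 72% figures are relative (month-over-month) changes, not percentage-point changes. A short calculation using Semrush’s reported trigger rates makes the distinction explicit; the helper name `pct_change` is just for illustration.

```python
def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100


# AI Overview trigger rates reported by Semrush (share of queries, in %).
rates = {"Jan 2025": 6.49, "Feb 2025": 7.64, "Mar 2025": 13.14}

months = list(rates)  # dicts preserve insertion order
for prev, curr in zip(months, months[1:]):
    points = rates[curr] - rates[prev]
    print(f"{prev} -> {curr}: {points:+.2f} pts, {pct_change(rates[prev], rates[curr]):+.1f}% relative")

print(f"Jan -> Mar overall: {pct_change(rates['Jan 2025'], rates['Mar 2025']):+.1f}% relative")
```

February to March adds 5.5 percentage points, which is a 72% relative jump; January to March works out to roughly +102%.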

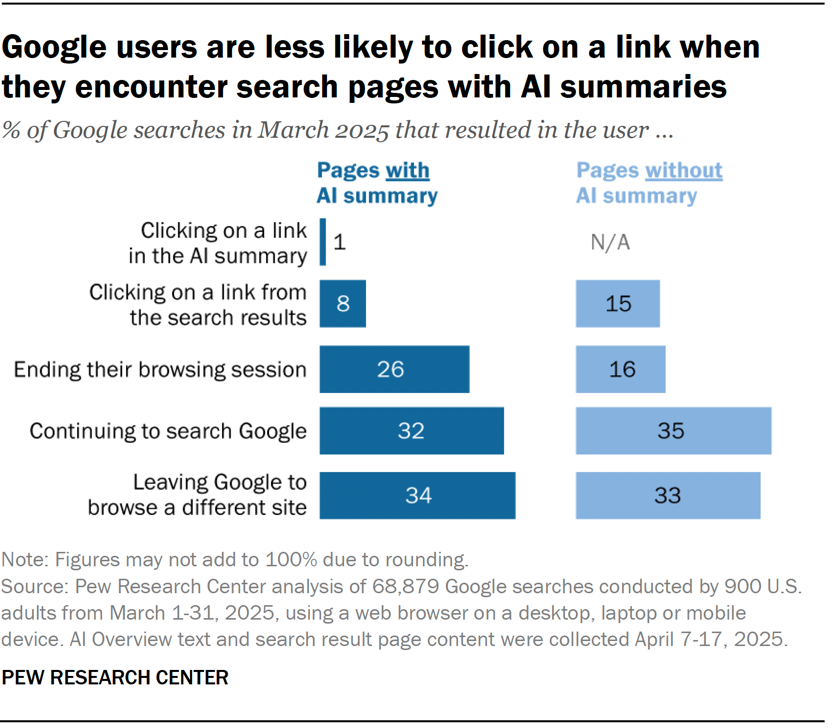

Along the same lines, the Pew Research Center’s analysis of 900 US adults’ browsing behavior found that around 18% of all Google searches in March 2025 produced an AI summary. When these summaries appeared, users were less likely to click on search result links than when no summary appeared.

For searches that resulted in an AI-generated summary, users clicked on a traditional search result link in just 8% of visits, compared to 15% of visits when no AI summary was present. Even more telling, users very rarely clicked on the sources cited within AI summaries – just 1% did so.

Source: Pew Research Center
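The gap between 8% and 15% is easy to underestimate. Taking Pew’s figures as given, a quick calculation shows the relative drop in clicks on traditional results; the function name is illustrative.

```python
def relative_drop(baseline: float, observed: float) -> float:
    """Percentage reduction of `observed` relative to `baseline`."""
    return (baseline - observed) / baseline * 100


without_summary = 15  # % of visits with a click on a result when no AI summary appeared
with_summary = 8      # % of visits with a click on a result when an AI summary appeared

drop = relative_drop(without_summary, with_summary)
print(f"Clicks fall by {without_summary - with_summary} points, about {drop:.0f}% in relative terms")
```

A 7-point difference in click rate amounts to nearly half of the clicks disappearing when an AI summary is shown.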

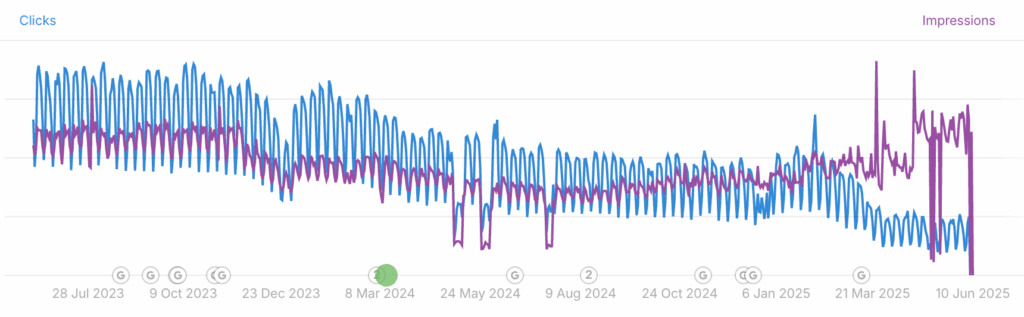

All this creates what many SEOs are calling “The Great Decoupling”, which is a disconnect between content visibility and website traffic. Content can receive massive exposure in AI summaries while generating zero website visits.

Source: Ahrefs

For businesses that have built their entire digital strategy around search traffic, this represents an existential threat. Their content becomes the raw material for AI responses that satisfy user queries without ever sending users to their websites.

And the fragmentation doesn’t stop at Google. Data compiled by Writesonic shows that 43% of consumers now use AI tools like ChatGPT or Gemini on a daily basis. Perplexity AI processed 780 million queries in May 2025 and drew 129 million visits, with over 20% month-over-month growth. Meanwhile, Google’s AI Overviews have reached over 1 billion users across more than 100 countries.

Each of these platforms has its own logic, interface, and optimization quirks. Discovery is no longer centralized. Users may start a query on Google, refine it in Perplexity, and fact-check it on Reddit or TikTok. This creates a fragmented ecosystem where no single platform controls the journey, and no single SEO playbook applies.

The trust patterns are shifting as well. A Yext survey shows that 48% of users always verify answers across multiple AI search platforms before accepting information. Only 10% trust the first result without question.

So yes, they’re using AI tools for convenience and speed, but they’re also hedging their bets by checking multiple sources.

For marketers and publishers, this means success must be redefined. It is no longer just about ranking. It is about maintaining consistent authority across a fragmented ecosystem where discovery happens in pieces and trust is earned across multiple touchpoints.

Visibility now depends on being present across different surfaces and formats. Content needs to perform everywhere, hold up under scrutiny, and remain coherent even when detached from its original source.

Over the past few sections, we’ve seen how the content landscape has changed beyond recognition.

We’re no longer competing with just other businesses. We’re up against an endless torrent of AI content that can be produced faster, cheaper, and at far greater scale than any human team. Worse, this same synthetic output is degrading the very foundation that search engines and AI systems use to understand, evaluate, and rank information.

In this transformed environment, we must adopt a new kind of strategy. Not one rooted in volume, but in authority resilience. The old playbook of publishing frequently and hoping for clicks has stopped working. Success now hinges on understanding GEO and designing content that AI systems reference, cite, and trust.

Authority is no longer a nice-to-have. In a discovery ecosystem driven by large language models and fragmented search behaviors, it’s the foundation of visibility. The AI era rewards the most useful, the most credible, and the most recognizable voices across platforms.

This is where content resonance becomes central to GEO.

Resonance isn’t just about engagement. It’s about lasting recognition and trust, not only from humans, but also from AI systems learning what matters based on consistent signals across sources. In this new paradigm, the authority-first approach means creating content that earns its place in the ecosystem because it reflects:

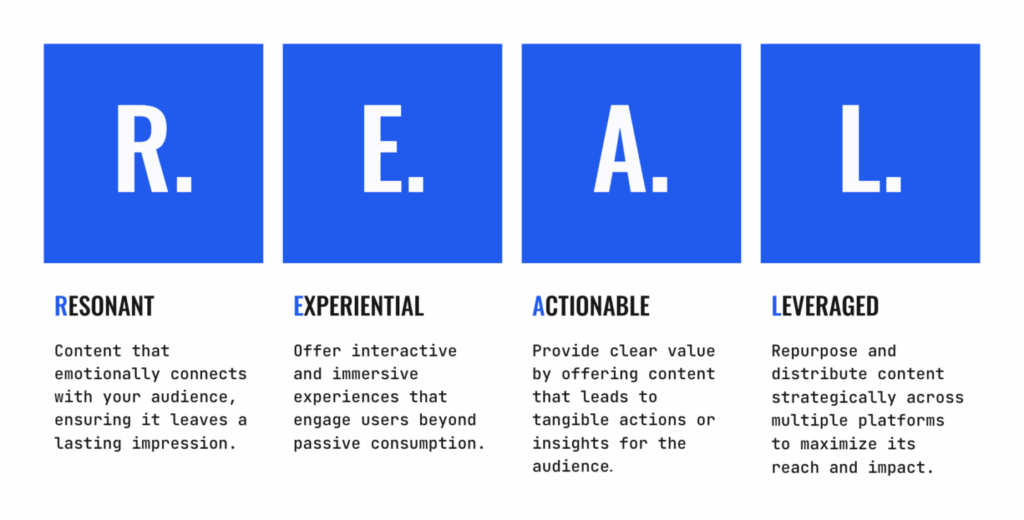

So what makes that kind of content? It’s what we at iPullRank call R.E.A.L. content:

This framework goes beyond human readers, creating signals that large language models recognize and cite. AI systems reward well-structured, widely shared, clearly attributed content that gets revalidated across surfaces. Resonant content tends to do exactly that, because it earns attention, elicits responses, and shows up again and again in trusted places.

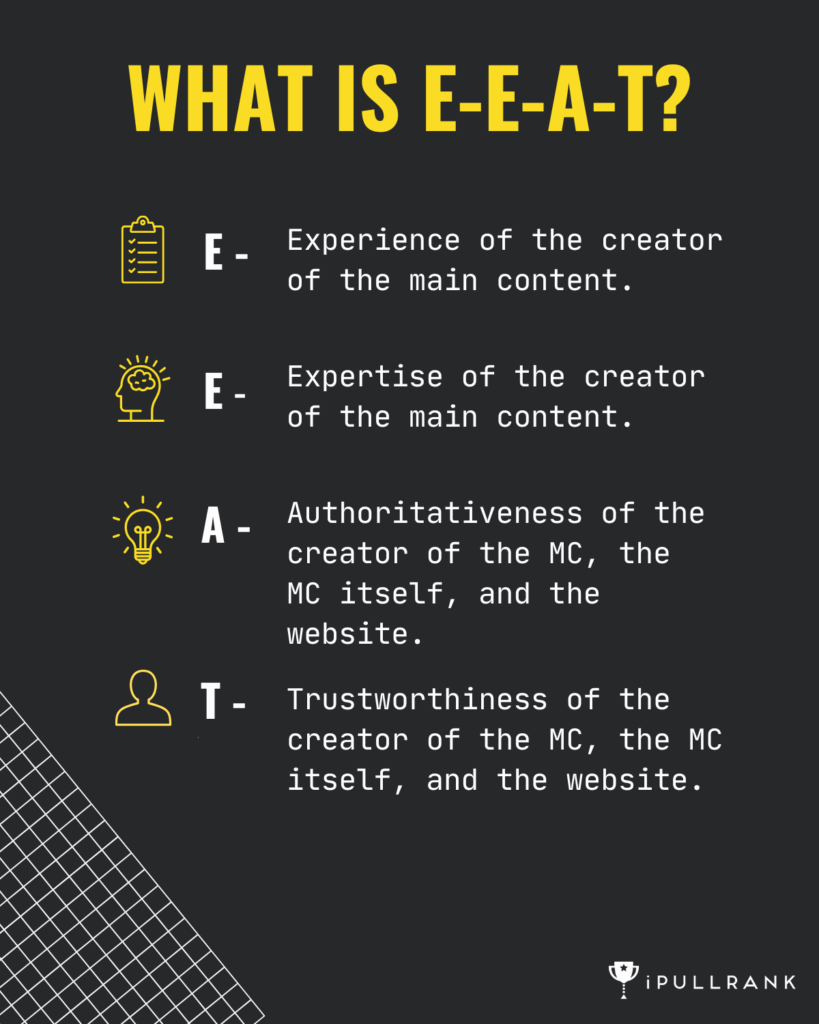

Google’s E-E-A-T framework (Experience, Expertise, Authoritativeness, and Trustworthiness) has become more than a ranking factor. In the GEO era, these signals are how you earn citations from AI systems and trust from users.

Breaking this down further:

Large language models don’t judge content the way humans do. They look for patterns of credibility, structure, and semantic clarity.

AI systems favor content that provides clear, structured information with strong attribution and context. This means adopting formats that make information easily extractable while maintaining the depth and nuance that mass-produced AI content lacks.
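One common way to make authorship and attribution machine-readable is schema.org markup embedded as JSON-LD. The sketch below builds a minimal `Article` object using the schema.org properties `headline`, `author`, `datePublished`, and `citation`. The function name and all values are hypothetical, and whether a particular AI system weighs this markup is an assumption, not something established here.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, sources: list[str]) -> str:
    """Build a minimal schema.org Article object as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # Explicit authorship: a named Person rather than just a brand.
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        # schema.org `citation`: works that this article cites.
        "citation": sources,
    }
    return json.dumps(data, indent=2)


# Hypothetical values for illustration only.
markup = article_jsonld(
    headline="Example: what our customer survey found",
    author="Jane Doe",
    date_published="2025-06-01",
    sources=["https://example.com/methodology"],
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Markup like this only labels what is already on the page; as noted earlier, structured data can just as easily dress up low-quality content, so it complements genuine expertise rather than substituting for it.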

Successful GEO strategies focus on creating content that serves as the definitive source on specific topics, becoming the reference that AI systems consistently return to. This concept, known as information gain, involves providing unique insights or data that make your content more valuable and likely to be surfaced by generative AI search algorithms.
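To make the idea of information gain concrete, here is a deliberately crude proxy: the share of a page’s terms that no competing page already uses. This is a hypothetical sketch. It is not Google’s or any engine’s method, and word overlap is a poor stand-in for genuinely new facts; the function names and sample texts are invented for illustration.

```python
import re


def terms(text: str) -> set[str]:
    """Lowercased words of 4+ letters: a crude stand-in for 'facts'."""
    return set(re.findall(r"[a-z]{4,}", text.lower()))


def novelty_score(page: str, competitors: list[str]) -> float:
    """Fraction of the page's terms absent from every competing page.

    A toy proxy for information gain (hypothetical, not a real ranking signal).
    """
    page_terms = terms(page)
    if not page_terms:
        return 0.0
    seen = set().union(*(terms(c) for c in competitors))
    return len(page_terms - seen) / len(page_terms)


competitors = [
    "Email marketing improves retention for ecommerce brands.",
    "Retention improves when brands send email.",
]
page_a = "Email marketing improves retention for brands."             # restates the consensus
page_b = "Our survey of merchants found weekly email lifted retention."  # adds something new

print(novelty_score(page_a, competitors))  # 0.0: nothing new
print(novelty_score(page_b, competitors))  # about 0.71
```

The page that simply restates the consensus scores zero, while the one reporting its own findings scores high, mirroring the argument that original input is what makes content worth citing.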

Original research proves particularly valuable in this context. When you conduct studies, surveys, or analyses that produce unique data, your work becomes the natural source for AI systems to cite when users ask about that information.

As Francine Monahan notes in her piece on engineering relevant content, “LLMs tend to favor content that stands out and offers something original. If your content includes information that can’t be found anywhere else, like internal research, customer trends, or your own analysis, it helps establish your site as a trustworthy source.”

Another key lies in understanding LLM memory and how these systems store and retrieve information. AI models don’t just process individual pieces of content; they build understanding through patterns across multiple high-quality sources. When your expertise consistently appears across various contexts and formats, it becomes embedded in how AI systems understand your topic area.

This understanding points to a fundamental shift in how we approach content creation entirely. The old model of tactical SEO optimization is breaking down under AI Mode. We’re moving beyond tweaking existing content to fix performance issues. Instead, brands need to build what Mike King calls “retrievable and reusable content artifacts that serve as input for machine synthesis.”

What this means in practice is completely different from traditional content marketing. Rather than creating individual blog posts or pages and hoping they rank, you’re building pieces that AI systems can pull apart and reconstruct to answer different questions. Think of it like providing Lego blocks instead of finished sculptures. AI systems take your expertise, break it down into components, and rebuild it in whatever format best serves the user’s specific query.
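The "Lego blocks" idea resembles a common preprocessing step in retrieval systems: splitting a document into self-contained passages that each carry their topic and source. How any specific engine chunks your pages is not documented here, so treat this as a hypothetical illustration of heading-scoped chunking, with invented names (`Chunk`, `chunk_by_heading`) and an example URL.

```python
import re
from dataclasses import dataclass

HEADING = re.compile(r"#{1,6}\s+(.*)")  # markdown ATX heading, e.g. "## Pricing"


@dataclass
class Chunk:
    source_url: str  # attribution travels with every block
    heading: str     # the topic or question this block answers
    text: str


def chunk_by_heading(markdown: str, source_url: str) -> list[Chunk]:
    """Split a markdown article into heading-scoped, self-contained chunks."""
    chunks: list[Chunk] = []
    heading, body = "Introduction", []

    def flush() -> None:
        text = "\n".join(body).strip()
        if text:  # skip headings with no content beneath them
            chunks.append(Chunk(source_url, heading, text))

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the previous section before starting a new one
            heading, body = match.group(1).strip(), []
        else:
            body.append(line)
    flush()
    return chunks


doc = "Intro paragraph.\n## What is GEO?\nGEO definition.\n## Why it matters\nReasons here."
for c in chunk_by_heading(doc, "https://example.com/guide"):
    print(f"[{c.heading}] {c.text} ({c.source_url})")
```

Each chunk answers one question on its own and still points back to its source, which is the property that lets a block be reused in a synthesized answer without losing attribution.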

This requires planning your content as an interconnected system rather than isolated pieces. Each article, case study, and data point becomes part of a larger structure that AI can navigate and reference. When someone asks about your industry, the AI doesn’t just find one page from your site. It synthesizes insights from multiple pieces of your content to create a comprehensive answer that positions you as the definitive source.

The goal shifts from getting traffic to individual pages to becoming the reference point that AI systems consistently return to when they need authoritative information in your space.

This new era rewards those who create authentic expertise at scale, combining AI efficiency with human judgment. The goal is to become the reference point AI systems trust, users return to, and competitors can’t match.

Winning in GEO means shifting from publishing to relevance engineering (r19g). You’re no longer just creating content. You’re building systems of influence that persist across discovery platforms.

This means your content team needs to get comfortable with original research, cross-platform relationship building, and measuring authority signals rather than just traffic metrics.
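Measuring authority signals can start simply. A minimal sketch, assuming you have already collected, for a fixed set of prompts, the domains each AI answer cited (by hand or from whatever tooling you use). The function names, domains, and answers below are hypothetical.

```python
from collections import Counter


def citation_share(answers: list[list[str]], domain: str) -> float:
    """Fraction of sampled AI answers that cite `domain` at least once."""
    if not answers:
        return 0.0
    hits = sum(1 for cited in answers if domain in cited)
    return hits / len(answers)


def top_cited(answers: list[list[str]], n: int = 3) -> list[tuple[str, int]]:
    """Most-cited domains, counting each domain at most once per answer."""
    counts = Counter(d for cited in answers for d in set(cited))
    return counts.most_common(n)


# Hypothetical sample: cited domains for four AI answers to tracked prompts.
answers = [
    ["example.com", "wikipedia.org"],
    ["competitor.com"],
    ["example.com", "competitor.com", "example.com"],
    ["reddit.com"],
]

print(f"example.com cited in {citation_share(answers, 'example.com'):.0%} of answers")
print(top_cited(answers, n=4))
```

Tracking this share over time, per platform, captures visibility that never shows up as a website visit.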

The brands that embrace this shift will become the default references in their category. Not just in Google. Not just in ChatGPT. Everywhere discovery happens.

Because in the age of AI-mediated discovery, volume means nothing if you lack authority, and content means nothing if it doesn’t demonstrate genuine expertise that AI systems recognize and trust.

If your brand isn’t being retrieved, synthesized, and cited in AI Overviews, AI Mode, ChatGPT, or Perplexity, you’re missing from the decisions that matter. Relevance Engineering structures content for clarity, optimizes for retrieval, and measures real impact. Content Resonance turns that visibility into lasting connection.

Schedule a call with iPullRank to own the conversations that drive your market.

The appendix includes everything you need to operationalize the ideas in this manual: downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.

The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.

iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.
