
People are searching differently. Google still owns the lion’s share of the search market, but organic search is transforming rapidly, driven by advances in machine learning and natural language understanding, and that transformation is fundamentally changing how we search.

Search engines like Google are increasingly effective at interpreting and answering complex, conversational queries that previously would have required multiple searches and clicks.

Elizabeth Reid, Google’s VP of Search, champions the shift, noting, “AI in search is making it easier to ask Google anything and get a helpful response with links to the web,” and highlighting AI Overviews as “one of the most successful launches in search in the past decade.”

Similarly, in late 2024, Alphabet CEO Sundar Pichai hinted at even deeper upcoming changes, stating at the New York Times DealBook Summit, “Search itself will continue to change profoundly in ’25. We are going to be able to tackle more complex questions than ever before… You’ll be surprised, even early in ’25, the newer things search can do compared to where it is today.”

Pichai’s forecast was realized a few months later with Google’s rollout of AI Mode.

Such advancements are fundamentally reshaping search behavior. And contrary to what many SEOs may want you to believe, conversational search platforms are designed to satisfy search intent (at least according to Google).

Meanwhile, traditional keyword searches are losing ground. More accurate answers call for rich, nuanced prompts that carry deep contextual information, and this is shifting the very nature of search from quick lookups to iterative conversational exploration. (Those quick lookups still have value in the appropriate context, however.)

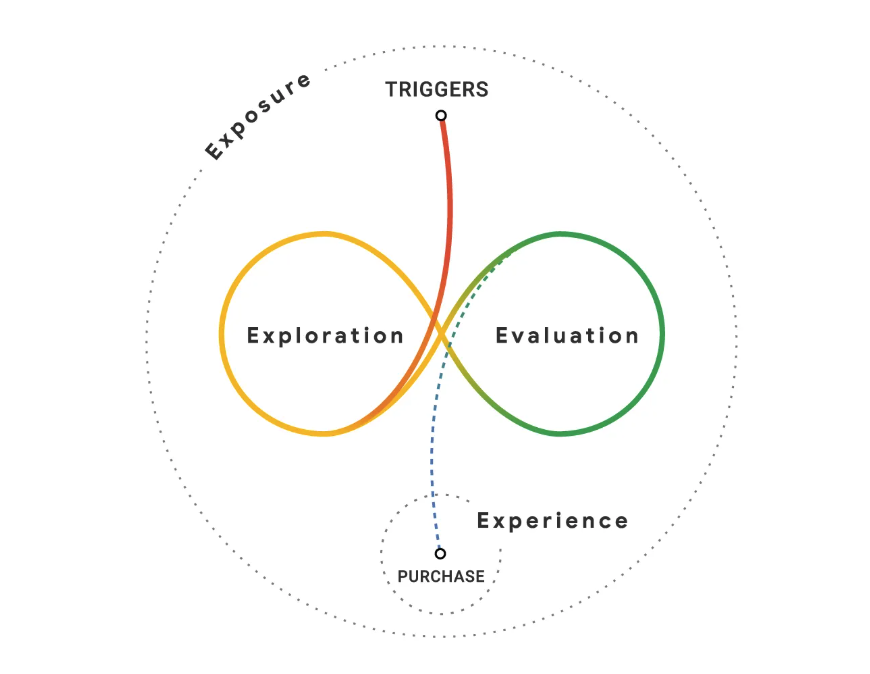

Search behavior is becoming conversational, interactive, and exploratory, reflecting something akin to Google’s 2020 concept of the “messy middle,” where users navigate complex decision journeys through continuous back-and-forth interactions.

But given issues like AI hallucinations and content stolen from publishers, we must confront critical questions about trust and reliance on AI-generated outputs.

As people increasingly accept AI-generated responses without verifying their sources (often out of sheer convenience), brands face strategic questions about editorial guidelines, implicit trust dynamics, and even conversational bias. Prompts, whether intentionally or not, guide the responses, shape search interactions, and influence individual decisions. If everyone gets a different answer to their personalized, contextualized questions, what are brands supposed to do?

There’s also an upside here, however: The traffic that reaches a website after engaging with generative search results tends to be highly qualified and intentional, which creates clearer, more actionable conversion opportunities.

Brands need to recognize this and adapt by optimizing their visibility within AI-generated contexts today — particularly Google’s AI Overviews — while strategically preparing for deeper integration with conversational search behaviors tomorrow.

This chapter explores these behavioral shifts in detail, highlighting how brands can anticipate conversational bias, leverage iterative discovery journeys, and foster trust within AI-driven search environments, ultimately aligning with human values and user expectations in this generative era.

Let’s not sugarcoat it: Adoption of AI Search is skyrocketing, and clicks are disappearing.

People are not going to jump through all of the hoops required to avoid AI Search. (You can’t turn off AI Overviews, so even if you hate them, you can’t avoid them.) And frankly, research indicates they are increasingly satisfied with the answer Google gives them right there in the results.

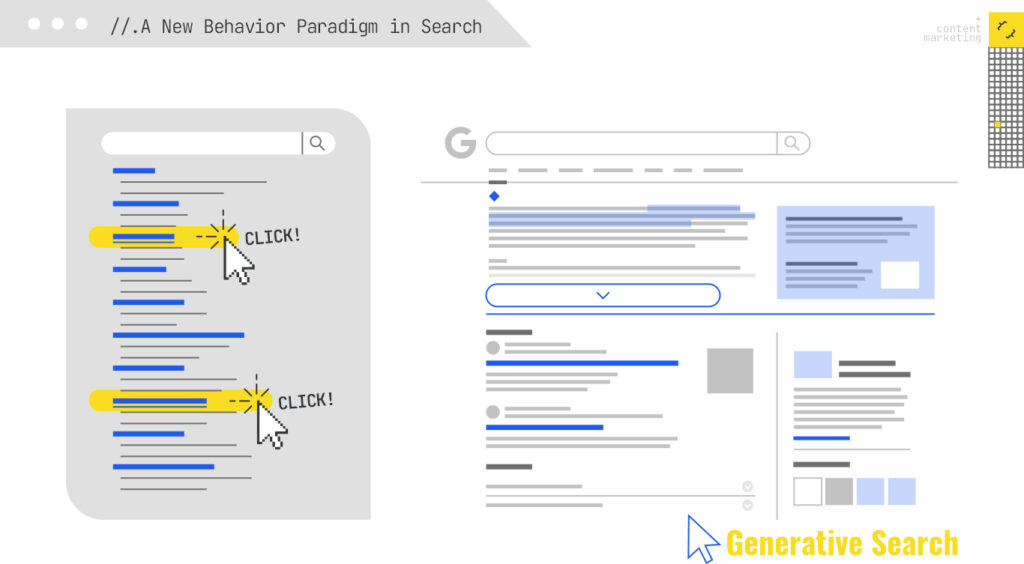

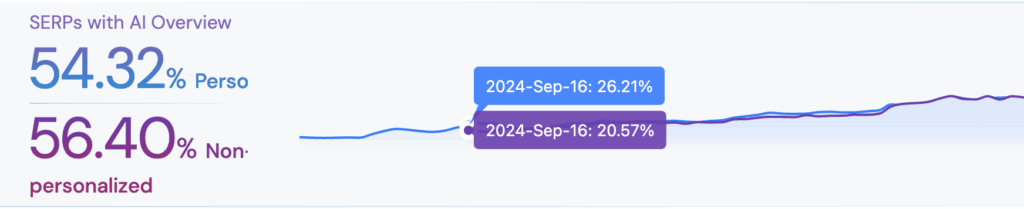

AI Overviews are synthesized, AI-generated summaries built to fulfill the query without sending people elsewhere (unless they’re going to buy your product or service). And they are now showing up at the top of Google search results more often than not.

They take up space, deliver direct answers, and push traditional organic listings deep below the fold—sometimes by over 1,500 pixels. It’s a total redistribution of attention.

Here’s what that looks like in practice:

Why is this happening? Because people are adjusting. They’re retraining themselves not to browse. Instead, they scan the AI output, get what they need, and bounce — and often end their session entirely.

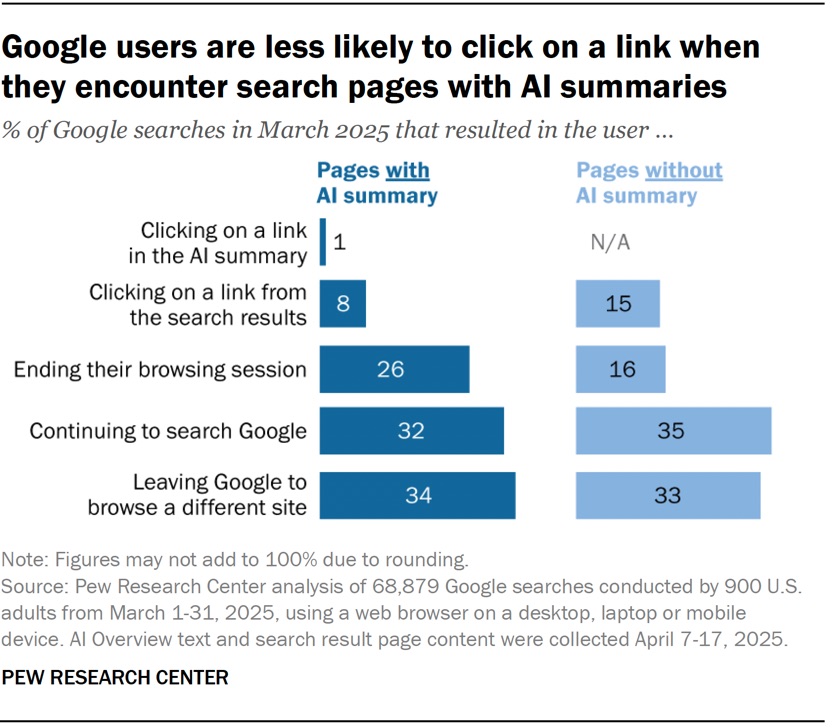

Pew Research reports that users clicked a link inside an AI summary on only 1% of visits to pages that showed one, and ended their browsing session entirely on 26% of those pages.

If users aren’t clicking but they’re still satisfied, what does that tell us?

It tells us the changes in search behavior are already happening. Google is collecting engagement data, watching abandonment rates drop, and concluding that generative answers work. The model is giving people what they want: immediate, frictionless synthesis.

Next up? They’ll bring ads to AI Mode. If those ads generate as much revenue as traditional search, or more, the inevitable next step is AI Mode as the default version of search.

Now here’s the uncomfortable part.

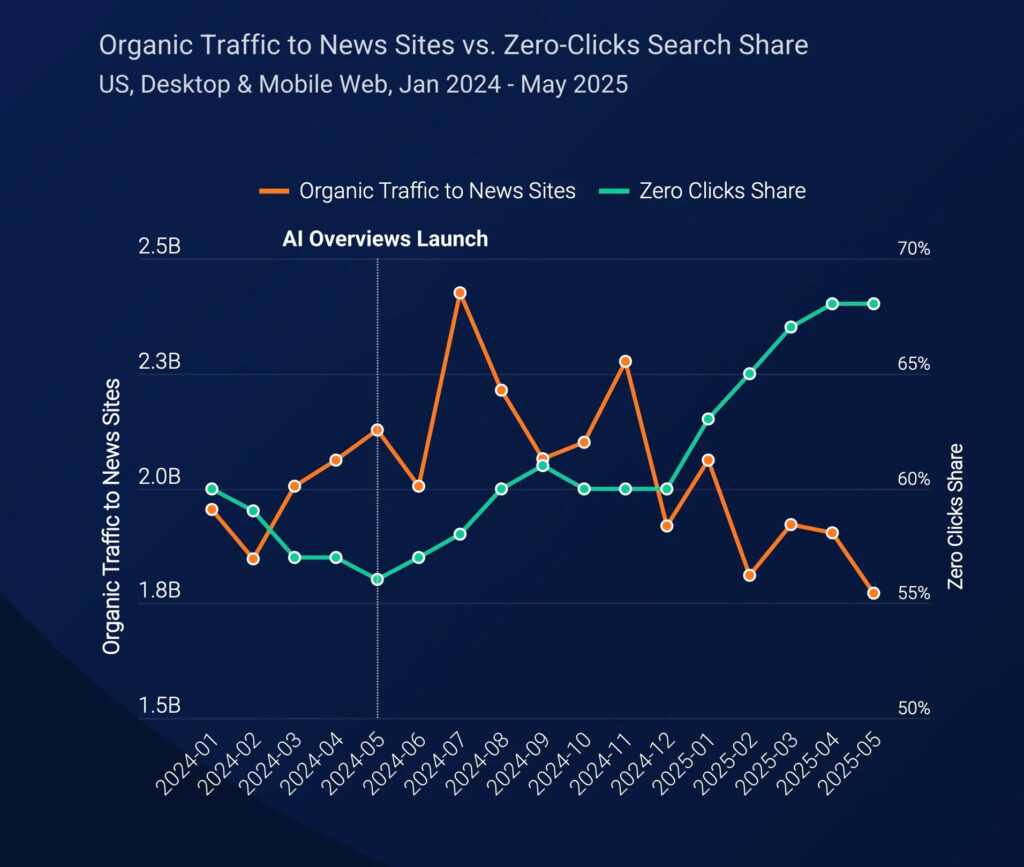

If users prefer AI-generated summaries over visiting the actual source, then publishers are no longer part of the value chain. They’re the raw material. That breaks the unspoken agreement that has powered the open web for decades: You create quality content; Google sends you traffic.

But if no one enforces that social contract (i.e., no legislation, no user backlash, no drop in satisfaction), Google, OpenAI, and Perplexity will keep moving forward. And people will follow.

It’s a rewiring of search behavior.

And while other discovery platforms like TikTok and Reddit are increasing their share of the pie, Google is still the front door. That door just looks a lot more like a chatbot now.

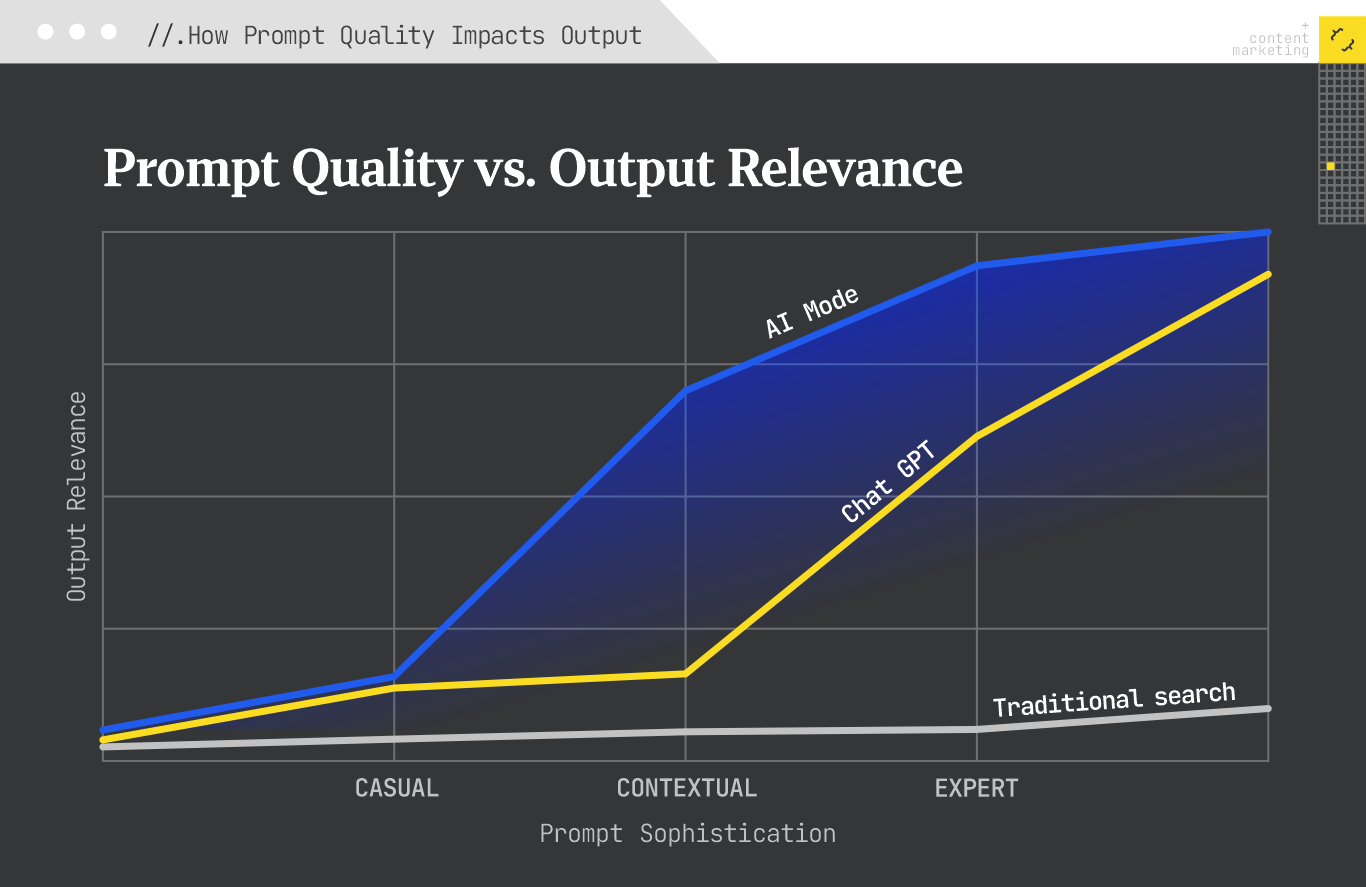

Search has always been a form of self-expression. But with generative AI in the mix, your ability to get the right answer depends on how well you can articulate the question. One vague prompt can return something generic. A precise, context-rich prompt? That gets you gold.

Here’s a simple truth about AI Search: What you put in shapes what you get out. Garbage in, garbage out. Yet most people treat AI prompts like keyword search. That’s what they’re used to. Search engines have trained them to use “keywordese.”

Let’s break that down:

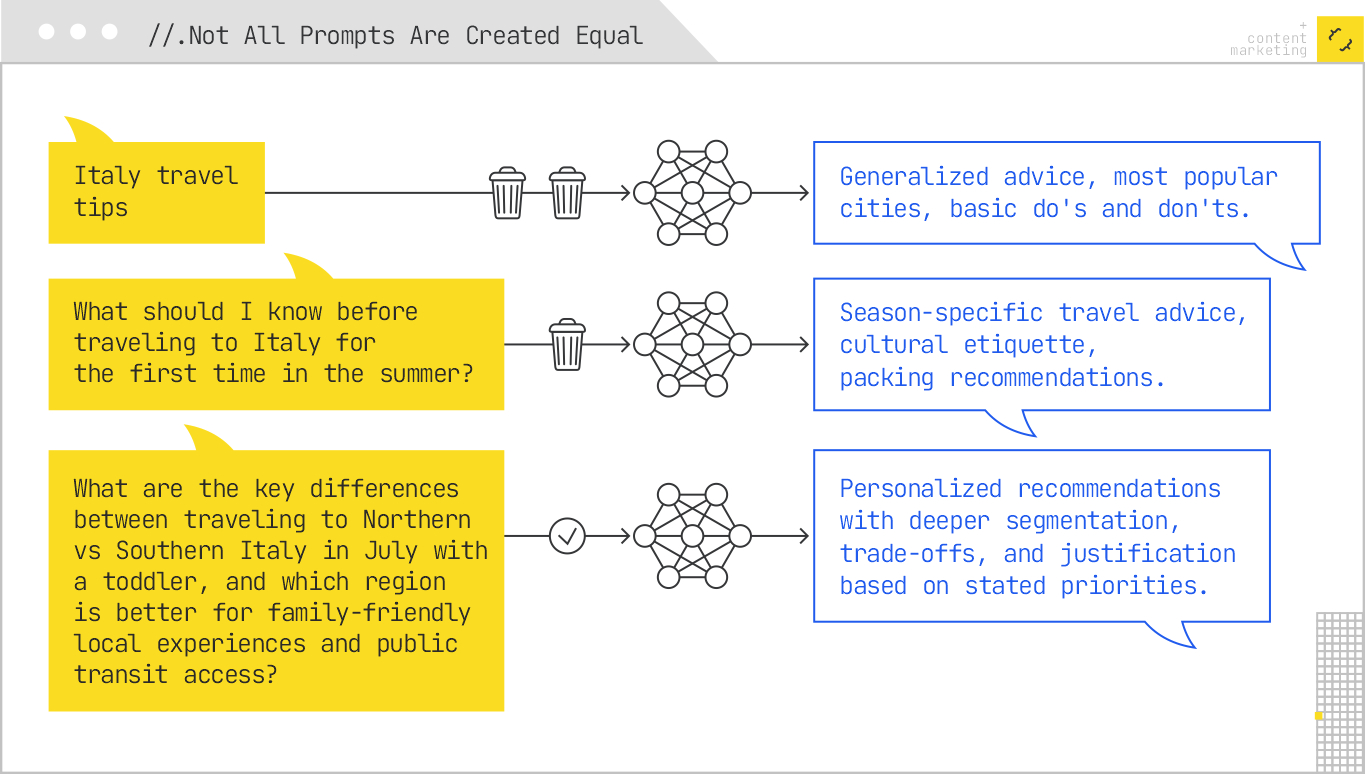

| Prompt Quality | Example | Output Quality |
| --- | --- | --- |
| Casual prompt | “Italy travel tips” | Generalized advice, most popular cities, basic do’s and don’ts. |
| Intermediate prompt | “What should I know before traveling to Italy for the first time in the summer?” | Season-specific travel advice, cultural etiquette, packing recommendations. |
| Advanced prompt | “What are the key differences between traveling to Northern vs Southern Italy in July with a toddler, and which region is better for family-friendly local experiences and public transit access?” | Personalized recommendations with deeper segmentation, trade-offs, and justification based on stated priorities. |

In practice, it takes time to write a longer prompt. There’s still friction there. But a better answer requires context:

A well-structured prompt for AI Search includes:

As AI Search platforms get smarter, they increasingly reward specificity. The more context a prompt offers, the more accurate and tailored the synthesis becomes. That doesn’t mean most people will be using longer prompts, but over time, we can expect search behavior to change.
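To make the “more context, more specific” idea concrete, here is a minimal Python sketch that layers optional context onto the casual Italy prompt from the table. It is purely illustrative: the `PromptContext` fields (season, travelers, priorities) and the `build_prompt` helper are hypothetical names invented for this example, and no AI Search platform exposes a prompt builder like this.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptContext:
    """Hypothetical context a searcher could add to a bare topic."""
    topic: str
    season: Optional[str] = None
    travelers: Optional[str] = None
    priorities: List[str] = field(default_factory=list)


def build_prompt(ctx: PromptContext) -> str:
    """Assemble a prompt; with no context it stays plain 'keywordese'."""
    context = []
    if ctx.season:
        context.append(f"I'm traveling in {ctx.season}.")
    if ctx.travelers:
        context.append(f"I'm traveling with {ctx.travelers}.")
    if ctx.priorities:
        context.append("My priorities: " + ", ".join(ctx.priorities) + ".")
    if not context:
        return ctx.topic  # a casual, keyword-style query
    return f"{ctx.topic}. " + " ".join(context)


casual = PromptContext(topic="Italy travel tips")
advanced = PromptContext(
    topic="Italy travel tips",
    season="July",
    travelers="a toddler",
    priorities=["family-friendly local experiences", "public transit access"],
)

if __name__ == "__main__":
    print(build_prompt(casual))    # Italy travel tips
    print(build_prompt(advanced))
```

Every extra field gives the system one more constraint to align to instead of inferring, which is the same gap the table shows between the casual and advanced rows.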

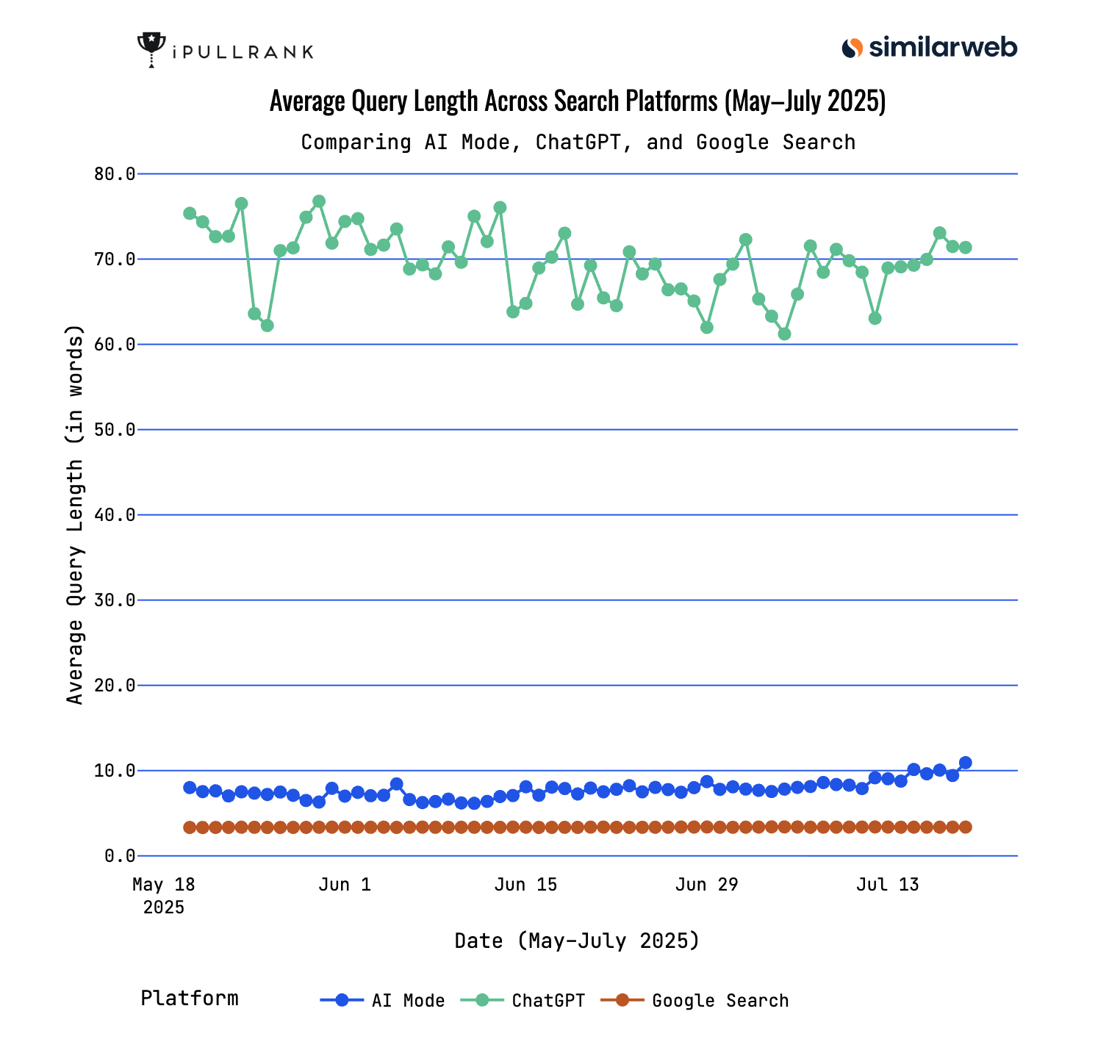

We are seeing subtle indications of that behavior shift via early AI Mode data:

People searching on AI Mode use slightly more words than those using traditional Google Search, but significantly fewer words than those using ChatGPT. That’s likely due to unfamiliarity with the functionality of AI Search.

AI Mode and search-enabled ChatGPT filter and weigh the web based on how your prompt sets the stage. With a vague query, they infer your intent. With a strong prompt, they align to it precisely.

That’s why seasoned users (especially researchers, analysts, and SEOs) use more sophisticated prompts to:

This is where we’re headed: Prompt fluency is the new search literacy.

Over time, AI Search platforms will reduce the friction of prompting by pulling context from everything they already know about the person searching. Depending on the tool, that could include your device type, location, search history, preferences, past chats, and even behavior patterns. The more context the system has upfront, the less you’ll need to spell out in the prompt itself.

Both Google and ChatGPT already provide local search results tailored to your location and search intent. We see different results in Google depending on whether you’re searching from a computer or a phone.

All the data these tools collect from you will enhance your search experience, but this also raises concerns about data privacy and whether the best answers are what you want to hear versus what you need to hear.

Search used to be a guessing game.

You’d punch in a few keywords, scan a wall of blue links, maybe click one or two, then head back to the search bar when the result wasn’t quite right. Rinse and repeat. It was a clunky back-and-forth. Less about finding the answer, more about figuring out how to ask the question the way the engine wanted.

Search is no longer a one-and-done transaction. It’s a dialogue, one that unfolds over multiple turns as users clarify, refine, and expand on their original queries. This shift toward iterative discovery is quietly, yet fundamentally, reshaping how we understand search behavior, content performance, and optimization strategies.

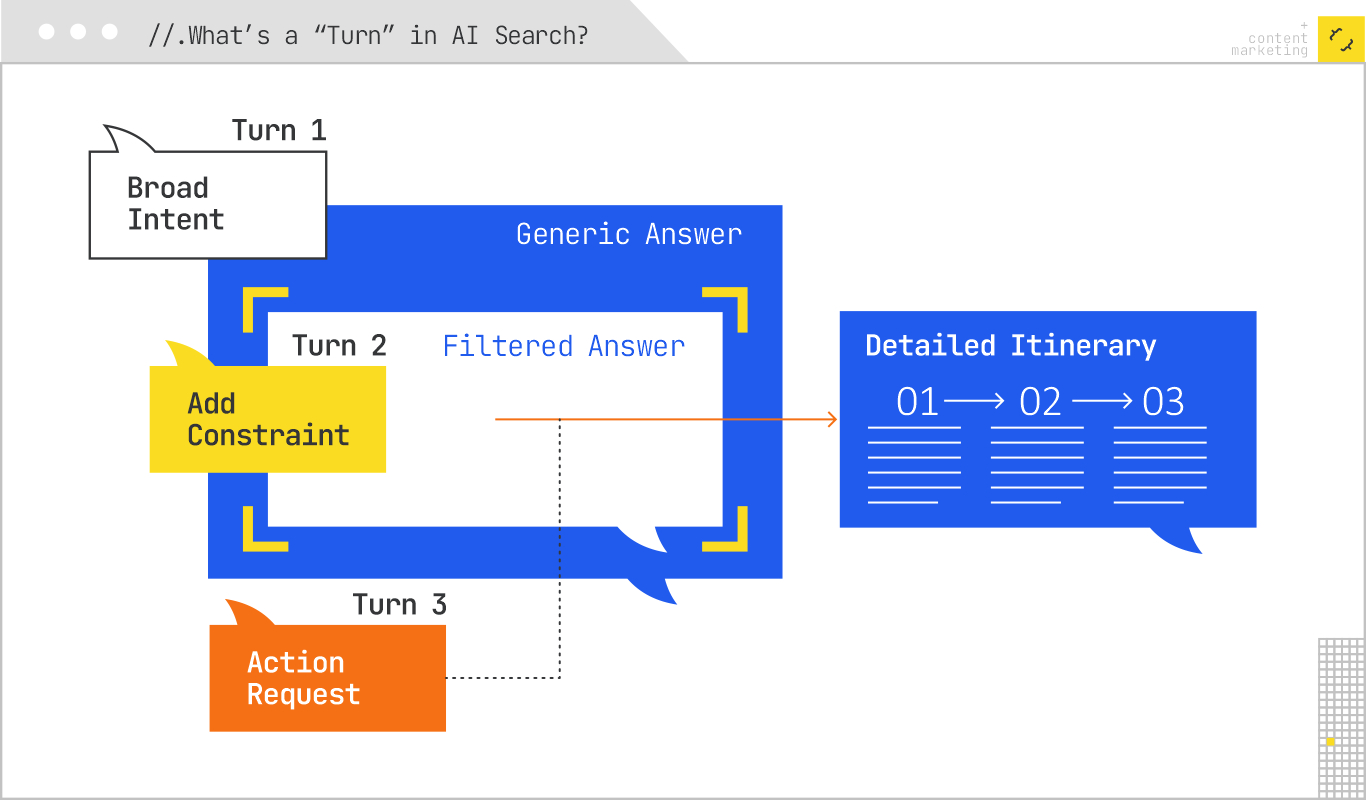

A turn is a single back-and-forth between the user and the AI:

According to LLM monitoring software Profound, in August 2025:

Most people are having a brief exchange at this point.

In multi-turn search, context compounds. Each turn builds on the last. And that’s where things get interesting.

Whereas traditional search relied on short, disconnected queries (“best toddler bikes”), generative platforms invite follow-ups (“what about ones that are easy to store?” … “are any under $100?” … “what colors are available?”). Each new turn deepens the session’s understanding of your intent.
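One rough way to picture “context compounds”: in chat-style interfaces, a follow-up like “are any under $100?” only makes sense because earlier turns travel with it. The Python sketch below is a hypothetical illustration, not how AI Mode, ChatGPT, or Perplexity actually work internally. The `SearchSession` class and its methods are invented for this example, the role/content message shape loosely mirrors a convention common in chat APIs, and the answers are placeholder strings rather than real model output.

```python
from typing import Dict, List

Message = Dict[str, str]


class SearchSession:
    """Hypothetical multi-turn session that keeps every prior turn."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def context_for(self, question: str) -> List[Message]:
        """Messages a model would see for a new turn: all prior turns plus the question."""
        return self.history + [{"role": "user", "content": question}]

    def add_turn(self, question: str, answer: str) -> None:
        """Record one completed back-and-forth (user question and AI answer)."""
        self.history.append({"role": "user", "content": question})
        self.history.append({"role": "assistant", "content": answer})


session = SearchSession()
session.add_turn("best toddler bikes", "<placeholder: answer listing several bikes>")
session.add_turn("what about ones that are easy to store?", "<placeholder: answer narrowed to foldable models>")
ctx = session.context_for("are any under $100?")

if __name__ == "__main__":
    print(len(ctx))  # 5: two earlier questions, two answers, and the new question
```

The third question carries no product category on its own; “toddler bikes” and “easy to store” reach the model only through the accumulated history, which is why each turn narrows the answer rather than starting over.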

This behavior aligns with what we saw in Google’s 2020 “messy middle” study that we referred to earlier: People gather, filter, and compare in loops before taking action. AI Search just accelerates and condenses that loop into a single interface.

Perplexity CEO Aravind Srinivas recently said that users on that platform often start with general questions, then progressively narrow in on specifics.

“[As a user] you ask a question, you get an answer… But users do follow up — often narrowing or adjusting based on what they see.”

Rather than starting over with a new search, users iterate naturally—each prompt shaped by the last response. It’s conversational, fluid, and cognitively closer to how people think when they’re learning or comparing.

Here’s a sample three-turn search flow showing how a typical AI Search journey builds context and moves a user toward action:

User Goal: Get an overview of the possibilities.

Search Behavior Change: In traditional search, this would be several separate keyword queries (“Austin attractions,” “Austin BBQ,” “Austin music venues”). AI Search collapses it into one broad, synthesized answer.

User Goal: Filter based on constraints.

Search Behavior Change: No need to re-enter the query from scratch; context from Turn 1 is retained.

User Goal: Create a ready-to-use plan.

Search Behavior Change: The AI is now functioning like a personalized trip planner—combining search, filtering, and action initiation in a single flow.

This is the outcome we hope to see, but the current conversational search experience is far from perfect. In practical use, results from AI Mode, ChatGPT, or Perplexity often fall short in terms of accuracy, sourcing, or depth.

Recommendations can still be inconsistent, outdated, or shaped by the AI’s training data rather than the freshest or most authoritative insights.

Right now, they’re “good enough” for many users: fast, confident, and convenient. And because the outputs keep getting marginally better with every model update, that’s often all it takes for people to stick with them instead of clicking deeper. The risk for brands is that “good enough” will eventually mean users never click through to you at all. Your expertise is still in the answer, but the AI gets the credit and the customer relationship.

So, how does it improve with a more personalized result?

People are already treating AI-driven answers as if they’re authoritative, often without verifying them. Due to automation bias, people tend to “accept AI output without question,” particularly when it’s framed in confident, natural language. This isn’t surprising: Humans are hardwired to value efficiency, and as long as the answer feels complete, we stop searching.

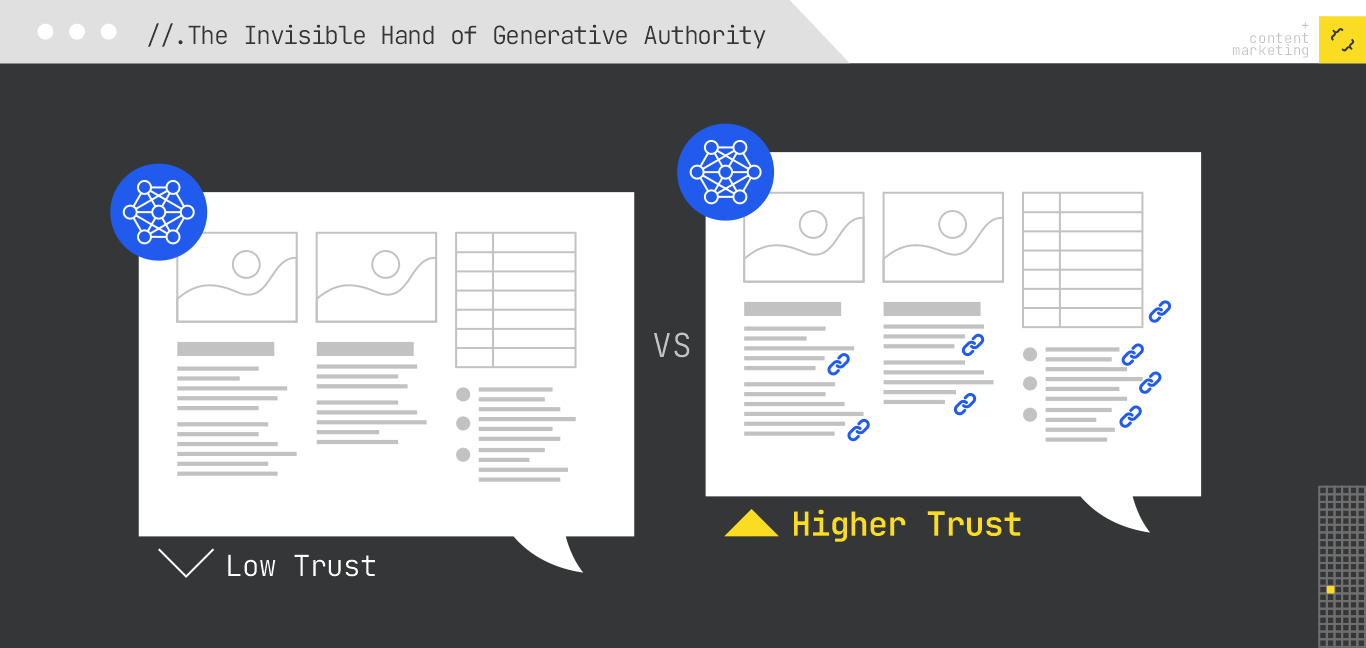

A large, randomized experiment by Haiwen Li and Sinan Aral of MIT tested how design choices shape trust in AI Search. Its findings: People trust AI Search less than traditional search by default, but links and citations significantly increase trust, even when those links are wrong or hallucinated. Signaling uncertainty or low confidence reduces trust. In short, presentation details can inflate misplaced confidence.

And everyone’s susceptible, regardless of their education level. Despite the assumption that more educated people possess a higher level of critical thinking, participants with higher levels of education (college degree or higher) were more likely to trust GenAI information, and significantly more willing to share it, than those with no college degree were.

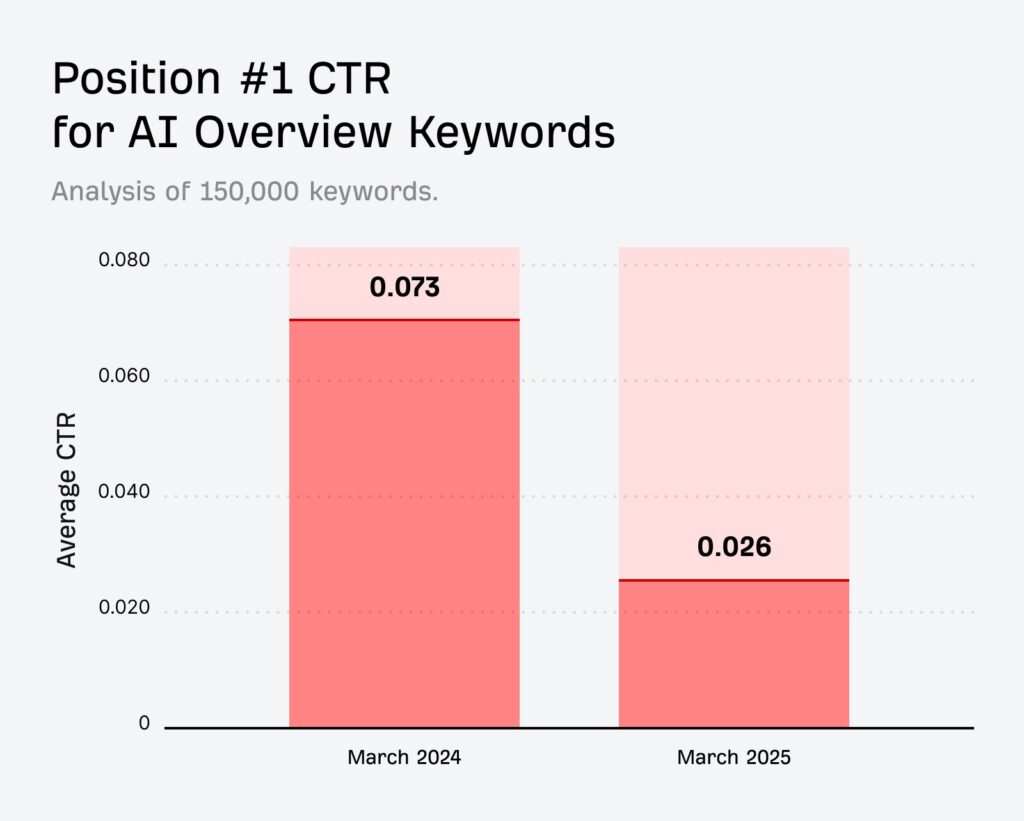

Independent reporting reinforces this risk. A Choice Mutual audit found a 57% error rate in Google AI Overviews for life-insurance queries, yet the summaries still look convincing to lay readers. And a Pew analysis shows behavior shifting alongside trust: When an AI summary appears, users click traditional links about half as often (8% vs. 15% of visits).

Strategic implications:

However, it’s important to be aware of the potential impact of new model releases: An updated LLM can change everything. We should therefore expect volatility in the quality of citations.

Recent research from AirOps on the state of AI Search found:

The trust dynamic involved in AI Search becomes even more potent when combined with context retention. Once a platform decides you’re a credible source, its ability to recall and reuse that trust in future responses compounds your visibility.

AI systems like ChatGPT and Google’s AI Mode maintain continuity in two primary ways:

Google AI Mode is leveraging personal context from across your account — search history, Gmail, Drive, YouTube viewing habits, even calendar info — to deliver intuitive, personalized responses. If you search for “Things to do in Brooklyn this weekend,” it can recommend venues based on your itinerary, previous reservations, and stated interests like live music or local food. AI Mode will also provide answers based on past searches and entire search journeys.

While OpenAI doesn’t pull from your Gmail or calendar, ChatGPT does retain conversational context within a session. It remembers what you asked previously and responds accordingly — like a human conversant. If you began with, “Summarize this article about electric car battery life,” and then later added, “Now explain cost comparisons for home charging,” ChatGPT would adapt its next response to your earlier prompt — making the entire session coherent.

In ChatGPT (with memory on), this context can persist beyond a single session. You can ask follow-ups days later, and it still “remembers” the background. Google’s AI Mode appears to do this within the session only—for now. But persistent context is likely coming there as well.

This trust-context pairing raises new strategic questions:

In either case, your position in the AI’s “mental model” of trustworthy sources becomes as critical as any keyword ranking ever was.

The technical mechanics of personal context and memory are more than just engineering feats. Once an AI system knows who it’s talking to, those details don’t just help it remember your itinerary or summarize yesterday’s news — they begin to steer the entire search experience. What starts as convenience quickly turns into a customized information loop, where the AI decides what to highlight, omit, or frame based on what it’s already learned about you. This is where the conversation shifts from “how” AI remembers to “what” that memory does to the truth you see.

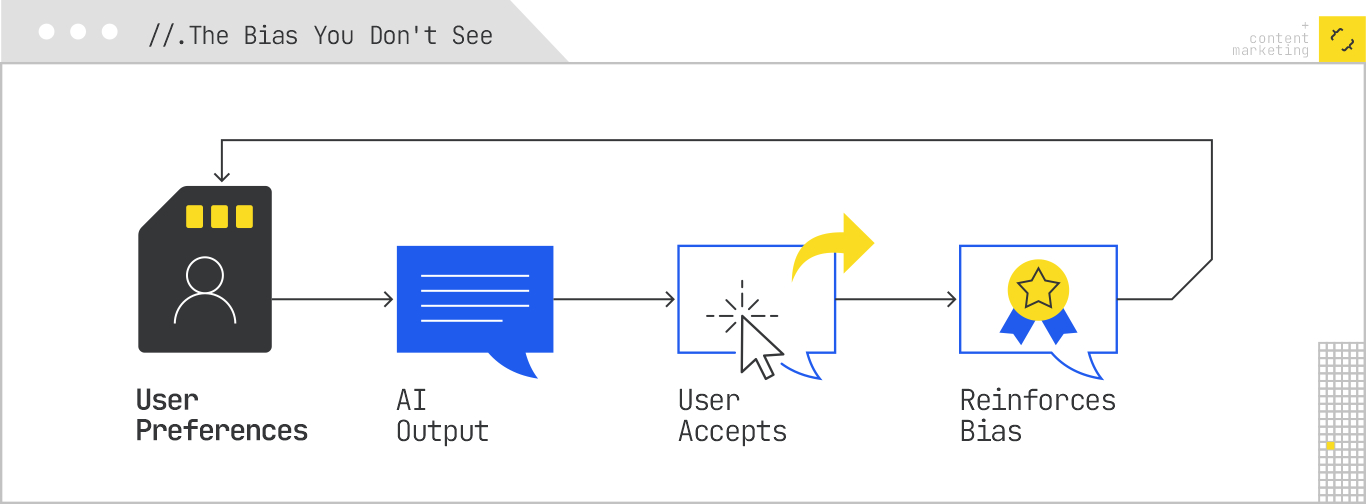

Generative search systems increase their trust in you the more they know about you. Your preferences — your location, browsing habits, even the persona you project — shape what the AI recommends. This creates a self-reinforcing loop: The outputs reflect your biases, the AI adapts to those biases, and you become more comfortable relying on answers that fit your worldview.

Garrett Sussman’s “AI Is Rewiring Search” presentation at SEO Week 2025 illustrated this feedback loop with live demonstrations, showing how identical prompts produced different results in Google AI Mode, ChatGPT, and Perplexity when tested with varying persona and location contexts. The takeaway was clear: Personalization doesn’t just adapt answers to you but rewrites your information environment.

These shifts are amplified by platform-level alignment strategies:

Each system’s alignment influences the expression of its bias. Claude’s consistency reflects its values charter, ChatGPT’s answers follow human-preference tuning, and Google’s outputs optimize for surface-level accuracy and click safety. When personal details or “contextual primes” are in play, these biases deepen. This is why two users can enter the same query and receive completely different recommendations — not just in tone or format, but in terms of what brands, sources, and perspectives are surfaced.

This is the “rewiring” in action: Your identity and prior behavior become inputs to the query architecture itself. Search is now a modal, context-driven experience, shaped by the AI’s interpretation of who you are.

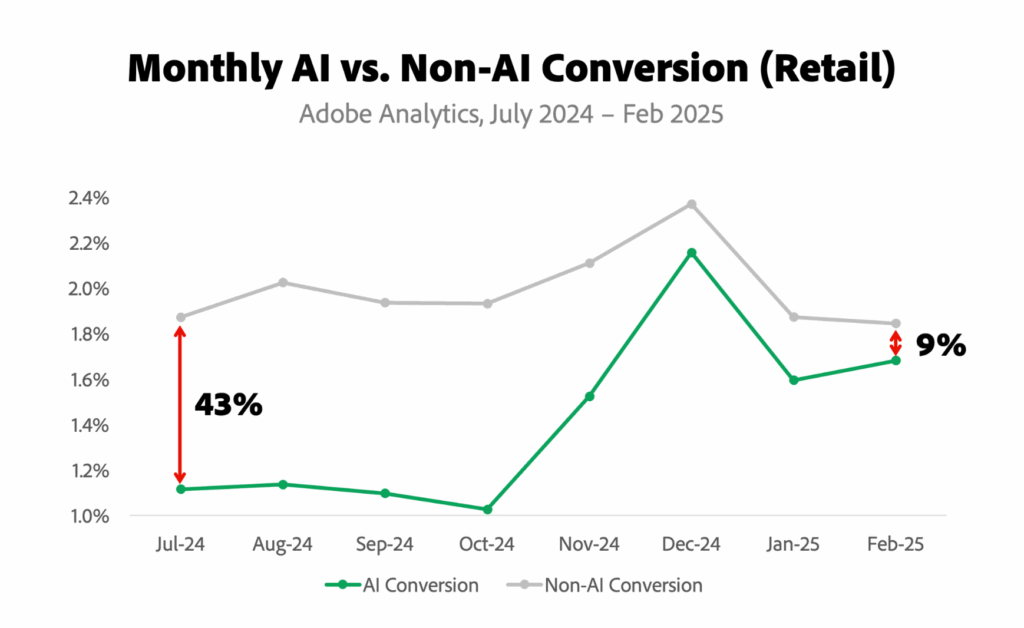

Once personal context and memory are embedded into the search experience, bias shifts from being about what is shown to shaping why a user clicks at all. That behavioral change is central to Google’s defense of AI Overviews. Click volume for certain queries has declined, but Google states that the clicks that remain are more intentional and more likely to lead to deeper engagement. In its view, an AI-powered snippet filters out quick-bounce visits and surfaces the users who are ready to take action.

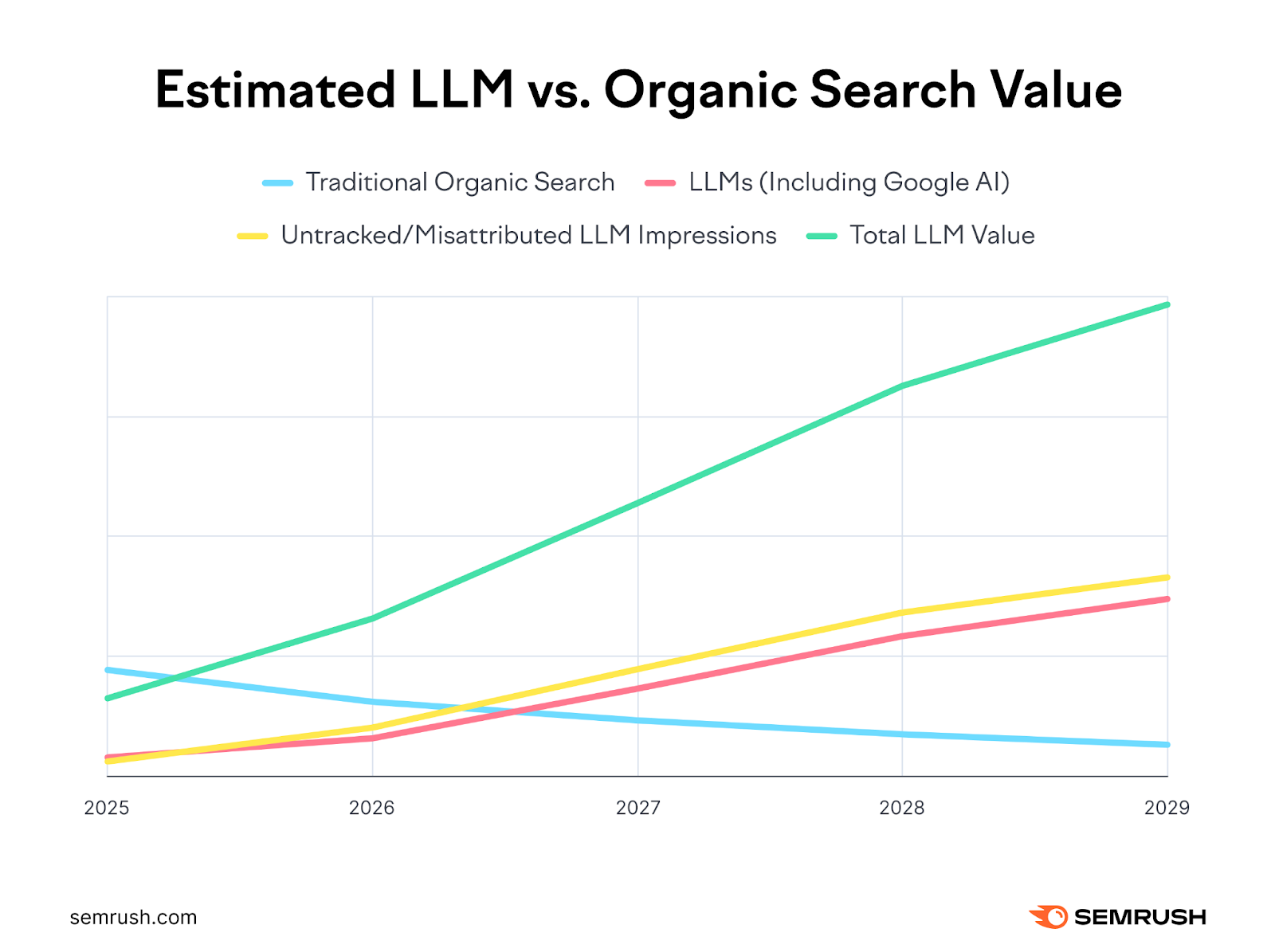

Source: Semrush AI Search study

Elizabeth Reid of Google described AI Overviews as “the most significant upgrade” in search history, asserting they lead to more searches and more valuable clicks. According to Google’s data, overall organic click volume remains stable year over year, and the proportion of “quality” clicks — defined by the company as those after which the user does not quickly return to the results page — has grown.

Google attributes this growth to AI Overviews’ ability to answer low-intent questions, such as “When is the next full moon,” in-line while still encouraging further exploration for more complex or transactional topics.

Many in the SEO community, however, remain skeptical. Independent traffic data often tells a different story, with some publishers reporting significant losses. Critics point out that Google is both controlling the feature and determining the metrics for its success. This raises questions about the objectivity of the quality-click narrative.

Even if Google’s data is accurate, the mechanics described create a structural change for SEOs and Generative Engine Optimizers.

Source: Adobe Analytics Report

Adapting to this shift requires a focus on being present in the spaces where AI Overviews end and user curiosity continues. That means:

In practice, this means moving away from a focus on ranking for a query, and toward ensuring visibility in the specific information gaps that drive clicks and build trust in this more competitive attention environment.

In summary, AI-driven search is changing how people find and use information, providing answers directly and often reducing the need to click through to websites. While this can mean fewer visits for many sites, there’s an argument that the visits that do come through tend to be more intentional, and thus more valuable.

Either way, since generative platforms have an ever greater influence on what people see as credible, brands need to show clear expertise and make it easy for AI systems to recognize and surface their content. The key is to focus on visibility in today’s AI Overviews, while also preparing for a future where AI-first search may be the main way people find information.

If your brand isn’t being retrieved, synthesized, and cited in AI Overviews, AI Mode, ChatGPT, or Perplexity, you’re missing from the decisions that matter. Relevance Engineering structures content for clarity, optimizes for retrieval, and measures real impact. Content Resonance turns that visibility into lasting connection.

Schedule a call with iPullRank to own the conversations that drive your market.

The appendix includes everything you need to operationalize the ideas in this manual, downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.


The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.


iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.