Now that you understand how Relevance Engineering drives content’s ability to rank in AI Search and LLMs, and how to engineer content for visibility, let’s discuss the art of Relevance Engineering. Gaining visibility in large language models means moving past traditional keyword mapping and providing content that aligns with queries at the level of meaning (the semantic level) rather than exact wording. Instead of focusing on keyword usage, the goal is to create content that is highly relevant to a user’s query and easy for search engines and LLMs to extract information from. Several factors shape how search engines judge relevance when they rank and serve AI-driven results. Let’s take a look at them.

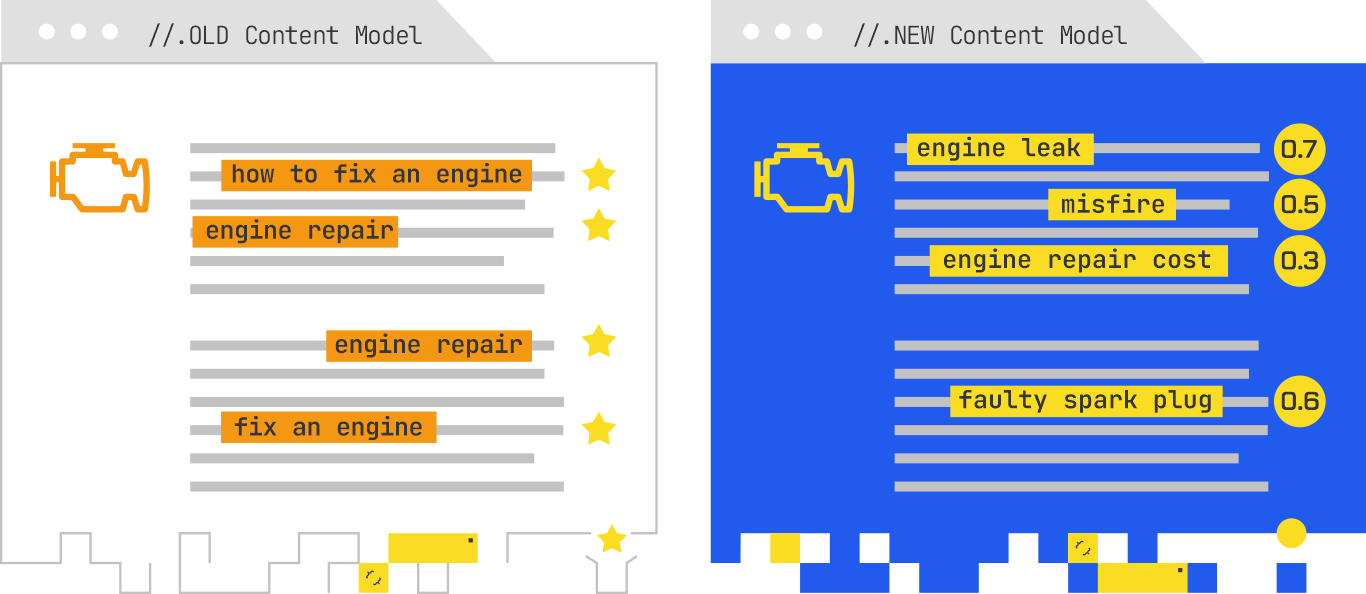

Search engines have long since evolved past the keyword density model. Repeating a specific keyword throughout a piece of content is no longer a viable way to signal its relevance for that keyword. Modern algorithms use language models like BERT and GPT rather than simply looking for keywords. They analyze entire passages to understand meaning, or semantic relevance.

Semantic scoring measures the conceptual and contextual relevance of a piece of content and goes beyond simple keyword matching. It assigns a numerical score that indicates how well the meaning of the content aligns with the keyword or topic.
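
One common way to turn “how well meanings align” into a number is cosine similarity between embedding vectors. The sketch below assumes the query and passages have already been converted to embeddings; the three-dimensional vectors are made-up values for illustration, and this is not a description of how any particular search engine computes its scores.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional embeddings with made-up values; real embedding
# models produce hundreds or thousands of dimensions.
query = [0.9, 0.1, 0.3]      # "how to fix an engine"
on_topic = [0.8, 0.2, 0.4]   # passage about diagnosing a misfire
off_topic = [0.1, 0.9, 0.0]  # passage about car insurance

print(f"on-topic:  {cosine_similarity(query, on_topic):.3f}")
print(f"off-topic: {cosine_similarity(query, off_topic):.3f}")
```

With these toy values the misfire passage scores roughly 0.98 and the insurance passage roughly 0.21, so the on-topic passage is the closer semantic match.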

For example, a piece of content on engine repair would include target keywords like “how to fix an engine” or “engine repair”, and under the old model it would repeat those phrases in key areas. In the semantic model, simply repeating keywords is not enough. The most relevant piece of content would also use related terms and phrases like “engine leak”, “engine repair cost”, “faulty spark plug”, or “misfire”. This demonstrates comprehensive coverage of the topic and increases the semantic score.
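
For a quick editorial check on whether a draft covers related subtopics, a simple term-coverage count can flag what is missing. This is a hypothetical helper and a crude lexical proxy, not a semantic score: it only finds exact phrases, so it misses synonyms and paraphrases that an embedding model would capture. The term list is the one from the engine-repair example.

```python
def related_term_coverage(text: str, related_terms: list[str]) -> tuple[float, list[str]]:
    """Return the share of related terms found in the text and the ones missing.

    A lexical checklist only: it flags subtopics a draft never mentions,
    but it is not a semantic score.
    """
    if not related_terms:
        raise ValueError("related_terms must not be empty")
    lowered = text.lower()
    missing = [term for term in related_terms if term.lower() not in lowered]
    coverage = (len(related_terms) - len(missing)) / len(related_terms)
    return coverage, missing


# Related terms taken from the engine-repair example.
RELATED = ["engine leak", "engine repair cost", "faulty spark plug", "misfire"]

stuffed = "Engine repair tips: how to fix an engine. Engine repair is easy if you know engine repair."
rich = ("Engine repair starts with diagnosis. A misfire often points to a faulty spark plug, "
        "while an engine leak may need a gasket. Engine repair cost depends on the part.")

print(related_term_coverage(stuffed, RELATED))
# → (0.0, ['engine leak', 'engine repair cost', 'faulty spark plug', 'misfire'])
print(related_term_coverage(rich, RELATED))
# → (1.0, [])
```

The keyword-stuffed draft repeats “engine repair” but touches none of the related subtopics, which is exactly the gap the semantic model penalizes.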

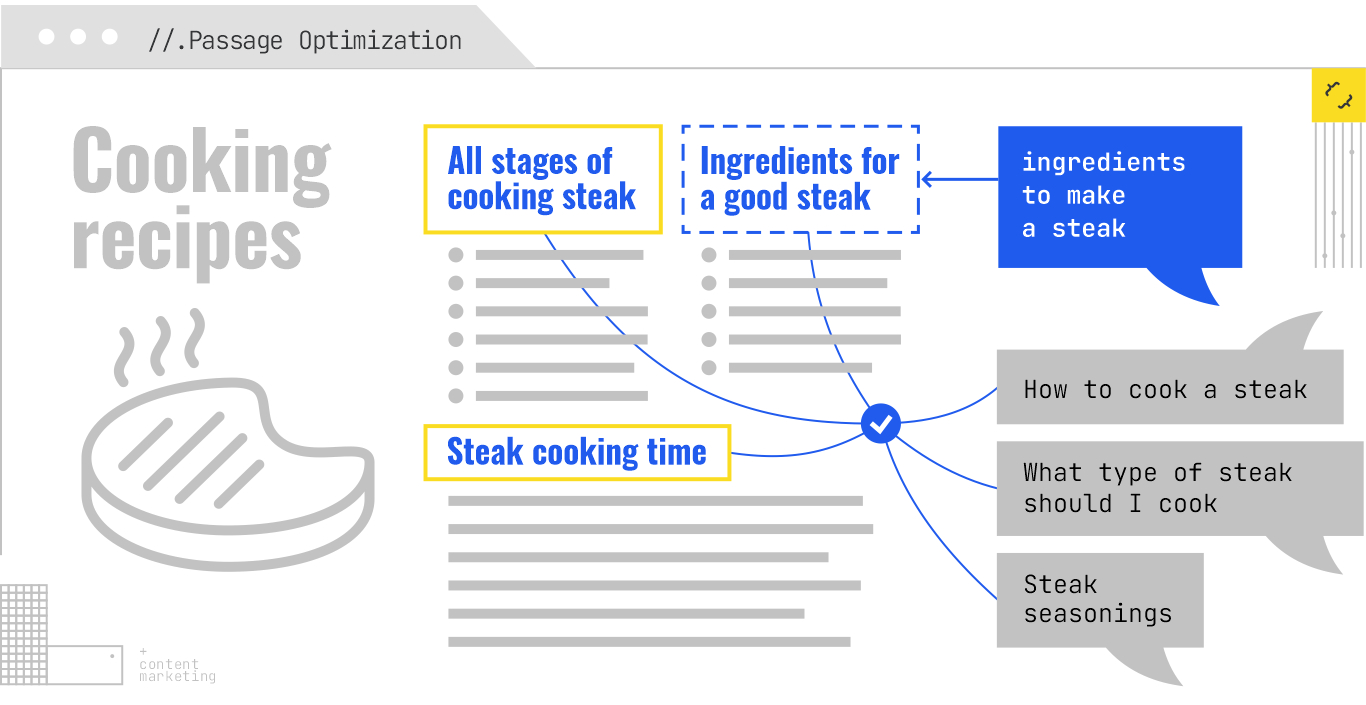

Passage optimization is semantic scoring in action. It structures content not only for relevance but also for extractability. AI search systems built on retrieval augmented generation retrieve information from many sources to assemble a response, pulling individual passages from a wide set of inputs to answer a query directly.

Optimizing for extractability means organizing content into clearly defined sections. Headings and subheadings should be clear, and passages should answer queries directly and succinctly. A query paired with the passage that answers it is called a semantic unit, and these units are used to power AI search.

For example, a recipe landing page has several sections of information, including ingredients, steps, and timing. Each section should be labeled with a clear, query-based heading like “How to cook a steak,” “What type of steak should I cook,” or “Steak seasonings.” A clear structure and content architecture allow search engines to find the exact passage that answers a search like “ingredients to make a steak.”
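
To see a page roughly the way a passage-level retriever might, you can split it on its headings so that each heading and the text beneath it form one unit. The sketch below assumes Markdown-style headings and a hypothetical recipe page built from the headings in the example; production systems chunk pages in many different ways (by token count, by HTML structure, with overlap), so treat this as one illustration rather than how any specific engine segments content.

```python
import re

# A Markdown heading line: one to six '#' characters, whitespace, then text.
HEADING = re.compile(r"^#{1,6}\s+(.*\S)\s*$")


def split_into_units(page: str) -> list[tuple[str, str]]:
    """Split a Markdown page into (heading, passage) pairs.

    Text before the first heading, and headings with no text under them,
    are skipped in this sketch.
    """
    units = []
    heading = None
    body = []

    def flush() -> None:
        # Save the current heading with its collected passage text.
        if heading is not None and body:
            units.append((heading, " ".join(body)))

    for line in page.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            heading, body = match.group(1), []
        elif heading is not None and line.strip():
            body.append(line.strip())
    flush()
    return units


# Hypothetical recipe page using query-based headings.
page = """# Steak Recipe
## What type of steak should I cook
A thick ribeye or strip steak works best.
## Steak seasonings
Kosher salt, black pepper, and garlic powder.
## How to cook a steak
Roast in a low oven, then sear in a hot skillet.
"""

for heading, passage in split_into_units(page):
    print(f"{heading} -> {passage}")
```

Each printed pair is a candidate semantic unit: a query-shaped heading plus the passage that answers it. The page title has no text directly under it, so it is skipped.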

Together, machine learning and content strategy create a new approach to content, one that moves from simple keyword insertion to engineering well-structured, semantically rich content that meets the needs of both users and search engines.

Semantic scoring and passage optimization both build on a broader concept: embeddings. Embeddings are numerical representations of a word, phrase, or document in a vector space, where items with similar meanings sit close to one another. Content Engineering in practice is about improving those embeddings, increasing the relevance of your words and phrases and their proximity to the queries they target within that vector space.
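
Proximity in vector space is what retrieval relies on: a retriever embeds the query and returns the passages whose vectors sit nearest to it. The sketch below shows a brute-force top-k search using cosine similarity. The passage texts and three-dimensional vectors are hypothetical, and real systems typically use a trained embedding model plus an approximate nearest-neighbor index rather than scoring every passage.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def top_k(query_vec: list[float], passages: dict[str, list[float]], k: int = 2) -> list[tuple[float, str]]:
    """Brute-force nearest-neighbor search: score every passage, keep the k best."""
    scored = ((cosine(query_vec, vec), text) for text, vec in passages.items())
    return heapq.nlargest(k, scored)


# Hypothetical passages with made-up 3-dimensional embeddings.
passages = {
    "A misfire is often caused by a faulty spark plug.": [0.9, 0.1, 0.2],
    "Engine repair cost depends on labor and parts.": [0.6, 0.7, 0.1],
    "Compare car insurance quotes online.": [0.05, 0.1, 0.95],
}
query = [0.85, 0.15, 0.1]  # hypothetical embedding of "why is my engine misfiring"

for score, text in top_k(query, passages, k=2):
    print(f"{score:.2f}  {text}")
```

The insurance passage sits farthest from the query in this toy space and drops out of the top two; improving a passage’s embedding means moving it closer to the queries it should win.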

Here are a few tactics to improve your content with Relevance Engineering:

AI is rapidly evolving, and marketers, content marketers, and creators have to think beyond the traditional user and consider how a new audience sees their work. LLMs view your content in ways that humans don’t, and being able to simulate that view is a powerful way to test whether your content engineering is effective. Two ways to do this are prompt injection and retrieval simulation.

Prompt injection occurs when a user supplies input crafted to manipulate an LLM into performing an unintended action, overriding its original instructions. It is primarily a security vulnerability, but it can also be a powerful tool for content marketers. By understanding how an LLM can be “injected” with new prompts, you can simulate how your content may be interpreted. This involves writing prompts that test the limits of how your content is understood.
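
In practice, these tests come down to a set of probe prompts that wrap your passage and ask a model how it reads it. The sketch below only builds the prompt strings; sending them to a model is left to whichever LLM API you use. The template names, their wording, and the NOT FOUND convention are hypothetical choices for illustration, not a standard.

```python
from string import Template

# Hypothetical probe templates; adjust the wording for the model you test.
PROBES = {
    "summary": Template("In one sentence, what is this passage about?\n\n$passage"),
    "answerable_questions": Template(
        "List the questions a searcher could fully answer using only this passage.\n\n$passage"
    ),
    "grounded_answer": Template(
        "Using only the passage below, answer: $query\n"
        "If the passage does not contain the answer, reply NOT FOUND.\n\n$passage"
    ),
}


def build_probe_prompts(passage: str, query: str) -> dict[str, str]:
    """Fill every probe template with the passage and the target query."""
    # substitute() ignores keyword arguments a template does not use.
    return {name: tpl.substitute(passage=passage, query=query) for name, tpl in PROBES.items()}


prompts = build_probe_prompts(
    "A misfire is often caused by a faulty spark plug.",
    "What causes an engine misfire?",
)
print(prompts["grounded_answer"])
```

Comparing the model’s answers to these probes with what you intended the passage to say shows where its interpretation drifts.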

Retrieval simulation takes prompt injection further. Instead of testing only the output, it simulates the entire process of how LLMs find, use, and serve your data. A Retrieval Augmented Generation (RAG) system retrieves your content from its knowledge base and then uses that data to generate an answer.

Retrieval simulation involves:

Retrieval simulation is a powerful tool to help marketers better understand how LLMs see and use their content. It has a variety of applications and can help you proactively identify and fix gaps in your content.
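
A very small retrieval simulation can be run before involving any model: write the queries you expect to win, score each one against every semantic unit on the page, and flag queries whose best match is weak. The sketch below is a hypothetical harness that uses word overlap as a stand-in for embedding similarity, so it misses synonyms a real retriever would catch. The stopword list, the 0.5 threshold, and the sample units are assumptions for illustration.

```python
import re

# Assumed stopword list; a real harness would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "to", "of", "in", "on", "for",
             "how", "what", "is", "do", "i", "my", "should"}


def tokens(text: str) -> set[str]:
    """Lowercase word tokens with stopwords removed."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def overlap_score(query: str, unit_text: str) -> float:
    """Share of the query's content words that appear in the unit (0.0 to 1.0)."""
    query_tokens = tokens(query)
    if not query_tokens:
        return 0.0
    return len(query_tokens & tokens(unit_text)) / len(query_tokens)


def simulate_retrieval(
    queries: list[str],
    units: list[tuple[str, str]],
    threshold: float = 0.5,
) -> dict[str, tuple[str, float, bool]]:
    """For each query, find the best-matching unit and flag weak matches as gaps."""
    if not units:
        raise ValueError("need at least one (heading, passage) unit")
    report = {}
    for query in queries:
        best_heading, best_score = max(
            ((heading, overlap_score(query, f"{heading} {passage}")) for heading, passage in units),
            key=lambda pair: pair[1],
        )
        report[query] = (best_heading, round(best_score, 2), best_score < threshold)
    return report


units = [
    ("What type of steak should I cook", "A thick ribeye or strip steak works best."),
    ("Steak seasonings", "Kosher salt, black pepper, and garlic powder."),
    ("How to cook a steak", "Roast in a low oven, then sear in a hot skillet."),
]
queries = ["best cut of steak to cook", "steak seasonings", "how long to rest a steak"]

for query, (heading, score, is_gap) in simulate_retrieval(queries, units).items():
    label = "GAP" if is_gap else "ok"
    print(f"{label:<4}{score:.2f}  {query!r} -> {heading!r}")
```

Here the page covers cuts and seasonings, but nothing answers how long to rest a steak, so that query is flagged as a gap to fill. Swapping `overlap_score` for cosine similarity on real embeddings would keep the rest of the loop the same.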

A couple of questions to ask as you simulate content retrieval:

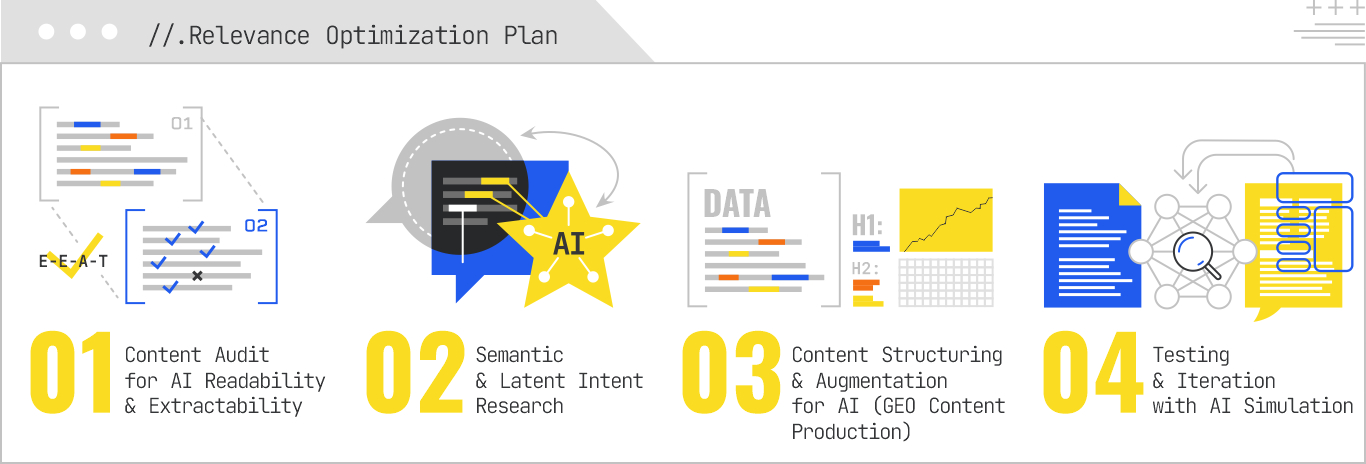

The process of Relevance Engineering is no different from the standard auditing, research, and optimization process; only the inputs and outputs differ. Here is a Relevance Optimization Plan you can implement across your content to better understand its place in AI search. This is a starting point, and iPullRank can support your team with content auditing, keyword research, and content creation for this new AI-powered world.

If your brand isn’t being retrieved, synthesized, and cited in AI Overviews, AI Mode, ChatGPT, or Perplexity, you’re missing from the decisions that matter. Relevance Engineering structures content for clarity, optimizes for retrieval, and measures real impact. Content Resonance turns that visibility into lasting connection.

Schedule a call with iPullRank to own the conversations that drive your market.

The appendix includes everything you need to operationalize the ideas in this manual: downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.

The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.

iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.