Search as we know it is in the midst of a metamorphosis, Kafka-cockroach style, and nobody knows what it will look like on the other side.

The classic standalone search engine of 10 blue links is a thing of the past. The current iteration of Google is a blended amalgamation of generated slop, ad oversaturation, zero-click features, and a tiny smattering of organic links and listings. In AI Mode and ChatGPT, search is essentially a feature within a general-purpose AI assistant.

In the not-so-distant future, users might not “go search” on a website at all; instead, they’ll simply converse with an AI assistant that handles search tasks in the background. This final chapter examines how AI-first discovery transforms search, the emergence of pure Generative Experience Optimization (GEO), and cutting-edge developments (from Google’s Project Mariner and Astra to OpenAI, Amazon, and Meta) that indicate an assistant-driven future of search.

In practical terms, advanced GEO addresses the probabilistic nature of search. Everything is uncertain. Technical limitations are shifting and breaking.

We just don’t know how this all will play out, but there are clues.

One of the biggest shifts in future search is moving beyond the keyboard. Typing is a form of communication, but it’s also a source of friction. We can’t type as fast as we can think, look, gesture, or talk.

Voice search, visual search, and even “embodied” search are expanding how people find information. AI assistants are enabling truly multimodal interactions – you might speak a query, show the AI what you’re looking at through a camera, or even rely on wearables that continually interpret your surroundings.

Voice search is a running joke in the world of SEO, but it is already ubiquitous via digital assistants like Siri, Alexa, and Google Assistant. But next-gen AI? That’s making voice interactions far more powerful.

Google’s Project Astra, for example, features native audio dialogue that can understand various accents and languages in real time and respond fluidly in 24 languages.

This lets users ask complex questions naturally, as if chatting with a human, and get immediate answers read back to them. Meanwhile, Amazon’s new Alexa+ (a generative AI overhaul of Alexa) takes it further. It’s more conversational and capable, able to discuss anything from organizing your day to finding products to buy, all through natural voice dialogue.

Voice interfaces backed by LLMs make search feel like talking to a smart friend rather than typing keywords.

Visual search is likewise becoming mainstream. We’re moving into an age where you can point your phone (or smart glasses) at something and ask the AI assistant for information. Google’s advancements in this space are significant: its Lens visual search can identify objects or translate text in images, and now Project Astra enables real-time Q&A via your camera feed.

In Google’s Search “Live” mode, you can tap a Live button and ask questions about what you’re seeing through your phone’s camera – Project Astra will stream live video to an AI model and instantly explain or answer questions about your view.

Discovery in physical spaces adds a completely new digital layer to our real-world engagement. Imagine looking at a landmark and asking your glasses for its history, or scanning a product and having the AI pull up reviews and best prices. Search is becoming sight-driven when needed, not just word-driven.

By embodied search, we refer to search capabilities integrated into our physical world and devices – essentially, search that’s aware of context and space. AR (augmented reality) glasses are a prime example. Google’s smart glasses prototype (born from Project Astra’s demo) shows how an AI assistant could “see the world as you see it” and overlay information or guidance in real-time.

In this mode, the AI is embodied in your environment, describing what’s around you, helping you navigate, or even performing tasks in physical contexts. For instance, an embodied search assistant could help a visually impaired user by identifying obstacles and signage, or guide a user through cooking by visually recognizing ingredients and giving step-by-step instructions. The assistant essentially becomes your eyes and ears when needed.

Crucially, these multimodal interactions demand that search engines (and content creators) accommodate non-text queries and results.

Search engines must index and understand images, audio, and 3D data just as well as text. For marketers and SEOs, it means ensuring content is accessible in all these forms, providing rich imagery, AR experiences, and voice-ready content, because the AI assistant of the future will favor content that it can present hands-free and eyes-free.

The companies at the forefront are investing heavily here: Google with Lens and Astra for vision, Amazon with Alexa’s new ability to navigate the web and apps “behind the scenes” for you, and Meta reportedly exploring search that works through conversational AI across Instagram and WhatsApp (imagine asking your messaging app to find a restaurant nearby via AI). The search box is no longer the only entry point. Our cameras, microphones, and sensors are becoming critical search inputs, reducing the friction of typing.

How comfortable are you with sharing all of your private data with these tech organizations to access a new level of search product?

Another hallmark of future search is extreme personalization. AI’s deep user understanding and large data context mean search results will increasingly be tailor-made for each individual. We’re not just talking about localized results or past search history; we’re talking about AI that knows you (your preferences, interests, past interactions) and uses that to deliver or even synthesize the exact information you need.

Google has unparalleled amounts of user data and is poised to leverage it in AI-driven search. The U.S. government is unlikely to break up such a powerful monopoly anytime soon, despite the various ongoing antitrust trials.

An AI-first search engine can consider your entire search and browsing history, your Gmail context, your YouTube views, etc., to craft results or answers that fit your personal context.

For example, if you ask, “What should I cook for dinner?” an AI-enhanced search could recall that you recently looked up BBQ recipes and that you rated a particular Kansas City sauce highly the previous week. It might then suggest a new barbecue recipe that uses that sauce and even show a summary from a cooking video you’d enjoy.

This hyper-personalization is an explicit goal in Google’s projects: Project Astra learns and retains user preferences to provide personalized answers. It uses your past interactions as context, employing deep reasoning and memory to tailor recommendations (say, shopping suggestions that match your style or dietary needs). In other words, the AI search agent builds a profile of what you like and adapts its answers to you, not a generic user.

OpenAI is also pushing in this direction. ChatGPT’s custom instructions, along with the memory feature rolled out in 2024, let the assistant remember user-provided details across sessions (preferences, writing style, frequent topics) and apply them to future answers. That means the same question can yield completely different responses for two users, because the assistant’s personalization layer shapes which sources it pulls, how it frames information, and what follow-ups it suggests. This personalization extends into search mode: if you’ve previously asked about “best CRMs for enterprise SaaS” and indicated a preference for open-source tools, ChatGPT can bias future search results toward that profile without you restating it. The long-term implication is that OpenAI’s assistant becomes a search filter for you as a persona, not just for a query.

Amazon’s Alexa+ is doing something similar on the home front. Alexa+ is described as highly personalized – she knows what you’ve purchased, what music you love, your preferred news sources, etc., and you can explicitly teach her more (like family birthdays, or “my daughter is vegetarian”). When you later search or ask for something, Alexa uses that knowledge to filter and enhance results. If you ask for a family dinner idea, Alexa will remember your daughter’s vegetarian diet and your partner’s gluten allergy and only suggest appropriate recipes, for instance. This level of personalization transforms search from “ten blue links that might fit most people” into one tailored answer or action that fits you.

Meta is also sitting on a goldmine of personal data across its social platforms, and it’s reportedly developing an AI search engine integrated with its apps to leverage that context. Because Meta’s AI (like the Meta AI chatbot in WhatsApp or Instagram) can know your social graph and interests, it could personalize answers heavily – e.g., recommending restaurants your friends have visited or tailoring news answers based on pages you follow. Hyper-personalization is a double-edged sword (amazing convenience, but raising privacy questions), yet it’s clearly where search is heading. Users will come to expect that their AI assistant “just knows” their needs.

From a strategic standpoint, content creators and SEOs will need to prepare for an era where everyone sees something slightly different. Optimizing for the “average user” becomes tricky when AI is curating results one person at a time. It puts even more emphasis on producing content that addresses specific niches or personas deeply (so the AI can match it to the right user profile). It also means trust and data ethics become vital – users must trust the assistant with their data to get these personalized benefits. But if done right, a hyper-personal AI search can be like a concierge who already knows your tastes. The future search engine might sift through millions of pieces of content and billions of data points, but deliver one answer that feels eerily made for you.

Hand-in-hand with personalization is the concept of memory in AI search assistants. Unlike a traditional search engine that treats each query as isolated, future AI assistants will retain context across multiple interactions and sessions. This means the AI remembers what you asked earlier today – or last week or even last year – and can use that context to inform current results. Search becomes a continuous conversation rather than one-off questions and answers.

We already see early signs of this in conversational AI. For example, ChatGPT can carry context within a single chat session – if you ask follow-up questions, it “remembers” what you were talking about. Future systems will extend this across sessions and devices. Project Astra introduces cross-session, cross-device memory as a core feature: it integrates different types of data and “remembers key details from past interactions”. You might start a conversation on your phone, then continue on smart glasses later in the day – Astra’s assistant will recall what you discussed and pick up right where you left off. This persistent memory enables far more intelligent assistance. For instance, you could ask “Hey, what was that Thai restaurant I searched last month that I wanted to try?” and the assistant will know exactly which one you meant because it has kept track of your past search intents.

Memory in search also unlocks proactive assistance. If the AI knows your context and retains it, it can anticipate needs. Amazon hints at this with Alexa+ being proactive – e.g., suggesting you leave early for an appointment if traffic is bad, or reminding you when a product you looked at is on sale. In search terms, an AI might remind you, “You asked me about digital cameras yesterday; do you want to see the top deals that came up since then?” This flips search from reactive (user-initiated) to a more continuous support role. Google’s vision of a “universal AI assistant” leans heavily on such memory: Sundar Pichai has discussed how future Google assistants will maintain long-term memory to enable ongoing conversations and multi-step tasks for users. In practical terms, it means you won’t have to repeat or rephrase context that you already provided – the assistant recalls it. If you spent a week planning a trip via your AI assistant, when you return a month later and say, “Book me a flight to that same destination in spring,” the assistant’s memory of the previous trip planning will let it understand exactly what to do.

For marketers, this memory aspect of search means user interactions with content might not be one-and-done. If someone consulted your website via an AI assistant, details from that content could live on in the assistant’s memory. Ensuring up-to-date information and making a strong positive impression in those interactions is key, because the AI might summarize or reuse that info later for the user. It also means new opportunities: content or answers can be delivered at the right time proactively. A user might not explicitly search “time to change car oil” but if months ago they asked about car maintenance schedules, the AI could proactively surface a reminder or relevant content (perhaps from an auto brand’s site) when the time comes. In essence, search with memory turns the AI into an ongoing research partner that grows more useful the more you use it. It’s a far cry from today’s forgetful search engines, and it will raise the bar for relevance and timeliness in content that AIs choose to remember.

As AI assistants take on more complex tasks, they can’t exist in isolation. They need to fetch data, use tools, and even work with other AI agents. Enter open protocols like the Model Context Protocol (MCP), an emerging standard aimed at making different AI systems interoperable by sharing context and capabilities.

MCP essentially provides a common language for AI models and tools to talk to each other securely and efficiently. For search and discovery, this is a big deal: it means your AI assistant can tap into various databases, apps, and services on the fly, and carry the relevant context over.

Anthropic introduced MCP as a way to break AI systems out of data silos. Traditionally, hooking an AI up to a new data source or app required bespoke integration. MCP changes that by defining a universal protocol for connecting AI assistants to external data, content repositories, and services. For example, using MCP, an AI could retrieve information from your company’s internal wiki or a cloud drive, then use that context when answering a query – all through a standardized approach. In the search context, MCP might enable an assistant to seamlessly incorporate real-time personal or enterprise data into answers. If you search “status of Project X,” a personal AI could use MCP to query your project management app and respond with the latest updates, rather than just giving generic web results.
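To make the “standardized approach” concrete: MCP messages use JSON-RPC 2.0, and a client asks a server to run one of its tools with a `tools/call` request. The sketch below only builds that message with Python’s standard library. The tool name `get_project_status` and its `project` argument are hypothetical, since each MCP server defines its own tools. A real client would also perform the `initialize` handshake and handle the transport (stdio or HTTP), which are omitted here.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 message framing
        "id": request_id,        # lets the client match the server's reply
        "method": "tools/call",  # MCP method for invoking a server-exposed tool
        "params": {
            "name": tool_name,       # hypothetical: each server defines its tools
            "arguments": arguments,  # tool-specific inputs
        },
    }
    return json.dumps(message)


# "Status of Project X" routed to a hypothetical project-management MCP server
request = make_tool_call(1, "get_project_status", {"project": "Project X"})
print(request)
```

The assistant never needs bespoke glue for the project tool. It only needs to know the tool’s name and input shape, which an MCP server advertises through `tools/list`.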

What’s powerful is that MCP is designed to be two-way and persistent. It’s not just the AI pulling data; data sources can push updates to the AI as well. This means the AI can maintain a live context. Consider how search results could improve when an AI remains connected to, say, your calendar, email, and favorite news sources continuously. Ask “what should I do today?” and the AI can answer with a synthesis: your schedule (from Calendar via MCP), the weather (from a weather API), and maybe relevant news for your industry (from a news feed) – all integrated because the protocol allowed it to maintain those context connections. Essentially, MCP and similar efforts aim to give AI assistants dynamic memory and tool use across platforms, which will make search queries vastly more powerful and personalized.
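The “push” side of that live context works through notifications. In MCP, a client that has subscribed to a resource (via `resources/subscribe`) can receive a `notifications/resources/updated` message from the server, then fetch fresh contents with `resources/read`. The sketch below is a simplified assumption rather than a full client and shows only that handling logic. The `calendar://today` URI and the dictionary standing in for the assistant’s context cache are hypothetical.

```python
import itertools
import json

_request_ids = itertools.count(1)  # simple id source for outgoing requests


def handle_incoming(raw: str, context: dict) -> list:
    """Route one incoming JSON-RPC message; return follow-up requests to send."""
    msg = json.loads(raw)
    follow_ups = []
    # JSON-RPC notifications carry no "id"; requests and responses do.
    if "id" not in msg and msg.get("method") == "notifications/resources/updated":
        uri = msg["params"]["uri"]
        context.pop(uri, None)  # drop the stale cached copy of this resource
        follow_ups.append(json.dumps({
            "jsonrpc": "2.0",
            "id": next(_request_ids),
            "method": "resources/read",  # fetch the fresh contents
            "params": {"uri": uri},
        }))
    return follow_ups


# Hypothetical calendar resource the assistant already has cached
context = {"calendar://today": "9:00 standup"}
push = json.dumps({
    "jsonrpc": "2.0",
    "method": "notifications/resources/updated",
    "params": {"uri": "calendar://today"},
})
print(handle_incoming(push, context))
```

Because the server announces changes instead of the assistant polling, the answer to “what should I do today?” can reflect a meeting that moved five minutes ago.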

From an industry standpoint, open standards like MCP prevent a future where each tech giant’s AI is a closed ecosystem. Instead, your Google assistant, your car’s AI, and your work’s AI tool could collaborate, passing context under a common protocol. As MCP matures, we might see AI agents that can “search” on each other’s behalf – e.g. your personal AI handing off a sub-task to a specialized AI (like a legal research agent) and then aggregating the results. This obviously overlaps with another protocol we’ll discuss next, Google’s A2A. The key difference is MCP focuses on connecting to data and tools (vertical integration of context into one agent’s workflow), whereas A2A is about multiple agents working together (horizontal integration between agents). Both are complementary and together will fundamentally amplify what “search” can do. Instead of a user manually stringing together queries across sites and apps, the AI agents will coordinate in the background to deliver a multi-faceted answer or complete a multi-step task, all enabled by these interoperability standards.

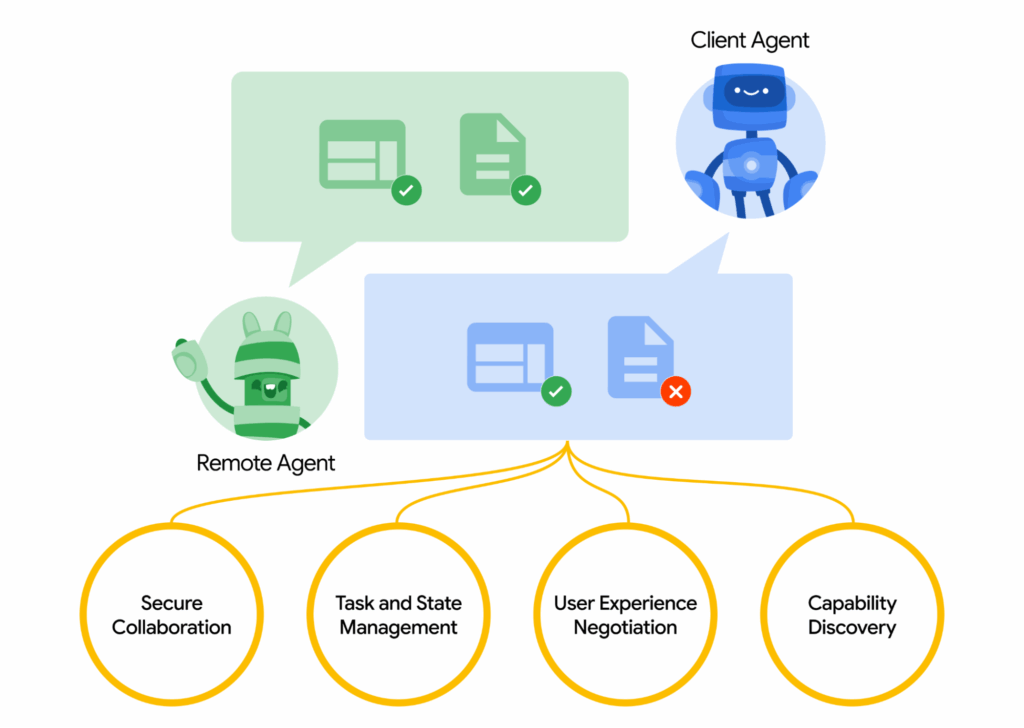

In the quest to make AI assistants more capable, Google has spearheaded another crucial piece of infrastructure: Agent-to-Agent communication, known as A2A. Launched as an open protocol in early 2025, A2A is about enabling different AI agents to communicate, collaborate, and delegate tasks to each other.

Think of it as putting multiple specialized minds together. Where MCP connects an AI to data/tools, A2A connects AI agents themselves, even if they’re built by different providers. This can supercharge search by breaking complex queries into parts that different agents handle in parallel – all coordinated seamlessly behind the scenes.

Here’s how it works in simple terms: A2A defines a standard way for one agent (the “client” agent) to find and instruct another agent (the “remote” agent) to do something.

For example, suppose you ask your AI assistant, “Plan my weekend trip and book everything I’ll need.” That’s a multi-step request involving flights, hotels, maybe restaurant reservations. With A2A, your primary assistant could spin up or message a travel-booking agent to handle flights and hotels, a restaurant agent to find popular dining spots at your destination, maybe even a deal-finder agent to apply discounts. Each of these agents can communicate their progress and results back to the main assistant, which then presents you with a cohesive answer or itinerary. All of this happens via the A2A protocol’s structured communication: agents advertise their capabilities (so they can discover who can do what), they share “task” objects and updates, and they exchange any necessary context or results (called “artifacts”).
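The discover, delegate, and collect loop described above can be modeled in a few lines. This is a deliberately simplified, in-process sketch loosely based on A2A concepts: agent cards that advertise skills, tasks that carry a status, and artifacts returned as results. It is not the A2A wire format, which runs JSON-RPC over HTTP. The agent names, skills, and the lambda “agents” are hypothetical stand-ins for remote services.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Set


@dataclass
class AgentCard:
    """What a remote agent advertises so other agents can discover it."""
    name: str
    skills: Set[str]
    run: Callable[[str], str]  # stand-in for calling the agent over the network


@dataclass
class Task:
    """A unit of delegated work, tracked by status, producing artifacts."""
    skill: str
    request: str
    status: str = "submitted"
    artifacts: List[str] = field(default_factory=list)


def discover(cards: List[AgentCard], skill: str) -> Optional[AgentCard]:
    """Capability discovery: return the first agent advertising the skill."""
    return next((card for card in cards if skill in card.skills), None)


def delegate(cards: List[AgentCard], tasks: List[Task]) -> List[Task]:
    """Client agent: route each sub-task to a capable remote agent."""
    for task in tasks:
        agent = discover(cards, task.skill)
        if agent is None:
            task.status = "failed"  # no agent advertises this skill
            continue
        task.status = "working"
        task.artifacts.append(agent.run(task.request))
        task.status = "completed"
    return tasks


# Hypothetical specialist agents for the weekend-trip example
cards = [
    AgentCard("travel-agent", {"flights", "hotels"}, lambda r: f"Booked: {r}"),
    AgentCard("dining-agent", {"restaurants"}, lambda r: f"Reserved: {r}"),
]
plan = delegate(cards, [
    Task("flights", "round trip, Friday to Sunday"),
    Task("restaurants", "dinner for two, Saturday"),
    Task("deals", "discount codes for the hotel"),
])
for task in plan:
    print(task.skill, task.status, task.artifacts)
```

The sketch runs sub-tasks one after another for simplicity, while real remote agents could work in parallel. The “deals” task fails because no deal-finder agent is registered, which is exactly the gap that capability discovery exposes. The primary assistant would then merge the completed artifacts into one itinerary.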

Google’s introduction of A2A signals an understanding that no single monolithic AI can do everything perfectly. Instead, a network of interoperable agents can tackle user needs collaboratively. For search, this means queries that were once too broad or complex can now be handled by orchestrating multiple agents. For instance, a user might effectively ask a chain of questions in one go (“find me a house, negotiate the price, and set up internet service when I move”). Rather than one AI trying to be a jack-of-all-trades, a real estate agent-AI, a contract lawyer-AI, and an ISP setup-AI could each work on their piece and communicate results. A2A provides the rules and structure (such as a shared language and playbook) for this cooperation, including capability discovery (identifying the right agent for a sub-task) and user experience negotiation (ensuring the format of responses, whether text, image, form, etc., aligns together).

Google has rallied over 50 partners (enterprise software firms, startups, etc.) behind A2A’s development. The Linux Foundation is even hosting it as an open project. This broad support suggests that soon, we’ll see a rich ecosystem of interoperable agents. Imagine an AI assistant in Search that, when posed a question like “How do I improve my business’s online presence?”, might consult an SEO expert agent, a social media strategy agent, and a web analytics agent, then merge their insights for you. From the user’s perspective, it’s still one “answer,” but under the hood, multiple AIs cooperated. The scale and accuracy of search will explode as specialized agents handle pieces of the puzzle, with each agent leveraging its own expertise or proprietary data, all coordinated through A2A.

Importantly, A2A also hints at a future beyond single-platform dominance. Perhaps your personal AI on your phone (maybe from Apple) could use A2A to enlist help from a Google agent or an OpenAI agent when needed, and vice versa. This opens possibilities for cross-platform search experiences where, say, an AI in your car can ask your home assistant to check something on your PC. All these agents talking and sharing means the user gets the best of all worlds. For search marketers, it means thinking about how your content or service might be utilized by specialized agents. Providing APIs and structured data that agents can consume is likely to become as important as traditional SEO metadata. If agent X needs to query your site’s info to complete a task, being A2A-ready (or MCP-ready) could determine whether your solution is included in the AI-driven answer.
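For a brand, being “A2A-ready” starts with publishing an Agent Card: a JSON document that tells other agents what your agent can do and where to reach it. The sketch below builds one in Python. The brand, URL, and skill are hypothetical. The field names (`skills`, `capabilities`, `defaultInputModes`, and so on) reflect our reading of the A2A specification, which is still evolving, including the well-known path where the card is served. Check the current spec before publishing.

```python
import json

# Hypothetical brand agent; field names follow our reading of the A2A Agent Card.
agent_card = {
    "name": "Example Outfitters Product Finder",
    "description": "Answers stock and sizing questions for Example Outfitters.",
    "url": "https://agents.example.com/a2a",  # hypothetical A2A endpoint
    "version": "1.0.0",
    "capabilities": {"streaming": False, "pushNotifications": False},
    "defaultInputModes": ["text/plain"],
    "defaultOutputModes": ["text/plain", "application/json"],
    "skills": [
        {
            "id": "check_stock",
            "name": "Check stock",
            "description": "Looks up current inventory for a product at a store.",
            "tags": ["inventory", "retail"],
            "examples": ["Is the trail jacket in size M available in Denver?"],
        }
    ],
}

# Serialized card, ready to be served at the spec's well-known path
card_json = json.dumps(agent_card, indent=2)
print(card_json)
```

The skill descriptions and examples play a role here similar to the one metadata plays in traditional SEO. They are what a client agent reads when deciding whether your service fits its sub-task.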

Bringing it all together, we see every major tech player reimagining “search” through the lens of AI assistants.

Google is arguably leading with a multi-pronged approach: its Gemini AI model powers both AI Mode and ambitious projects like Astra and Mariner. Google’s Project Mariner exemplifies the “assistant that can act” – it allows an AI agent to browse websites and complete tasks like buying tickets or ordering groceries simply by chatting with the user. In fact, Google’s search leadership views Mariner’s agents as part of a fundamental shift where users delegate tasks to AI instead of doing them manually on websites. Mariner is currently in labs and rolling out to early users (via an “AI Ultra” subscription) with plans to integrate into Google’s AI Mode soon. Meanwhile, Project Astra is bringing real-time multimodal smarts into search – features like asking questions about a live scene (via your camera) and getting answers nearly instantly. Astra is also behind the scenes boosting Google’s voice and video understanding (as seen in Gemini Live’s low-latency conversations). The message from Google’s I/O 2025 announcements is clear: search is becoming immersive and agentic. Google even previewed an “Agent Mode” in Chrome where browsing, search, and task execution blend together via AI. It’s not hard to imagine a near future where using Google means conversing with a Gemini-powered aide that can not only fetch info but complete actions.

OpenAI, in deep partnership with Microsoft, is tackling search from the conversational AI angle. ChatGPT’s Search mode combines natural language queries with live web results and cited sources, essentially turning the chat interface into a more intuitive search engine. Its Operator agent prototype shows how that same assistant can go beyond finding information to actually performing multi-step actions on the web (filling forms, booking reservations, or completing transactions), much like Google’s Mariner. In 2025, OpenAI also introduced Agents as a platform feature: persistent, customizable AI entities that can be tailored to a user or organization’s exact needs. An Agent can remember context over time, follow a brand voice, have access to specific datasets, and run specialized search or reasoning workflows automatically. For example, a marketing-focused Agent could continuously monitor competitor announcements, pull relevant search data daily, and proactively surface opportunities without the user explicitly prompting it each time. This pushes OpenAI’s search experience toward continuous, personalized discovery, where finding information is just one layer of a larger, context-aware relationship with the assistant.

Meta, under Mark Zuckerberg’s direction, is making a bold push to commandeer elite AI minds. In 2025, Zuckerberg formed Meta Superintelligence Labs, a high-stakes initiative focused on advancing artificial superintelligence. The lab has recruited top-tier talent from Apple, OpenAI, Anthropic, and Google, backed by massive financial offers and infrastructure investments (e.g., billion-dollar compensation packages and multi-billion-dollar data center spending). Notable hires include Shengjia Zhao, a co-creator of ChatGPT, brought on as chief scientist, and Matt Deitke, a 24-year-old AI prodigy reportedly recruited with a staggering $250 million offer. Zuckerberg reportedly maintains a “literal list” of AI stars he’s targeting, with some offers described as high as $300 million over a few years. The campaign has rippled across the industry; OpenAI, for example, has countered with elevated retention bonuses and equity incentives.

Others in the landscape shouldn’t be overlooked. Microsoft, through its partnership with OpenAI, turned Bing into an AI-first search engine early on. Bing’s chatbot can answer complex questions with cited sources and was a template that arguably spurred Google’s SGE. Microsoft is also integrating the same capabilities into Windows (Copilot in Windows 11), effectively bringing search to the operating system level. Apple has been quieter but is rumored to be working on its own LLMs and possibly an AI search service (and with devices like the Vision Pro headset, it will certainly have a play in visual and embodied search). And a plethora of startups (like Perplexity.ai, and Neeva, which was acquired by Snowflake) are exploring AI-driven search experiences that rely purely on AI Q&A or community-curated knowledge.

No single company owns the future of search (probably). When search becomes an intelligent service everywhere, it becomes a data competition and a stickiness competition. The common theme is that whether it’s Google’s “search as an agent,” OpenAI’s chat-based search, Amazon’s assistant commerce, or Meta’s social AI search, they all point to search being woven into the fabric of our interactions, predominantly via AI assistants.

For people, this sounds like a promise of incredible convenience. What happens when we get search that is conversational, proactive, contextual, and actionable? For content creators and marketers, it means adapting to a world where getting discovered may no longer mean ranking #1 on a results page. Instead, it means being the trusted source an AI pulls into a rich answer, or the service an AI agent chooses to fulfill a task. The search box is morphing into a dialogue with an AI that can do anything. As we close this manual, one thing is evident: the future of search is a personal AI that searches, decides, and acts, all in one. And optimizing for that future starts now.

If your brand isn’t being retrieved, synthesized, and cited in AI Overviews, AI Mode, ChatGPT, or Perplexity, you’re missing from the decisions that matter. Relevance Engineering structures content for clarity, optimizes for retrieval, and measures real impact. Content Resonance turns that visibility into lasting connection.

The appendix includes everything you need to operationalize the ideas in this manual: downloadable tools, reporting templates, and prompt recipes for GEO testing. You’ll also find a glossary that breaks down technical terms and concepts to keep your team aligned. Use this section as your implementation hub.

The AI Search Manual is your operating manual for being seen in the next iteration of Organic Search where answers are generated, not linked.

iPullRank is a pioneering content marketing and enterprise SEO agency leading the way in Relevance Engineering, Audience-Focused SEO, and Content Strategy. People-first in our approach, we’ve delivered $4B+ in organic search results for our clients.
